Image information and radar information fusion method and system for traffic scene

The technology fuses image information with radar information and is applied in radio wave measurement systems, character and pattern recognition, radio wave reflection/re-radiation, and related fields. It addresses three problems in existing approaches: no attention to the construction of the fusion structure or the optimization of overall performance, poor adaptability to changes in the application scene, and failure to take the advantages of each sensor into account. The stated effects are improved scene adaptability, improved robustness and reliability, and high real-time performance.

Embodiment Construction

[0025] The technical solutions in the embodiments of the present invention are described clearly and completely below. The described embodiments are only some of the embodiments of the present invention, not all of them. All other embodiments that persons of ordinary skill in the art obtain from these embodiments without creative effort fall within the protection scope of the present invention.

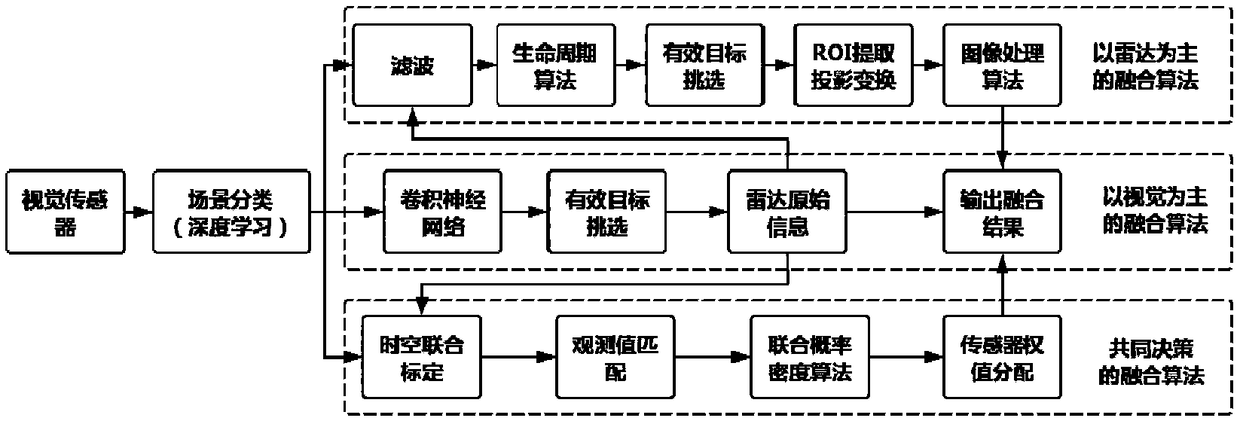

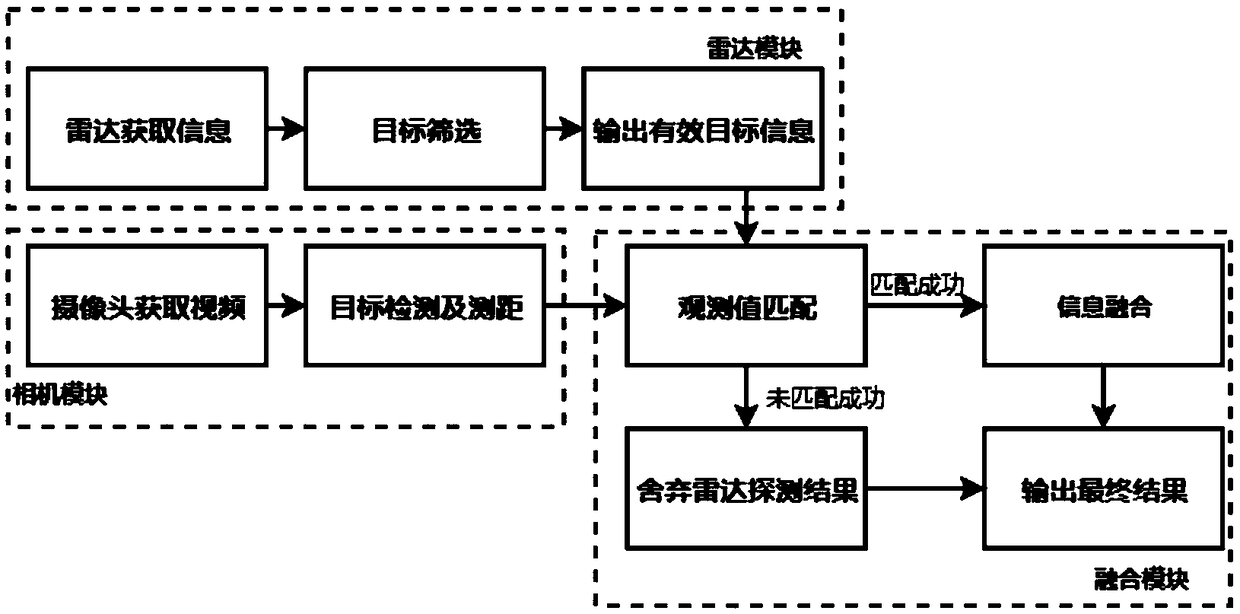

[0026] Referring to Figure 1, Figure 1 is a schematic diagram of the principle framework of an embodiment of the method and system for fusing image information and radar information of a traffic scene according to the present invention. First, a deep learning method is used to classify typical traffic scenes extracted from everyday driving, such as: straight road on a sunny day, straight road on a rainy day, ramp on a sunny day, curve on a clear night, curve on a rainy night, etc., and according to this classification information the corresponding fusion method of...
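The idea of choosing a fusion method from the scene class can be illustrated with a minimal sketch. The description above is truncated and does not state how the fusion methods differ, so everything beyond the scene names is an assumption made for illustration: the `FusionConfig` type, the `select_fusion` and `fuse_confidence` helpers, and the per-scene weights are all hypothetical. The scene label is assumed to arrive as a string from a classifier that is not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FusionConfig:
    """Hypothetical per-scene setting: relative trust in each sensor."""
    camera_weight: float
    radar_weight: float


# Scene names follow the examples listed in the description.
# The weights are illustrative placeholders, NOT values from the patent.
FUSION_BY_SCENE = {
    "straight_sunny_day": FusionConfig(camera_weight=0.6, radar_weight=0.4),
    "straight_rainy_day": FusionConfig(camera_weight=0.4, radar_weight=0.6),
    "ramp_sunny_day": FusionConfig(camera_weight=0.6, radar_weight=0.4),
    "curve_clear_night": FusionConfig(camera_weight=0.3, radar_weight=0.7),
    "curve_rainy_night": FusionConfig(camera_weight=0.2, radar_weight=0.8),
}


def select_fusion(scene: str) -> FusionConfig:
    """Look up the fusion setting for a classified scene label."""
    try:
        return FUSION_BY_SCENE[scene]
    except KeyError:
        raise ValueError(f"unknown scene class: {scene!r}") from None


def fuse_confidence(scene: str, camera_conf: float, radar_conf: float) -> float:
    """Combine two detection confidences with the scene's weights.

    A weighted sum is an assumed stand-in for whatever fusion rule the
    patent actually applies in each scene.
    """
    cfg = select_fusion(scene)
    return cfg.camera_weight * camera_conf + cfg.radar_weight * radar_conf


if __name__ == "__main__":
    # Same detections, different scenes: the radar counts for more at night in rain.
    for scene in ("straight_sunny_day", "curve_rainy_night"):
        print(scene, fuse_confidence(scene, camera_conf=0.9, radar_conf=0.5))
```

A lookup table keeps the scene-to-method mapping explicit and easy to extend with further scene classes; an unknown label raises an error rather than silently falling back to a default.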
