Joint modeling method of indoor and outdoor scenes based on line features

A joint indoor and outdoor scene modeling method, applied in the field of 3D reconstruction. It addresses problems such as the differing data quality of indoor and outdoor point clouds, their low overlap rate, and the resulting difficulty of processing them together. It improves the coincidence rate and the success rate, and offers a simple representation.


Embodiment

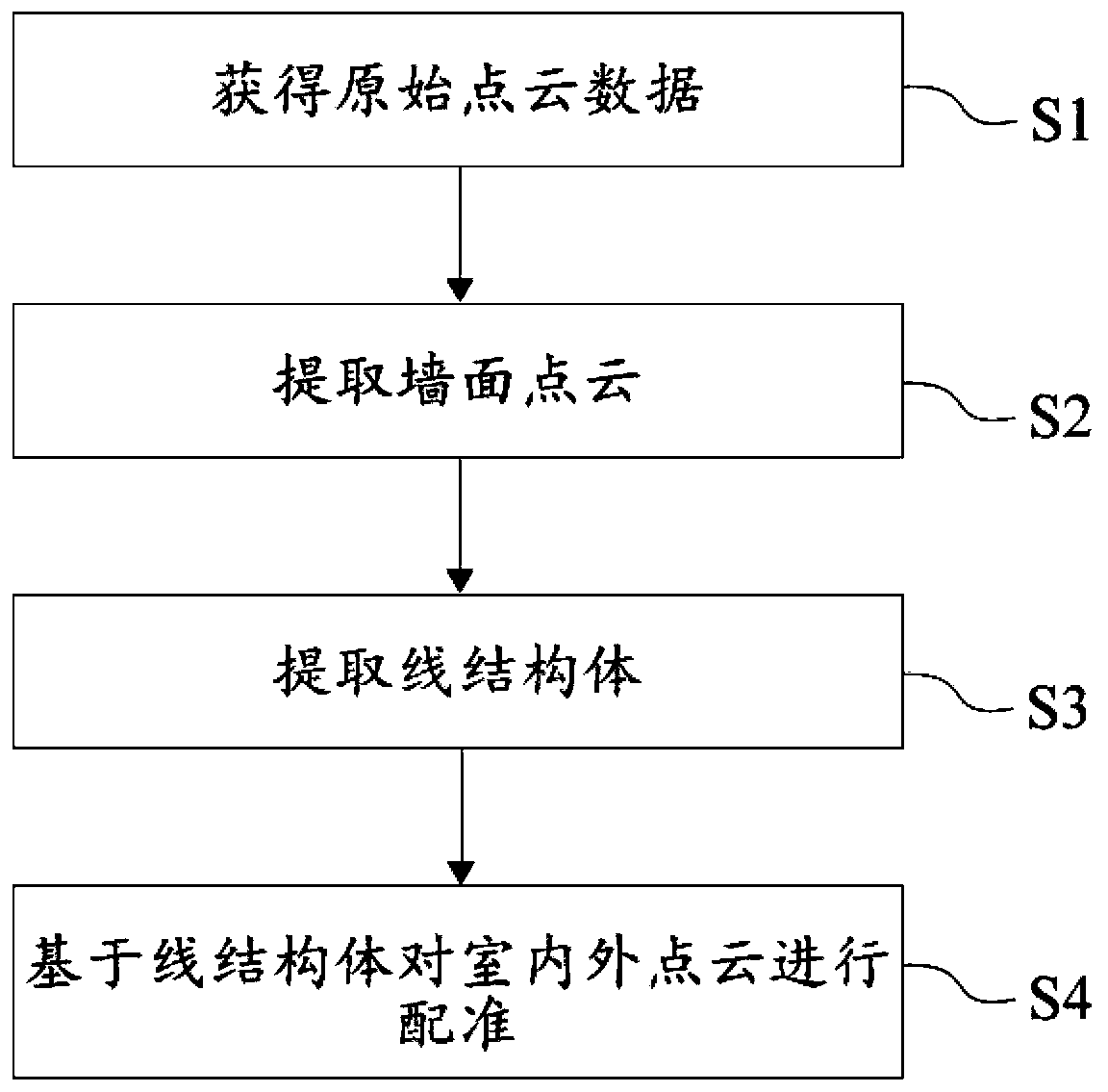

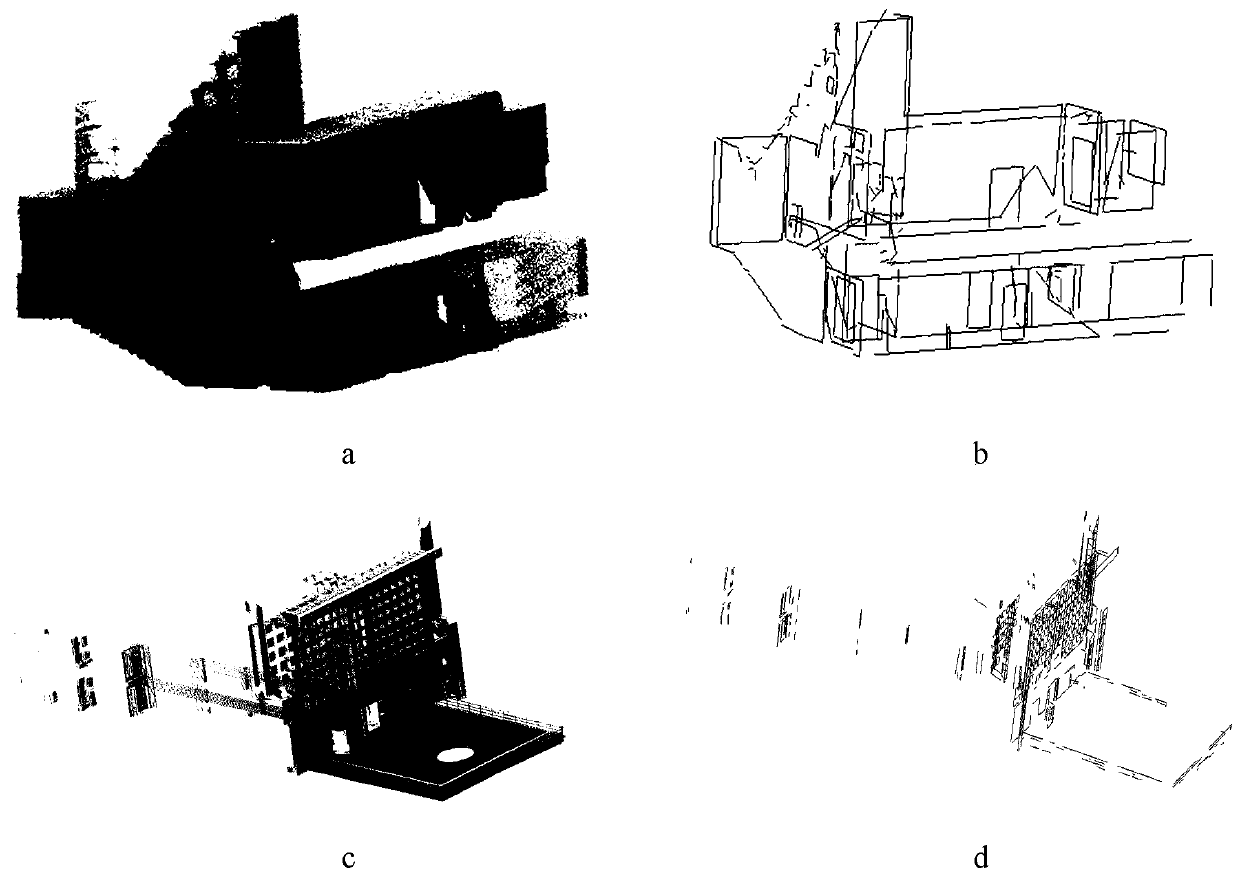

[0047] Referring to Figure 1, the invention discloses a joint indoor and outdoor scene modeling method based on line features, comprising the following steps:

[0048] S1. Obtain the original point cloud data, which includes an indoor point cloud and an outdoor point cloud.

[0049] S2. Extract wall surfaces from the indoor point cloud and the outdoor point cloud separately to obtain wall point clouds. This step comprises the following sub-steps:

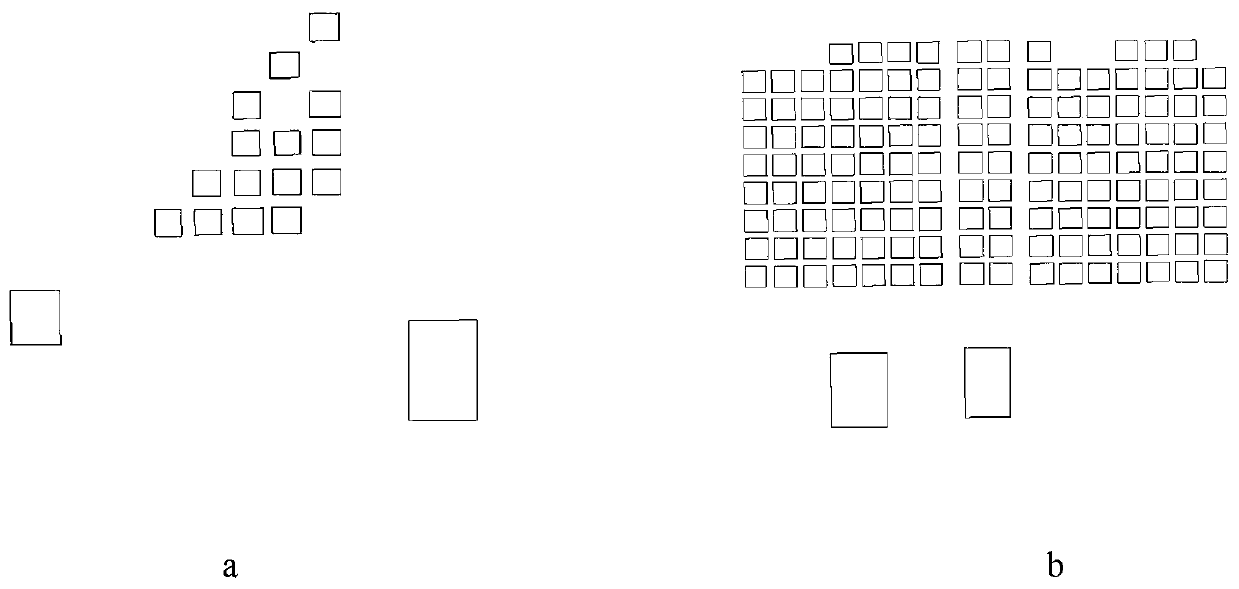

[0050] S21. Partition the indoor point cloud and the outdoor point cloud into small blocks using an octree, and classify the resulting point cloud blocks into four categories: walls, floors, ceilings, and others.
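The octree partitioning in S21 can be illustrated with a minimal pure-Python sketch. The stopping rule (a cell becomes a leaf block once it holds at most `max_points` points or its edge length drops to `min_size`) and both parameter values are assumptions for illustration only; the text does not say how the block size is chosen. The function names `octree_blocks` and `_split` are hypothetical, and the subsequent wall/floor/ceiling/other classification is not shown.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def octree_blocks(points: List[Point], max_points: int = 50,
                  min_size: float = 0.5) -> List[List[Point]]:
    """Split a point cloud into octree leaf blocks (illustrative sketch).

    max_points and min_size are hypothetical stopping parameters, not values
    taken from the patent text.
    """
    if not points:
        return []
    xs, ys, zs = zip(*points)
    origin = (min(xs), min(ys), min(zs))
    # Cubic root cell covering the bounding box of all points
    size = max(max(xs) - origin[0], max(ys) - origin[1], max(zs) - origin[2])
    if size <= 0:
        size = min_size  # all points coincide: treat as one small cell
    blocks: List[List[Point]] = []
    _split(points, origin, size, max_points, min_size, blocks)
    return blocks


def _split(points, origin, size, max_points, min_size, out):
    # Stop when the cell is sparse enough or already small enough
    if len(points) <= max_points or size <= min_size:
        out.append(points)
        return
    half = size / 2.0
    children: List[List[Point]] = [[] for _ in range(8)]
    for p in points:
        # Octant index: one bit each for x, y, z relative to the cell centre
        ix = 1 if p[0] >= origin[0] + half else 0
        iy = 1 if p[1] >= origin[1] + half else 0
        iz = 1 if p[2] >= origin[2] + half else 0
        children[ix | (iy << 1) | (iz << 2)].append(p)
    for idx, child in enumerate(children):
        if child:  # empty octants produce no block
            child_origin = (origin[0] + half * (idx & 1),
                            origin[1] + half * ((idx >> 1) & 1),
                            origin[2] + half * ((idx >> 2) & 1))
            _split(child, child_origin, half, max_points, min_size, out)
```

Every input point ends up in exactly one non-empty leaf, so the returned blocks together cover the whole cloud; each block would then receive one of the four labels. Using a cubic root cell keeps all octants cubic, which makes the `min_size` limit behave the same along every axis.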

[0051] S22. FPFH features and height features are used to describe the point cloud blocks. Let $x_i$ denote the feature vector of point cloud patch $i$, and $x_{ij}$ the feature vector of point cloud block ...
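One way to assemble a per-block feature vector $x_i$ from FPFH and height features is sketched below. Per-point FPFH histograms are assumed to be precomputed by an external library (PCL and Open3D both provide FPFH estimation, with 33 bins). Averaging them over the block, and the two height features used here (relative centroid height and normalized vertical extent), are assumptions: the text specifies neither the pooling nor which height features are used. The name `block_feature` is hypothetical, and the pairwise feature $x_{ij}$, which is truncated in the source, is not reconstructed.

```python
from statistics import mean
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def block_feature(points: Sequence[Point],
                  fpfh: Sequence[Sequence[float]],
                  z_min: float, z_max: float) -> List[float]:
    """Hypothetical descriptor x_i for one point cloud block.

    fpfh holds one precomputed FPFH histogram per point (computed elsewhere);
    z_min / z_max are the height range of the whole scan. The choice of
    height features below is an illustrative assumption.
    """
    if not points or len(points) != len(fpfh):
        raise ValueError("expected one FPFH histogram per point")
    n_bins = len(fpfh[0])
    # Pool local geometry: average the per-point histograms bin by bin
    mean_hist = [mean(h[b] for h in fpfh) for b in range(n_bins)]
    zs = [p[2] for p in points]
    span = (z_max - z_min) or 1.0  # guard against a flat scene
    rel_height = (mean(zs) - z_min) / span  # 0 at the lowest point, 1 at the top
    extent = (max(zs) - min(zs)) / span      # normalized vertical extent
    return [float(v) for v in mean_hist] + [rel_height, extent]
```

Normalizing by the scan's height range is meant to make the height features comparable between the indoor and outdoor clouds, whose absolute elevations may differ; a floor block would then tend toward a low relative height and a small extent, a wall block toward a large extent.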

