A method for identifying wetted areas of multi-color fabrics based on hyperspectral image processing

The method applies hyperspectral imaging and area recognition technology to the field of textile and clothing performance testing. It addresses problems such as low contrast between wetted and unwetted areas and incorrect segmentation of the test area, with the effect of overcoming noise, improving image contrast, and improving adaptability and automation.


Embodiment Construction

[0040] To make the object, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and embodiments. The specific embodiments described here only explain the present invention and are not intended to limit its scope. In the following description, descriptions of well-known structures and techniques are omitted to avoid unnecessarily obscuring the concept of the present invention.

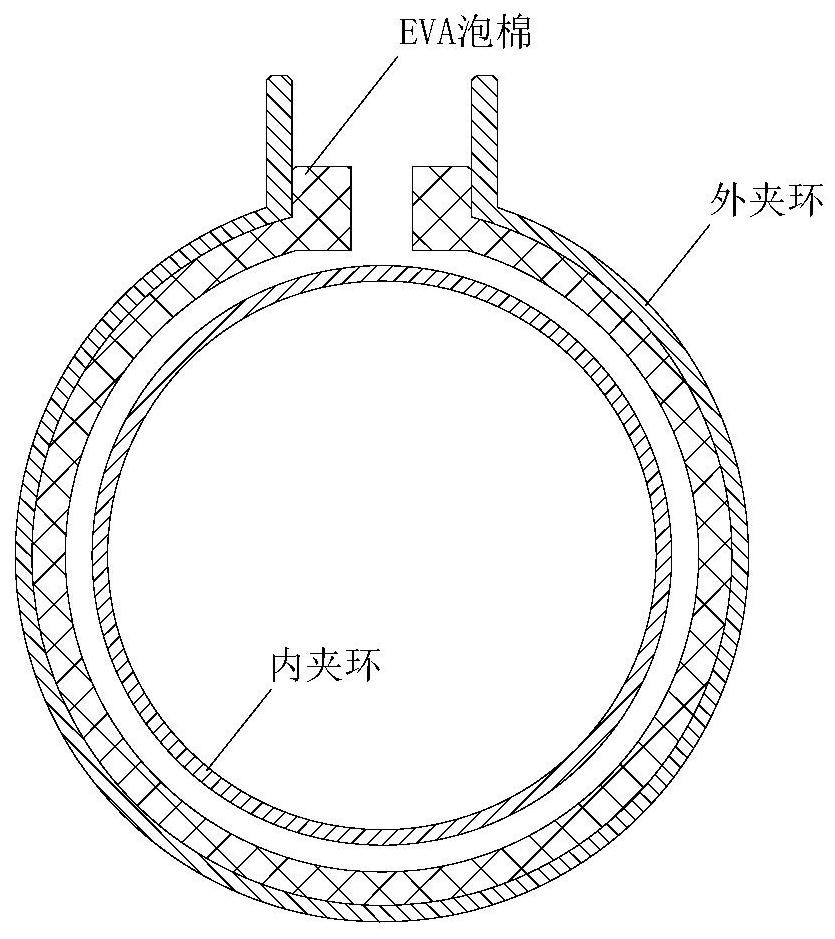

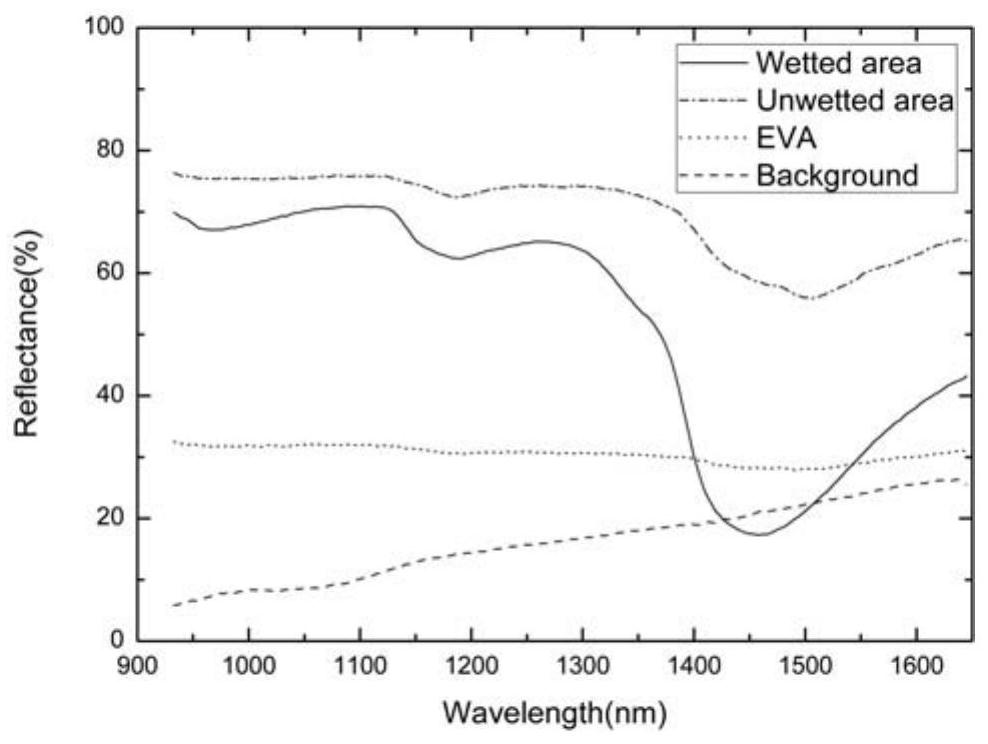

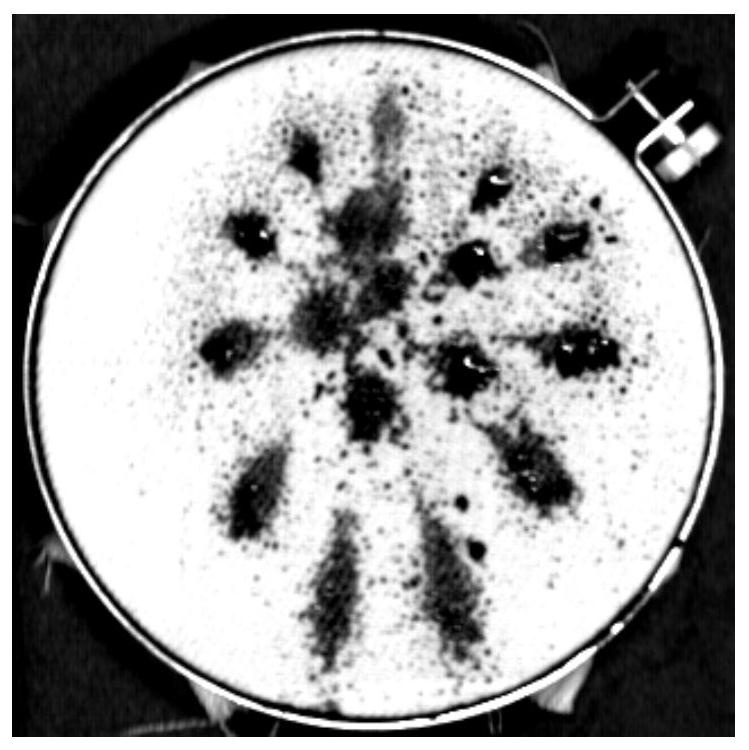

[0041] The method of the invention is broadly applicable to identifying the wetted area and detecting the water-stain level of fabrics of different materials. Because there are many kinds of fabric, only a Scottish worsted tweed fabric made of 100% wool is used here as an implementation example; the image processing is completed in the Matlab R2014b soft...

