Coding and decoding method based on block cyclic sparse matrix neural network

A technique combining neural networks and sparse matrices, applied to the compression of sparse deep neural networks. It addresses problems such as complex encoding and decoding methods, irregular computation, and unbalanced workloads, and has the effects of reducing storage requirements, improving throughput, and easing hardware implementation.


Embodiment Construction

[0037] The solution of the present invention will be described in detail below in conjunction with the accompanying drawings.

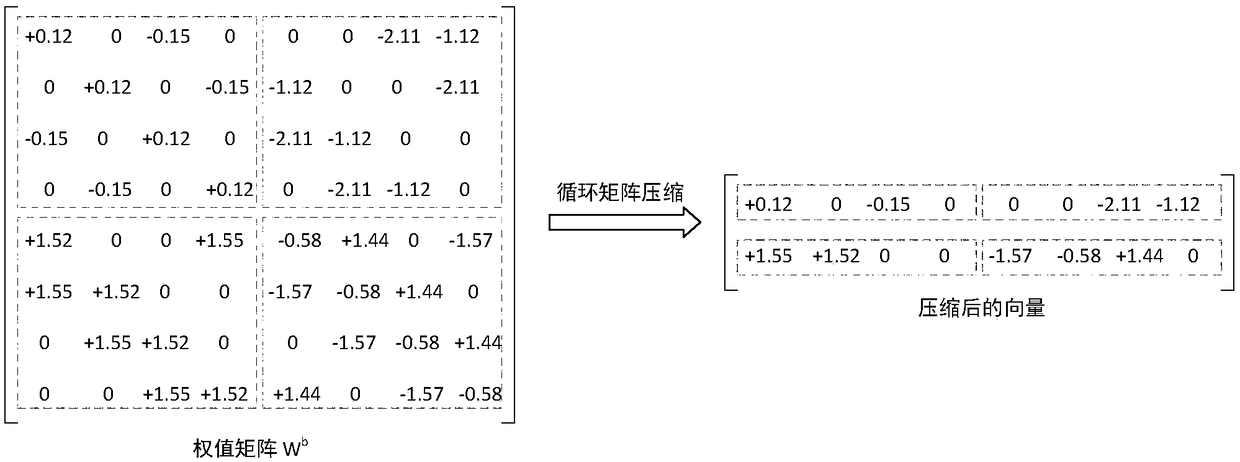

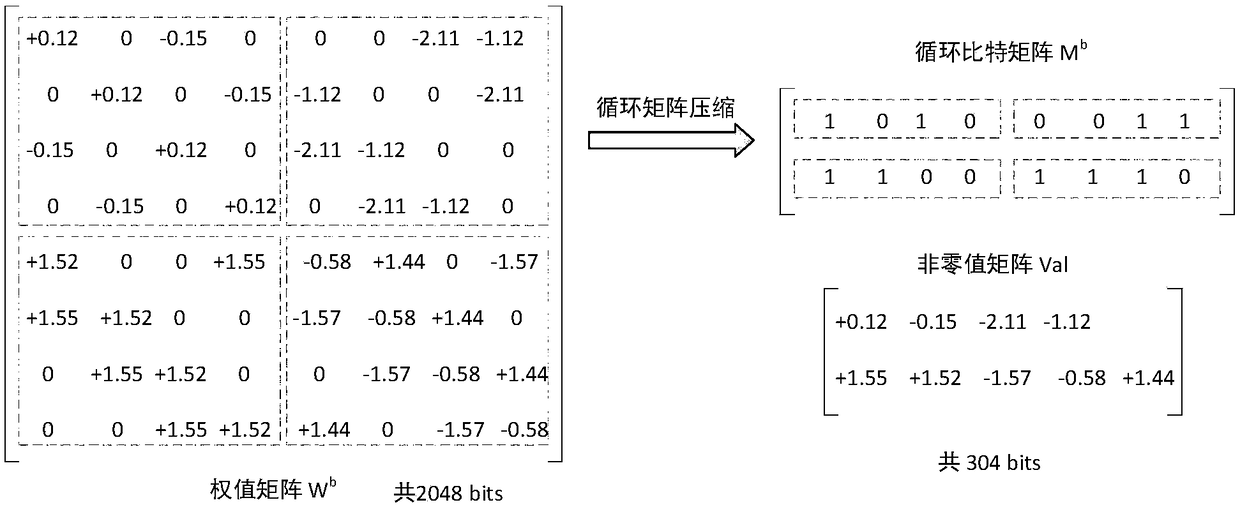

[0038] The encoding and decoding method of this solution is designed mainly for fully connected deep neural networks, and compresses the network by combining the properties of block-circulant matrices and sparse matrices.
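The combination described in [0038] can be illustrated with a minimal sketch. It assumes a storage layout chosen only for illustration, since the patent's actual encoding format is not part of this excerpt: the weight matrix is split into k × k blocks, all-zero blocks are not stored at all (the sparse part), and every stored block is circulant, so it is kept as its k-element first column instead of k² entries. The names `BlockMap`, `circulant_entry`, `block_circulant_matvec`, and `to_dense` are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical compressed format (not the patent's own encoding): W (m x n) is
# split into k x k blocks; all-zero blocks are simply not stored (sparsity), and
# each stored block is circulant, so it is kept as its k-element first column.
BlockMap = Dict[Tuple[int, int], List[float]]


def circulant_entry(c: List[float], r: int, s: int) -> float:
    """Entry (r, s) of the k x k circulant block whose first column is c."""
    return c[(r - s) % len(c)]


def block_circulant_matvec(blocks: BlockMap, a: List[float], m: int, k: int) -> List[float]:
    """Compute W a using only the stored (nonzero) circulant blocks."""
    y = [0.0] * m
    for (bi, bj), c in blocks.items():
        for r in range(k):
            acc = 0.0
            for s in range(k):
                acc += circulant_entry(c, r, s) * a[bj * k + s]
            y[bi * k + r] += acc
    return y


def to_dense(blocks: BlockMap, m: int, n: int, k: int) -> List[List[float]]:
    """Expand the compressed form back into a dense m x n matrix, for checking."""
    W = [[0.0] * n for _ in range(m)]
    for (bi, bj), c in blocks.items():
        for r in range(k):
            for s in range(k):
                W[bi * k + r][bj * k + s] = circulant_entry(c, r, s)
    return W


if __name__ == "__main__":
    # 4 x 6 matrix, k = 2: a 2 x 3 grid of blocks, only 3 of which are nonzero.
    k, m, n = 2, 4, 6
    blocks = {(0, 0): [1.0, 2.0], (0, 2): [3.0, 0.5], (1, 1): [-1.0, 4.0]}
    a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    y = block_circulant_matvec(blocks, a, m, k)
    y_ref = [sum(w * x for w, x in zip(row, a)) for row in to_dense(blocks, m, n, k)]
    stored = sum(len(c) for c in blocks.values())
    print(y, y == y_ref)        # [23.0, 24.5, 13.0, 8.0] True
    print(stored, "of", m * n)  # 6 of 24
```

In this toy case 6 stored values stand in for the 24 entries of the dense matrix, and the product only touches the three nonzero blocks. The per-block product is written out directly for clarity; block-circulant products are often computed with FFTs instead, which this sketch does not attempt.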

[0039] A fully connected layer computes:

[0040]

$$y = f(Wa + b) \tag{1}$$

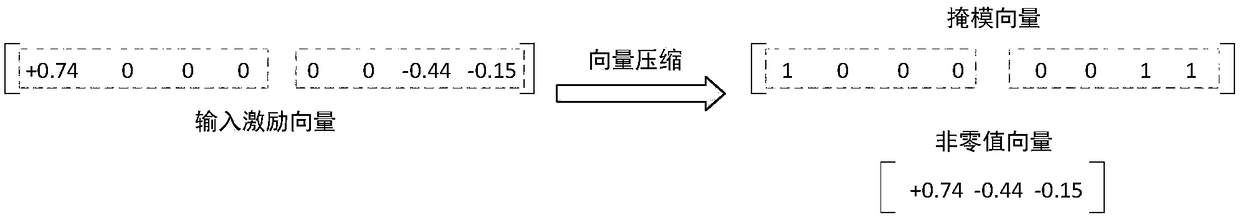

[0041] Here, a is the input excitation (activation) vector, y is the output vector, b is the bias vector, f is the nonlinear activation function, and W is the weight matrix.

[0042] Each element of the output vector y in formula (1) can be computed as:

[0043]

$$y_i = f\left(\sum_{j=1}^{n} W_{ij}\, a_j + b_i\right) \tag{2}$$

[0044] In formula (2), i is the row index of the element, j is the column index, and n is the number of input excitations (the total number of columns of the weight matrix).
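Formulas (1) and (2) can be sketched as a plain dense forward pass. This is a minimal illustration, not the patent's implementation: the choice of ReLU for f is an assumption (the text does not name the nonlinearity), and `relu` and `fully_connected` are hypothetical names. The loops follow formula (2) directly, with i running over rows of W and j over its n columns.

```python
from typing import Callable, List


def relu(x: float) -> float:
    """Assumed nonlinearity f; the text does not say which f is used."""
    return x if x > 0.0 else 0.0


def fully_connected(W: List[List[float]], a: List[float], b: List[float],
                    f: Callable[[float], float] = relu) -> List[float]:
    """Compute y = f(W a + b) element by element, as in formula (2)."""
    n = len(a)  # number of input excitations = number of columns of W
    y = []
    for i, row in enumerate(W):  # i: row index of W and index into y
        if len(row) != n:
            raise ValueError("each row of W must have n entries")
        acc = b[i]
        for j in range(n):  # j: column index of W and index into a
            acc += row[j] * a[j]  # one multiply-accumulate per weight
        y.append(f(acc))
    return y


if __name__ == "__main__":
    W = [[1.0, -2.0, 0.5],
         [0.0, 3.0, -1.0]]
    a = [2.0, 1.0, 4.0]
    b = [0.5, -10.0]
    print(fully_connected(W, a, b))  # [2.5, 0.0]
```

A dense m × n layer computed this way stores m·n weights and performs m·n multiply-accumulates (6 in the example), which is the storage and computation cost that a compressed encoding of W aims to reduce.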

[0045] Therefore, t...
