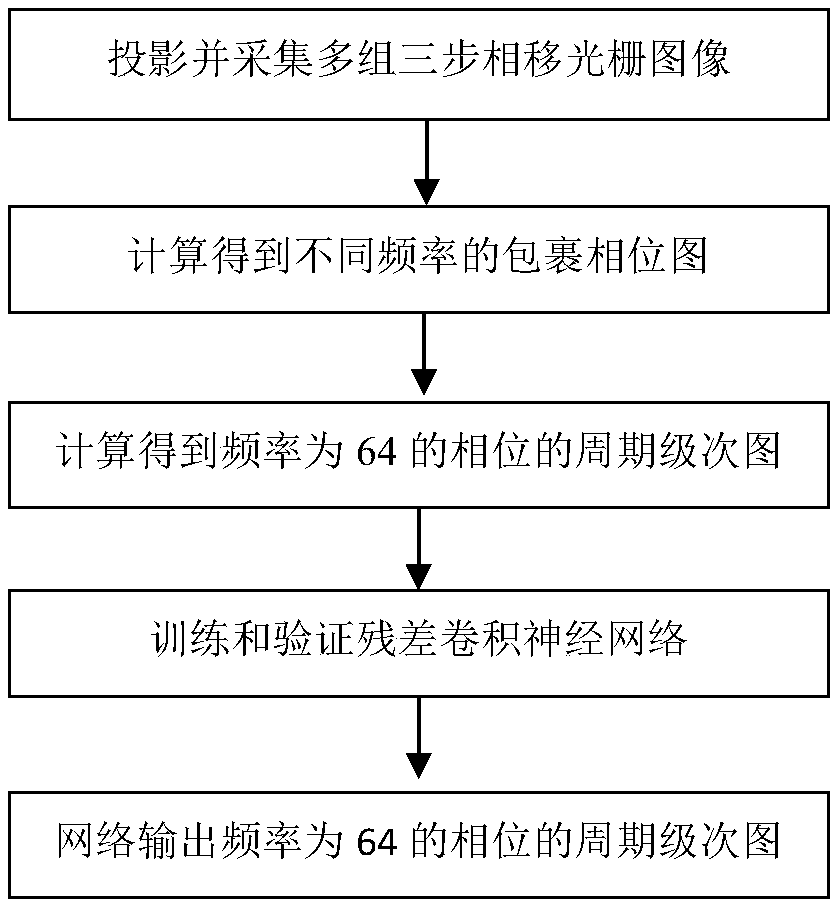

Deep-learning-based temporal phase unwrapping method for fringe projection

A temporal phase unwrapping technique for fringe projection, applied in the field of optical measurement, that achieves fewer error points and higher accuracy.

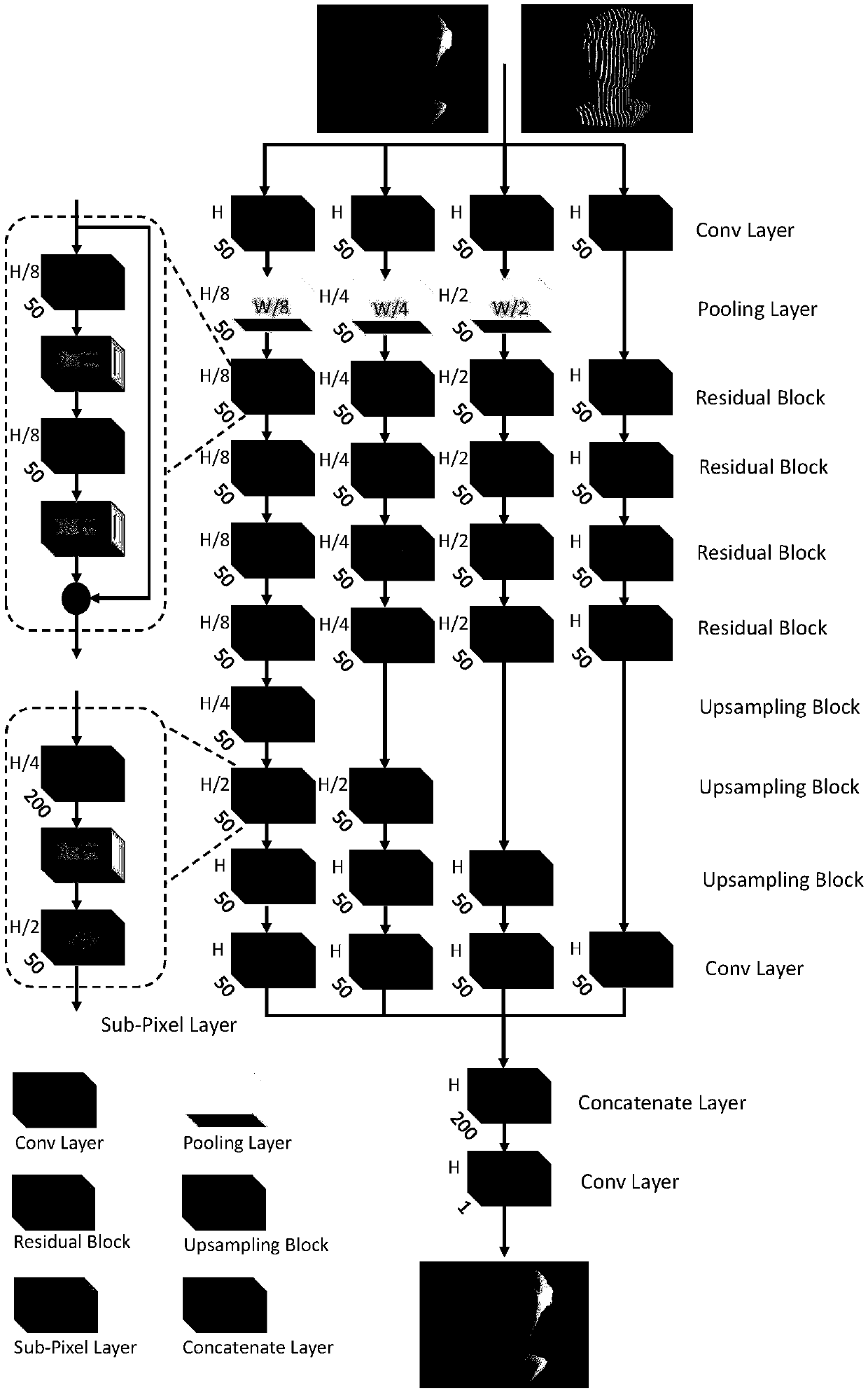

Examples

Embodiment

[0039] To verify the effectiveness of the method described in the present invention, a camera (model acA640-750um, Basler), a DLP projector (model LightCrafter 4500PRO, TI), and a computer were used to construct a three-dimensional measurement setup for the deep-learning-based fringe projection temporal phase unwrapping method, as shown in Figure 2. The setup captures images at 25 frames per second when performing three-dimensional measurement of objects. As described in step 1, four sets of three-step phase-shifted fringe images with different frequencies are projected and captured. The frequencies of the four sets of fringe patterns are 1, 8, 32, and 64, respectively. Step 2 yields four wrapped phase maps, one per frequency. Step 3 yields the fringe order map and the absolute phase map for the phase with a frequency of 64. Step 4 is used to build, as shown in Figure 3, the residual convolutional neural...
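The conventional processing that steps 2 and 3 appear to describe can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the patent's implementation: the text above gives no formulas, so the phase shifts of -2π/3, 0, +2π/3, the hierarchical unwrapping order 1 → 8 → 32 → 64, the synthetic one-dimensional fringe data, and the function names `wrapped_phase_three_step` and `unwrap_with_reference` are all hypothetical choices made for the example. The residual convolutional neural network of step 4 is not covered.

```python
import numpy as np

TWO_PI = 2.0 * np.pi

def wrapped_phase_three_step(i1, i2, i3):
    """Wrapped phase in [0, 2*pi) from three fringe images.

    Assumes phase shifts of -2*pi/3, 0, +2*pi/3 (an assumed convention).
    """
    phi = np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)
    return np.mod(phi, TWO_PI)

def unwrap_with_reference(phi_high, abs_low, ratio):
    """Unwrap phi_high using a lower-frequency absolute phase as reference.

    ratio is (high frequency) / (low frequency).
    Returns the absolute phase and the integer fringe order map.
    """
    order = np.round((ratio * abs_low - phi_high) / TWO_PI)
    return phi_high + TWO_PI * order, order.astype(int)

# Synthetic test signal: 640 pixels across one projector width (hypothetical).
width = 640
x = (np.arange(width) + 0.5) / width      # normalized position in (0, 1)
freqs = [1, 8, 32, 64]                    # frequencies named in the text
shifts = [-TWO_PI / 3.0, 0.0, TWO_PI / 3.0]

# "Step 1/2" stand-in: simulate three-step images, compute wrapped phases.
wrapped = {}
for f in freqs:
    images = [0.5 + 0.5 * np.cos(TWO_PI * f * x + d) for d in shifts]
    wrapped[f] = wrapped_phase_three_step(*images)

# "Step 3" stand-in: the frequency-1 phase spans a single period, so it is
# already absolute; each higher frequency is unwrapped from the previous one.
abs_phase = wrapped[freqs[0]]
order = np.zeros(width, dtype=int)
for f_low, f_high in zip(freqs[:-1], freqs[1:]):
    abs_phase, order = unwrap_with_reference(wrapped[f_high], abs_phase,
                                             f_high / f_low)

true_phase = TWO_PI * freqs[-1] * x
print("max abs error:", np.max(np.abs(abs_phase - true_phase)))
print("fringe orders:", order.min(), "to", order.max())
```

On noise-free data the recovered frequency-64 phase matches the simulated one. With real images, noise in the low-frequency phase is amplified by the frequency ratio before rounding, which is the usual source of fringe order errors in this kind of scheme.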

