Feature extraction and fusion recognition of dual-source images based on a convolutional neural network

A feature extraction and fusion recognition method for dual-source images, based on a convolutional neural network. It addresses three problems: sensitivity to target transformations, susceptibility to environmental factors, and low classification recognition rates. The method is reported to achieve good recognition results.


Embodiment Construction

[0035] The present invention will be further described below in conjunction with the accompanying drawings and examples.

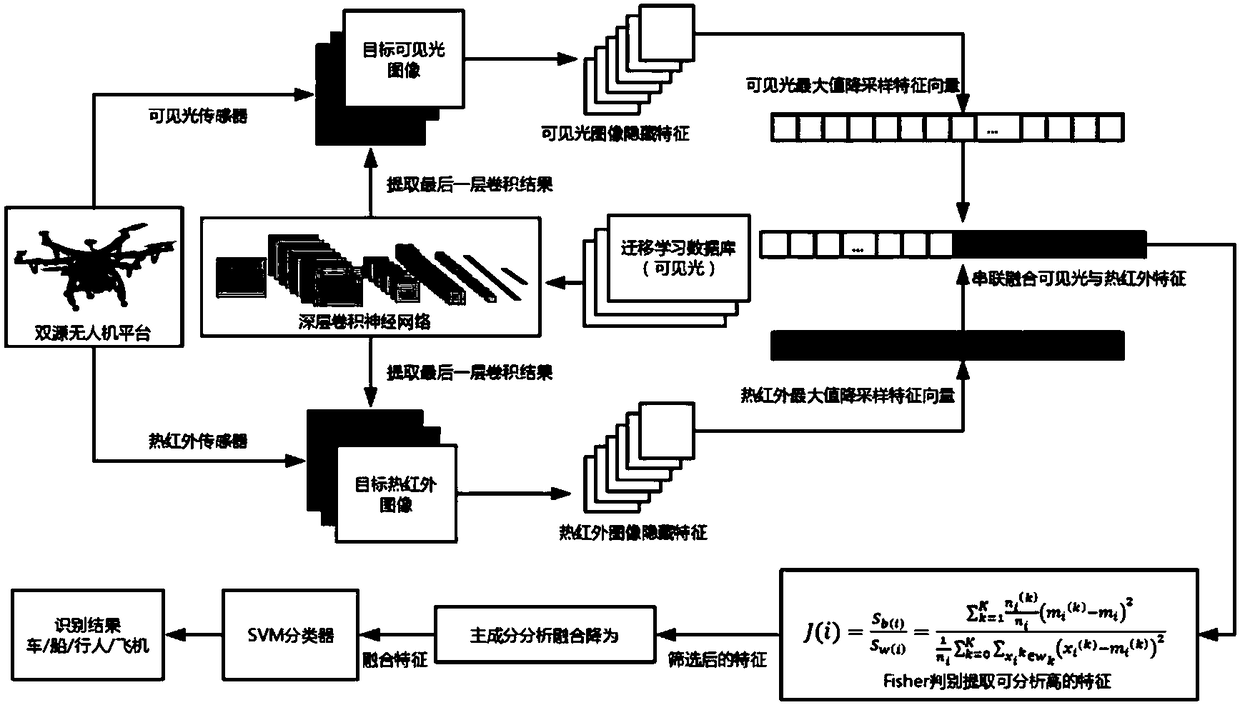

[0036] As shown in Figure 1, the dual-source image feature extraction and fusion recognition method based on a convolutional neural network comprises the following steps:

[0037] Step 1: Establish image databases of multiple target types from two imaging sensor sources, visible light and thermal infrared. The two libraries contain the same L target classes in one-to-one correspondence. Each class has n samples, so the total number of samples is N = nL. The self-built database contains 15 classes of objects with 375 samples per class, giving 5625 samples in total.
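The paired structure of the two libraries can be illustrated with a small index builder. This is a minimal sketch, not the patent's implementation: the `PairedSample` type, the `build_paired_index` function and the directory/file-naming scheme are all hypothetical, chosen only to show that the i-th visible image and the i-th infrared image of class c form one pair and that N = nL.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PairedSample:
    """One target sample imaged by both sensors (hypothetical record type)."""
    class_id: int
    index: int
    visible_path: str
    infrared_path: str


def build_paired_index(num_classes: int, samples_per_class: int) -> list:
    """Build a one-to-one visible/infrared index over L classes with n samples each.

    The path layout below is a hypothetical naming convention used only
    for illustration; the source does not specify how files are stored.
    """
    samples = []
    for c in range(num_classes):
        for i in range(samples_per_class):
            samples.append(PairedSample(
                class_id=c,
                index=i,
                visible_path=f"visible/class_{c:02d}/{i:04d}.png",
                infrared_path=f"infrared/class_{c:02d}/{i:04d}.png",
            ))
    return samples


L, n = 15, 375                      # values from the self-built database
index = build_paired_index(L, n)
N = len(index)
print(N)                            # 5625, i.e. n * L
```

Keeping both modalities in a single record makes it hard to accidentally shuffle or split the visible and infrared libraries independently, which would break the class correspondence.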

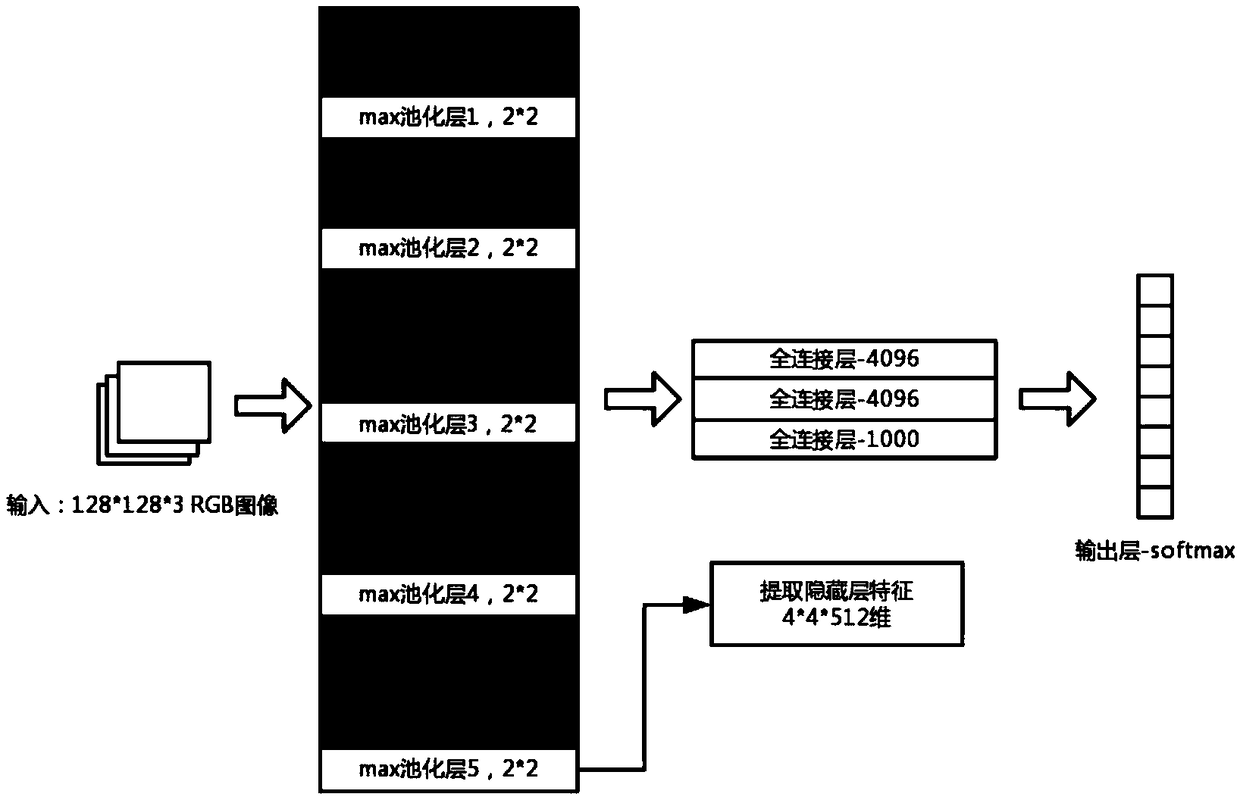

[0038] Step 2: Build a deep convolutional neural network model. The model consists of an image input layer (InputLayer), convolution layers (Convolution Layer) totaling 13, pooling layers (Pooling Layer) totaling ...
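A network with 13 convolution layers can be described as a plain layer specification and checked for its feature-map size. The sketch below is hypothetical: the source text is truncated before giving the pooling layout, so it assumes a VGG-16-style grouping (blocks of 2, 2, 3, 3, 3 convolutions with 64 to 512 channels, each block followed by 2x2 max pooling), a 3-channel input and a 224x224 input size. None of these values come from the patent.

```python
# Hypothetical VGG-16-style grouping of the 13 convolution layers:
# (number of 3x3 convolutions in the block, output channels).
BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def build_layer_spec(blocks=BLOCKS, in_channels=3):
    """Return a list of (layer_kind, channels) tuples, starting with the input layer."""
    layers = [("input", in_channels)]
    for num_convs, out_channels in blocks:
        for _ in range(num_convs):
            layers.append(("conv3x3", out_channels))
        # Assumed: one 2x2 max-pooling layer closes each block.
        layers.append(("maxpool2x2", out_channels))
    return layers


def output_shape(layers, height, width):
    """Track (channels, height, width) through the spec.

    3x3 convolutions are assumed to use stride 1 and padding 1, so they keep
    H and W; 2x2 max pooling with stride 2 halves H and W (floor division).
    """
    channels = layers[0][1]
    for kind, ch in layers[1:]:
        if kind == "conv3x3":
            channels = ch
        elif kind == "maxpool2x2":
            height, width = height // 2, width // 2
    return channels, height, width


spec = build_layer_spec()
num_conv = sum(1 for kind, _ in spec if kind == "conv3x3")
print(num_conv)                       # 13
print(output_shape(spec, 224, 224))   # (512, 7, 7)
```

Tracking shapes on a lightweight spec like this is a quick way to confirm that a chosen pooling layout leaves a usable feature map before committing to a framework implementation.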
