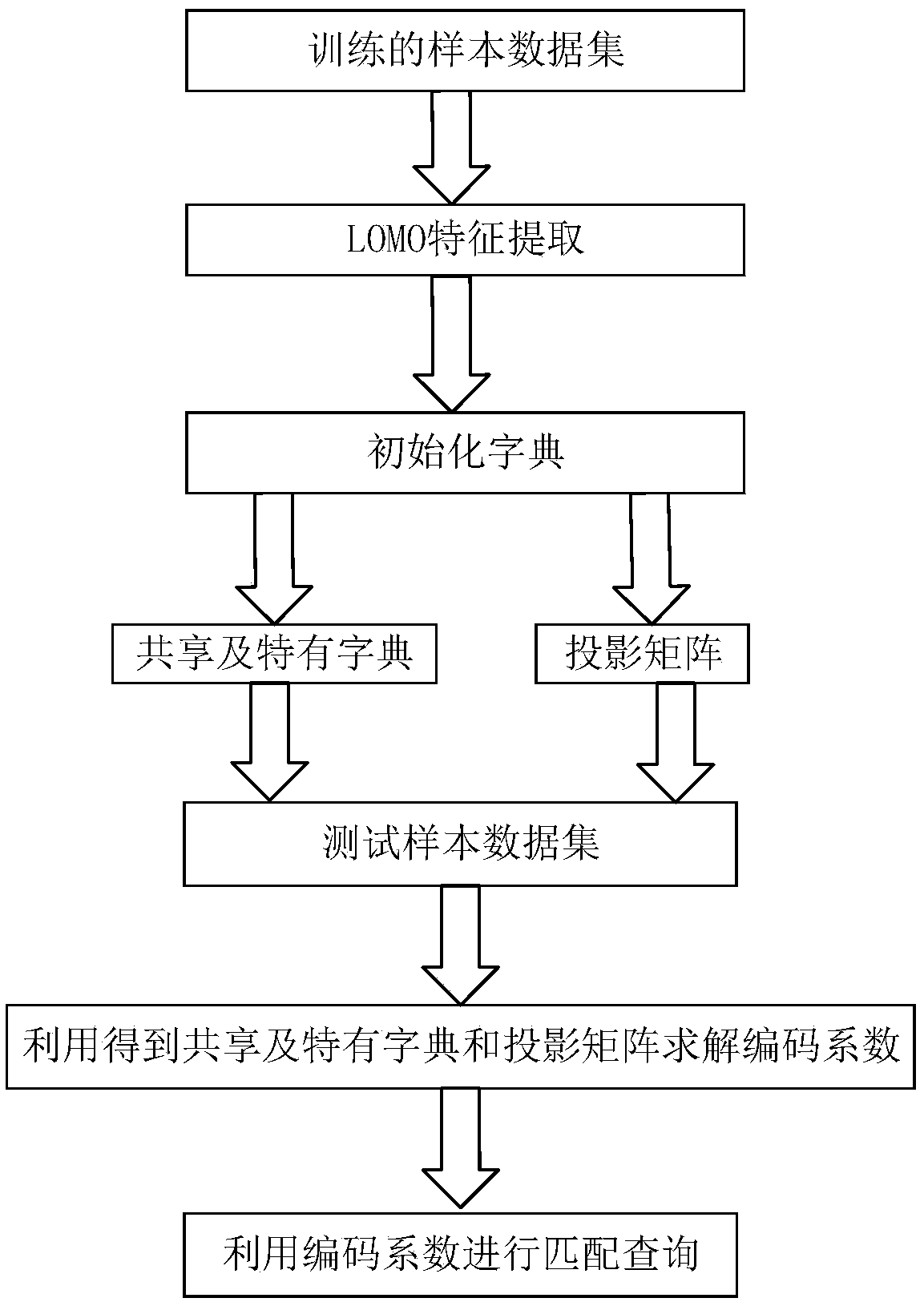

A pedestrian re-identification method based on joint learning of shared and unique dictionaries

A pedestrian re-identification and dictionary learning technology, applied in the fields of character and pattern recognition, instruments, computer components, etc.

Embodiment 1

[0077] Example 1: The components common to images of the same pedestrian under different viewing angles do not reduce the recognition rate during similarity measurement. The fundamental cause of a reduced recognition rate is the similarity between different pedestrians seen from different perspectives, and this similarity is usually carried by the components shared among images of different pedestrians. According to low-rank sparse representation theory, the components shared among different pedestrians tend to be strongly correlated and therefore exhibit a strong low-rank property. Based on this idea, the present invention proposes a joint learning framework for a pedestrian-specific dictionary and a shared dictionary, and uses it to separate pedestrian-specific components from shared components, so as to solve the problem caused by the similar components in the appearance features of pedestrian images from different perspectives. The problem of ambiguity in appearance charac...
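The link between strong correlation and low rank can be checked numerically. The following is a toy sketch only, not the dictionary learning procedure of the invention: the matrix sizes, the synthetic data, and the helper names `numerical_rank` and `singular_value_threshold` are all assumptions made for illustration. It builds a "shared" matrix whose columns are scaled copies of one pattern, compares its rank with that of a matrix of independent columns, and shows that singular value thresholding (a standard tool for encouraging low rank) recovers the rank-1 structure from a noisy copy.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, n_pedestrians = 20, 8  # toy sizes, chosen only for illustration

# Shared part: every column is a scaled copy of one common pattern,
# so the columns are perfectly correlated and the matrix has rank 1.
common_pattern = rng.normal(size=(n_features, 1))
scales = rng.uniform(0.5, 1.5, size=(1, n_pedestrians))
shared = common_pattern @ scales

# Pedestrian-specific part: independent random columns, hence full column rank.
specific = rng.normal(size=(n_features, n_pedestrians))


def numerical_rank(matrix, tol=1e-8):
    """Count the singular values that exceed tol."""
    return int(np.sum(np.linalg.svd(matrix, compute_uv=False) > tol))


def singular_value_threshold(matrix, tau):
    """Shrink every singular value by tau (proximal operator of the nuclear norm)."""
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    return (u * np.maximum(s - tau, 0.0)) @ vt


# Small noise makes the shared part full rank; thresholding removes it again.
noisy_shared = shared + 0.01 * rng.normal(size=shared.shape)
recovered = singular_value_threshold(noisy_shared, tau=0.5)

print("rank of shared part:  ", numerical_rank(shared))
print("rank of specific part:", numerical_rank(specific))
print("rank of noisy shared: ", numerical_rank(noisy_shared))
print("rank after threshold: ", numerical_rank(recovered))
```

In this toy setting the shared part is far lower in rank than the specific part, which is the property a low-rank constraint on a shared dictionary would exploit.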

Example 1

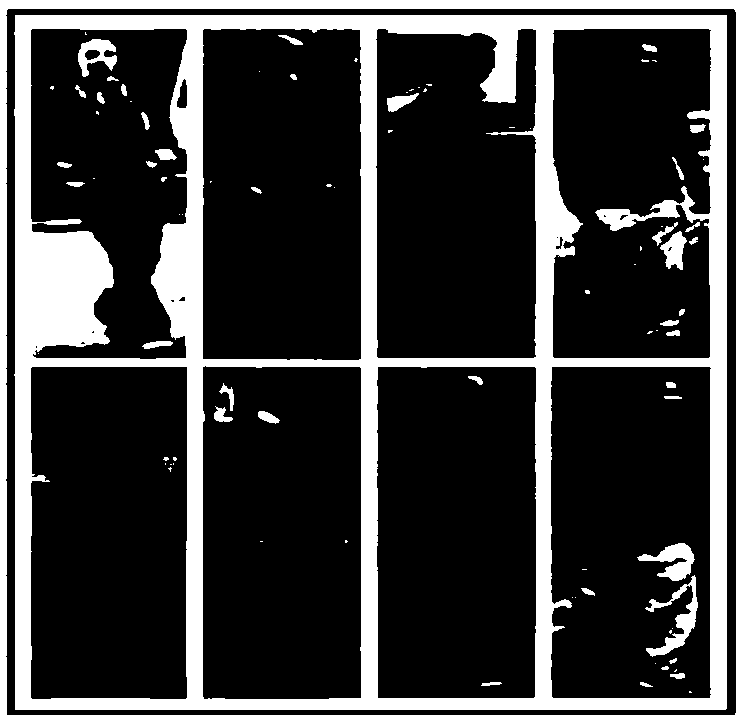

[0142] Example 1: VIPeR dataset

[0143] The images in this dataset come from 632 pedestrians captured by two non-overlapping camera views. Each pedestrian has only one image per view, for a total of 1264 images. During the experiment, the size of each pedestrian image in the dataset is set to 128 × 48. Figure 2 shows sample pairs of pedestrian images from this dataset. Images in the same row come from the same view, and images in the same column show the same pedestrian from different views. The appearance of the same pedestrian differs considerably between views because of changes in posture and differences in background. This dataset can therefore be used to measure how well the algorithm mitigates the effects of pedestrian pose changes and complex backgrounds.

[0144] In order to prove the effectiveness of the algorit...

Example 2

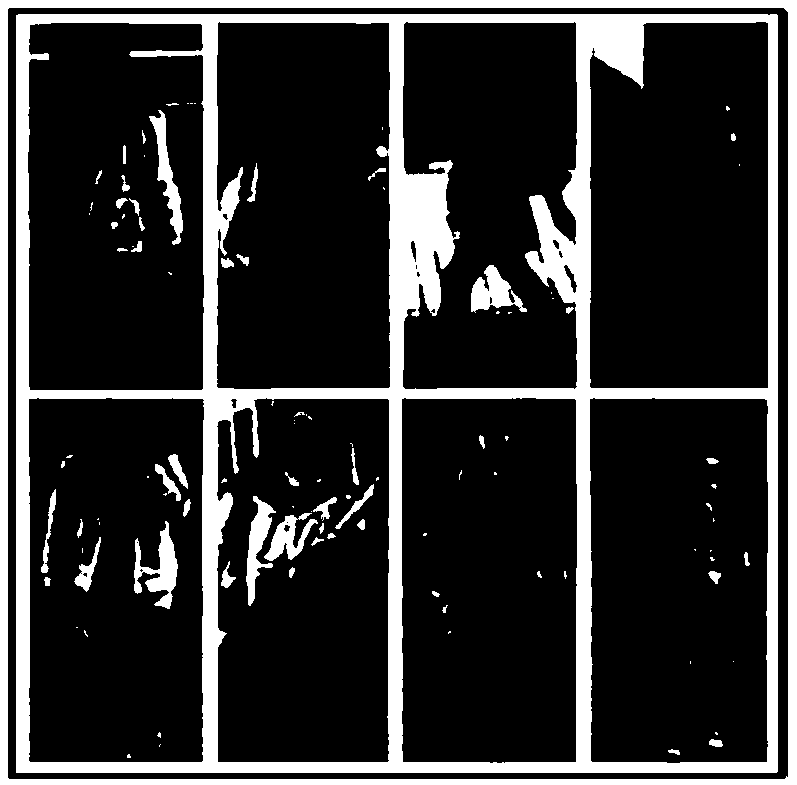

[0148] Example 2: CUHK01 dataset

[0149] This dataset consists of 3884 images of 971 pedestrians captured by two non-overlapping cameras on a campus, with 2 images of each pedestrian per view. During the experiment, the images were resized to 128 × 60. Figure 3 shows image pairs of the same pedestrian under different viewing angles. The images of the same pedestrian from different views differ greatly because of differences in posture, viewing angle, lighting, and background. Correctly matching pedestrian images on this dataset is therefore extremely challenging.
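The image counts quoted for the two datasets follow directly from the number of pedestrians, views, and images per view. The short sketch below records those figures and checks the arithmetic. The `ReIdDataset` class and its field names are hypothetical, and reading the first number of each image size as the height and the second as the width is an assumption; the text gives only "128 × 48" and "128 × 60".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReIdDataset:
    """Hypothetical record of the dataset figures quoted in the text."""
    name: str
    pedestrians: int
    views: int
    images_per_view: int
    height: int  # assumed to be the first number of the quoted size
    width: int   # assumed to be the second number of the quoted size

    @property
    def total_images(self) -> int:
        return self.pedestrians * self.views * self.images_per_view


VIPER = ReIdDataset("VIPeR", pedestrians=632, views=2, images_per_view=1,
                    height=128, width=48)
CUHK01 = ReIdDataset("CUHK01", pedestrians=971, views=2, images_per_view=2,
                     height=128, width=60)

for dataset in (VIPER, CUHK01):
    print(f"{dataset.name}: {dataset.total_images} images, "
          f"{dataset.height} x {dataset.width}")
```

Both totals agree with the figures stated for the datasets: 1264 images for VIPeR and 3884 for CUHK01.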
