A flexible configurable neural network computing unit, computing array and construction method thereof

A computing unit and neural network technology, applied in the field of neural network hardware architecture, that addresses two problems: the inability to fully exploit the data reusability of convolutional layers, and the inability to support the computation of different types of convolutional layers.

Embodiment Construction

[0058] The present invention is described in further detail below with reference to the accompanying drawings.

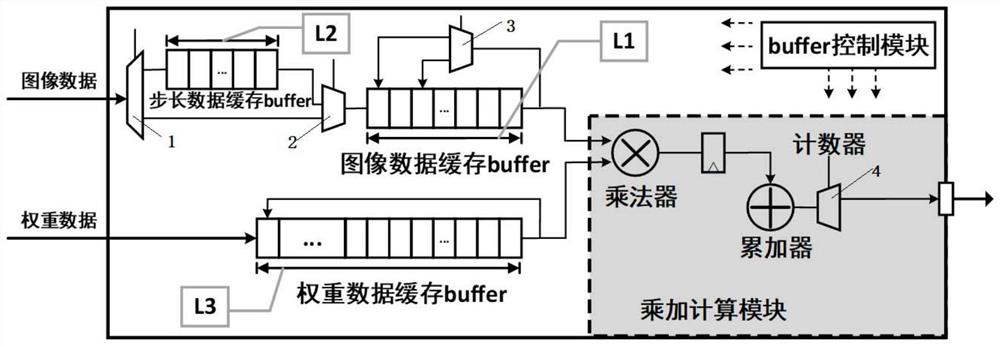

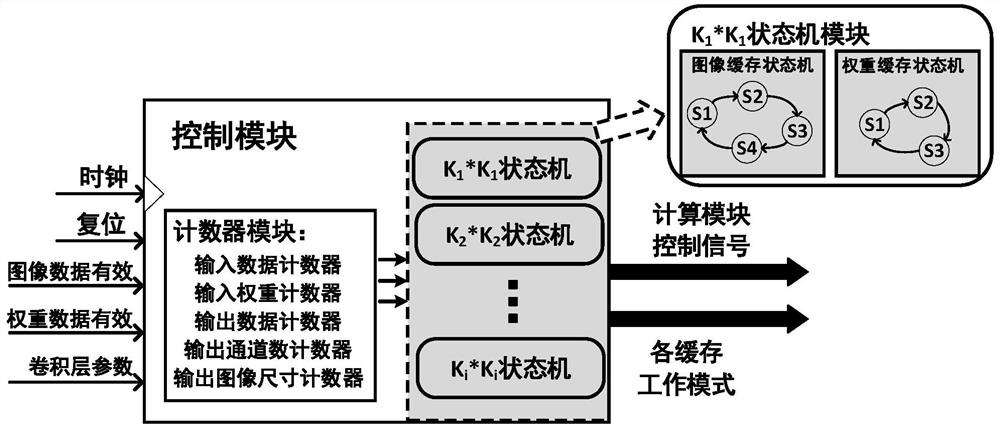

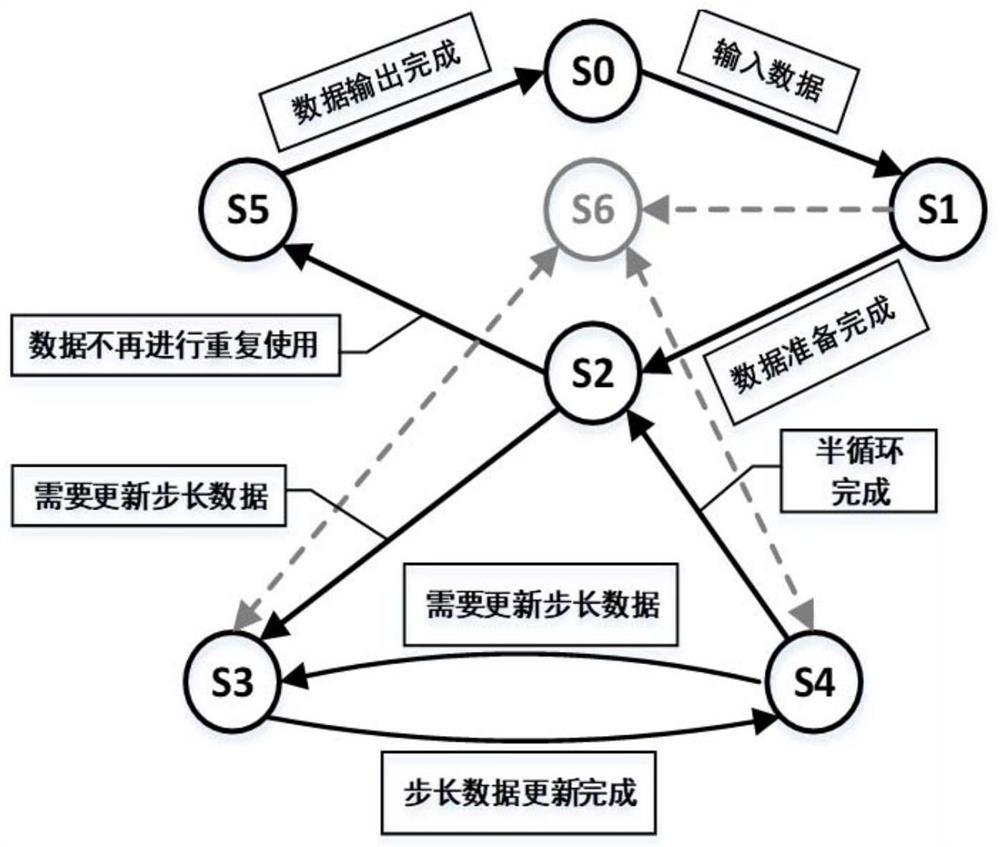

[0059] As shown in Figure 1, a flexible configurable neural network computing unit of the present invention includes a configurable storage module, a configurable control module, and a time-division-multiplexable multiply-add computing module. The configurable storage module includes a feature map data cache buffer, a step data cache buffer, and a weight data cache buffer. The configurable control module includes a counter module and a state machine module. The multiply-add computing module includes a multiplier and an accumulator.
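The composition described in [0059] can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented hardware: the names `ComputeUnit`, `State`, `load`, `tick`, and `run` are hypothetical, the three-state machine is an invented simplification, and the step data buffer is declared but left unused because this excerpt does not describe its role. The model shows one multiplier and one accumulator being reused across cycles, with a counter selecting the operands and a state machine deciding when to stop.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    """Hypothetical states for the state machine module."""
    IDLE = auto()
    COMPUTE = auto()
    DONE = auto()


@dataclass
class ComputeUnit:
    # Configurable storage module: three buffers named after the source text.
    feature_buf: list = field(default_factory=list)
    step_buf: list = field(default_factory=list)  # role not given in this excerpt
    weight_buf: list = field(default_factory=list)
    # Configurable control module: a counter and a state machine.
    counter: int = 0
    state: State = State.IDLE
    # Multiply-add module: one accumulator fed by one multiplier.
    acc: int = 0

    def load(self, features, weights):
        """Fill the buffers and reset the control and accumulator state."""
        if len(features) != len(weights):
            raise ValueError("features and weights must have equal length")
        self.feature_buf = list(features)
        self.weight_buf = list(weights)
        self.counter = 0
        self.acc = 0
        self.state = State.COMPUTE if self.feature_buf else State.DONE

    def tick(self):
        """Advance one cycle: a single multiply, added into the accumulator."""
        if self.state is not State.COMPUTE:
            return
        i = self.counter
        self.acc += self.feature_buf[i] * self.weight_buf[i]
        self.counter += 1
        if self.counter == len(self.feature_buf):
            self.state = State.DONE


def run(unit, features, weights):
    """Drive the unit until it finishes; return (result, cycles used)."""
    unit.load(features, weights)
    cycles = 0
    while unit.state is State.COMPUTE:
        unit.tick()
        cycles += 1
    return unit.acc, cycles
```

Calling `run(ComputeUnit(), [1, 2, 3], [4, 5, 6])` performs three multiply-accumulate cycles on the same multiplier and returns the dot product together with the cycle count.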

[0060] The feature map data cache buffer stores the part of the feature map data used in the convolution calculation and reuses feature map data that can be shared. The maximum length of the buffer is L1, and the size is $\max\{K_1 A_1, K_2 A_2, \ldots, K_i A_i\}$, where K is the size of the convolution kernel in the convoluti...
