Method, application, device and system for positioning object step by step based on vision fusion

A step-by-step target positioning technology, applied in the field of visual positioning, addresses three problems: the vision system takes a long time to capture repeated images, the positioning accuracy of screw holes is reduced, and the assembly task cannot be completed. Its effects are fewer captured images, higher production efficiency, and high precision.

Examples

Embodiment 1

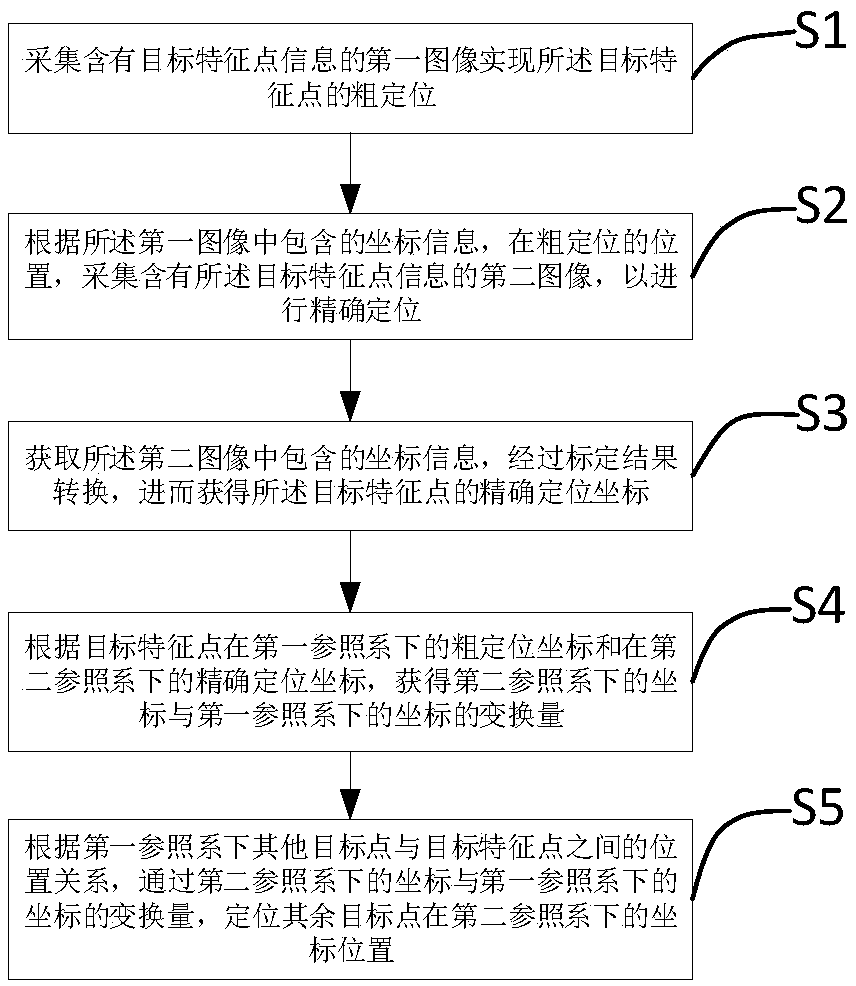

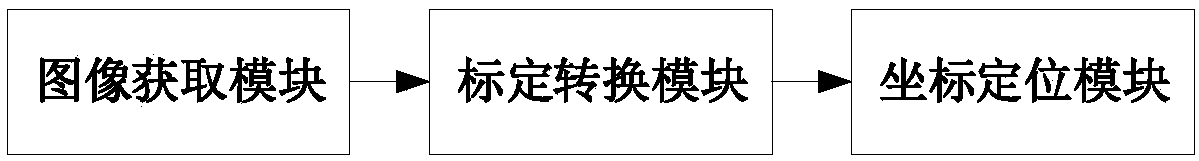

[0046] Figure 1 is a flow chart of the step-by-step target positioning method based on vision fusion in Embodiment 1 of the present invention. The method in this embodiment includes the following steps:

[0047] S1. Acquire a first image containing target feature point information to achieve coarse positioning of the target feature point;

[0048] S2. According to the coordinate information contained in the first image, acquire, at the coarsely located position, a second image containing the target feature point information for fine positioning. Specifically: after the first image containing the target feature point information has been acquired, the image acquisition field of view is changed and the target feature point is imaged again, yielding the second image;

[0049] S3. Obtain the coordinate information included in the second image, and conv...
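The coarse-to-fine flow of steps S1 to S3 can be sketched as follows. This is only an illustration under stated assumptions, not the patent's implementation: the text of S3 is truncated in this copy, so the conversion step is assumed to be a pixel-to-millimetre correction around the image centre. The linear calibration `PixelToWorld`, the function `locate_step_by_step`, and all numeric values are hypothetical, and the image axes are assumed to be aligned with the robot axes.

```python
from dataclasses import dataclass


@dataclass
class PixelToWorld:
    """Hypothetical linear calibration: world = origin + scale * pixel."""
    scale_mm_per_px: float
    origin_x_mm: float
    origin_y_mm: float

    def convert(self, u, v):
        # Map a pixel (u, v) to planar world coordinates in millimetres.
        return (self.origin_x_mm + self.scale_mm_per_px * u,
                self.origin_y_mm + self.scale_mm_per_px * v)


def locate_step_by_step(coarse_px, fine_px, coarse_cal,
                        fine_scale_mm_per_px, fine_image_size):
    """Combine a coarse and a fine detection into one target position.

    coarse_px: feature pixel found in the first (wide field of view) image.
    fine_px:   feature pixel found in the second (narrow field of view) image,
               assumed to be captured centred on the coarse position.
    """
    # S1: coarse positioning from the first image.
    rough_x, rough_y = coarse_cal.convert(*coarse_px)

    # S2/S3 (assumed form): the offset of the feature from the centre of the
    # second image, scaled to millimetres, corrects the coarse estimate.
    centre_u = fine_image_size[0] / 2
    centre_v = fine_image_size[1] / 2
    dx = (fine_px[0] - centre_u) * fine_scale_mm_per_px
    dy = (fine_px[1] - centre_v) * fine_scale_mm_per_px
    return rough_x + dx, rough_y + dy


# Illustrative values only.
coarse_cal = PixelToWorld(scale_mm_per_px=0.5, origin_x_mm=100.0, origin_y_mm=50.0)
target = locate_step_by_step(
    coarse_px=(200, 100),
    fine_px=(330, 236),
    coarse_cal=coarse_cal,
    fine_scale_mm_per_px=0.02,
    fine_image_size=(640, 480),
)
```

Because the second image covers a smaller field of view, each of its pixels spans less physical distance (0.02 mm against 0.5 mm in these made-up numbers), which is where the finer correction comes from.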

Embodiment 2

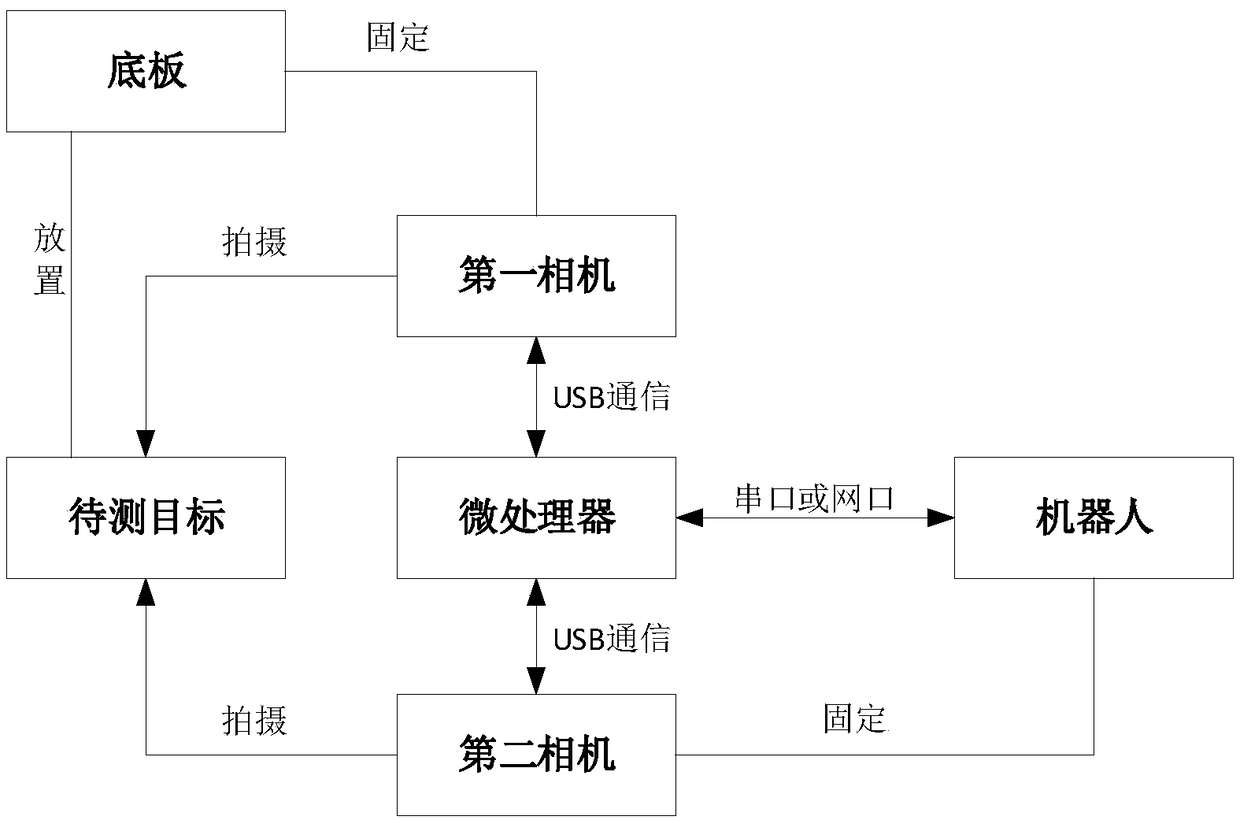

[0059] Figure 4 is a schematic diagram of the robot coordinate system and the camera coordinate system in Embodiment 2 of the present invention. The SCARA manipulator includes a manipulator base 13 and a manipulator end 14; the figure also includes a base plate 15 and the origin 101 of the manipulator end coordinate system. The first camera 11 is an eye-to-hand camera with a short focal length lens, and the second camera 12 is an eye-in-hand camera with a long focal length lens. The first camera 11 is responsible for coarse positioning over a large field of view, and the second camera 12 is responsible for fine positioning over a small field of view. The second camera 12 is rigidly connected to the manipulator end 14, so as the end 14 moves, the second camera 12 moves with it.
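The practical difference between the two camera mountings is in how a measured point is mapped into the manipulator base frame. The sketch below uses planar rigid transforms and is only an illustration under assumptions: the function names, the frame names (`base_T_cam1`, `base_T_end`, `end_T_cam2`), and the numeric poses are hypothetical and do not come from the patent, and the camera points are assumed to be already expressed in millimetres in the camera frame.

```python
import math


def make_pose(x, y, theta_deg):
    """Planar rigid transform as a 3x3 homogeneous matrix."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, x],
            [s, c, y],
            [0.0, 0.0, 1.0]]


def compose(a, b):
    """Matrix product a * b, chaining two transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(pose, point):
    """Map a 2D point through a homogeneous transform."""
    x, y = point
    return (pose[0][0] * x + pose[0][1] * y + pose[0][2],
            pose[1][0] * x + pose[1][1] * y + pose[1][2])


def eye_to_hand_to_base(base_T_cam1, p_cam1):
    # The first camera is fixed in the workspace, so one constant
    # transform maps its measurements to the base frame.
    return apply(base_T_cam1, p_cam1)


def eye_in_hand_to_base(base_T_end, end_T_cam2, p_cam2):
    # The second camera rides on the manipulator end: end_T_cam2 is constant
    # (rigid mount), while base_T_end changes with every robot move.
    return apply(compose(base_T_end, end_T_cam2), p_cam2)


# Illustrative poses only.
coarse_in_base = eye_to_hand_to_base(make_pose(300.0, 0.0, 0.0), (10.0, 20.0))
fine_in_base = eye_in_hand_to_base(
    make_pose(100.0, 50.0, 90.0),   # current end pose in the base frame
    make_pose(30.0, 0.0, 0.0),      # camera offset on the end (fixed)
    (5.0, 0.0),
)
```

In the eye-in-hand case the current end pose must be read from the robot at the moment the second image is captured, because the same camera-frame point maps to a different base-frame point after every move.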

[0060] Figure 5 is a schematic diagram of the step-by-step target positioning method based on vision fusion in Embodiment 2 of the present invention. It tak...
