A multi-modal action recognition method based on a deep neural network

This technology combines deep neural networks and action recognition and is applied in the field of multi-modal action recognition based on deep neural networks. It addresses problems such as the loss of temporal information, and achieves the effects of improving recognition accuracy and precision and reducing computing time.


Embodiment

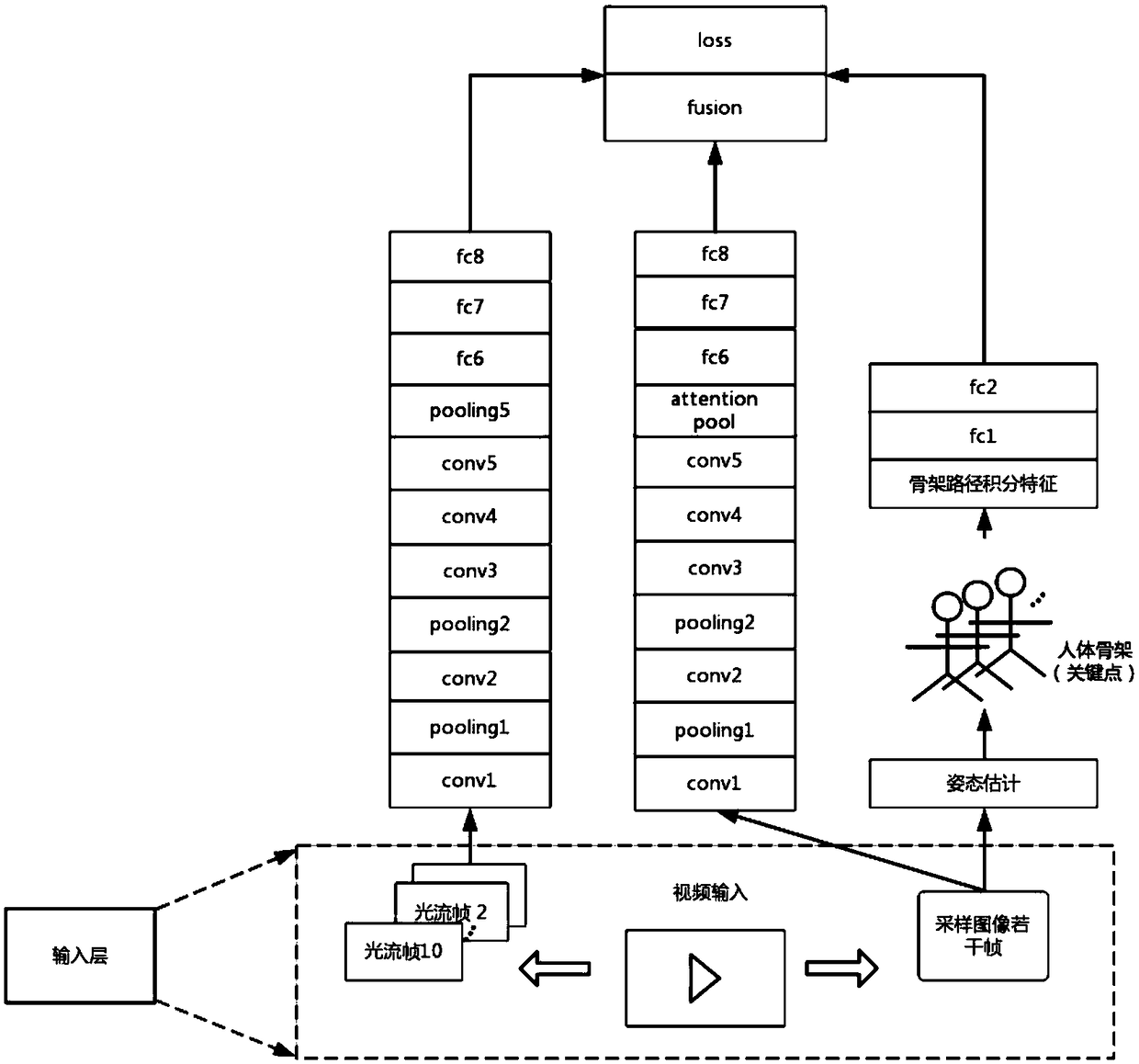

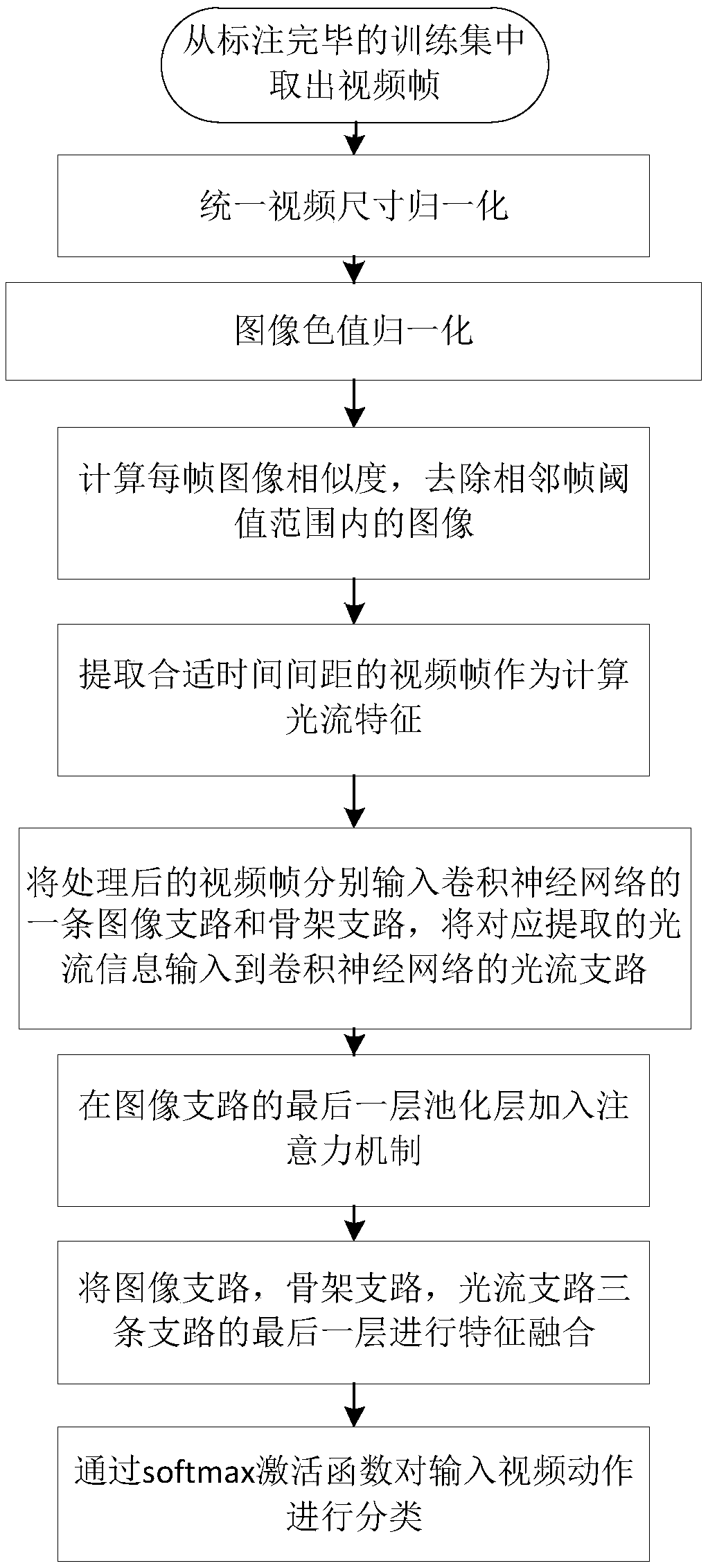

[0039] As shown in Figure 1, this embodiment discloses a multi-modal action recognition method based on a deep neural network.

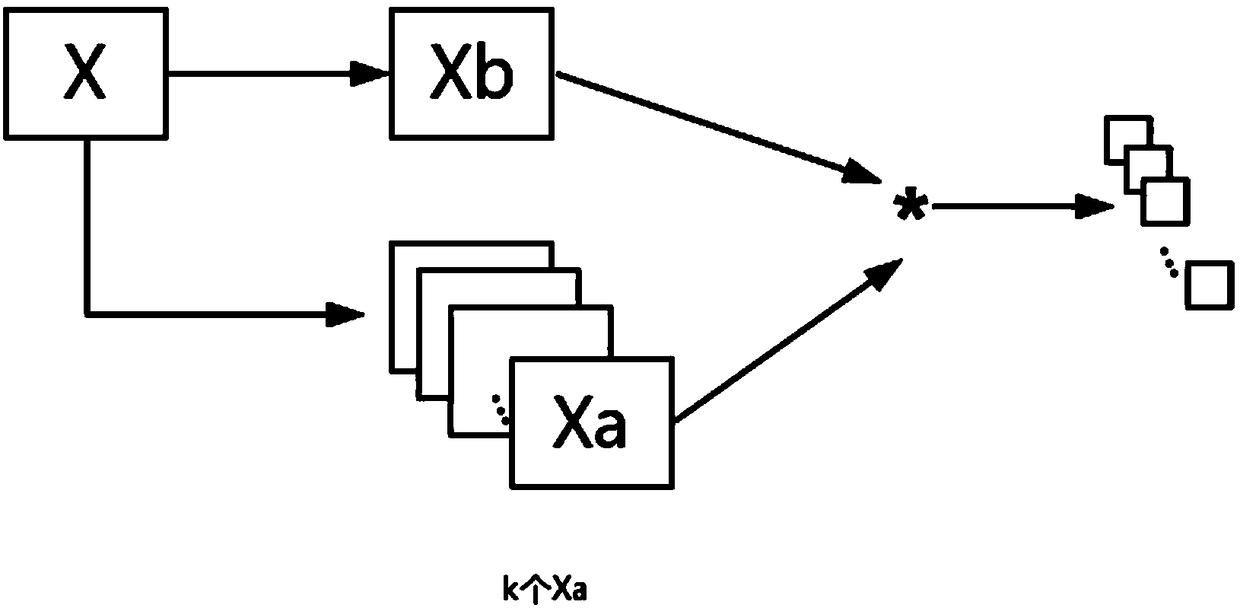

[0040] The deep neural network used in this embodiment has three branches at the lower level: a convolutional neural network for extracting temporal features, a convolutional neural network for extracting spatial features, and a fully connected network for processing skeleton path integral features. At the higher level, the three branches are merged into one through feature fusion, and the class ID of the video action is predicted with the softmax activation function. In the image branch, an attention-based pooling structure is introduced, which helps the network focus on features that are conducive to recognizing actions without changing the existing network structure, thereby reducing the interference of irrelevant features and improving the existing network structure. T...
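The data flow described in paragraph [0040] can be illustrated with a minimal NumPy sketch. It is not the patented implementation. The excerpt does not specify the form of the attention pooling, the fusion operator, or any layer sizes, so the sketch assumes a softmax-weighted sum over spatial positions for the attention pooling, plain concatenation for the feature fusion, and a single dense layer for the skeleton branch. All function names, parameter names, and dimensions (`attention_pool`, `fuse_and_classify`, `score_vec`, and so on) are hypothetical, and random arrays stand in for the outputs of the two convolutional branches.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_pool(feature_map, score_vec):
    """Assumed attention pooling: a softmax-weighted sum over positions.

    feature_map: (num_positions, channels) features from the image branch.
    score_vec:   (channels,) hypothetical learned scoring vector.
    """
    scores = feature_map @ score_vec   # one relevance score per position
    weights = softmax(scores)          # attention weights, sum to 1
    return weights @ feature_map       # (channels,) pooled feature


def skeleton_branch(path_features, W, b):
    # Assumed single fully connected layer with ReLU.
    return np.maximum(W @ path_features + b, 0.0)


def fuse_and_classify(temporal_feat, spatial_feat, skeleton_feat, W, b):
    # Assumed fusion by concatenation, followed by a softmax classifier.
    fused = np.concatenate([temporal_feat, spatial_feat, skeleton_feat])
    return softmax(W @ fused + b)


# Stand-in inputs (hypothetical sizes): random arrays replace real branch outputs.
rng = np.random.default_rng(0)
num_classes = 5
temporal_feat = rng.normal(size=8)         # output of the temporal CNN
spatial_map = rng.normal(size=(49, 8))     # 7x7 positions, 8 channels
path_features = rng.normal(size=6)         # skeleton path integral features

spatial_feat = attention_pool(spatial_map, rng.normal(size=8))
skeleton_feat = skeleton_branch(path_features, rng.normal(size=(4, 6)), np.zeros(4))

probs = fuse_and_classify(
    temporal_feat, spatial_feat, skeleton_feat,
    rng.normal(size=(num_classes, 8 + 8 + 4)), np.zeros(num_classes),
)
predicted_id = int(np.argmax(probs))       # predicted action class ID
```

With a zero scoring vector the assumed attention pooling reduces to ordinary average pooling, which is one way to see that it can be added to a network without altering the layers around it.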
