Chromatic Aberration Correction Method Suitable for Video Stitching

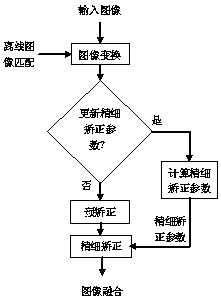

A chromatic aberration correction technology for video stitching, applied in image enhancement, instruments, and graphics and image conversion. It addresses the heavy computation and coarse correction granularity involved in solving fine-grained correction parameters. This reduces the amount of data to be processed, alleviates update lag, and eliminates chromatic aberration in the stitched image.


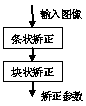


Detailed Description of the Embodiments

[0034] To make the present invention easier to understand, the basic principle of chromatic aberration correction is briefly described below:

[0035] Generally, chromatic aberration correction can be expressed by the following formula:

[0036] $$I'(x,y) = g\,I(x,y) \qquad (1)$$

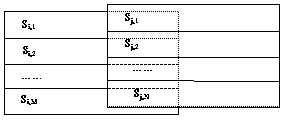

[0037] Here, I is the image before correction, g is the correction coefficient, and I′ is the corrected image. If I is a color image, each color channel is corrected with its own g. If g is a constant, the correction is global; if g varies with the pixel coordinates (x, y), it can be regarded as local correction.
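The distinction between global and local correction in formula (1) can be illustrated with a short sketch. It assumes NumPy, an H × W × C floating-point image, and a hypothetical helper `apply_correction`; none of these come from the patent. A length-C gain vector gives one constant g per channel (global correction), while an H × W × C gain map lets g vary with (x, y) (local correction).

```python
import numpy as np


def apply_correction(image, gain) -> np.ndarray:
    """Apply formula (1), I'(x, y) = g * I(x, y).

    Hypothetical helper for illustration only.
    image: H x W x C array of pixel values (C color channels).
    gain:  length-C vector  -> global correction (one constant g per channel)
           H x W x C array  -> local correction (g varies with (x, y))
    """
    image = np.asarray(image, dtype=np.float64)
    gain = np.asarray(gain, dtype=np.float64)
    if gain.ndim == 1:
        # Global: one coefficient per channel, broadcast over every pixel.
        if gain.shape[0] != image.shape[-1]:
            raise ValueError("need exactly one gain per color channel")
        return image * gain
    if gain.shape != image.shape:
        raise ValueError("local gain map must match the image shape")
    # Local: element-wise product, a separate g at each pixel and channel.
    return image * gain
```

The result is left as floating point; clipping back to the valid pixel range (for example 0 to 255 for 8-bit video) would be a separate step.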

[0038] Computing the correction coefficients can be treated as an optimization problem: the coefficients are chosen so that corresponding pixels of the corrected images in the overlap region have the minimum squared error. The objective function can be expressed as follows [2]:

[0039]

[0040] Here, n is the...
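The objective function itself is not reproduced in this excerpt, so the following is only a minimal sketch of the general least-squares idea, under a simplified assumed objective rather than the one in [2]. The reference image's gain is held at 1, and only the source image's per-channel gain g is solved, minimizing E(g) = Σₖ (g·sₖ − rₖ)² over the n corresponding overlap pixels sₖ (source) and rₖ (reference). Setting dE/dg = 0 gives the closed form g = Σₖ sₖrₖ / Σₖ sₖ². The helper name `solve_channel_gains` and the N × C input layout are hypothetical.

```python
import numpy as np


def solve_channel_gains(src_overlap, ref_overlap) -> np.ndarray:
    """Per-channel gain g minimizing sum_k (g * s_k - r_k)^2.

    Hypothetical helper under an assumed, simplified objective.
    src_overlap, ref_overlap: N x C arrays of corresponding pixel values
    (N overlapping pixel pairs, C channels). The reference image is held
    fixed (gain 1); only the source image is corrected.
    """
    s = np.asarray(src_overlap, dtype=np.float64)
    r = np.asarray(ref_overlap, dtype=np.float64)
    if s.shape != r.shape or s.ndim != 2:
        raise ValueError("expected matching N x C arrays")
    # dE/dg = 2 * sum_k (g * s_k - r_k) * s_k = 0
    #   =>  g = sum_k s_k * r_k / sum_k s_k^2, computed per channel.
    num = np.sum(s * r, axis=0)
    den = np.sum(s * s, axis=0)
    # If a channel is all zero in the overlap, the gain is undefined; keep 1.
    safe_den = np.where(den > 0, den, 1.0)
    return np.where(den > 0, num / safe_den, 1.0)
```

Under these assumptions, the returned vector plays the role of the per-channel constant g in formula (1), that is, a global correction. A local variant could repeat the same fit over smaller blocks of the overlap region.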
