Robotic arm visual grasping system and method based on a self-supervised learning neural network

Neural-network and self-supervised learning technology applied in the field of robotic arms. It addresses two problems: prior methods fail to account for important factors that affect the actual mass distribution of the grasped object, and they misestimate the grasp. The technology improves the grasping success rate and yields an accurate grasping pose.

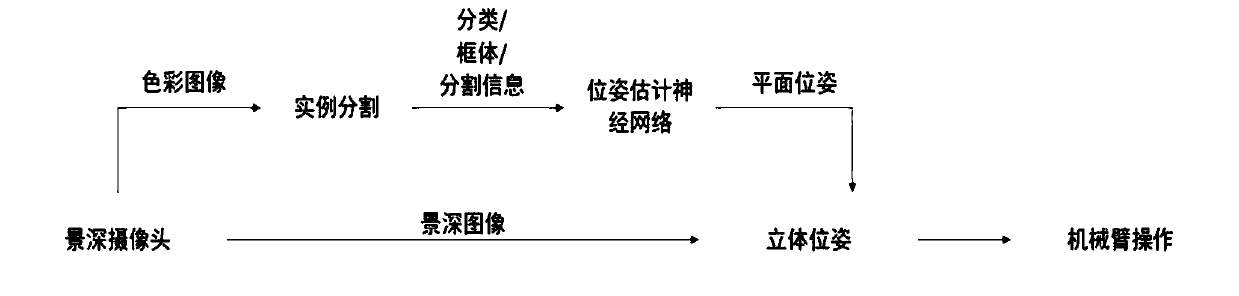

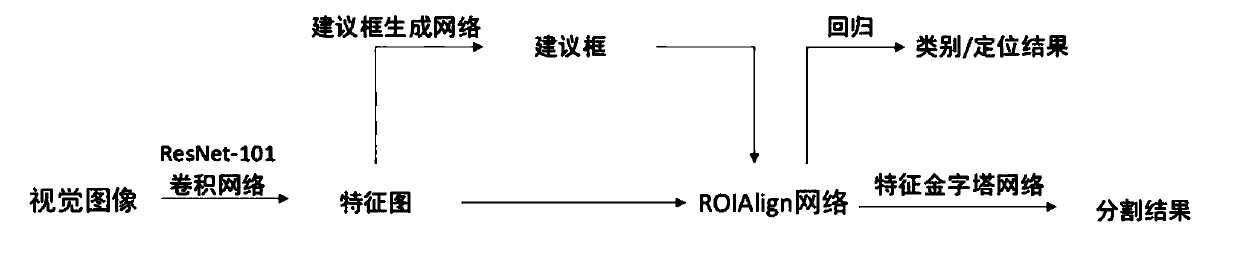

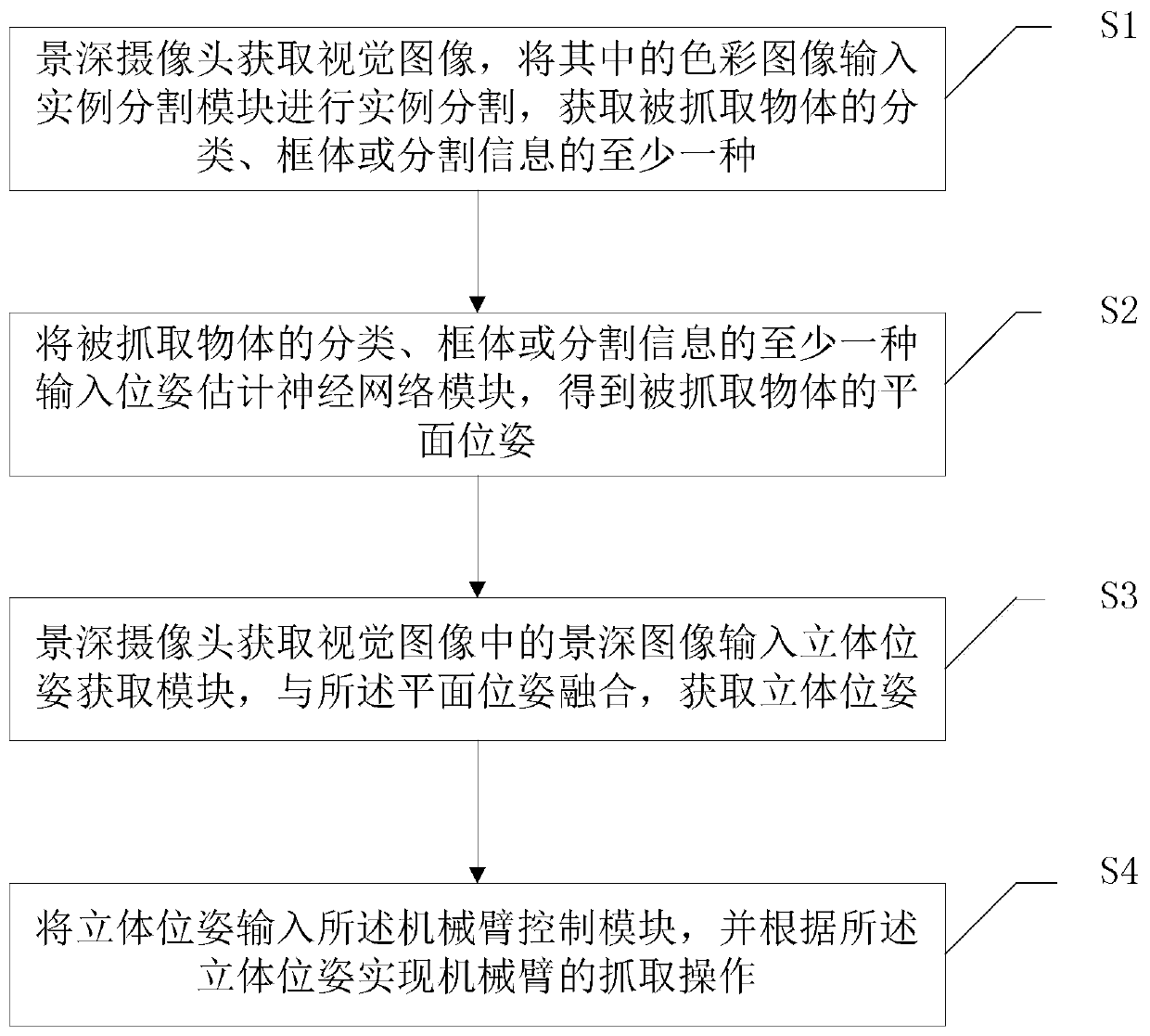

[0034] The present disclosure provides a robotic arm visual grasping system and method based on a self-supervised learning neural network. The method acquires a visual image of the object to be grasped and uses neural-network regression for two tasks: estimating the approximate position of the object and precisely estimating the grasping pose. A convolutional neural network first recognizes the object and regresses the approximate outline position of the object in the visual image. Different grasping positions and grasping angles are then sampled within the object outline. Each candidate position and angle is scored after passing through the fully connected layer of the neural network. The highest-scoring position and angle are selected as the precise pose with which the robotic arm grasps the object. The weights of the neural network are obtained through self-supervised training of the manipulator, considering the actual density d...
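
The sample-score-select step in [0034] can be illustrated with a minimal sketch. It rests on several assumptions:

- The regressed object outline is an axis-aligned bounding box in pixel coordinates.
- Grasp positions are drawn uniformly at random inside that box.
- Each position is paired with evenly spaced angles in [0, π).
- A plain Python function stands in for the fully connected scoring layer.

The names `Box`, `sample_grasps`, `select_grasp` and `make_toy_scorer` are hypothetical. The toy scorer favors grasps near an assumed center of mass and an assumed preferred angle. It is only a placeholder for the trained network, which the source says is learned by self-supervision and accounts for the object's actual density.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A candidate grasp: (x, y) in image pixels plus a gripper angle in radians.
Grasp = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Hypothetical stand-in for the object outline regressed by the CNN."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def sample_grasps(box: Box, n_positions: int, n_angles: int,
                  rng: random.Random) -> List[Grasp]:
    """Sample random positions inside the outline, each paired with
    n_angles evenly spaced angles in [0, pi)."""
    angles = [k * math.pi / n_angles for k in range(n_angles)]
    candidates: List[Grasp] = []
    for _ in range(n_positions):
        x = rng.uniform(box.x_min, box.x_max)
        y = rng.uniform(box.y_min, box.y_max)
        candidates.extend((x, y, a) for a in angles)
    return candidates


def select_grasp(box: Box, score_fn: Callable[[Grasp], float],
                 n_positions: int = 64, n_angles: int = 8,
                 seed: int = 0) -> Grasp:
    """Score every sampled candidate and return the highest-scoring one."""
    rng = random.Random(seed)
    candidates = sample_grasps(box, n_positions, n_angles, rng)
    return max(candidates, key=score_fn)


def make_toy_scorer(com_x: float, com_y: float,
                    preferred_angle: float) -> Callable[[Grasp], float]:
    """Hypothetical placeholder for the fully connected scoring layer:
    penalizes distance from an assumed center of mass and deviation
    from an assumed preferred gripper angle."""
    def score(g: Grasp) -> float:
        x, y, a = g
        dist = math.hypot(x - com_x, y - com_y)
        # Angles a and a + pi describe the same parallel-jaw grasp, so
        # measure the angular difference modulo pi (result in [0, pi/2]).
        d_ang = abs((a - preferred_angle + math.pi / 2) % math.pi - math.pi / 2)
        return -dist - 10.0 * d_ang
    return score


# Usage: an assumed outline and an assumed center of mass.
box = Box(100.0, 50.0, 200.0, 150.0)
scorer = make_toy_scorer(140.0, 90.0, math.pi / 2)
best = select_grasp(box, scorer)
print("selected grasp (x, y, angle):", best)
```

Keeping the scorer as a pluggable callable separates the sampling logic from the model. A trained network's forward pass could replace `make_toy_scorer` without changing `select_grasp`.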

© 2025 PatSnap. All rights reserved.