Neural network acceleration method and device, neural network acceleration chip and storage medium

A neural network acceleration chip technology, applied in the field of artificial intelligence, that addresses the problems of long acceleration time and low efficiency when accelerating a neural network.

Examples

Embodiment 1

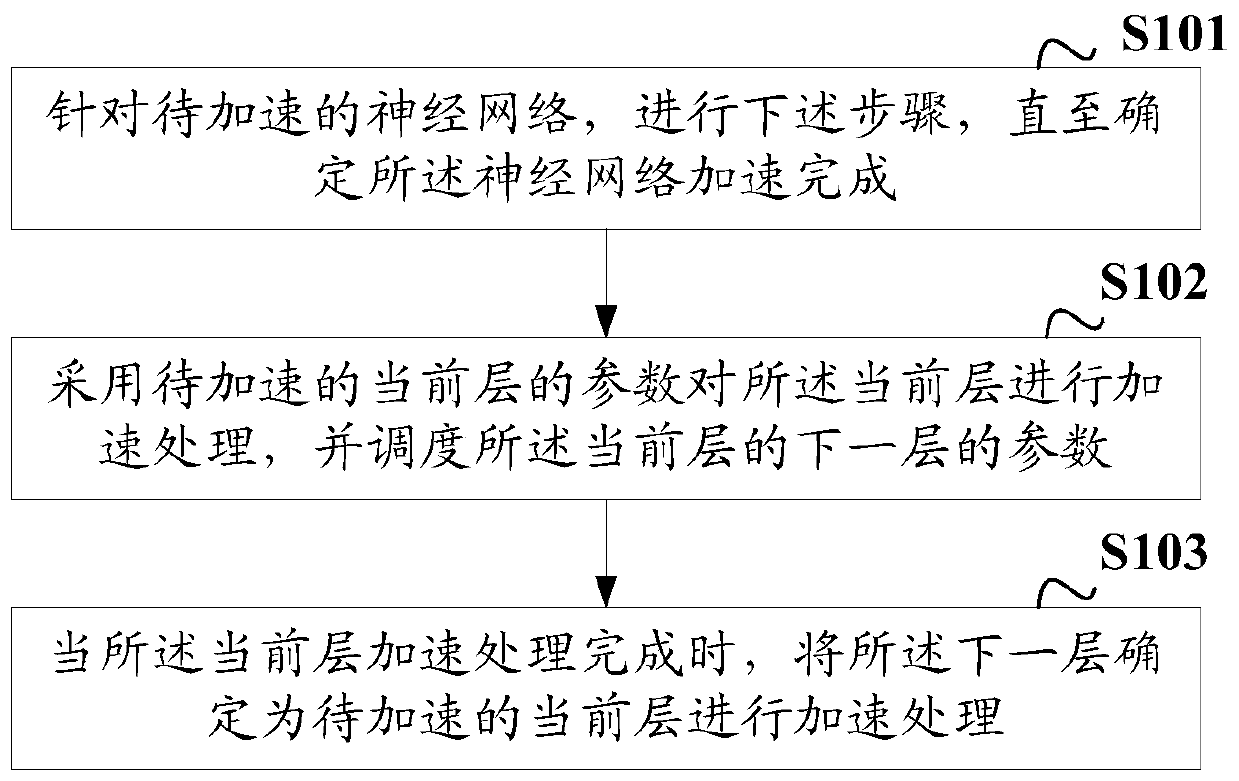

[0038] Figure 1 is a schematic diagram of a neural network acceleration process provided by an embodiment of the present invention. The process includes the following steps:

[0039] S101: For the neural network to be accelerated, perform the following steps until it is determined that the acceleration of the neural network is completed.

[0040] The neural network acceleration method provided by the embodiment of the present invention is applied to a neural network acceleration chip. The chip can be a GPU (Graphics Processing Unit), an AI (Artificial Intelligence) chip, an FPGA (Field-Programmable Gate Array) chip, or another chip capable of accelerating neural networks. Specifically, the method may be applied to a computing unit in the neural network acceleration chip.

[0041] The neural network acceleration chip stores an algorithm for accelerating processing of the neural network, so t...

Embodiment 2

[0063] On the basis of the above embodiments, in this embodiment of the present invention, if the current layer to be accelerated is the last layer, scheduling the parameters of the next layer of the current layer includes:

[0064] Scheduling the parameters of the first layer.

[0065] Since each layer of the neural network needs to be cyclically accelerated before the acceleration of the neural network is completed, if the current layer is the last layer, the first layer can be treated as the next layer of the last layer for accelerated processing.

[0066] Therefore, scheduling the parameters of the next layer of the last layer specifically means scheduling the parameters of the first layer.

[0067] In the embodiment of the present invention, when the current layer is the last layer, the parameters of the first layer are scheduled as the parameters of the next layer of the last layer, which ensures that the neural network is cycled layer by layer before the accelera...
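The wrap-around rule of this embodiment can be sketched in a few lines of Python. This is only an illustrative sketch, not the patented implementation: the function names `next_layer_index` and `schedule_order`, the zero-based layer numbering, and the fixed number of steps used in place of the completion check are all assumptions made for the example.

```python
def next_layer_index(current: int, num_layers: int) -> int:
    """Return the index of the layer whose parameters are scheduled next.

    Assumption: layers are numbered 0 .. num_layers - 1. When `current`
    is the last layer, the first layer (index 0) is returned.
    """
    if num_layers <= 0:
        raise ValueError("num_layers must be positive")
    if not 0 <= current < num_layers:
        raise ValueError("current layer index out of range")
    return (current + 1) % num_layers


def schedule_order(num_layers: int, steps: int, start: int = 0) -> list[int]:
    """List the layers processed over `steps` iterations, cycling layer by layer.

    Assumption: a fixed step count stands in for the chip's own check
    that acceleration of the network is complete.
    """
    order = []
    layer = start
    for _ in range(steps):
        order.append(layer)
        layer = next_layer_index(layer, num_layers)
    return order
```

For a hypothetical three-layer network, `schedule_order(3, 7)` visits layers 0, 1, 2 and then returns to layer 0, so the last layer is always followed by the first.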

Embodiment 3

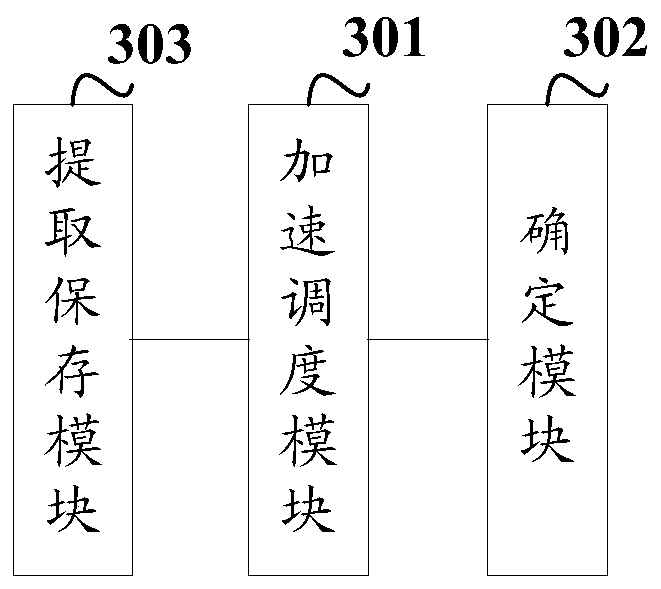

[0069] On the basis of the above embodiments, in this embodiment of the present invention, scheduling the parameters of the next layer of the current layer includes:

[0070] Scheduling the parameters of the next layer of the current layer that are stored in the on-chip memory.

[0071] To further improve the acceleration efficiency of the neural network, the parameters of each layer are saved in advance in the internal storage module of the neural network acceleration chip, that is, the on-chip memory, rather than in the external processor, so that the parameters of the next layer can be scheduled more quickly.

[0072] Specifically, the neural network acceleration chip can schedule the parameters of the next layer of the current layer directly from the on-chip memory, or indirectly through another file, for example a REG file.

[0073] The on-chip memory includes a read-only memory (...
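The difference between direct and indirect scheduling from on-chip memory can be illustrated with a small software model. Everything in this sketch is hypothetical: the class `OnChipMemory`, the flat `buffer`, and the `reg_table` of (offset, length) entries are assumptions used to show one way an intermediate REG file could point at each layer's parameters. The source does not describe the actual memory layout or register format.

```python
class OnChipMemory:
    """Toy model of on-chip memory holding every layer's parameters.

    Assumption: parameters are packed back to back in one flat buffer,
    and a register table records where each layer's block starts.
    """

    def __init__(self, layer_params: list[list[float]]) -> None:
        self.buffer: list[float] = []
        self.reg_table: list[tuple[int, int]] = []  # (offset, length) per layer
        for params in layer_params:
            self.reg_table.append((len(self.buffer), len(params)))
            self.buffer.extend(params)

    def fetch_direct(self, offset: int, length: int) -> list[float]:
        """Direct scheduling: the caller already knows the location."""
        return self.buffer[offset:offset + length]

    def fetch_via_reg(self, layer: int) -> list[float]:
        """Indirect scheduling: look up the location in the register table."""
        offset, length = self.reg_table[layer]
        return self.fetch_direct(offset, length)


# Hypothetical three-layer network with made-up parameter values.
mem = OnChipMemory([[1.0, 2.0], [3.0, 4.0, 5.0], [6.0]])
layer1_params = mem.fetch_via_reg(1)
```

In both paths the parameters never leave the chip model, which is the point of the embodiment: the next layer's parameters are read from local storage instead of being requested from an external processor.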
