Robot visual guidance positioning algorithm

A robot-vision guided positioning technology, applied in manipulators, program-controlled manipulators, manufacturing tools, etc. It solves problems such as the inability to correct the pose of offset material, and improves material-grabbing efficiency and production rhythm while saving costs.

Embodiment Construction

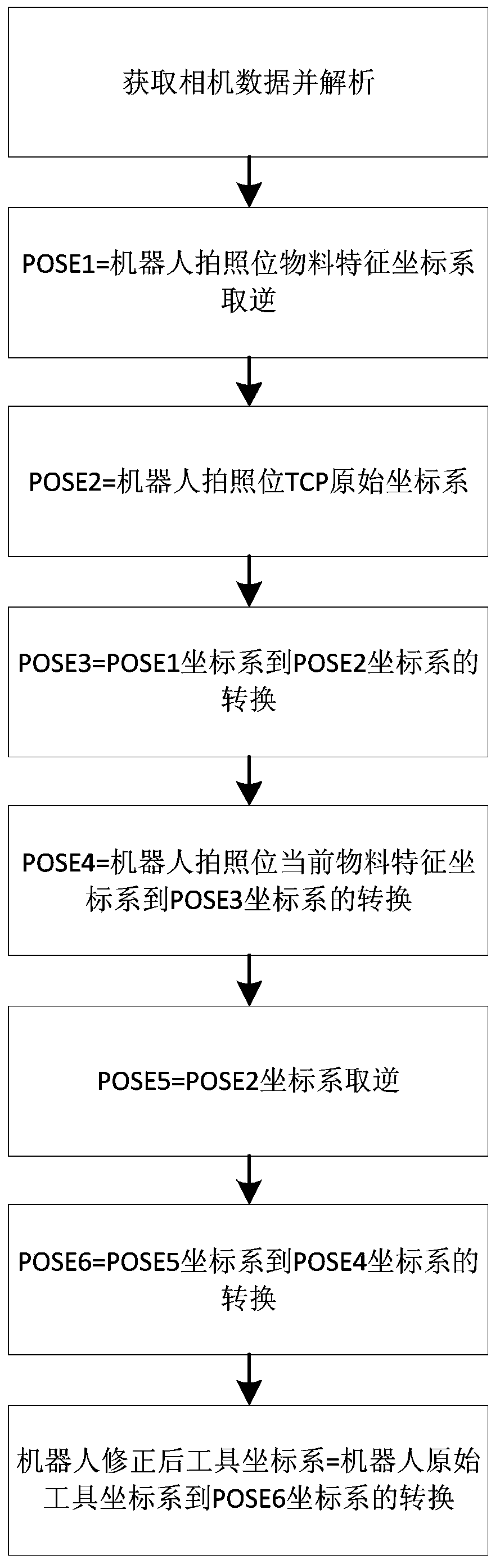

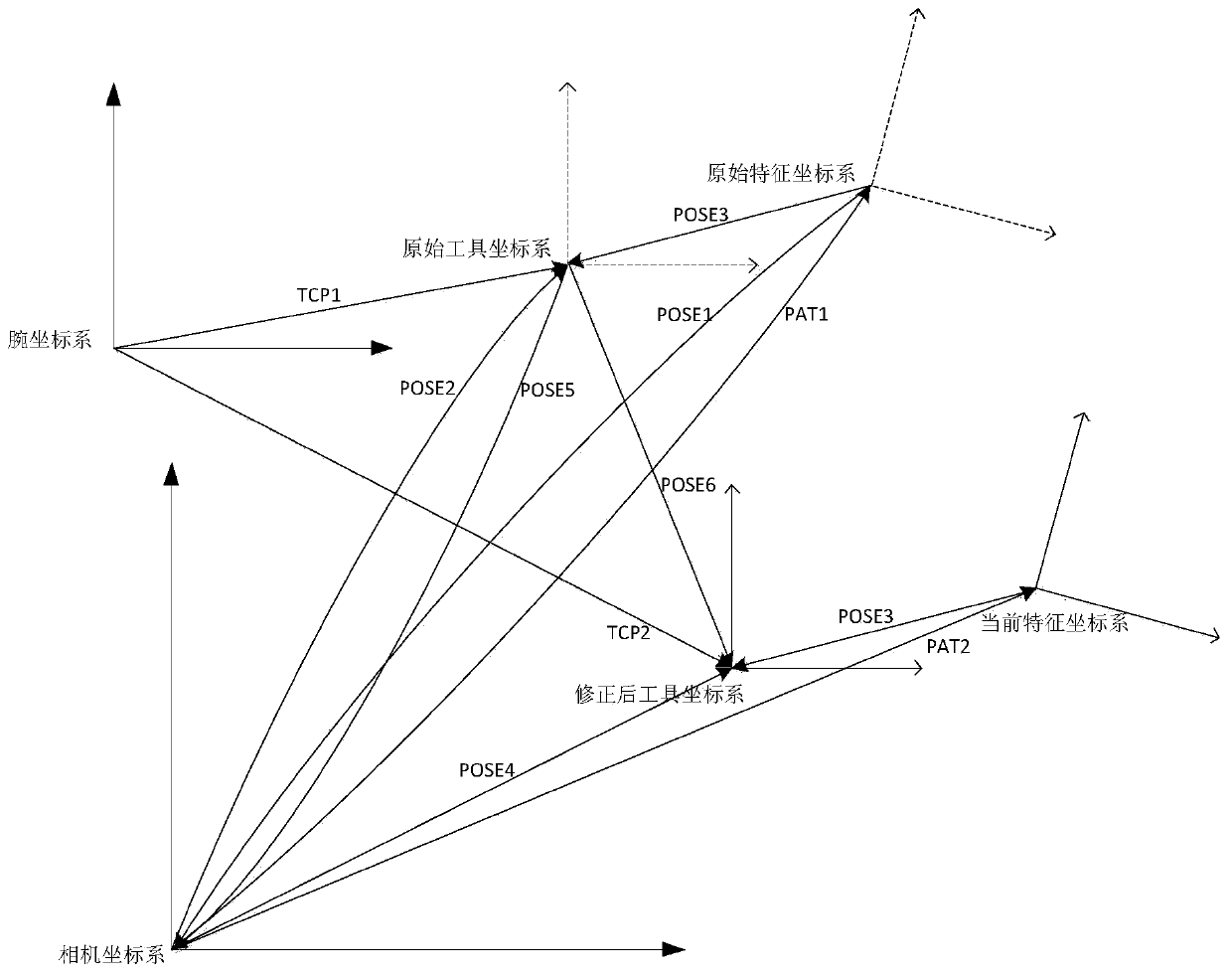

[0028] Referring to Figure 1, Figure 2, Figure 3, Figure 4 and Figure 5, the robot visual guidance positioning algorithm of the present invention comprises the following steps:

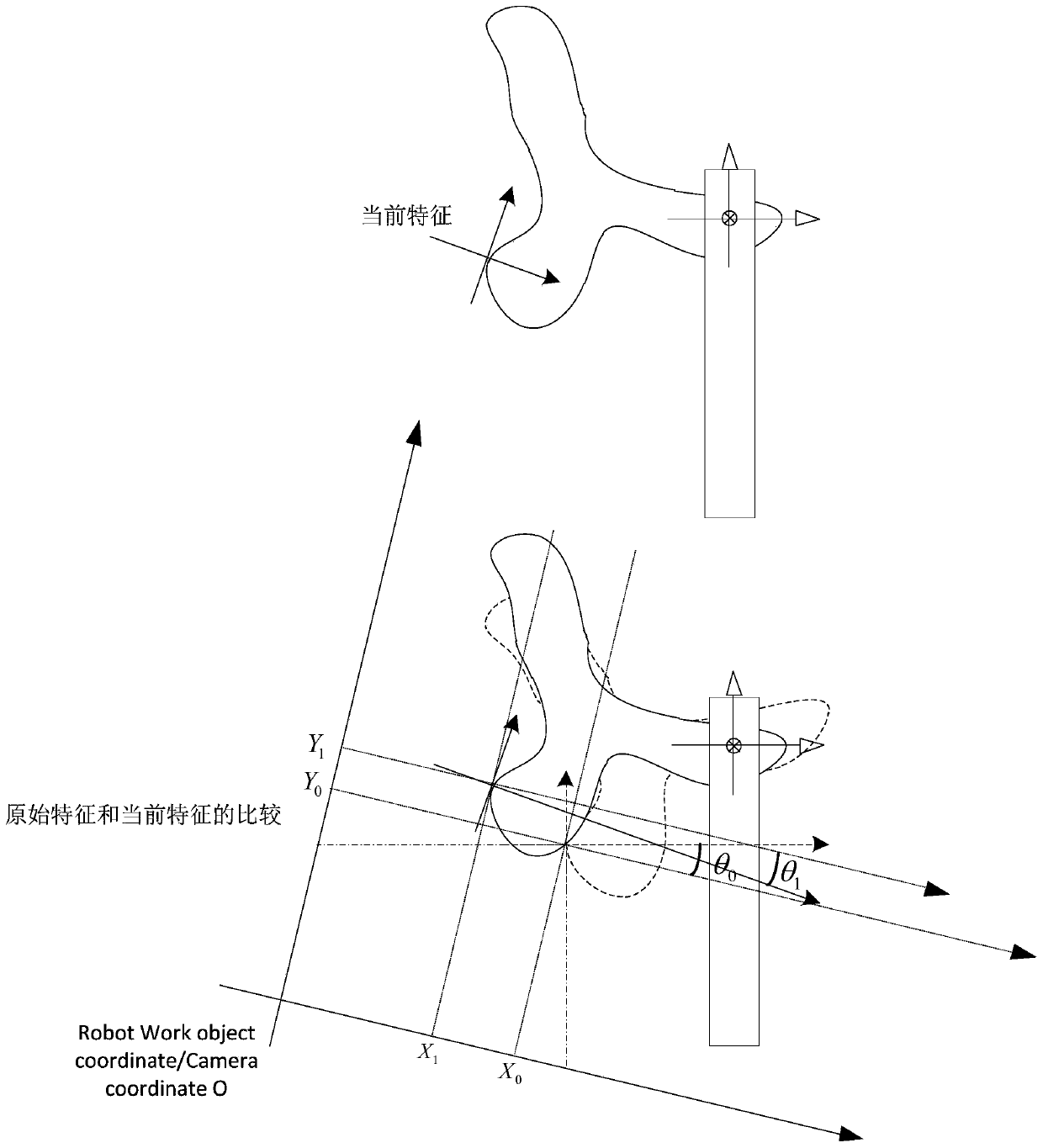

[0029] Step s1: The robot grabs the material; at this time, the pose of the material relative to the fixture deviates from the pose of the original material relative to the fixture;

[0030] Step s2: The robot carries the grabbed material to a position under the smart camera, which takes a picture;

[0031] Step s3: The camera calculates the pose of the current material in the camera coordinate system, and transmits the pose data of the original material and the current material to the robot;

[0032] Step s4: The robot converts the poses between coordinate systems and corrects the robot TCP (tool center point) accordingly;

[0033] Step s5: The robot uses the corrected TCP to place the material accurately at the target position in the mold.
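The pose chain behind steps s1 to s5 can be sketched as follows. This is a minimal, hypothetical illustration, not the patented implementation: it assumes planar (x, y, θ) poses, a fixed camera whose pose in the robot base frame is already known from a prior hand-eye calibration, and that the reference photo and the current photo are taken with the robot flange at the same pose. The names `Pose2D`, `material_in_flange`, `pose_deviation`, `corrected_tcp` and `flange_command` are illustrative only. The correction keeps the original material-to-TCP offset: written as homogeneous transforms, T(flange, TCP_new) = T(flange, obj_cur) · T(flange, obj_ref)⁻¹ · T(flange, TCP).

```python
import math
from typing import NamedTuple


class Pose2D(NamedTuple):
    """Planar pose (assumption): translation x, y in mm, rotation theta in radians."""
    x: float
    y: float
    theta: float


def wrap(angle: float) -> float:
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Chain transforms a * b: frame b expressed in a's parent frame."""
    c, s = math.cos(a.theta), math.sin(a.theta)
    return Pose2D(a.x + c * b.x - s * b.y,
                  a.y + s * b.x + c * b.y,
                  wrap(a.theta + b.theta))


def inverse(a: Pose2D) -> Pose2D:
    """Inverse transform: (R, t)^-1 = (R^T, -R^T t)."""
    c, s = math.cos(a.theta), math.sin(a.theta)
    return Pose2D(-(c * a.x + s * a.y), s * a.x - c * a.y, -a.theta)


def material_in_flange(base_cam: Pose2D, base_flange: Pose2D,
                       cam_obj: Pose2D) -> Pose2D:
    """Step s4 (conversion): camera-frame material pose -> flange frame.

    flange_obj = inv(base_flange) * base_cam * cam_obj
    """
    return compose(inverse(base_flange), compose(base_cam, cam_obj))


def pose_deviation(obj_ref: Pose2D, obj_cur: Pose2D) -> Pose2D:
    """Step s1: current material pose expressed in the original material frame."""
    return compose(inverse(obj_ref), obj_cur)


def corrected_tcp(flange_tcp: Pose2D, flange_obj_ref: Pose2D,
                  flange_obj_cur: Pose2D) -> Pose2D:
    """Step s4 (correction): new TCP with the original material-to-TCP offset.

    flange_tcp_new = flange_obj_cur * inv(flange_obj_ref) * flange_tcp
    """
    return compose(flange_obj_cur, compose(inverse(flange_obj_ref), flange_tcp))


def flange_command(base_target: Pose2D, flange_tcp: Pose2D) -> Pose2D:
    """Step s5: flange pose that puts the given TCP on the taught target."""
    return compose(base_target, inverse(flange_tcp))


if __name__ == "__main__":
    # Hypothetical calibration and measurement values, for illustration only.
    base_cam = Pose2D(600.0, 200.0, math.pi)       # fixed camera in base frame
    base_flange = Pose2D(600.0, 200.0, 0.0)        # flange pose at photo time
    flange_tcp = Pose2D(0.0, 0.0, 0.0)             # originally taught TCP
    cam_obj_ref = Pose2D(0.0, 0.0, 0.0)            # step s3: original material
    cam_obj_cur = Pose2D(1.5, -0.8, math.radians(2.0))  # step s3: offset material

    ref = material_in_flange(base_cam, base_flange, cam_obj_ref)
    cur = material_in_flange(base_cam, base_flange, cam_obj_cur)
    tcp_new = corrected_tcp(flange_tcp, ref, cur)

    base_target = Pose2D(300.0, -150.0, math.radians(90.0))  # taught place pose
    placed = compose(flange_command(base_target, tcp_new), cur)
    expected = compose(base_target, compose(inverse(flange_tcp), ref))
    print("deviation:", pose_deviation(ref, cur))
    print("corrected TCP:", tcp_new)
    print("placed:", placed, "expected:", expected)
```

In this sketch the taught target pose is left unchanged and only the TCP is corrected, so an existing placing program could be reused as-is; the final check shows that the offset material lands where the original material would have landed with the original TCP.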

[0034] Preferably, step s5 includes the following steps: step s51: ob...
