An image gesture action online detection and recognition method based on deep learning

A technology combining gesture detection and deep learning, applied in the field of gesture recognition. It addresses problems such as stop image recognition and achieves accurate detection results.

Embodiment Construction

[0051] The present invention is further described below with reference to the drawings and embodiments.

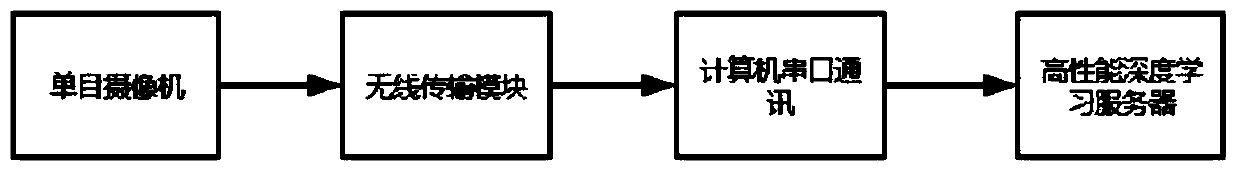

[0052] The device implementing the method of the present invention is shown in Figure 2. Video is captured by a monocular camera installed on a smart car or drone, and the video stream is transmitted to a server through a wireless transmission module. The server decodes the stream, inputs the decoded video into the trained neural network model, and transmits the results back to the smart car or drone.

[0053] The present invention first trains the deep learning model, then deploys the trained model on a high-performance deep learning server, where it processes unmodified video streams transmitted from clients such as smart cars or drones.
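The server-side flow described above (decode the incoming stream, run each decoded frame through the trained model, send the result back to the client) can be illustrated with a minimal Python sketch. It is not the patent's implementation: `decode_stream`, `serve`, `toy_model`, and `Result` are hypothetical names, the one-byte-per-pixel packet format is invented for illustration, and a brightness threshold stands in for the trained neural network.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List

Frame = List[float]  # stand-in for a decoded image (pixel values in [0, 1])


@dataclass
class Result:
    frame_index: int  # position of the frame in the stream
    label: str        # model output for that frame


def decode_stream(packets: Iterable[bytes]) -> Iterator[Frame]:
    """Hypothetical decoder: each packet is one frame, one byte per pixel."""
    for packet in packets:
        yield [b / 255.0 for b in packet]


def toy_model(frame: Frame) -> str:
    """Placeholder for the trained network: thresholds mean brightness."""
    if not frame:
        return "no_gesture"
    return "gesture" if sum(frame) / len(frame) > 0.5 else "no_gesture"


def serve(
    packets: Iterable[bytes],
    model: Callable[[Frame], str],
    send_back: Callable[[Result], None],
) -> int:
    """Decode the stream, run the model on every frame, return results.

    `send_back` stands in for the wireless link back to the car or drone.
    Returns the number of frames processed.
    """
    count = 0
    for index, frame in enumerate(decode_stream(packets)):
        send_back(Result(index, model(frame)))
        count += 1
    return count


if __name__ == "__main__":
    outbox: List[Result] = []
    stream = [bytes([0, 10, 20]), bytes([250, 240, 255])]
    processed = serve(stream, toy_model, outbox.append)
    print(processed, [r.label for r in outbox])
```

Passing the model and the return channel as callables keeps the loop independent of any particular network or transport, so a real decoder and a real trained model could be substituted without changing `serve`.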

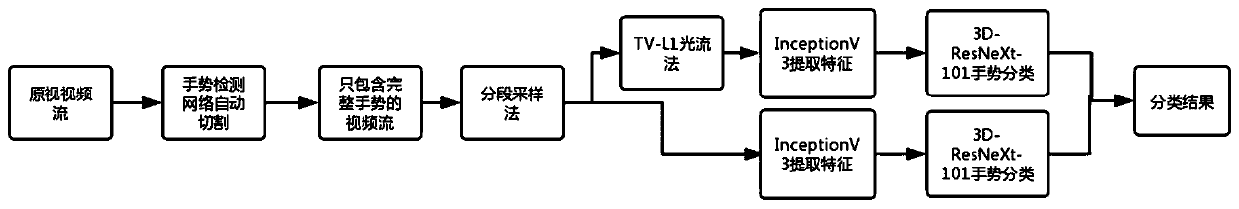

[0054] As shown in Figure 1, an embodiment of the method of the invention is as follows:

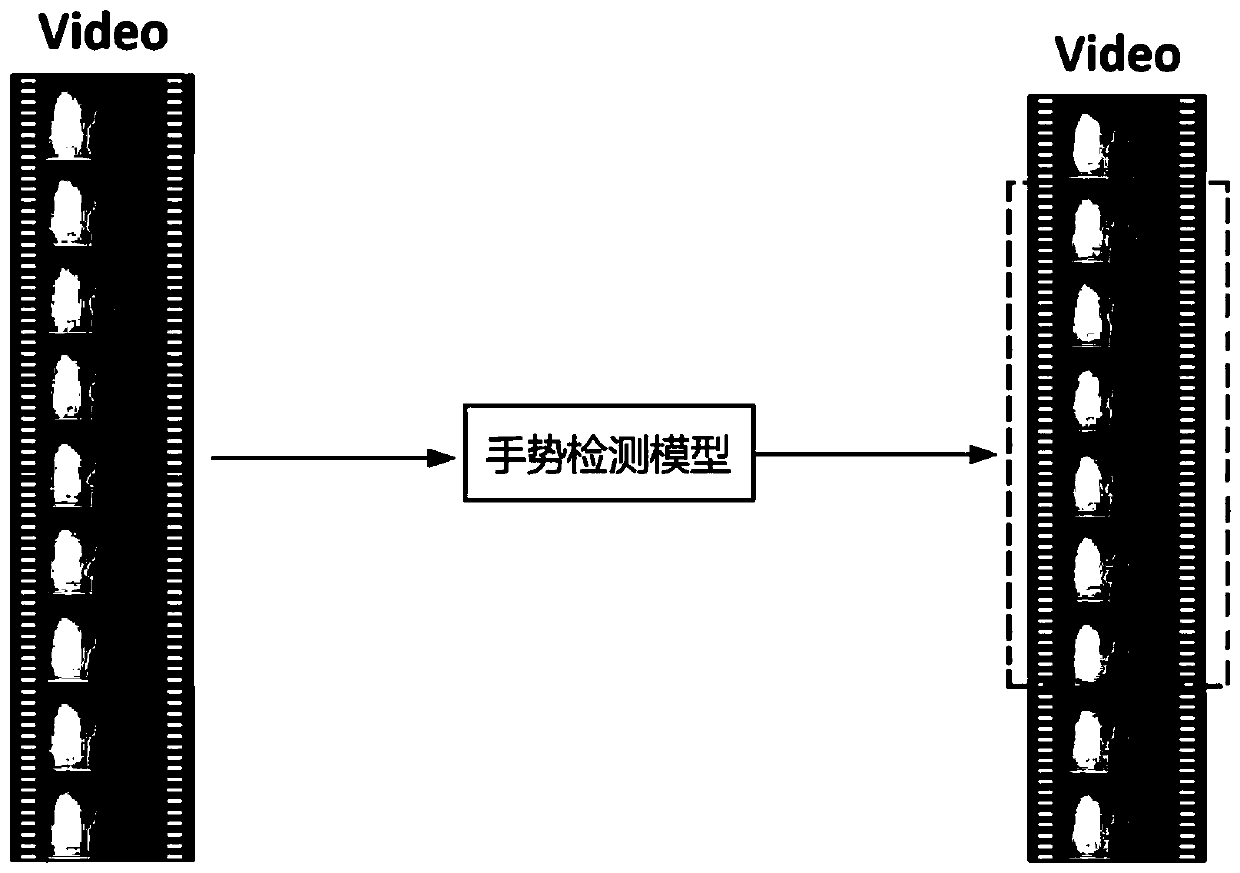

[0055] 1) Model training comes first. Model training is divided into a gesture detection model and a gesture recognitio...
