A convolutional neural network RGB-D saliency detection method based on multi-layer fusion

The method applies convolutional neural networks to the field of RGB-D saliency detection, with the stated effect of improving the detection results.

Examples

Embodiment 1

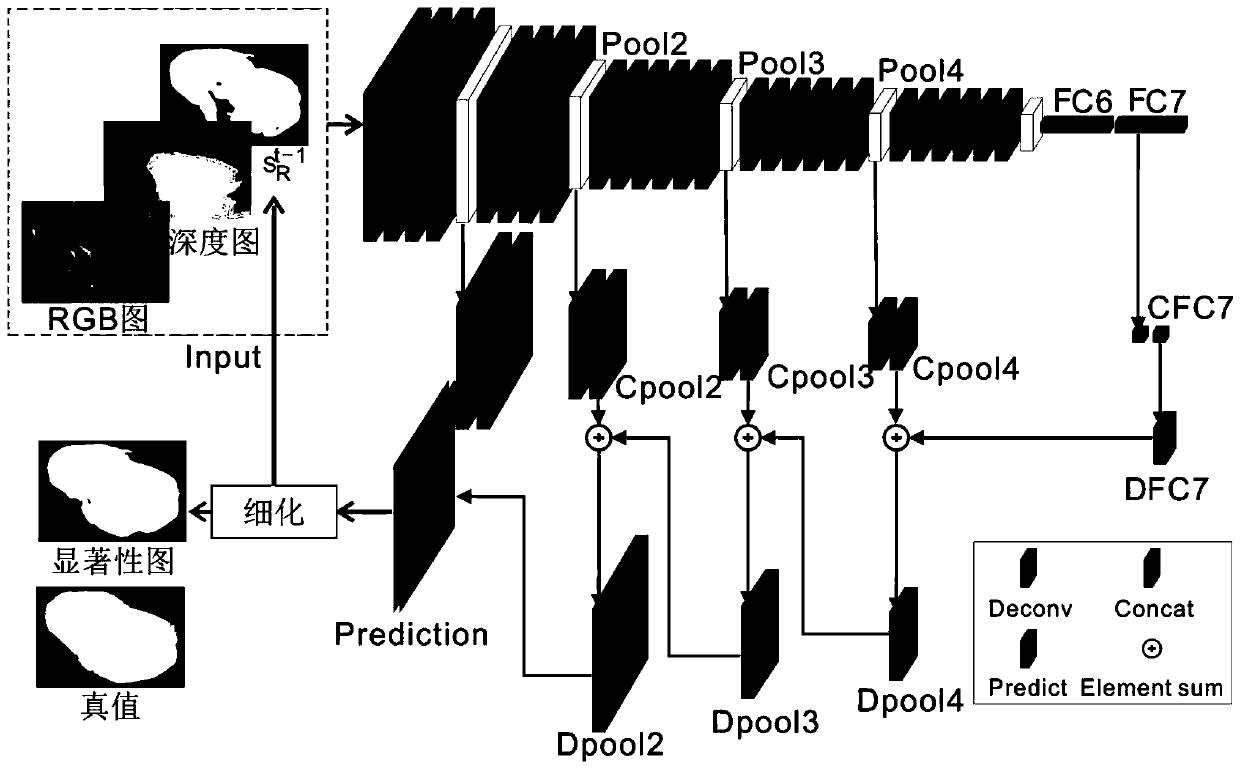

[0038] A convolutional neural network RGB-D saliency detection method based on multi-layer fusion is shown in Figure 1. The method includes the following steps:

[0039] 1. Iterative optimization of detected salient objects

[0040] The basic idea of RGB-D saliency detection in the embodiment of the present invention is to use a recurrent convolutional neural network to iteratively refine the detected salient objects, which is formalized as:

[0041] $S_t = \varphi(I, D, S_{t-1}; W) \qquad (1)$

[0042] Here, φ is the network model function, I is the RGB image, D is the depth map, S is the saliency detection result, t is the iteration index, and W denotes the network parameters.
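The recursion in equation (1) can be illustrated with a minimal sketch. The helper names `refine_saliency` and `toy_phi` are hypothetical and do not come from the patent; `toy_phi` is only a stand-in for the trained network φ, blending a simple brightness and nearness cue with the previous saliency map. The initial map S_0 is set to all zeros, following the description in Embodiment 3 that the first iteration uses an all-zero saliency map.

```python
from typing import Callable, Dict, List

Grid = List[List[float]]  # 2-D map with values in [0, 1]
Weights = Dict[str, float]


def refine_saliency(
    phi: Callable[[Grid, Grid, Grid, Weights], Grid],
    image: Grid,
    depth: Grid,
    weights: Weights,
    num_iters: int,
) -> List[Grid]:
    """Apply S_t = phi(I, D, S_{t-1}; W) for t = 1..num_iters and return every S_t."""
    height, width = len(image), len(image[0])
    saliency = [[0.0] * width for _ in range(height)]  # S_0: all-zero map
    history = []
    for _ in range(num_iters):
        saliency = phi(image, depth, saliency, weights)
        history.append(saliency)
    return history


def toy_phi(image: Grid, depth: Grid, prev: Grid, weights: Weights) -> Grid:
    """Hypothetical stand-in for the network: NOT the patented model.

    Assumes a single-channel image and treats bright, near pixels as salient.
    """
    mix = weights["mix"]
    return [
        [
            mix * prev[r][c]
            + (1.0 - mix) * 0.5 * (image[r][c] + (1.0 - depth[r][c]))
            for c in range(len(image[0]))
        ]
        for r in range(len(image))
    ]


if __name__ == "__main__":
    image = [[1.0, 0.0]]  # one bright pixel, one dark pixel
    depth = [[0.0, 1.0]]  # bright pixel is near, dark pixel is far
    for step, s in enumerate(refine_saliency(toy_phi, image, depth, {"mix": 0.5}, 3), 1):
        print(step, s)
```

In a real system φ would be the trained multi-layer fusion network and W its learned parameters; the loop structure is the only part this sketch is meant to convey.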

[0043] 2. Basic Network Architecture

[0044] Referring to Figure 1, the basic network architecture in the embodiment of the present invention is the same as the VGG16 network structure (the VGG16 network structure mainly includes 5 convolutional layer modules, CONV1-CONV5, and two fully connected layer mod...

Embodiment 2

[0064] The scheme in Embodiment 1 is further described below with reference to Figure 1 and a specific example:

[0065] In the embodiment of the present invention, when designing the network, it is necessary to consider how to effectively use the features of different scales of the convolutional neural network to capture salient objects of different scales in the image.

[0066] Specifically, the multi-layer fusion convolutional neural network designed in the embodiment of the present invention gradually fuses higher-level convolutional features into lower-level convolutional features, and finally generates a saliency map with the same resolution as the input image, namely:

[0067] 1) First, apply a 3×3 convolution with 60 output channels to the FC7, pool4, pool3, and pool2 layers to reduce their dimensionality;

[0068] Through the above operations, the number of channels of the features of the corresponding...
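The dimensionality reduction in step 1) can be sketched as follows. This is an illustration under assumptions, not the patent's implementation: the function names are hypothetical, zero padding of 1 is assumed so that the spatial size is preserved, and constant kernel values stand in for learned weights. It only shows that a 3×3 convolution with 60 output channels maps a feature map with any number of input channels to 60 channels.

```python
from typing import List

Feature = List[List[List[float]]]  # [channels][height][width]
Kernels = List[List[List[List[float]]]]  # [out_channels][in_channels][3][3]


def conv3x3_same(x: Feature, kernels: Kernels, biases: List[float]) -> Feature:
    """3x3 convolution, stride 1, zero padding 1 (padding is an assumption)."""
    in_ch, height, width = len(x), len(x[0]), len(x[0][0])
    out = []
    for kernel, bias in zip(kernels, biases):
        plane = [[bias] * width for _ in range(height)]
        for c in range(in_ch):
            for r in range(height):
                for col in range(width):
                    acc = 0.0
                    for dr in (-1, 0, 1):
                        for dc in (-1, 0, 1):
                            rr, cc = r + dr, col + dc
                            # Positions outside the map count as zero.
                            if 0 <= rr < height and 0 <= cc < width:
                                acc += kernel[c][dr + 1][dc + 1] * x[c][rr][cc]
                    plane[r][col] += acc
        out.append(plane)
    return out


def reduce_channels(x: Feature, out_channels: int = 60, fill: float = 0.01) -> Feature:
    """Reduce x to `out_channels` channels; `fill` is a placeholder weight value."""
    kernels = [
        [[[fill] * 3 for _ in range(3)] for _ in range(len(x))]
        for _ in range(out_channels)
    ]
    return conv3x3_same(x, kernels, [0.0] * out_channels)


if __name__ == "__main__":
    # Small stand-in feature map: 8 channels of 4x4 ones (real layers are far larger).
    feature = [[[1.0] * 4 for _ in range(4)] for _ in range(8)]
    reduced = reduce_channels(feature)
    print(len(reduced), len(reduced[0]), len(reduced[0][0]))
```

Applying the same reduction to each of the listed layers would give every branch the same channel count, which is what makes it straightforward to combine features from different levels afterwards.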

Embodiment 3

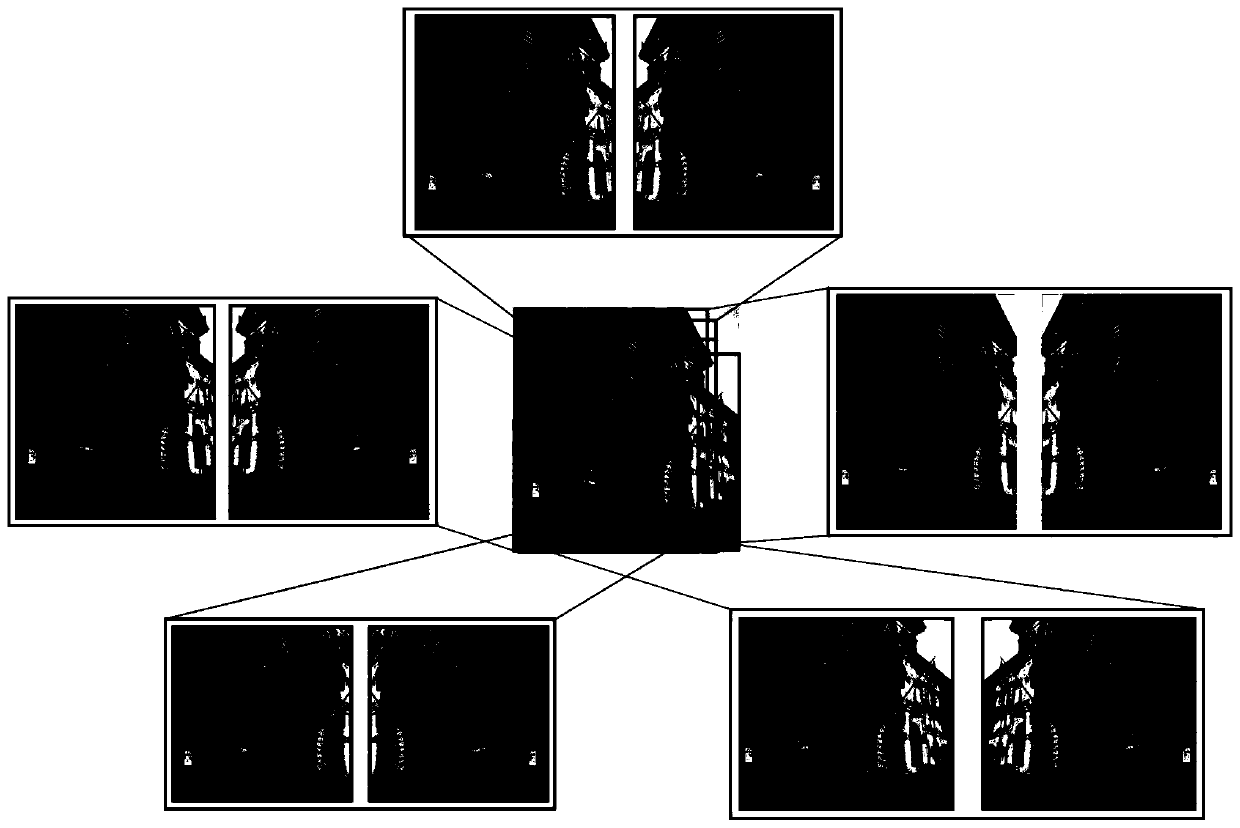

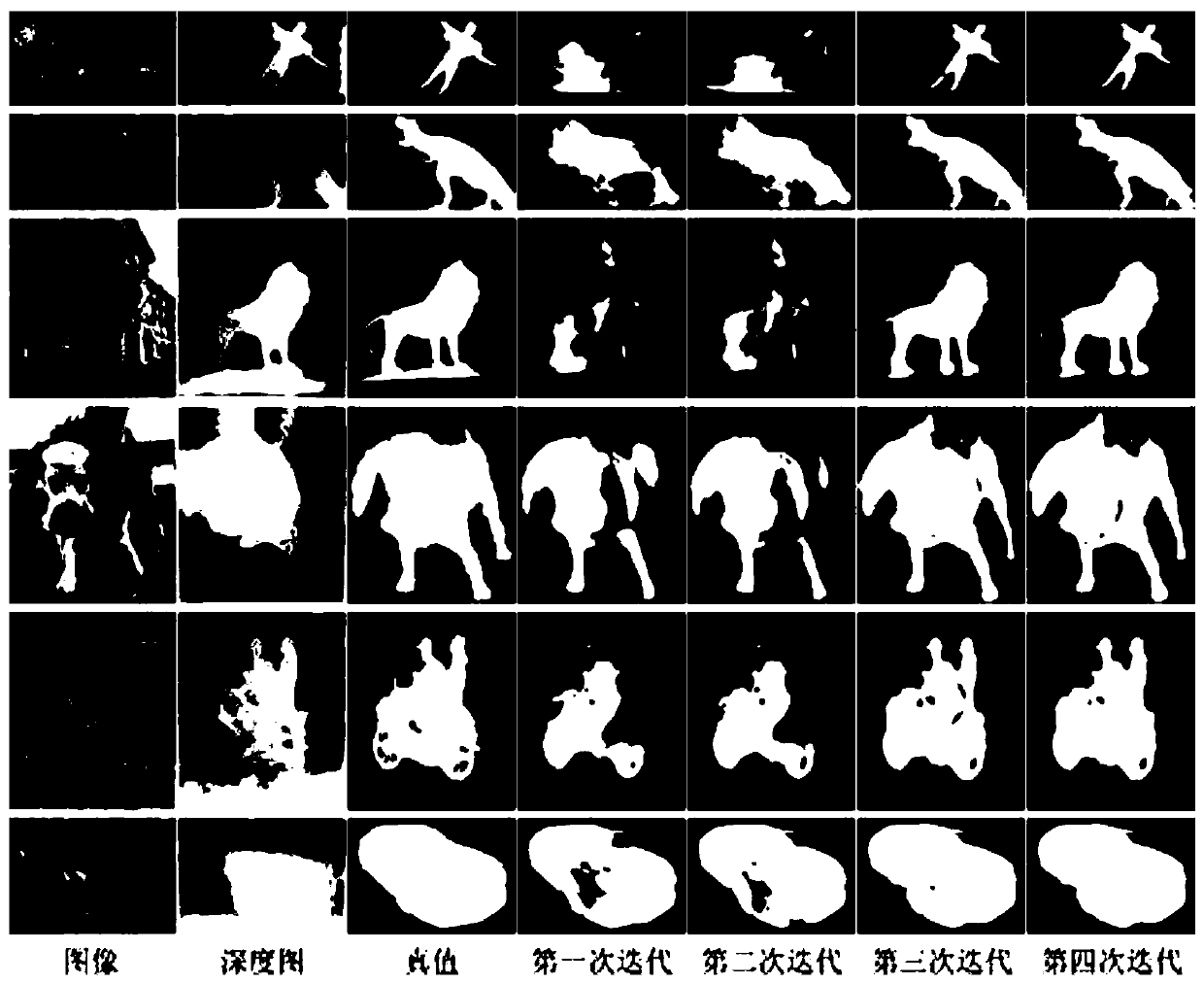

[0085] The feasibility of the schemes in Embodiments 1 and 2 is verified below with reference to Figures 3 and 4:

[0086] The network in the embodiment of the present invention is built according to the network structure shown in Figure 1. The RGB and RGB-D image data are augmented to generate the corresponding training data sets, and the network is trained. The obtained saliency map is then refined, and the network is fine-tuned after refinement.

[0087] From Figure 3 it can be seen that, after the different training stages, the saliency results detected by the embodiments of the present invention improve markedly. The result of the first iteration is obtained by training with an all-zero saliency map and an all-zero depth map. This result is incomplete, and the correct salient object cannot be obtained in the first and third rows of images. After fine-tuning the network using the depth m...
