Kinect v2-based real-time three-dimensional reconstruction method for complete objects

A real-time, complete three-dimensional reconstruction technique in the field of computer vision. It addresses three problems: the large computational load, the difficulty of guaranteeing real-time performance and accuracy at the same time, and the high price of three-dimensional scanning equipment. It also improves the reconstruction result.


Examples

Embodiment 1

[0064] In the first group, we used a blue disc with a frosted surface. The depth map shows that the edge of the disc is surrounded by small black holes. When the three-dimensional plane was generated by fusion, its surface was rough rather than smooth, reflecting the influence of structural noise. The second group used a smooth, white, reflective disc. Its depth map shows a black hole in the upper area, which coincides with the region where indoor light is reflected off the disc; as a result, that part of the three-dimensional plane is missing after fusion. In the third group, we used a matte black disc. Fine black dots appear over the disc in the corresponding depth map, and the fused three-dimensional model shows that the plane fuses poorly and that the depth loss is severe.
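The holes described above can be quantified before fusion. The following is a minimal sketch, not the authors' procedure. It assumes that a Kinect v2 depth frame arrives as a 424×512 array of `uint16` millimetre values in which 0 marks a pixel with no depth reading. The function names `hole_mask` and `hole_ratio`, the optional validity limits `min_mm`/`max_mm`, and the disc mask `roi` are hypothetical choices made for illustration.

```python
import numpy as np

# Assumption: Kinect v2 depth frames are 424 rows x 512 columns of uint16
# millimetre values, with 0 meaning "no depth measured" (a black hole in the map).
KINECT_V2_DEPTH_SHAPE = (424, 512)


def hole_mask(depth_mm, min_mm=None, max_mm=None):
    """Boolean mask of unusable pixels: zero depth, optionally out of range.

    min_mm / max_mm are hypothetical validity limits chosen by the user.
    """
    d = np.asarray(depth_mm)
    holes = d == 0
    if min_mm is not None:
        holes |= d < min_mm
    if max_mm is not None:
        holes |= d > max_mm
    return holes


def hole_ratio(depth_mm, roi=None, **limits):
    """Fraction of unusable pixels, optionally restricted to a boolean ROI (e.g. the disc)."""
    holes = hole_mask(depth_mm, **limits)
    if roi is not None:
        holes = holes[roi]  # keep only pixels inside the region of interest
    return float(holes.mean())


if __name__ == "__main__":
    frame = np.full(KINECT_V2_DEPTH_SHAPE, 1000, dtype=np.uint16)  # flat surface at 1 m
    frame[5:15, 5:15] = 0                                        # simulated 10x10 hole
    disc = np.zeros(KINECT_V2_DEPTH_SHAPE, dtype=bool)
    disc[0:20, 0:20] = True                                      # hypothetical disc ROI
    print(hole_ratio(frame))             # hole fraction over the whole frame
    print(hole_ratio(frame, roi=disc))   # 100 / 400 = 0.25
```

You can compute `hole_ratio` inside a disc mask for the frosted, glossy and matte discs to turn the visual comparison above into numbers. Fusion pipelines typically skip zero-depth pixels. This is consistent with the missing plane in the second group, where the reflective region never contributes depth.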

[0065] The above experiments show that, due to the influence of the Kinect2.0 device itself and the acquisition env...

Embodiment 2

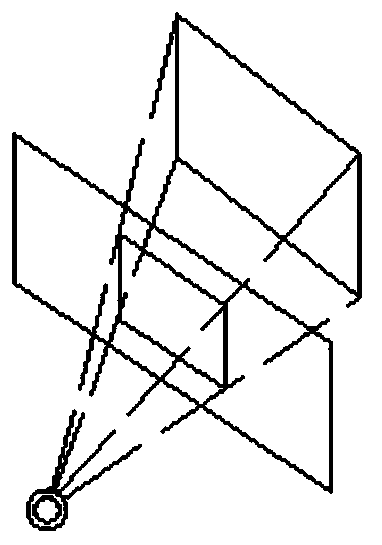

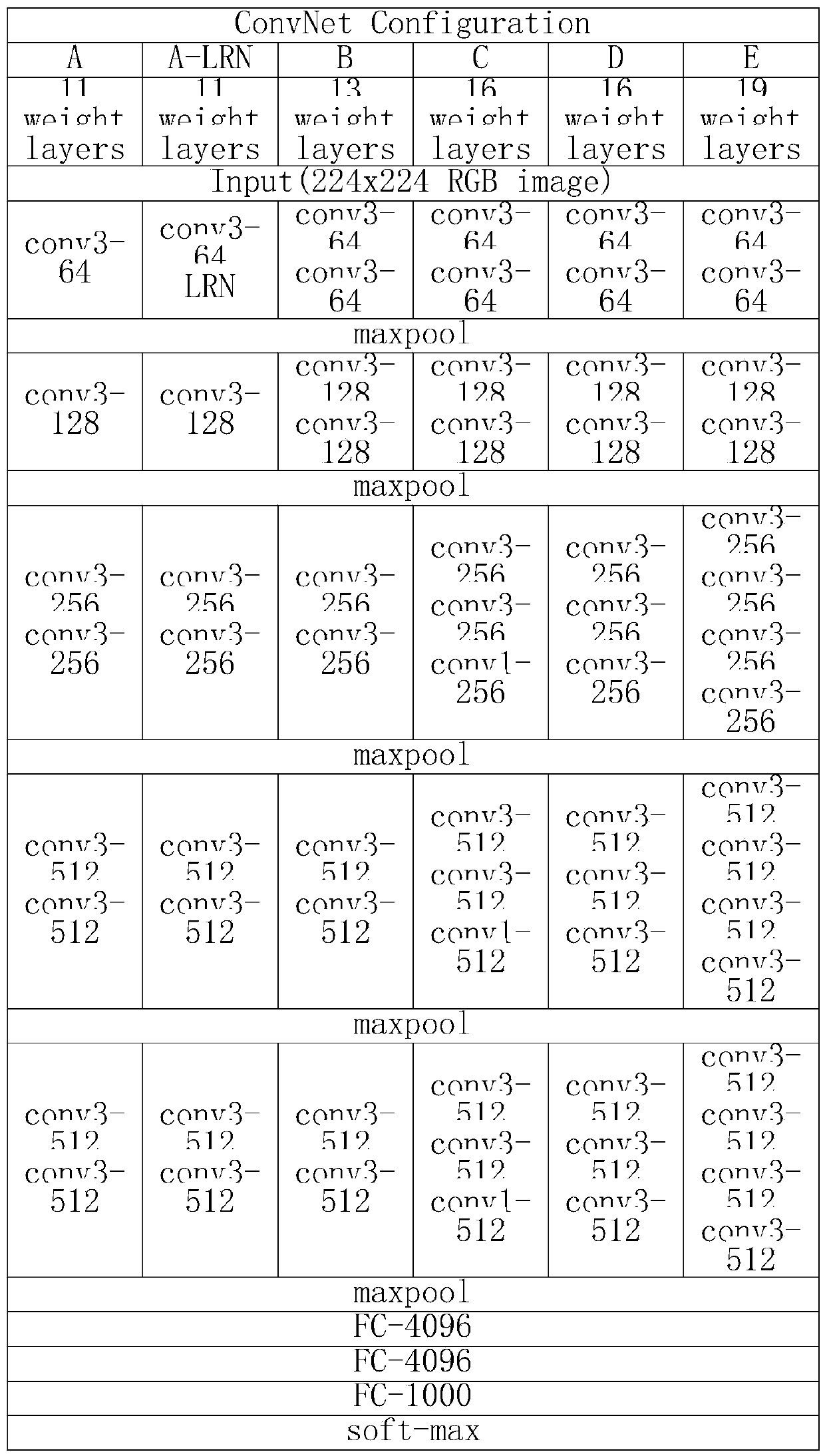

[0073] Depth Completion Network Design

[0074] Kinect2.0 is usually unable to perceive depth in bright or transparent regions and on distant object surfaces. When the measured object or the environment is too complex, filtering the acquired depth image to remove noise has limited effect. Filtering also cannot repair the missing regions that appear in most depth images. Traditional inpainting algorithms have reached a bottleneck, while the high-resolution color images captured by Kinect2.0 contain rich detail. We therefore turn to deep learning, aiming to train on a large number of data samples a network that can predict and repair depth images. To this end, we introduce a method for constructing a database from existing data. We also design a deep network that can be trained and evaluated end-to-end on color and depth images, predicting local differential properties of the color image and combining them with the original data col...
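The visible text does not say which "local differential properties" the network predicts. Per-pixel surface normals are one common example of such a property. The sketch below illustrates that idea and is not the authors' network or training code. It shows how normals could be derived from a depth map, for instance as supervision targets where depth is valid. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`. The values in the demo are hypothetical placeholders, not calibrated Kinect2.0 parameters, and the function names are illustrative.

```python
import numpy as np


def backproject(depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of a depth map (metres) to camera-space 3D points."""
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.stack([x, y, depth_m], axis=-1)       # shape (h, w, 3)


def normals_from_depth(depth_m, fx, fy, cx, cy):
    """Unit normals from forward differences of back-projected points.

    A pixel is valid only if it and its right and lower neighbours all have
    depth > 0, so holes never produce spurious normals. Normals are oriented
    towards the camera (negative z for a fronto-parallel plane).
    """
    pts = backproject(depth_m, fx, fy, cx, cy)
    du = np.zeros_like(pts)
    dv = np.zeros_like(pts)
    du[:, :-1] = pts[:, 1:] - pts[:, :-1]   # tangent along image columns
    dv[:-1, :] = pts[1:, :] - pts[:-1, :]   # tangent along image rows
    n = np.cross(dv, du)

    valid = np.zeros(depth_m.shape, dtype=bool)
    valid[:-1, :-1] = (
        (depth_m[:-1, :-1] > 0) & (depth_m[:-1, 1:] > 0) & (depth_m[1:, :-1] > 0)
    )
    length = np.linalg.norm(n, axis=-1, keepdims=True)
    n = np.where(valid[..., None] & (length > 0), n / np.maximum(length, 1e-12), 0.0)
    return n, valid


if __name__ == "__main__":
    depth = np.ones((4, 5))        # flat wall 1 m in front of the camera
    depth[1, 2] = 0.0              # one missing pixel
    normals, ok = normals_from_depth(depth, 365.0, 365.0, 2.0, 1.5)  # placeholder intrinsics
    print(ok.astype(int))
    print(normals[0, 0])           # approximately [0, 0, -1]
```

Forward differences keep the sketch short. A real pipeline would more likely use a smoothed or neighbourhood-fitted estimate, because raw Kinect depth noise makes single-pixel differences noisy.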


© 2025 PatSnap. All rights reserved.