Dynamic domain adaptive method and device, and computer-readable storage medium

A domain adaptive technique, applied in the field of convolutional neural networks, that aims to improve accuracy, transfer learning, and convergence speed.

Examples

Embodiment 1

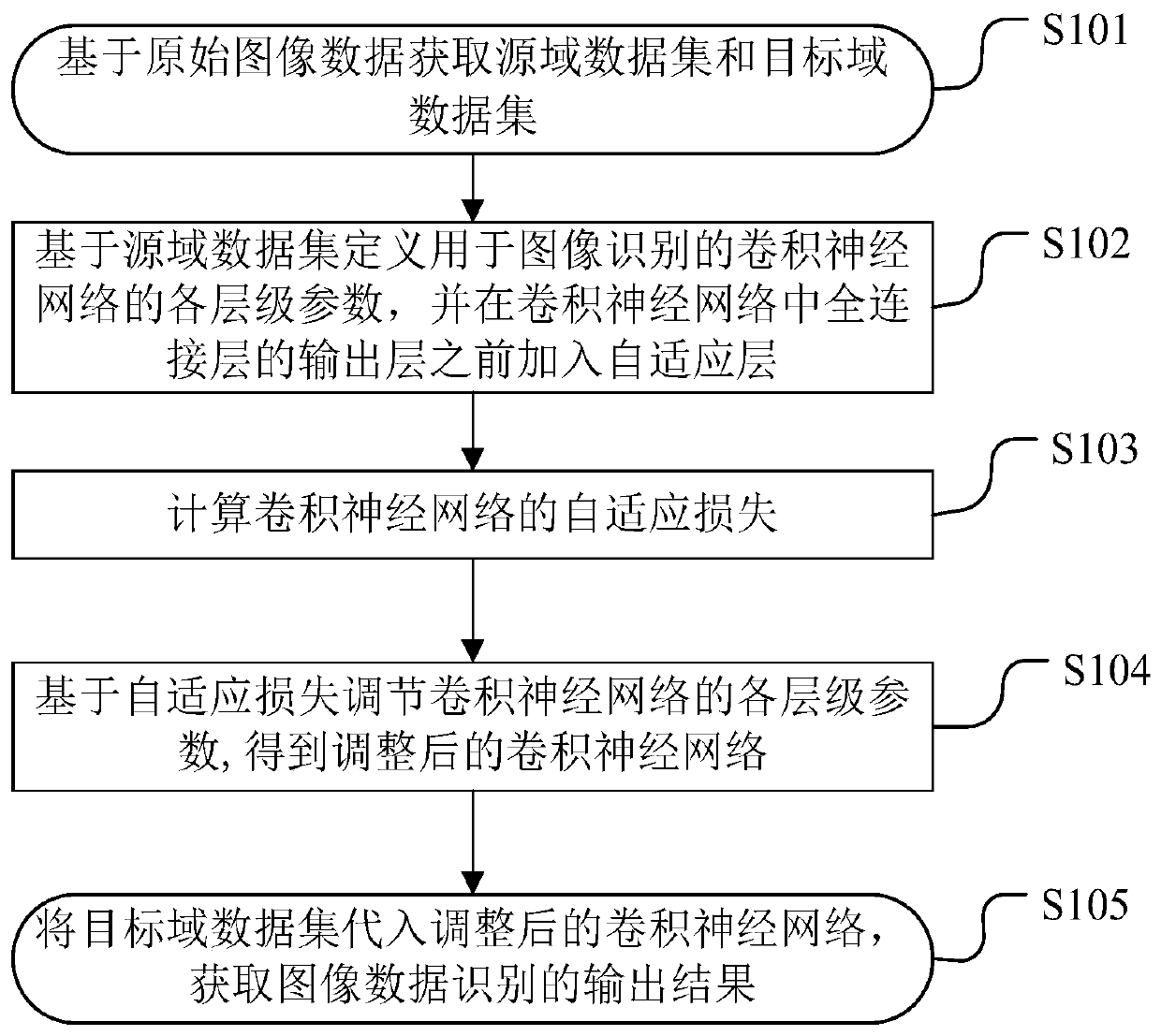

[0052] As shown in Figure 1, this embodiment of the present invention provides a dynamic domain adaptive method that includes the following steps:

[0053] S101. Obtain a source domain dataset and a target domain dataset based on the original image data;

[0054] S102. Define the parameters of each layer of a convolutional neural network for image recognition based on the source domain dataset, and add an adaptive layer before the output layer of the fully connected layers in the convolutional neural network;

[0055] S103. Calculate the adaptive loss $L_{MMD}$ of the convolutional neural network;

[0056] S104. Adjust the parameters of each layer of the convolutional neural network based on the adaptive loss $L_{MMD}$ to obtain the adjusted convolutional neural network;

[0057] S105. Feed the target domain dataset into the adjusted convolutional neural network to obtain the output result of image data recognition.
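As a rough illustration of the adaptive loss in S103, the sketch below assumes that MMD denotes maximum mean discrepancy and uses its simplest linear-kernel form: the squared distance between the mean feature vector of a source batch and that of a target batch. This excerpt does not state which kernel or estimator the patent uses, so the function names `mean_vector` and `linear_mmd_loss` and the choice of a linear kernel are assumptions made for illustration only.

```python
from typing import List, Sequence


def mean_vector(features: Sequence[Sequence[float]]) -> List[float]:
    """Return the element-wise mean of a batch of feature vectors."""
    n = len(features)
    dim = len(features[0])
    return [sum(row[j] for row in features) / n for j in range(dim)]


def linear_mmd_loss(
    source_feats: Sequence[Sequence[float]],
    target_feats: Sequence[Sequence[float]],
) -> float:
    """Squared distance between the mean source and mean target features.

    Assumption: a linear-kernel MMD estimate. The features would be the
    outputs of the adaptive layer for a source batch and a target batch.
    """
    mu_s = mean_vector(source_feats)
    mu_t = mean_vector(target_feats)
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))
```

A value of zero means the two batches have identical mean features; larger values indicate a larger gap between the source and target feature distributions, which is what the parameter adjustment in S104 would try to reduce.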

[0058] Understandably, for image recognition research, ...

Embodiment 2

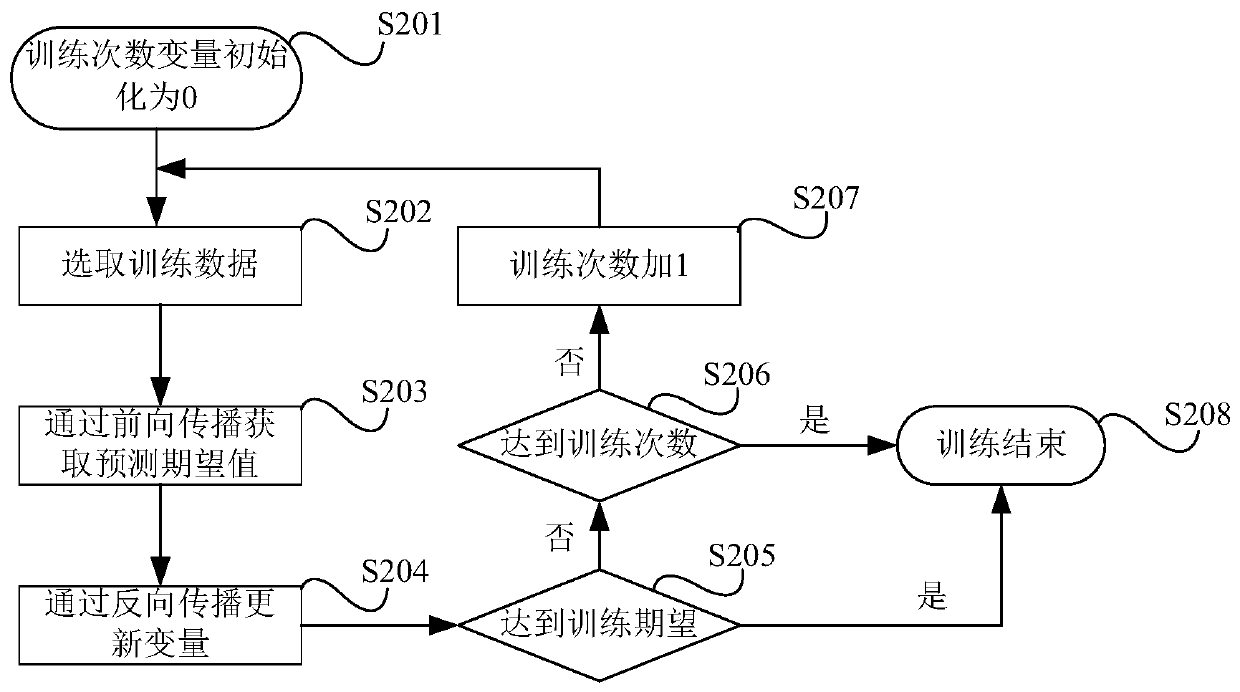

[0095] Building on Embodiment 1, Embodiment 2 of the present invention provides a method flow for training a neural network with the backpropagation algorithm. As shown in Figure 2, the flow includes the following steps:

[0096] S201. Initialize the training iteration counter to 0;

[0097] S202. Select a subset of the training data in the source domain dataset, that is, a batch;

[0098] S203. Obtain the predicted output through forward propagation;

[0099] S204. Calculate the loss, and update the parameters of the neural network through the backpropagation algorithm;

[0100] S205. Determine whether the training expectation has been met; if so, jump to S208; if not, jump to S206;

[0101] S206. Determine whether the preset number of training iterations has been reached; if so, jump to S208; if not, jump to S207;

[0102] S207. Increment the training iteration counter by 1, and jump to S202;

[0103] ...
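The control flow of S201 to S207 can be sketched as a plain training loop. This is only a minimal illustration under stated assumptions: a one-parameter linear model trained by gradient descent stands in for the convolutional neural network, the "training expectation" is taken to be a loss threshold, and a failed S205 check is assumed to fall through to the iteration-limit check in S206. S208 is not described in this excerpt, so the sketch simply treats it as the point where the loop exits. The names `train`, `target_loss`, and `max_iters` are hypothetical.

```python
import random
from typing import List, Tuple


def train(
    data: List[Tuple[float, float]],
    batch_size: int = 4,
    max_iters: int = 1000,
    target_loss: float = 1e-4,
    lr: float = 0.01,
    seed: int = 0,
) -> Tuple[float, int, float]:
    """Fit y = w * x with mini-batch gradient descent, following S201-S207."""
    rng = random.Random(seed)
    w = 0.0    # single trainable parameter (stand-in for the network weights)
    iters = 0  # S201: initialize the training iteration counter
    while True:
        batch = rng.sample(data, batch_size)   # S202: select a batch
        preds = [w * x for x, _ in batch]      # S203: forward propagation
        # S204: mean squared error and its gradient with respect to w
        loss = sum((p - y) ** 2 for p, (_, y) in zip(preds, batch)) / batch_size
        grad = sum(2 * (p - y) * x for p, (x, y) in zip(preds, batch)) / batch_size
        w -= lr * grad                         # S204: parameter update
        if loss <= target_loss:                # S205: expectation met, exit
            break
        if iters >= max_iters:                 # S206: iteration limit reached
            break
        iters += 1                             # S207: increment and repeat
    return w, iters, loss
```

For example, calling `train([(float(x), 2.0 * x) for x in range(1, 9)])` drives `w` toward 2 and exits through the S205 branch well before the iteration limit, while a negative `target_loss` forces the exit through the S206 branch instead.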

Embodiment 3

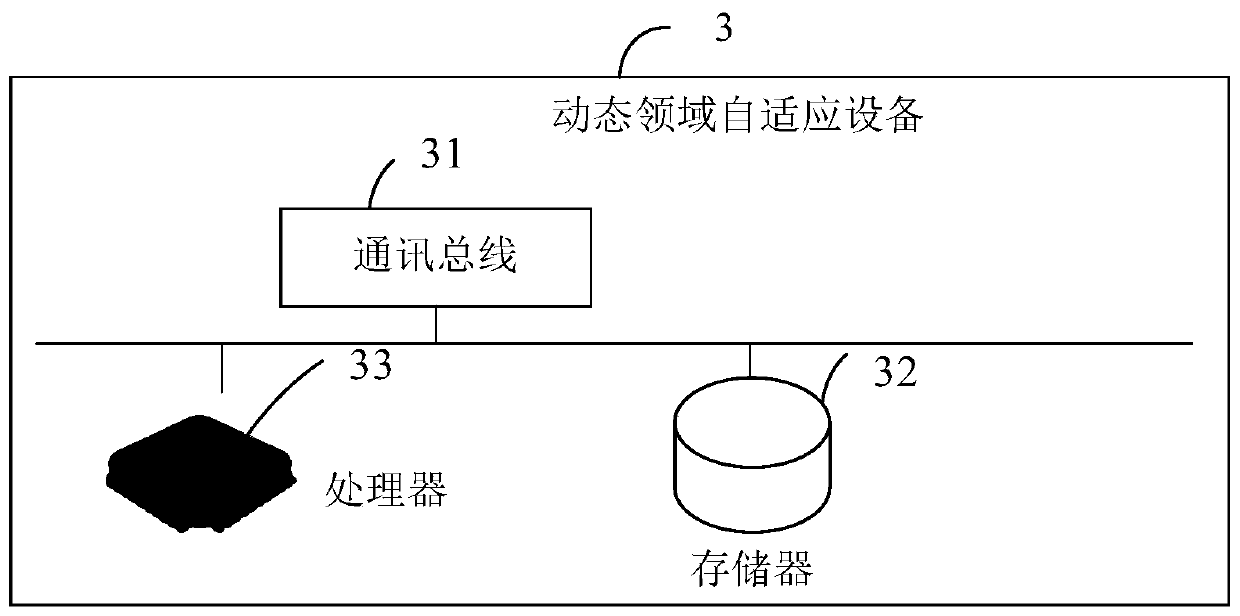

[0106] Figure 3 shows the specific hardware structure of a dynamic domain adaptive device provided by Embodiment 3 of the present invention. The dynamic domain adaptive device 3 may include a memory 32 and a processor 33, with the components coupled together through a communication bus 31. The communication bus 31 implements connection and communication between these components. In addition to a data bus, the communication bus 31 also includes a power bus, a control bus, and a status signal bus. For clarity, however, the various buses are all denoted as communication bus 31 in Figure 3.

[0107] The memory 32 is used to store the dynamic domain adaptive method program capable of running on the processor 33;

[0108] The processor 33 is configured to perform the following steps when running the dynamic domain adaptive method program:

[0109] Obtain a source domain dataset and a target domain dataset based on the original image data;

...
