Neural network structured sparse method based on incremental regularization

A technique in the field of neural network structures and models, applicable to neural learning methods, biological neural network models, neural architectures, and related areas. It addresses the weak expressiveness and performance loss of sparsified CNNs, and achieves a clear effect, great flexibility, and good pruning results.


Detailed Description of Embodiments

[0029] The present invention is described in further detail below in conjunction with an implementation example. However, the structured column sparsification algorithm for neural networks proposed by the present invention is not limited to this implementation.

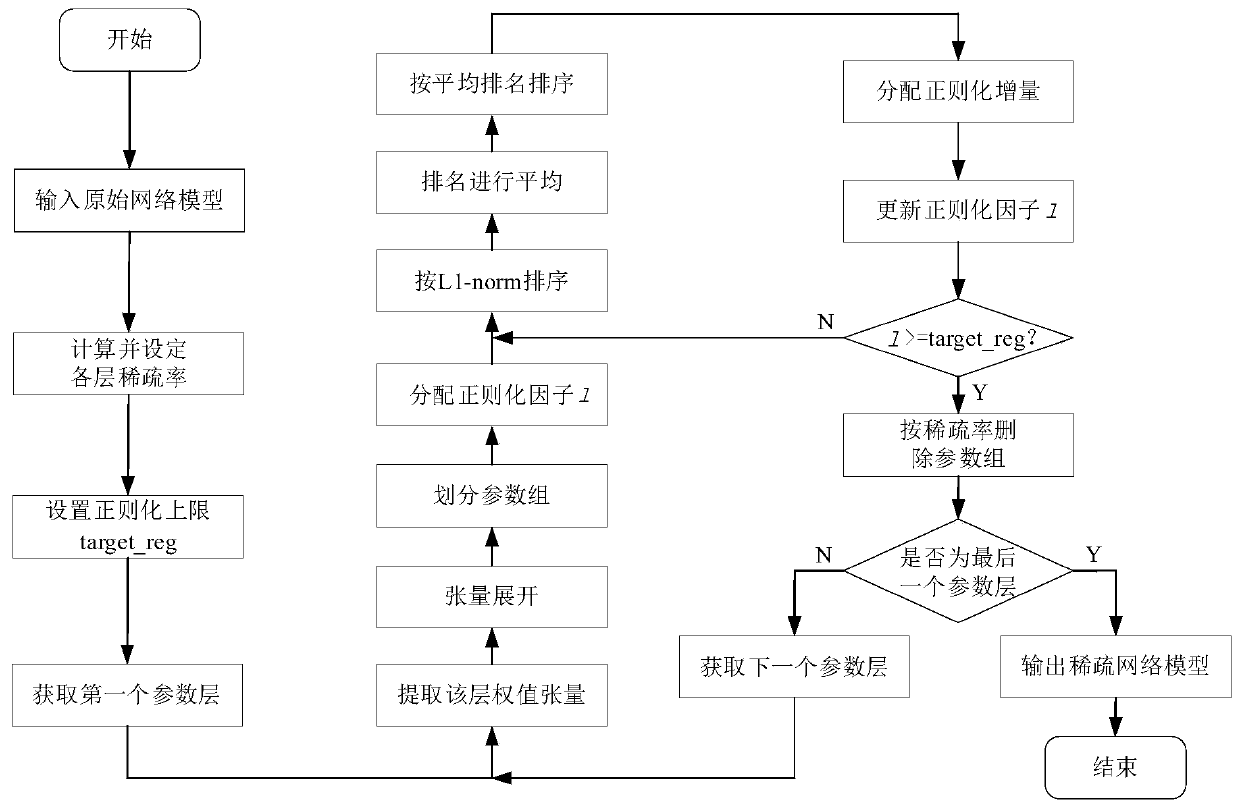

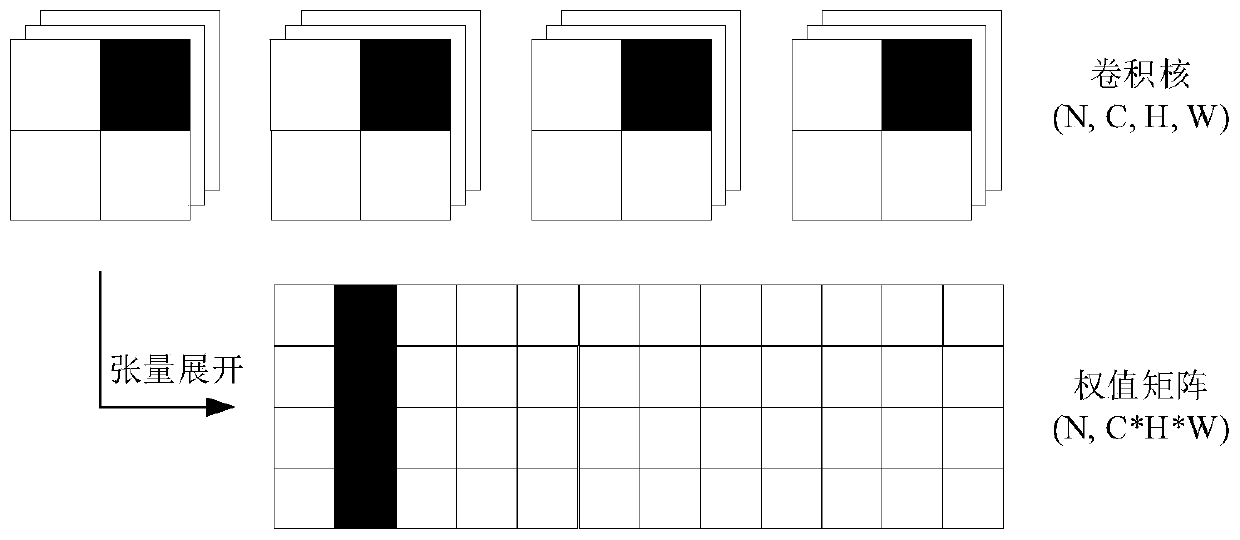

[0030] The present invention provides a structured sparsification method for neural networks based on incremental regularization. The overall sparsification process for a network model is shown in Figure 1.
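As a rough illustration of what structured column sparsity driven by incremental regularization can look like, the following is a minimal NumPy sketch under stated assumptions. It views a convolution weight as the GEMM matrix used by im2col-style implementations, gives each column its own L2 regularization factor, and raises that factor step by step for the columns ranked least important. The ranking criterion (L1 norm), the fixed increment `delta`, the cap `lam_max`, the target `prune_ratio`, and all function names are hypothetical choices for this sketch, not the update rule of the present invention; the toy loop also omits the data loss that real training would optimize.

```python
import numpy as np

def column_view(weight):
    """Reshape a conv weight (out_c, in_c, kh, kw) into its GEMM matrix
    (out_c, in_c*kh*kw). Each column holds one (input channel, kh, kw)
    position across all filters; column sparsity zeroes whole columns."""
    return weight.reshape(weight.shape[0], -1)

def update_reg_factors(weight, lambdas, prune_ratio, delta=1e-3, lam_max=1.0):
    """Hypothetical incremental rule (an assumption, not the patented schedule):
    the prune_ratio fraction of columns with the smallest L1 norm get their L2
    factor raised by delta; all other factors are lowered by delta.
    Factors are kept in [0, lam_max]."""
    norms = np.abs(column_view(weight)).sum(axis=0)
    order = np.argsort(norms)                  # ascending: least important first
    n_prune = int(round(prune_ratio * norms.size))
    step = np.full(norms.size, -delta)
    step[order[:n_prune]] = delta
    return np.clip(lambdas + step, 0.0, lam_max)

def reg_gradient(weight, lambdas):
    """Gradient of sum_g (lambda_g / 2) * ||W[:, g]||_2^2 with respect to weight."""
    grad = column_view(weight) * lambdas[np.newaxis, :]
    return grad.reshape(weight.shape)

def prune_columns(weight, tol=1e-6):
    """Zero every column whose L1 norm fell below tol; return weight and keep-mask."""
    w = column_view(weight).copy()
    keep = np.abs(w).sum(axis=0) > tol
    w[:, ~keep] = 0.0
    return w.reshape(weight.shape), keep

# Toy run: only the regularization term is optimized (no data loss), so the
# effect of the gradually growing per-column factors is easy to see.
rng = np.random.default_rng(0)
w0 = rng.normal(size=(4, 2, 3, 3))             # 4 filters, 2 input channels, 3x3
w = w0.copy()
lambdas = np.zeros(2 * 3 * 3)                  # one factor per column (18 columns)
lr = 0.1
for _ in range(2000):
    lambdas = update_reg_factors(w, lambdas, prune_ratio=0.5)
    w -= lr * reg_gradient(w, lambdas)

w_pruned, keep = prune_columns(w)
print(f"kept {keep.sum()} of {keep.size} columns")  # prints: kept 9 of 18 columns
```

Because each factor grows gradually instead of being set to a large value at once, the targeted columns are pushed toward zero smoothly. In real training the data loss would compete with this pressure, and the final `prune_columns` step would remove only the columns the regularization has already driven close to zero.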

[0031] (1) Preparation work

[0032] For the neural network model to be sparsified, prepare the training dataset, the network structure configuration file, and the training process configuration file. The dataset, network structure configuration, and training process configuration are all the same as those used by the original training method. In the structured column sparsification experiment on ResNet-50, the dataset is ImageNet-2012, and the network structure configuration and other files are those used by the original...
