Beidou-vision fusion precise lane identification and positioning method and implementation device thereof

A lane identification and positioning method applied in the field of intelligent transportation. It addresses the limitations of existing approaches, which handle only a single type of dedicated lane and cannot achieve precise lane identification and positioning.

Examples

Embodiment 1

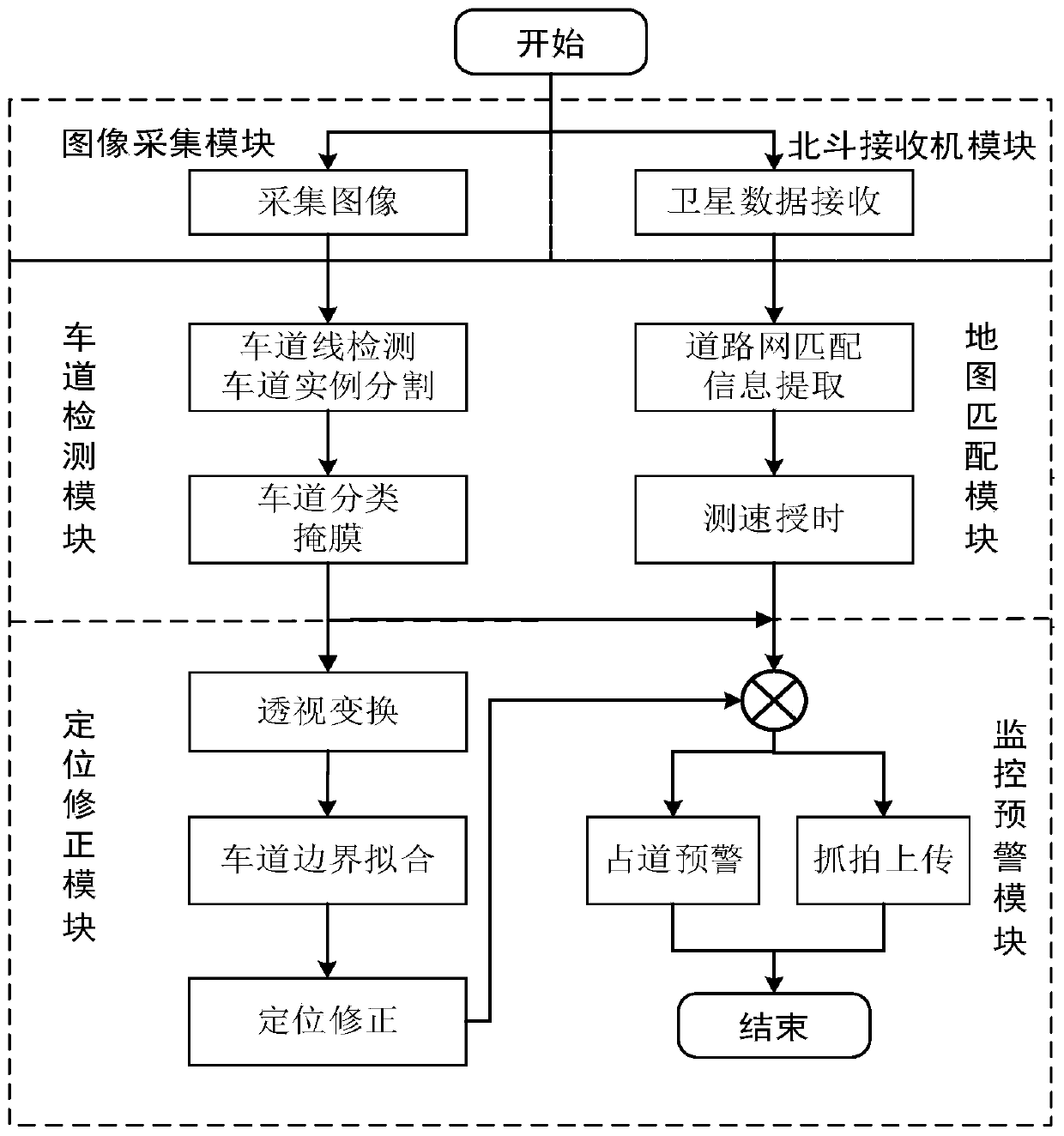

[0120] A Beidou-vision fusion precise lane identification and positioning method, as shown in Figure 1, includes the following steps:

[0121] (1) Lane detection, including:

[0122] Step A1: The image acquisition device photographs the road ahead and stores the pictures. As shown in Figure 4, the abscissa is the width of the picture and the ordinate is the height of the picture. The image acquisition device is a driving recorder or a 120° wide-angle camera, installed on the center line of the front windshield, directly in front of the rearview mirror, at an included angle of 20°. While the vehicle drives on a road with lane markings, the image acquisition device continuously captures images of the road ahead and stores them on the video storage SD card.

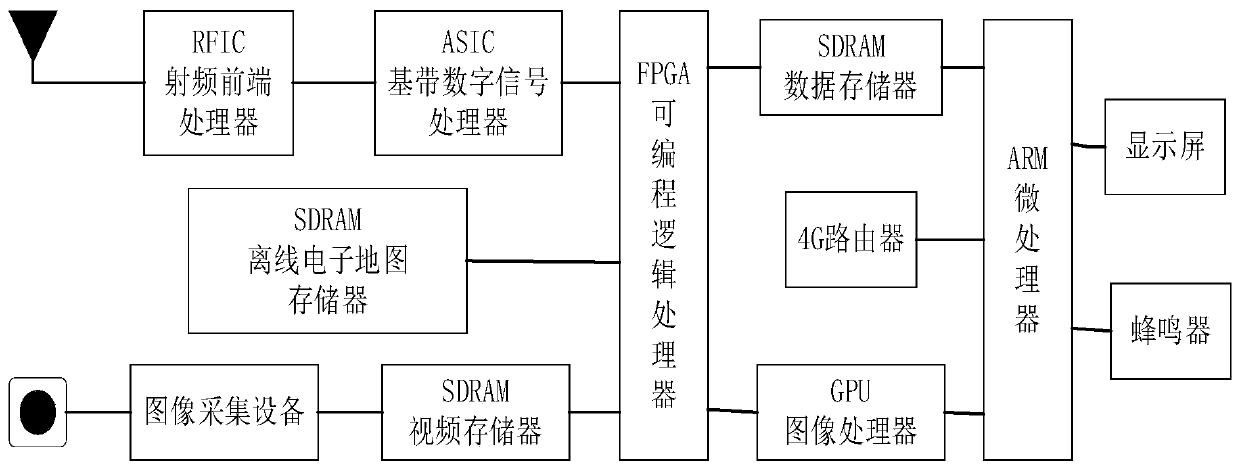

[0123] Step A2: Extract road features from the road pictures, detect and recognize lane lines, and divide lanes; use a programmable logic processor (FPGA) to read the video in the video memory...

Embodiment 2

[0137] According to the Beidou vision fusion precise lane identification and positioning method described in Embodiment 1, the difference is that:

[0138] Step A2, including:

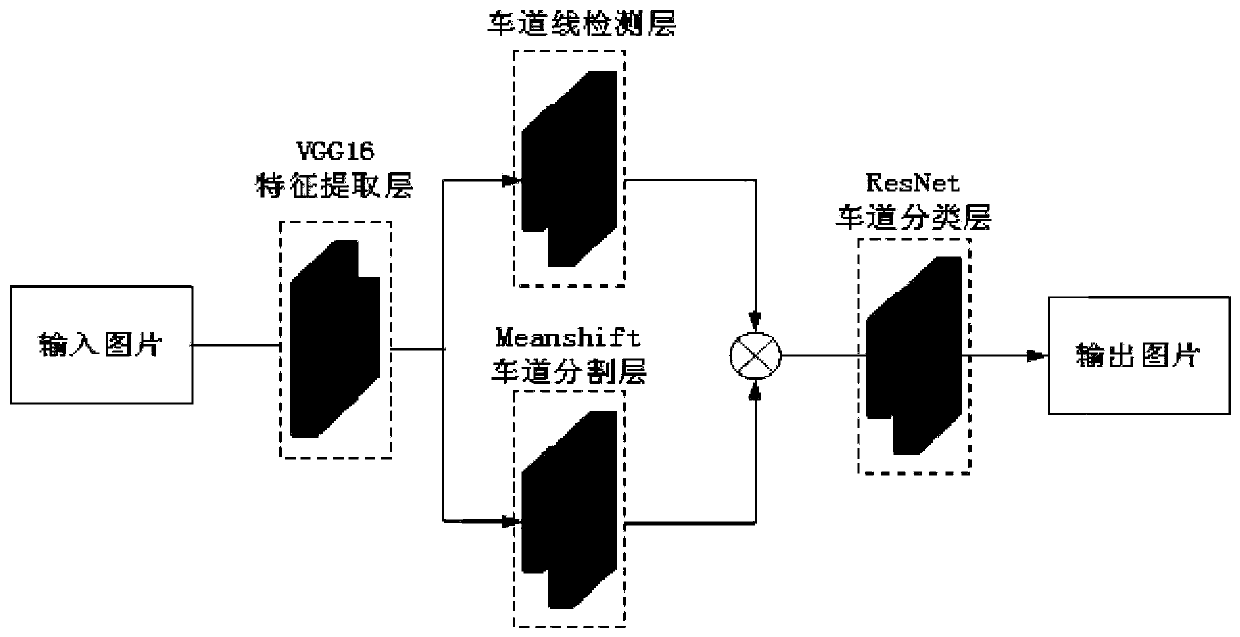

[0139] As shown in Figure 3, the lane detection network includes a VGG16 feature extraction layer, a lane line detection layer, a Meanshift lane segmentation layer, and a ResNet lane classification layer. The VGG16 feature extraction layer extracts features from the road picture, the ResNet lane classification layer classifies lane lines, the Meanshift lane segmentation layer performs lane clustering, and the lane line detection layer separates lane lines from the background as a binary map of 0s and 1s.
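To illustrate the clustering role of the Meanshift lane segmentation layer, the following is a minimal sketch of flat-kernel mean shift in plain Python. It is not the patent's implementation: the excerpt does not say which features are clustered, so the 2-D points, the `bandwidth` value, and the function name `mean_shift` are all assumptions chosen for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def mean_shift(points: List[Point], bandwidth: float,
               max_iter: int = 50, tol: float = 1e-3) -> List[int]:
    """Cluster 2-D points with a flat-kernel mean shift.

    Returns one cluster label per input point. Hypothetical helper,
    not taken from the patent text.
    """
    modes: List[Point] = []   # converged cluster centers found so far
    labels: List[int] = []
    for px, py in points:
        x, y = px, py
        for _ in range(max_iter):
            # Collect every point inside the bandwidth window around (x, y).
            near = [(qx, qy) for qx, qy in points
                    if (qx - x) ** 2 + (qy - y) ** 2 <= bandwidth ** 2]
            if not near:
                break
            # Move the window to the mean of those points.
            nx = sum(q[0] for q in near) / len(near)
            ny = sum(q[1] for q in near) / len(near)
            shift = ((nx - x) ** 2 + (ny - y) ** 2) ** 0.5
            x, y = nx, ny
            if shift < tol:
                break
        # Points whose windows converge to nearly the same mode share a label.
        for i, (mx, my) in enumerate(modes):
            if (mx - x) ** 2 + (my - y) ** 2 <= (bandwidth / 2) ** 2:
                labels.append(i)
                break
        else:
            modes.append((x, y))
            labels.append(len(modes) - 1)
    return labels


# Toy data: two well-separated groups standing in for two lane lines.
toy_points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
              (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
toy_labels = mean_shift(toy_points, bandwidth=1.0)
```

In a lane detection setting, each resulting label would correspond to one lane line instance; the bandwidth controls how far apart two groups must be to count as separate lines.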

[0140] a. Apply mean-value preprocessing to the road picture, that is, subtract the mean value of all pixels from each pixel in the road picture, and then send it to the VGG16 convolutional neural network (the VGG16 feature extraction layer) to obtain the feature map; the ...
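The mean-value preprocessing described in step a can be sketched as follows. This is an illustrative example only: it assumes the picture is held as nested lists of shape rows × columns × channels and that a single global mean is used, as the text states ("the mean value of all pixels"). The name `subtract_global_mean` is hypothetical.

```python
from typing import List

Image = List[List[List[float]]]  # rows x columns x channels


def subtract_global_mean(image: Image) -> Image:
    """Subtract the mean of all pixel values from every pixel value."""
    values = [v for row in image for pixel in row for v in pixel]
    mean = sum(values) / len(values)
    return [[[v - mean for v in pixel] for pixel in row] for row in image]


# A 1 x 2 RGB picture: one black pixel and one gray pixel.
tiny = [[[0.0, 0.0, 0.0], [30.0, 30.0, 30.0]]]
centered = subtract_global_mean(tiny)
```

After this step the pixel values are centered around zero. With NumPy arrays the same operation is `img - img.mean()`.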

Embodiment 3

[0160] According to the Beidou vision fusion precise lane identification and positioning method described in embodiment 1 or 2, the difference is that:

[0161] Step B1 means:

[0162] Acquire Beidou satellite signals, track them, demodulate the navigation messages, measure the pseudo-range and carrier phase, and calculate the latitude and longitude.
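As a rough illustration of how a position can be computed from measured pseudo-ranges, the sketch below solves for the receiver position and clock bias by iterative least squares (Gauss-Newton). It is a simplified model, not the receiver's actual algorithm: it assumes satellite positions are already known in Earth-centered Earth-fixed (ECEF) coordinates in meters, ignores atmospheric delays, satellite clock errors, and carrier phase, and stops at ECEF coordinates rather than converting to latitude and longitude. The names `solve_linear` and `solve_position` and all numeric values are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def solve_position(sats: Sequence[Vec3], pseudoranges: Sequence[float],
                   iterations: int = 10) -> List[float]:
    """Return [x, y, z, clock_bias], all in meters, from >= 4 pseudo-ranges."""
    est = [0.0, 0.0, 0.0, 0.0]  # start at the Earth's center, zero bias
    for _ in range(iterations):
        rows, resid = [], []
        for (sx, sy, sz), rho in zip(sats, pseudoranges):
            dx, dy, dz = est[0] - sx, est[1] - sy, est[2] - sz
            r = math.sqrt(dx * dx + dy * dy + dz * dz)
            # Partial derivatives of (range + bias) w.r.t. the unknowns.
            rows.append([dx / r, dy / r, dz / r, 1.0])
            resid.append(rho - (r + est[3]))
        # Normal equations (H^T H) d = H^T resid for the update step d.
        hth = [[sum(row[i] * row[j] for row in rows) for j in range(4)]
               for i in range(4)]
        htr = [sum(row[i] * v for row, v in zip(rows, resid))
               for i in range(4)]
        step = solve_linear(hth, htr)
        est = [e + d for e, d in zip(est, step)]
    return est


# Synthetic check: build pseudo-ranges from a known position and bias.
sats = [(-1.0e7, 2.0e7, 1.4e7), (0.5e7, 2.2e7, 1.5e7),
        (-2.0e7, 1.0e7, 1.3e7), (-0.8e7, 1.2e7, 2.3e7),
        (-1.5e7, 2.1e7, 0.3e7)]
true_pos, true_bias = (-2.3e6, 4.5e6, 3.8e6), 150.0
ranges = [math.dist(true_pos, s) + true_bias for s in sats]
solution = solve_position(sats, ranges)
```

The clock bias is expressed as a distance (bias in seconds multiplied by the speed of light), which keeps all four unknowns in the same unit. A real receiver would follow this with an ECEF-to-geodetic conversion to obtain latitude and longitude.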

[0163] Step B2, including:

[0164] e. Input the positioning information currently obtained by the Beidou receiver and perform initial matching against the electronic map road network database. Initial matching refers to using the hidden Markov model (HMM) method to find multiple candidate initial road sections according to the positioning information;

[0165] f. Enter tracking matching. Tracking matching refers to matching the location point at the current moment (determined by the positioning information) to the same road section that was matched for the location point at the previous moment; when arriving at a complex section such as a road int...
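The candidate search in step e can be sketched as follows. In HMM-based map matching, each nearby road section is commonly scored with an emission probability that decays with the distance between the position fix and the section. This sketch assumes planar coordinates in meters, straight road sections, and a Gaussian emission model; the `radius` and `sigma` values, the road identifiers, and the function names are hypothetical, and the transition probabilities and tracking step are not covered.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def point_segment_distance(p: Point, seg: Segment) -> float:
    """Shortest distance from point p to a straight road section."""
    (ax, ay), (bx, by) = seg
    vx, vy = bx - ax, by - ay
    length_sq = vx * vx + vy * vy
    # Parameter of the projection of p onto the line, clamped to the segment.
    t = 0.0 if length_sq == 0 else ((p[0] - ax) * vx + (p[1] - ay) * vy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (ax + t * vx), p[1] - (ay + t * vy))


def initial_candidates(fix: Point, roads: Dict[str, Segment],
                       radius: float = 30.0,
                       sigma: float = 5.0) -> List[Tuple[str, float]]:
    """Return (road id, normalized emission probability), most likely first."""
    scored = []
    for road_id, seg in roads.items():
        d = point_segment_distance(fix, seg)
        if d <= radius:  # keep only road sections near the position fix
            scored.append((road_id, math.exp(-0.5 * (d / sigma) ** 2)))
    total = sum(w for _, w in scored)
    return sorted(((rid, w / total) for rid, w in scored),
                  key=lambda c: -c[1])


# Three parallel road sections; the fix lies 2 m from A and 8 m from B.
roads = {"A": ((0.0, 0.0), (100.0, 0.0)),
         "B": ((0.0, 10.0), (100.0, 10.0)),
         "C": ((0.0, 200.0), (100.0, 200.0))}
candidates = initial_candidates((50.0, 2.0), roads)
```

Road section C is dropped because it lies outside the search radius, while A and B remain as candidates with A ranked first. Keeping several candidates rather than only the nearest one is what lets later observations resolve the ambiguity.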
