Simultaneous localization and mapping method for visual-inertial-laser fusion

A visual-inertial-laser fusion technique, applied in image enhancement, image analysis, re-radiation, etc., that addresses the problems of easy tracking loss and low SLAM accuracy.

Embodiment Construction

[0061] The present invention will be further described below in conjunction with the accompanying drawings.

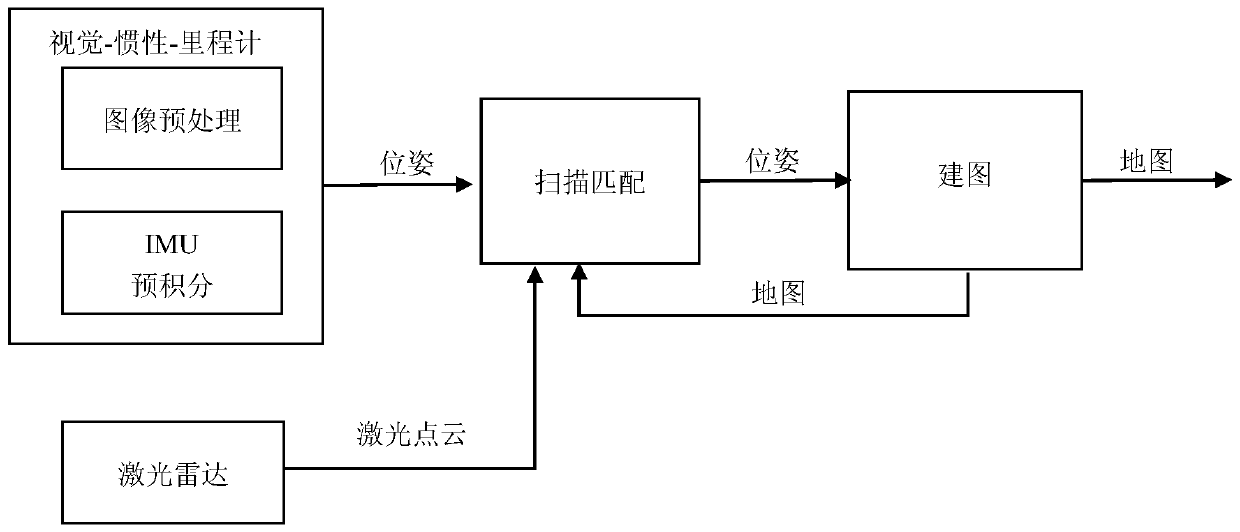

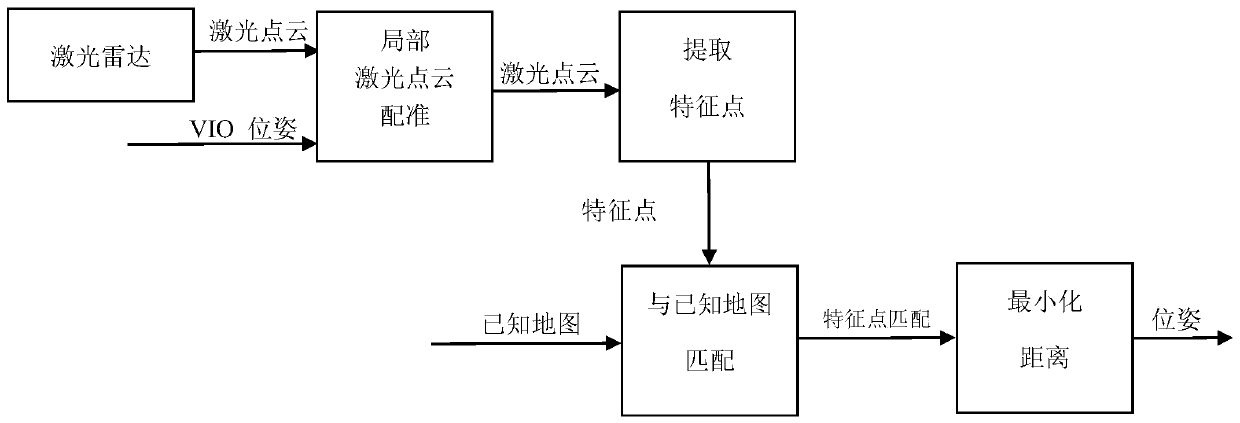

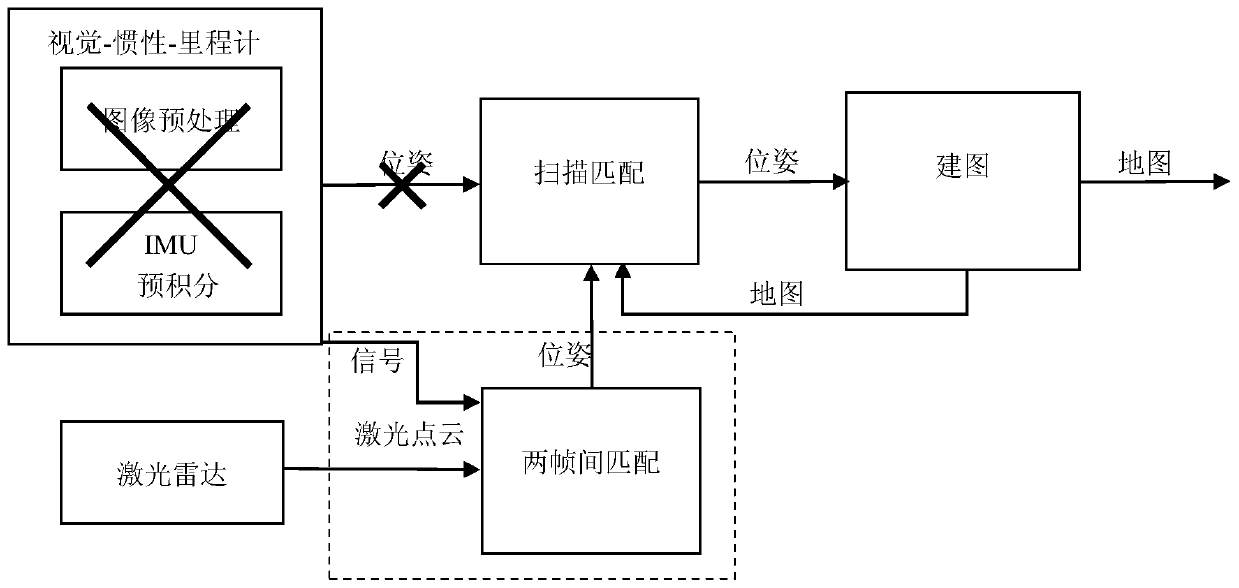

[0062] Referring to Figures 1 to 5, a simultaneous localization and mapping method for visual-inertial-laser fusion comprises the following steps:

[0063] 1) Visual-inertial-laser odometry, which proceeds as follows:

[0064] It is assumed that the intrinsic parameters of the camera and the extrinsic parameters between the three sensors are known, that the sensors are time-synchronized, and that the camera and the lidar operate at the same frequency. The method involves four coordinate systems: the world coordinate system W, the camera coordinate system C, the inertial measurement unit (IMU) coordinate system I, and the lidar coordinate system L. The sensor coordinate systems C, I, and L move with the device; C_i denotes the camera coordinate system at time t_i. The lidar coordinate system after initialization is defined as the world coordinate system.
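The frame conventions in [0064] can be illustrated with a minimal sketch. It assumes poses are stored as 4x4 homogeneous transforms and that rotation is about the z axis only, purely to keep the example short. The names `make_transform`, `compose`, `transform_point`, `T_L_C`, and `T_W_Li`, and all numeric values, are hypothetical and do not come from the patent text. The sketch only shows how a known camera-to-lidar extrinsic and a lidar pose at time t_i could be chained to express a point from C_i in the world frame W, where W is the lidar frame at initialization.

```python
import math

def make_transform(yaw_rad, translation):
    """Build a 4x4 homogeneous transform: rotation about z by yaw_rad, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def compose(a, b):
    """Matrix product a @ b for 4x4 transforms (apply b first, then a)."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]

def transform_point(t, p):
    """Apply a 4x4 transform t to a 3D point p."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(t[r][k] * v[k] for k in range(4)) for r in range(3))

# Hypothetical extrinsic: pose of camera frame C expressed in lidar frame L.
# The source assumes this calibration is known; the numbers are made up.
T_L_C = make_transform(0.0, (0.1, 0.0, -0.05))

# At initialization the lidar frame IS the world frame, so its pose is identity.
T_W_L0 = make_transform(0.0, (0.0, 0.0, 0.0))

# Hypothetical lidar pose in W at a later time t_i (as an odometry step might estimate).
T_W_Li = make_transform(math.pi / 2, (1.0, 2.0, 0.0))

# Pose of the camera frame C_i in W: chain the lidar pose with the fixed extrinsic.
T_W_Ci = compose(T_W_Li, T_L_C)

# A point observed in the camera frame at t_i, expressed in the world frame.
p_Ci = (1.0, 0.0, 0.0)
p_W = transform_point(T_W_Ci, p_Ci)
print(p_W)
```

Because the extrinsics are fixed, only one time-varying pose per time step is needed here; the poses of the other sensor frames in W follow by composition with the calibrated transforms.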

[0065] Firs...
