Gesture recognition method based on a regional fully convolutional network

A gesture recognition technique based on a fully convolutional network, applicable to neural learning methods, character and pattern recognition, biological neural network models, and related fields. It addresses the problems of complex processing and low efficiency; the stated effects concern the rejection rate and the recognition rate.

Embodiment Construction

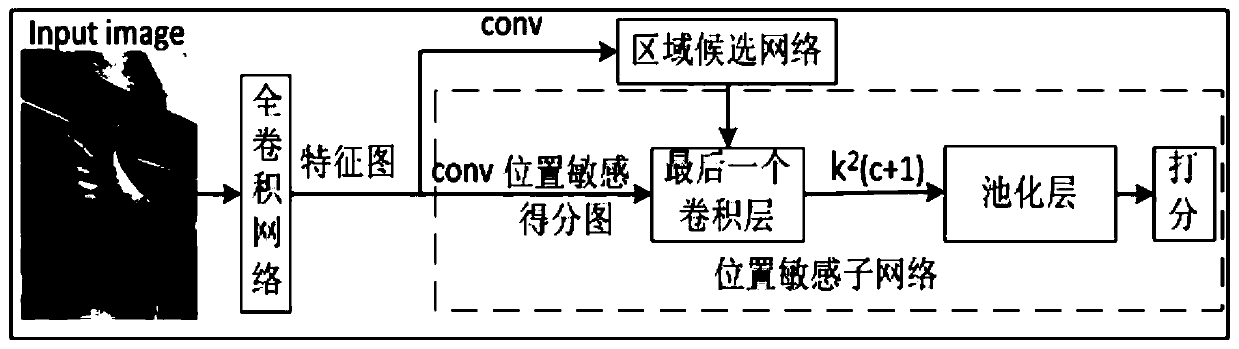

[0036] The present invention provides a gesture recognition method based on a regional full convolution network, comprising the following steps:

[0037] Step 1, build a fully convolutional network

[0038] In this solution, the residual network ResNet-34 is used as the backbone. The output stride of ResNet-34 is changed from 32 pixels to 16 pixels, the average pooling layer and the fully connected layer of the ResNet-34 architecture are removed, and a fully convolutional network is then built from the convolutional layers of ResNet-34 to extract features from the input image.
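The stride arithmetic in paragraph [0038] can be checked with a small sketch. The stage list below is the standard ResNet-34 layout (a 7×7 stem, a max-pooling layer, and four residual stages with 3, 4, 6, and 3 blocks). The source does not say how the stride is reduced from 32 to 16; the sketch assumes the stride of the last residual stage is set to 1, a common choice that is usually paired with dilated convolutions. The stage names and helper functions are hypothetical and used only for illustration.

```python
from math import prod

# (name, stride) for each stage of a standard ResNet-34 backbone.
# The average pooling and fully connected layers are omitted, as in [0038].
RESNET34_STAGES = [
    ("conv1_7x7", 2),
    ("maxpool_3x3", 2),
    ("stage1_3_blocks", 1),
    ("stage2_4_blocks", 2),
    ("stage3_6_blocks", 2),
    ("stage4_3_blocks", 2),
]


def total_stride(stages):
    """Overall downsampling factor: the product of all stage strides."""
    return prod(stride for _, stride in stages)


def with_stage_stride(stages, name, stride):
    """Return a copy of the stage list with one stage's stride replaced."""
    return [(n, stride if n == name else s) for n, s in stages]


def feature_map_size(height, width, stride):
    """Spatial size of the output feature map, assuming 'same'-style padding
    so that each stride-2 layer produces ceil(size / 2)."""
    return (-(-height // stride), -(-width // stride))


# Assumption: the 32 -> 16 change is made by setting the last stage's stride to 1.
modified = with_stage_stride(RESNET34_STAGES, "stage4_3_blocks", 1)

print(total_stride(RESNET34_STAGES))   # original output stride
print(total_stride(modified))          # modified output stride
print(feature_map_size(224, 224, total_stride(modified)))
```

Under this assumption, halving the output stride doubles the height and width of the feature map for the same input, which gives later region-based stages a finer grid to work with.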

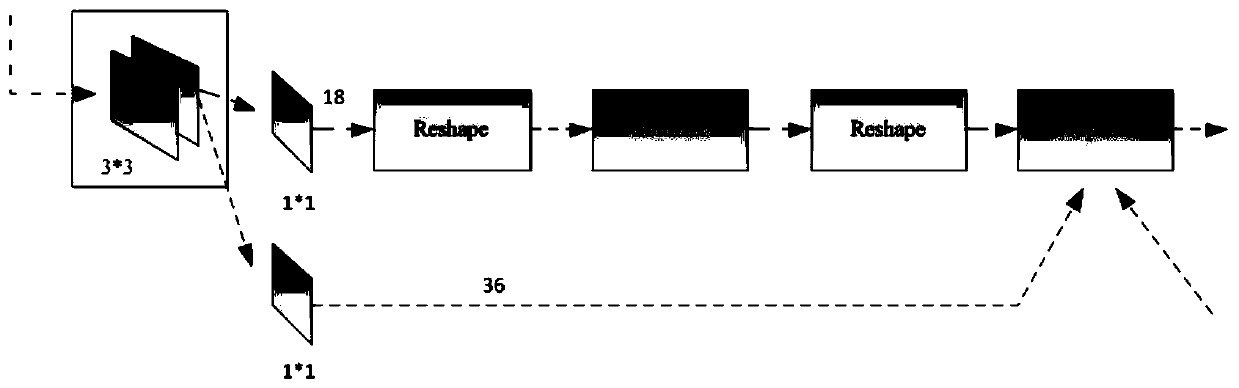

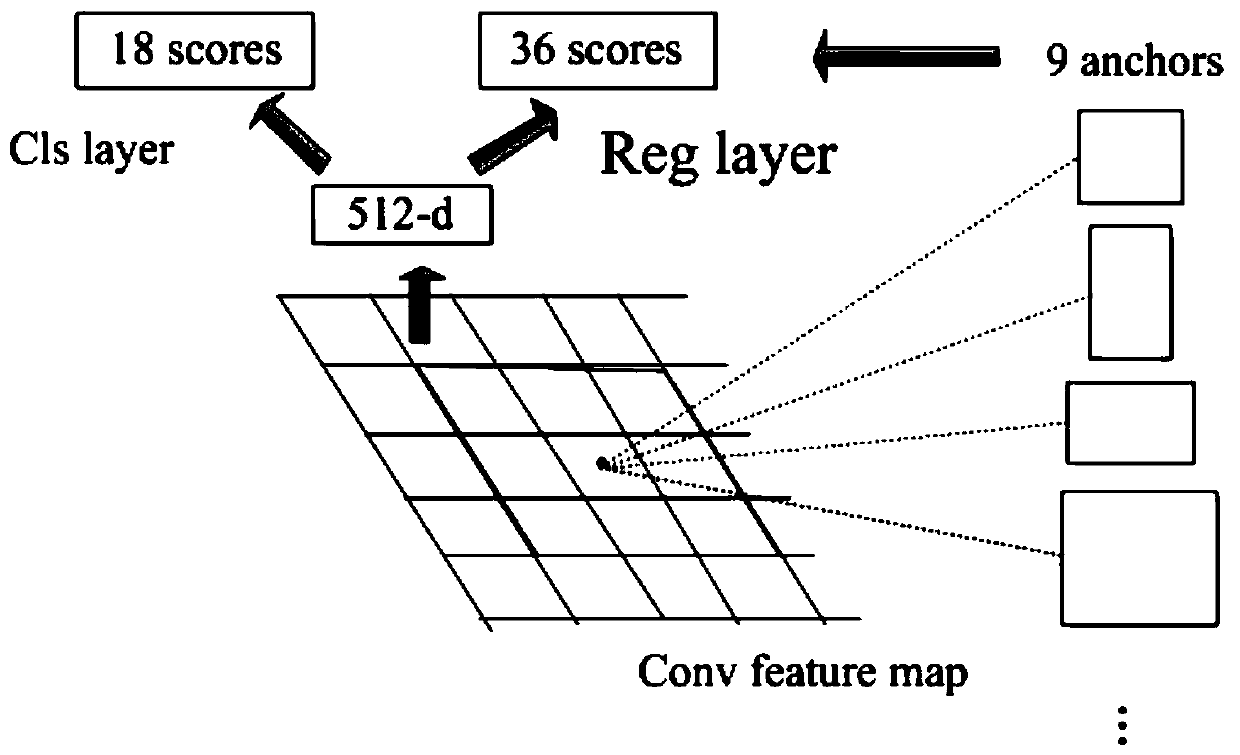

[0039] As shown in Figure 1, the fully convolutional network in this scheme consists of two parts. The first part is a convolutional layer with a 7×7 kernel that processes the input image; the second part is four different groups of deep residual blocks built from 3×3 convolution kernels. The residual block is...
