Neural network weight matrix splitting and combining method

A weight-matrix technique for neural networks, applied in the field of deep learning. It addresses the time and resource cost of retraining a network, saving training time and simplifying the training steps.

Examples

Embodiment 1

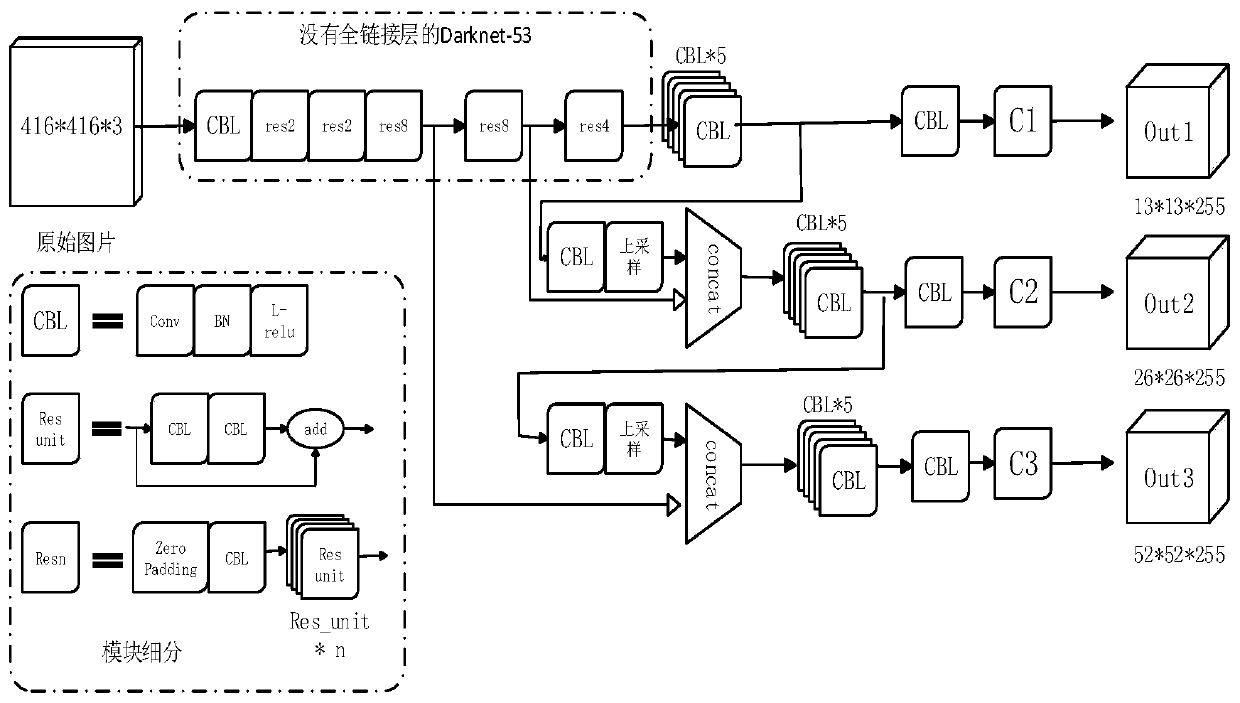

[0055] This embodiment uses YOLO v3 (the third version of the YOLO series) as an example to illustrate the method of the present invention for splitting and combining neural network weight matrices.

[0056] The example is the standard 416*416 YOLO v3 with 80 categories trained on the COCO training set; layer numbering assumes a network of 106 layers in total.

[0057] As shown in Figure 1, the YOLOv3 network structure used in the present invention is briefly described as follows:

[0058] Input: three-channel color image (416*416*3);

[0059] Output: predictions at three scales (13*13, 26*26, 52*52), one scale for each range of target sizes.
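The three grid sizes follow from dividing the 416*416 input by the downsampling stride of each detection head. The short Python sketch below reproduces that arithmetic, assuming the strides 32, 16 and 8 of standard YOLOv3; the function name `grid_sizes` is illustrative only.

```python
INPUT_SIZE = 416
STRIDES = (32, 16, 8)  # coarse grid -> large targets, fine grid -> small targets


def grid_sizes(input_size: int = INPUT_SIZE, strides=STRIDES) -> list:
    """Return the side length of each output grid for a square input."""
    if any(input_size % s for s in strides):
        raise ValueError("input size must be divisible by every stride")
    return [input_size // s for s in strides]


print(grid_sizes())  # [13, 26, 52]
```

The same function gives the grids for other input sizes that are multiples of 32, for example 608*608.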

[0060] Darknet-53: a 53-layer feature-extraction network that extracts abstract features from the image.

[0061] Where:

[0062] CBL: convolution layer (Conv) + batch normalization layer (BN) + activation layer (Leaky ReLU); this unit mainly performs the convolution operations.
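As a rough illustration of what one CBL unit computes, the NumPy sketch below chains convolution, batch normalization (using stored inference-time statistics) and Leaky ReLU. It is a simplification under stated assumptions: the convolution is restricted to a 1x1 kernel so that it reduces to a matrix product over channels, the Leaky ReLU slope of 0.1 is the value commonly used in YOLOv3, and the function `cbl_forward` and its `eps` default are hypothetical choices, not taken from the patent.

```python
import numpy as np


def cbl_forward(x, kernel, gamma, beta, mean, var, eps=1e-3, alpha=0.1):
    """Hypothetical CBL forward pass: 1x1 Conv -> BN (inference) -> Leaky ReLU.

    x:      (H, W, C_in) feature map
    kernel: (C_in, C_out) weights of a 1x1 convolution (no bias, BN follows)
    gamma, beta, mean, var: (C_out,) batch-normalization parameters
    """
    y = x @ kernel                                        # 1x1 conv = per-pixel matmul over channels
    y = gamma * (y - mean) / np.sqrt(var + eps) + beta    # normalize with stored statistics
    return np.where(y > 0, y, alpha * y)                  # Leaky ReLU: slope alpha for y <= 0
```

An (H, W, C_in) input yields an (H, W, C_out) output. Real CBL units in Darknet-53 also use 3x3 kernels, padding and stride-2 downsampling, which this sketch omits.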

[0063] Res_unit: Residual...

Embodiment 2

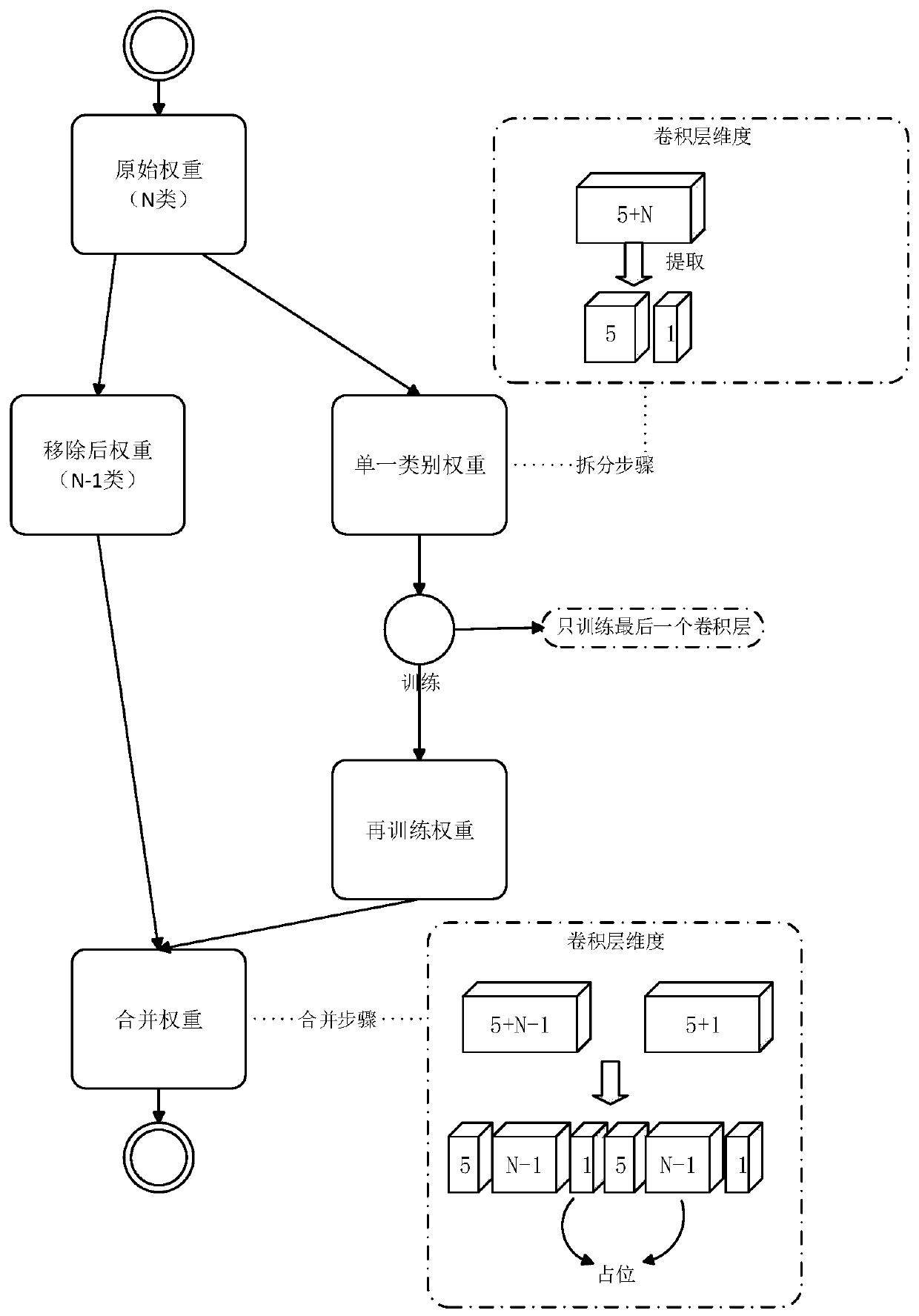

[0104] The weight file is modified with the Keras high-level deep learning framework, i.e., the convolutional layers of the weight-matrix model are modified. Modifying the YOLO v3 weight file is taken as the example.

[0105] First determine the object categories to be detected (the original model covers 80 categories), then collect image data and train YOLOv3 to obtain well-performing convolutional network weights. These are called the original weight matrix W1 and can be used for the object detection task.

[0106] 1. Suppose an existing category (category 1) is no longer needed, or needs to be updated and processed separately. This category must then be extracted, i.e., the 80 categories are reduced to 79.

[0107] Correspondingly, the outputs y1, y2 and y3 in Figure 1, of shape 13*13*255, 26*26*255 and 52*52*255, must be changed to 13*13*252, 26*26*252 and 52*52*252.
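The depth 255 is 3 anchors per scale times (4 box coordinates + 1 objectness score + 80 class scores), so removing one category gives 3 * (4 + 1 + 79) = 252. A minimal Python check of that arithmetic follows; the helper name `output_depth` is hypothetical.

```python
ANCHORS_PER_SCALE = 3   # YOLOv3 assigns 3 anchor boxes to each output scale
BOX_TERMS = 4 + 1       # tx, ty, tw, th + objectness score


def output_depth(num_classes: int, anchors: int = ANCHORS_PER_SCALE) -> int:
    """Channels of each output layer: anchors * (box terms + class scores)."""
    return anchors * (BOX_TERMS + num_classes)


for grid in (13, 26, 52):
    print(f"{grid}*{grid}*{output_depth(80)} -> {grid}*{grid}*{output_depth(79)}")
```

Each removed category therefore removes exactly 3 output channels per scale, one per anchor.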

[0108] 1.1 Analysis shows that the original weight matrix W1 has three output convolutional layers (the 3 C layers in Figure 1 ...
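One way to picture the split and the later recombination of an output layer is to treat its kernel and bias as arrays shaped like those returned by Keras `Conv2D.get_weights()`: a kernel of shape (1, 1, C_in, 255) and a bias of shape (255,). The NumPy sketch below is an illustration under stated assumptions, not the patent's own procedure. It assumes each anchor's 85 channels are laid out as [tx, ty, tw, th, objectness, class 0 ... class 79], as in common Keras YOLOv3 ports, and that "category 1" maps to a 0-based class index supplied by the caller. The names `split_output_layer` and `combine_output_layer` are hypothetical.

```python
import numpy as np

NUM_ANCHORS = 3
BOX_TERMS = 5  # tx, ty, tw, th, objectness (assumed to precede the class scores)


def _class_channels(num_classes, class_idx):
    """Output-channel indices holding the score of class_idx, one per anchor."""
    per_anchor = BOX_TERMS + num_classes
    return [a * per_anchor + BOX_TERMS + class_idx for a in range(NUM_ANCHORS)]


def split_output_layer(kernel, bias, num_classes, class_idx):
    """Split an output conv (kernel (1, 1, C_in, C_out), bias (C_out,)) into
    the remaining-class weights and the extracted class's 3 channels."""
    idx = _class_channels(num_classes, class_idx)
    keep = np.setdiff1d(np.arange(kernel.shape[-1]), idx)  # sorted, original order kept
    return (kernel[..., keep], bias[keep]), (kernel[..., idx], bias[idx])


def combine_output_layer(rest, extracted, num_classes, class_idx):
    """Re-insert extracted channels; num_classes is the count AFTER combining."""
    (k_rest, b_rest), (k_cls, b_cls) = rest, extracted
    c_out = NUM_ANCHORS * (BOX_TERMS + num_classes)
    idx = _class_channels(num_classes, class_idx)
    keep = np.setdiff1d(np.arange(c_out), idx)
    kernel = np.empty(k_rest.shape[:-1] + (c_out,), dtype=k_rest.dtype)
    bias = np.empty(c_out, dtype=b_rest.dtype)
    kernel[..., keep], bias[keep] = k_rest, b_rest  # remaining classes back in place
    kernel[..., idx], bias[idx] = k_cls, b_cls      # extracted class back in place
    return kernel, bias
```

Applied to each of the three output layers, the split turns 255 output channels into 252 and keeps the extracted category's weights separately, so combining them again restores the original layer exactly.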
