Intelligent detection method based on graph neural network

An intelligent detection technology based on a graph neural network, applied in the field of intelligent detection. It addresses the high time and economic cost of manual inspection, which is also prone to misjudgments, missed judgments, and the resulting losses. Its effects are to reduce labor and detection costs, improve accuracy and efficiency, and improve expressive ability.

Embodiment 1

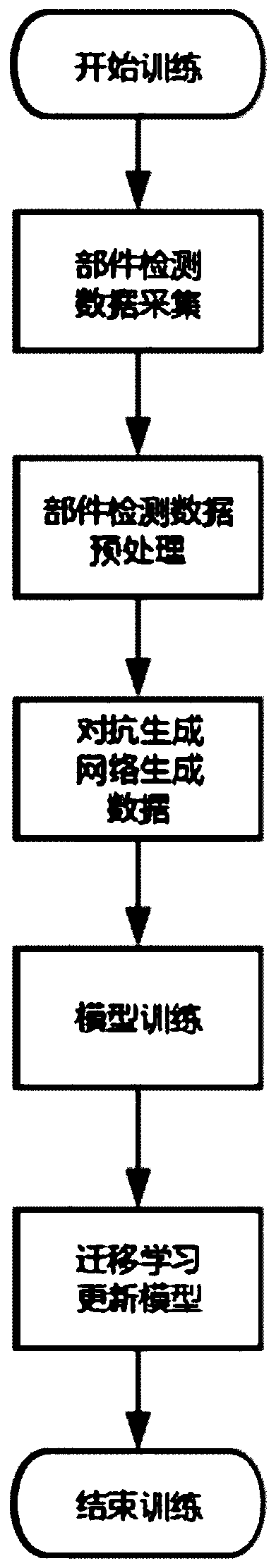

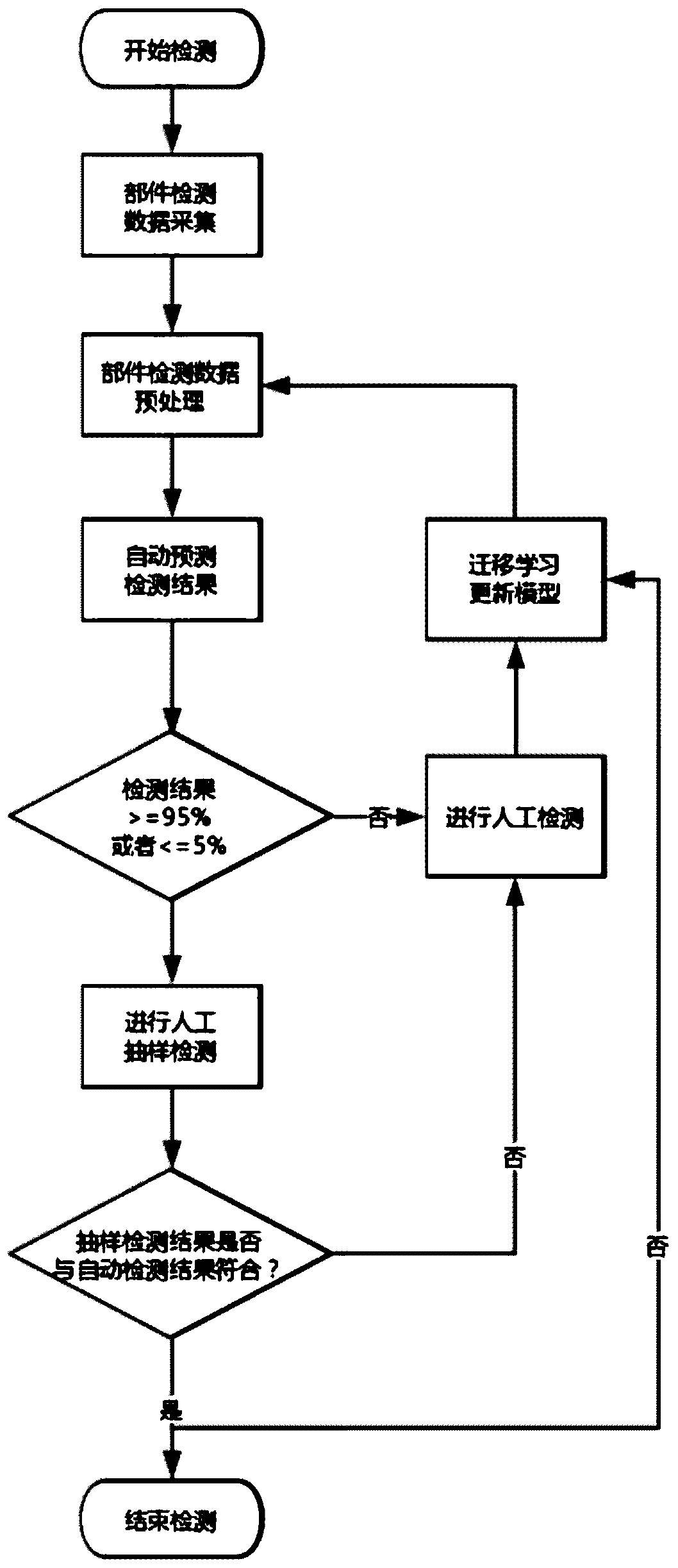

[0051] As shown in Figure 1 and Figure 2, the intelligent detection technology based on a graph neural network in this embodiment specifically includes the following steps:

[0052] 1. Sample Collection

[0053] Sample collection is a critical step in the entire automated inspection process for components such as elevator traction motors. Precise sample data is required for model training, migration, and prediction.

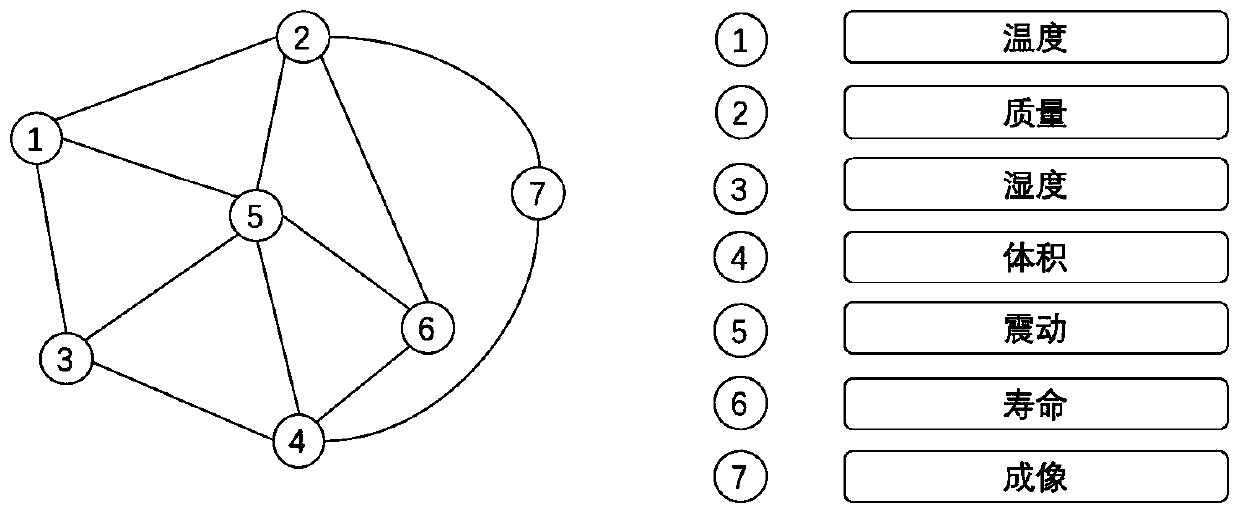

[0054] The inspection indicators of elevator traction motor components comprise nine items: temperature, humidity, weight, volume, vibration, life, imaging, rated current and voltage, and maximum torque. Specifically:

[0055] Temperature serves as a measure of the operating state of the component. It is measured with a thermometer while the component is in the standby state and in the carrying state respectively, and is output as the parameter t.

[0056] The humidity, as a standard for measuring the internal environment of the component, adopts ...
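As an illustration of how one collected sample covering the nine indicators might be organized before model training, here is a minimal Python sketch. The class name, field names, and units are all hypothetical; the description only names the indicators and the temperature parameter t, and does not specify a data layout or encoding.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TractionMotorSample:
    """One inspection record. Field names and units are illustrative assumptions."""
    temp_standby: float        # temperature t measured in the standby state
    temp_carrying: float       # temperature t measured in the carrying state
    humidity: float
    weight: float
    volume: float
    vibration: float
    life: float
    rated_current: float
    rated_voltage: float
    max_torque: float
    image_path: Optional[str] = None  # imaging indicator, kept only as a reference

    def numeric_features(self) -> List[float]:
        """Flatten the scalar indicators into a fixed-order feature vector."""
        return [
            self.temp_standby, self.temp_carrying, self.humidity,
            self.weight, self.volume, self.vibration, self.life,
            self.rated_current, self.rated_voltage, self.max_torque,
        ]


# Example record with made-up values, for demonstration only.
sample = TractionMotorSample(
    temp_standby=25.0, temp_carrying=60.0, humidity=40.0,
    weight=300.0, volume=0.5, vibration=1.2, life=20000.0,
    rated_current=30.0, rated_voltage=380.0, max_torque=700.0,
)
features = sample.numeric_features()
```

Recording temperature twice reflects the two measurement states named in [0055]. The imaging indicator is left out of the numeric vector here because the description does not say how images are encoded.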
