VR-based spatial cognitive ability training method and system

A spatial cognitive ability training method and system, applied in the field of VR-based spatial cognition training. It addresses the problem that users could previously train spatial cognitive ability only in real scenes, and thereby reduces the difficulty of training, improves the training effect, and keeps training safe.

Examples

Embodiment 1

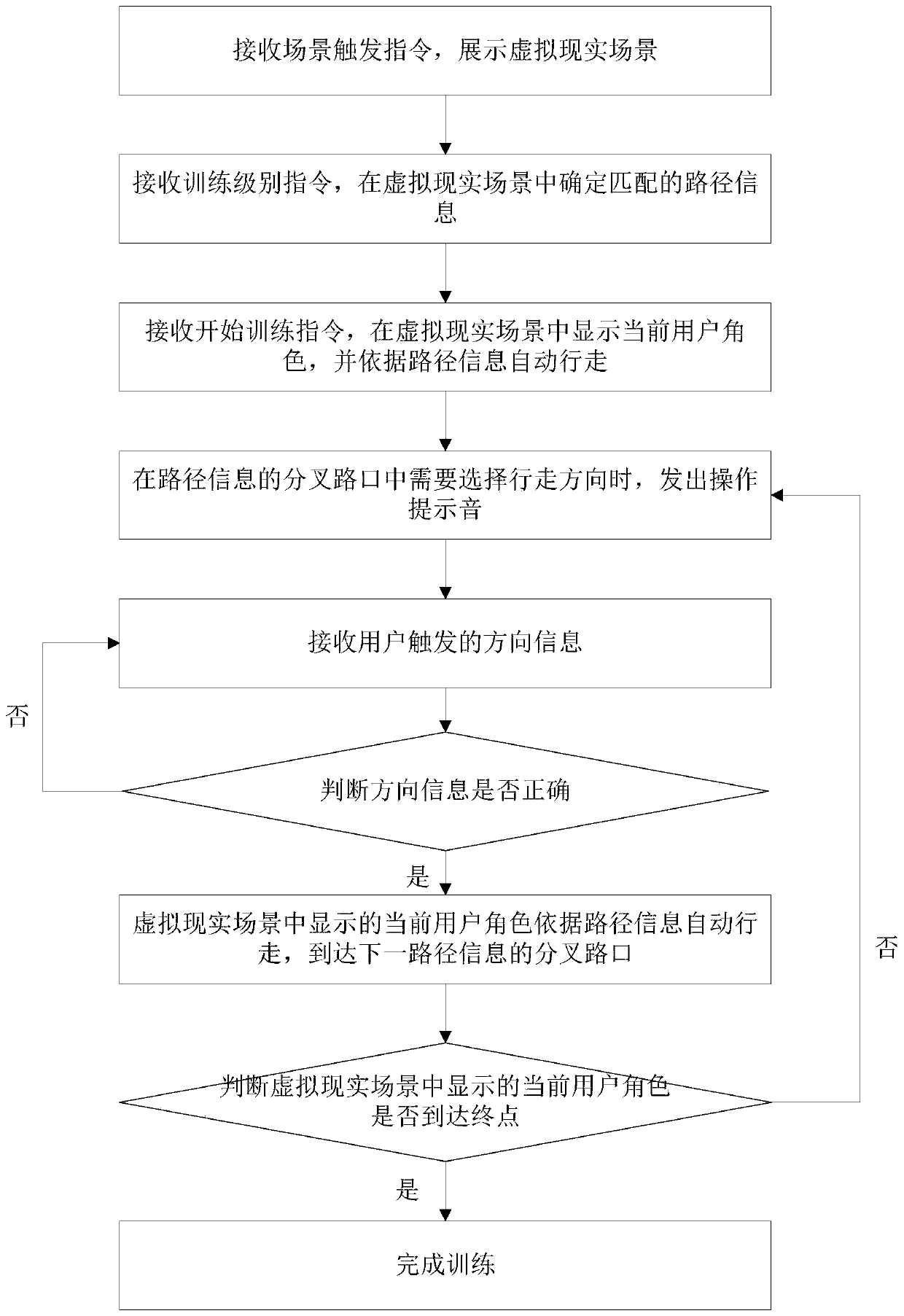

[0041] Suppose that Embodiment 1 trains on the path information shown in Figure 2. A detailed description follows with reference to Figures 1-2.

[0042] The spatial cognition training system includes a VR device and an operating handle. The operating handle sends operating signals to the VR device. A plurality of training scenes for training users are pre-built in the VR device, and each training scene has at least three training levels. The training method includes:

[0043] S1. For the user to be trained, after receiving the scene trigger instruction, display the virtual reality scene to the user to be trained;

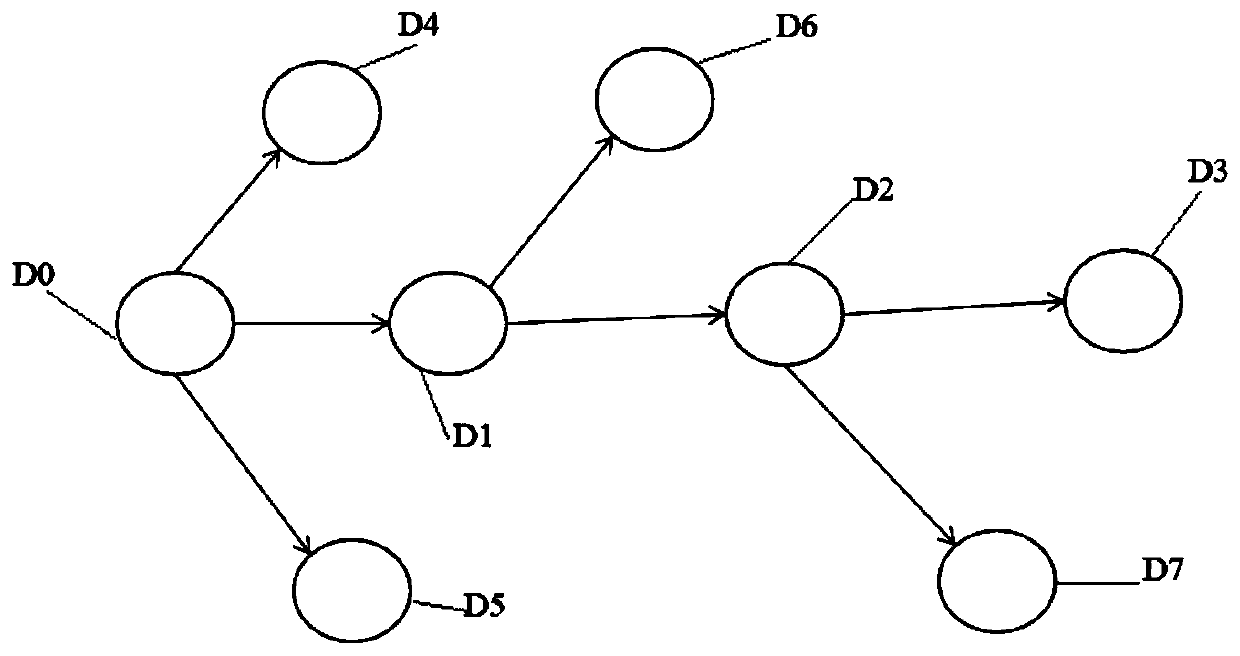

[0044] S2. After receiving the training level instruction for the displayed virtual reality scene, determine, in the displayed virtual reality scene, the path information shown in Figure 2;

[0045] S3. Receive the instruction to start the training triggered by the user to be trained, and display the transparent current user character wi...
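Steps S1 to S3 can be read as a small state machine driven by the three instructions. The following is a minimal sketch under stated assumptions, not the patent's implementation: the names `State`, `Scene`, `TrainingSession`, and the `on_*` methods are hypothetical, levels are assumed to be numbered from 1, and what happens after training starts is left out because the source text is truncated at that point.

```python
from dataclasses import dataclass
from enum import Enum, auto


class State(Enum):
    IDLE = auto()         # nothing displayed yet
    SCENE_SHOWN = auto()  # S1 done: virtual reality scene displayed
    PATH_READY = auto()   # S2 done: level chosen, path information determined
    TRAINING = auto()     # S3 done: start instruction received


@dataclass
class Scene:
    name: str
    levels: int  # number of training levels in this scene

    def __post_init__(self):
        # The description requires at least three training levels per scene.
        if self.levels < 3:
            raise ValueError("each training scene needs at least three levels")


class TrainingSession:
    """Hypothetical session object; tracks which step has been reached."""

    def __init__(self, scenes):
        self.scenes = {s.name: s for s in scenes}
        self.state = State.IDLE
        self.scene = None
        self.level = None

    def on_scene_trigger(self, scene_name):
        # S1: scene trigger instruction -> display the scene.
        if self.state is not State.IDLE:
            raise RuntimeError("scene already selected")
        self.scene = self.scenes[scene_name]
        self.state = State.SCENE_SHOWN

    def on_level_instruction(self, level):
        # S2: training level instruction -> determine the path information.
        if self.state is not State.SCENE_SHOWN:
            raise RuntimeError("display a scene first")
        if not 1 <= level <= self.scene.levels:  # 1-based levels are an assumption
            raise ValueError("unknown training level")
        self.level = level
        self.state = State.PATH_READY

    def on_start_instruction(self):
        # S3: start instruction from the user -> begin training.
        if self.state is not State.PATH_READY:
            raise RuntimeError("choose a training level first")
        self.state = State.TRAINING
```

Rejecting out-of-order instructions keeps the sketch aligned with the order in which the steps are listed; whether the actual system enforces that order is not stated in the source.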

Embodiment 2

[0054] Suppose that Embodiment 2 trains on the path information shown in Figure 2. A detailed description follows with reference to Figures 1-3.

[0055] S1. For the user to be trained, after receiving the scene trigger instruction, display the virtual reality scene to the user to be trained;

[0056] S2. After receiving the training level instruction for the displayed virtual reality scene, determine, in the displayed virtual reality scene, the path information shown in Figure 2;

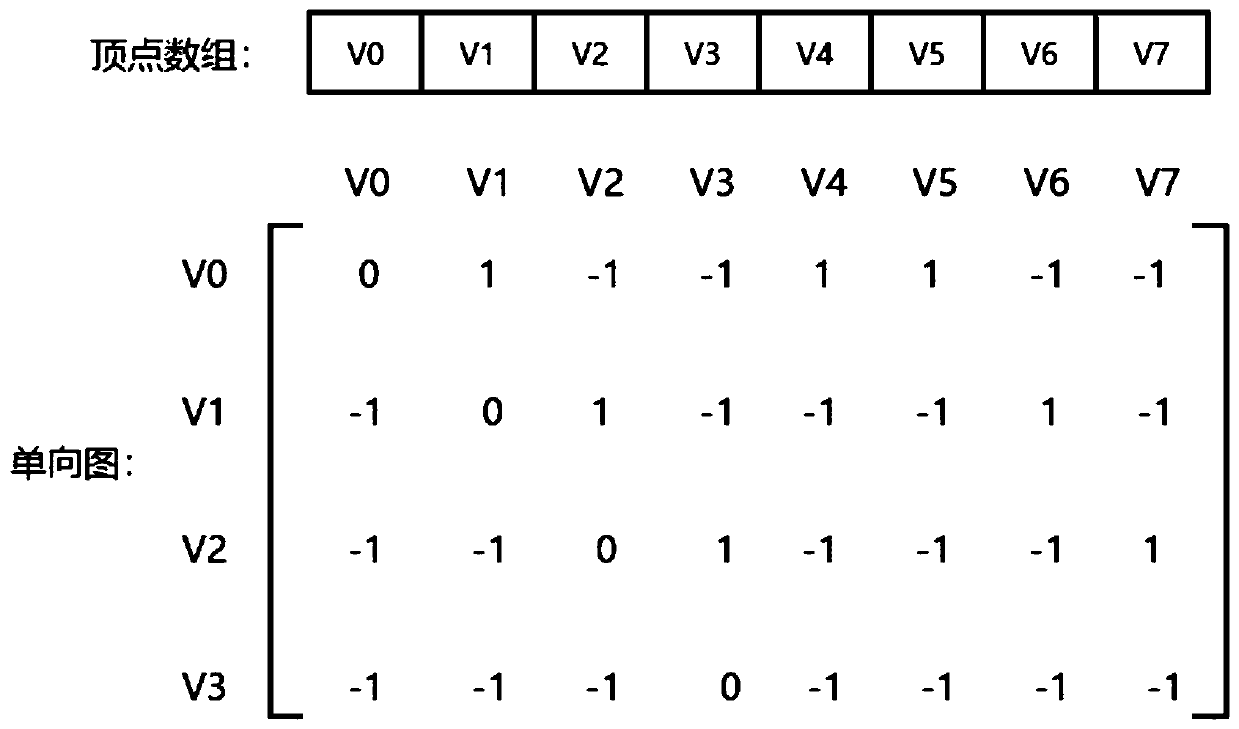

[0057] The path information for Figure 2 includes a first array that stores the indexes of all intersections reachable from the starting point D0 to the end point D3 in the current virtual scene. The first array contains both the intersection indexes of the correct route and the wrong intersection indexes. The intersection indexes of the correct route are stored in order and numbered sequentially starting from 0. The index number of the starting point D0 in the first ...
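The first array described above can be illustrated with a small data structure. This is a sketch under stated assumptions, not the patent's data layout: the class `PathInfo` and its methods are hypothetical, the correct route is assumed to pass through four intersections D0 to D3 mapped to indexes 0 to 3, and the wrong intersections are assumed to be numbered after the correct ones, which the truncated source does not confirm.

```python
from dataclasses import dataclass


@dataclass
class PathInfo:
    correct: list  # correct-route intersection indexes, in travel order
    wrong: list    # wrong intersection indexes (numbering is an assumption)

    def __post_init__(self):
        # Correct-route indexes are stored in order and numbered from 0.
        if self.correct != list(range(len(self.correct))):
            raise ValueError("correct route must be numbered 0, 1, 2, ... in order")
        if set(self.correct) & set(self.wrong):
            raise ValueError("an index cannot be both correct and wrong")

    @property
    def first_array(self):
        # All reachable intersection indexes: correct ones first, then wrong ones.
        return self.correct + self.wrong

    def is_correct(self, index):
        return index in self.correct

    def next_correct(self, index):
        # Next intersection on the correct route, or None at the end point
        # or when the index is not on the correct route.
        if index in self.correct and index + 1 < len(self.correct):
            return index + 1
        return None


# Assumed example: D0..D3 -> 0..3, plus two wrong intersections 4 and 5.
path = PathInfo(correct=[0, 1, 2, 3], wrong=[4, 5])
```

Because the correct-route indexes are sequential, checking whether the user reached the right intersection reduces to comparing the entered index with the previous index plus one.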
