Real-time 3D target detection method for an unmanned driving platform based on a camera and a laser radar

The method relates to lidar and unmanned driving technology and is applied in the field of computer vision. It addresses two problems: the low spatial positioning accuracy of image-based detection, and the difficulty of determining object categories from point cloud detection. Its stated effects are accurate spatial positioning and a low missed detection rate.

Embodiment Construction

[0030] The present invention will be described in further detail below in conjunction with the accompanying drawings.

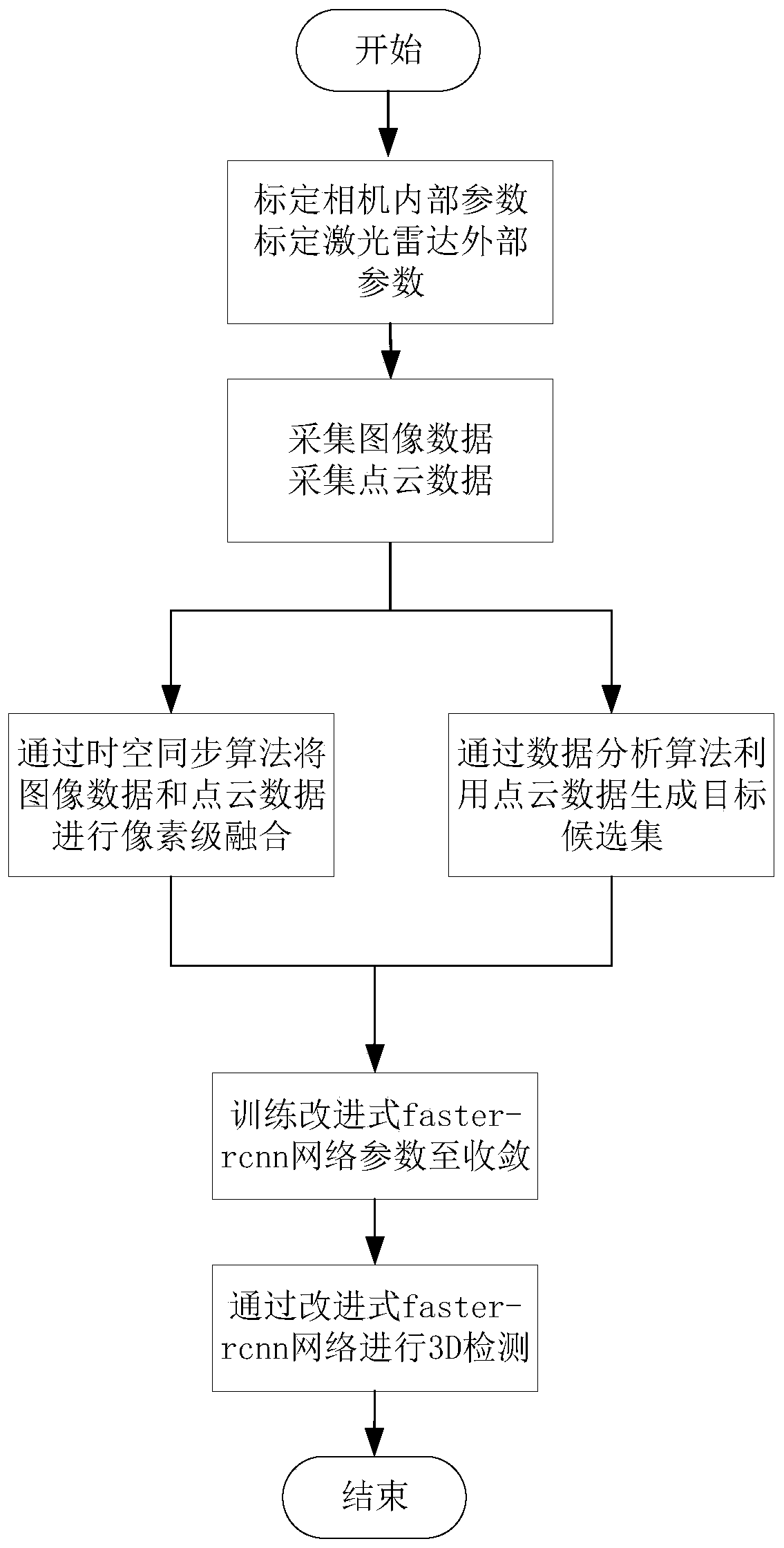

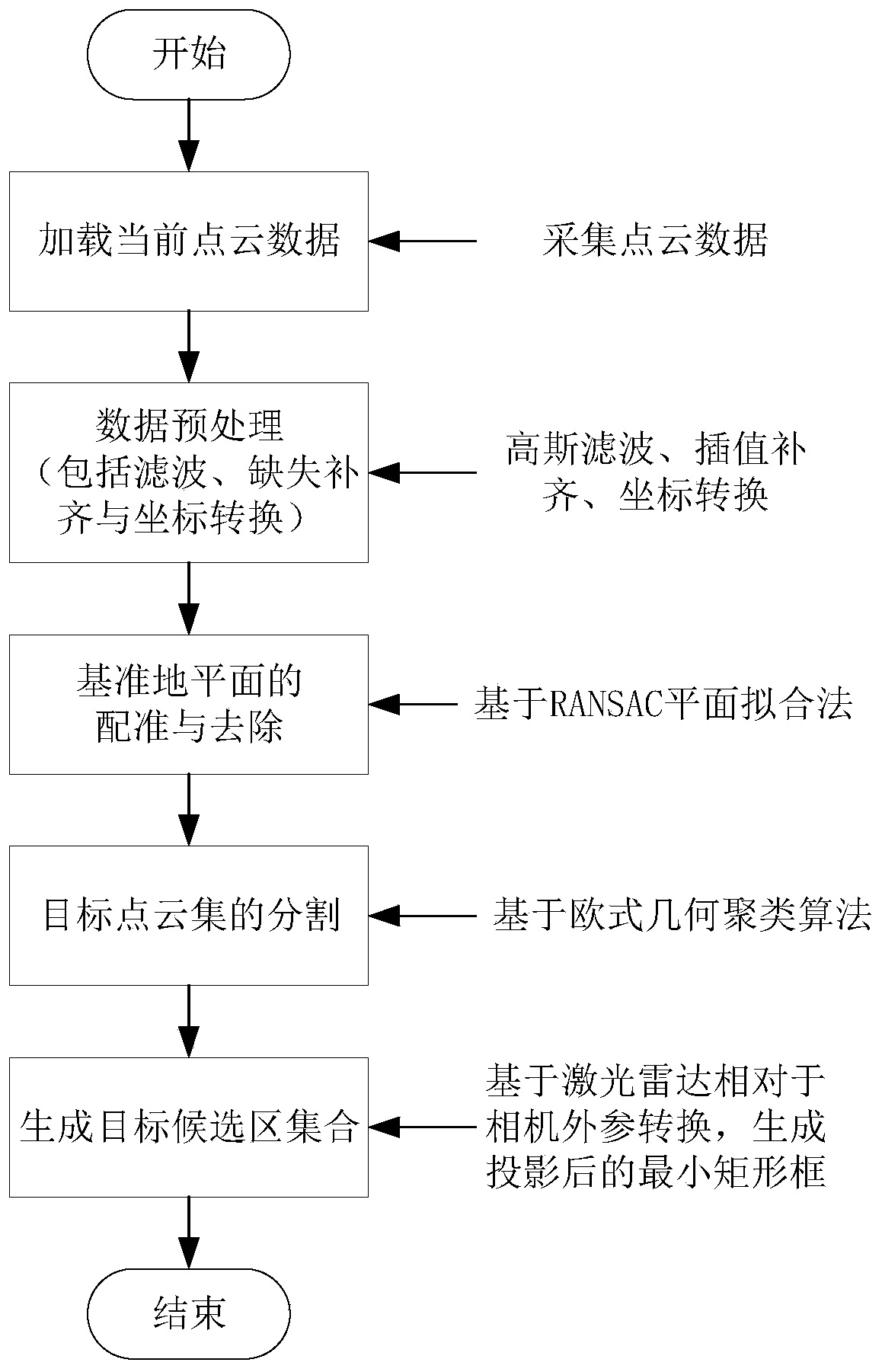

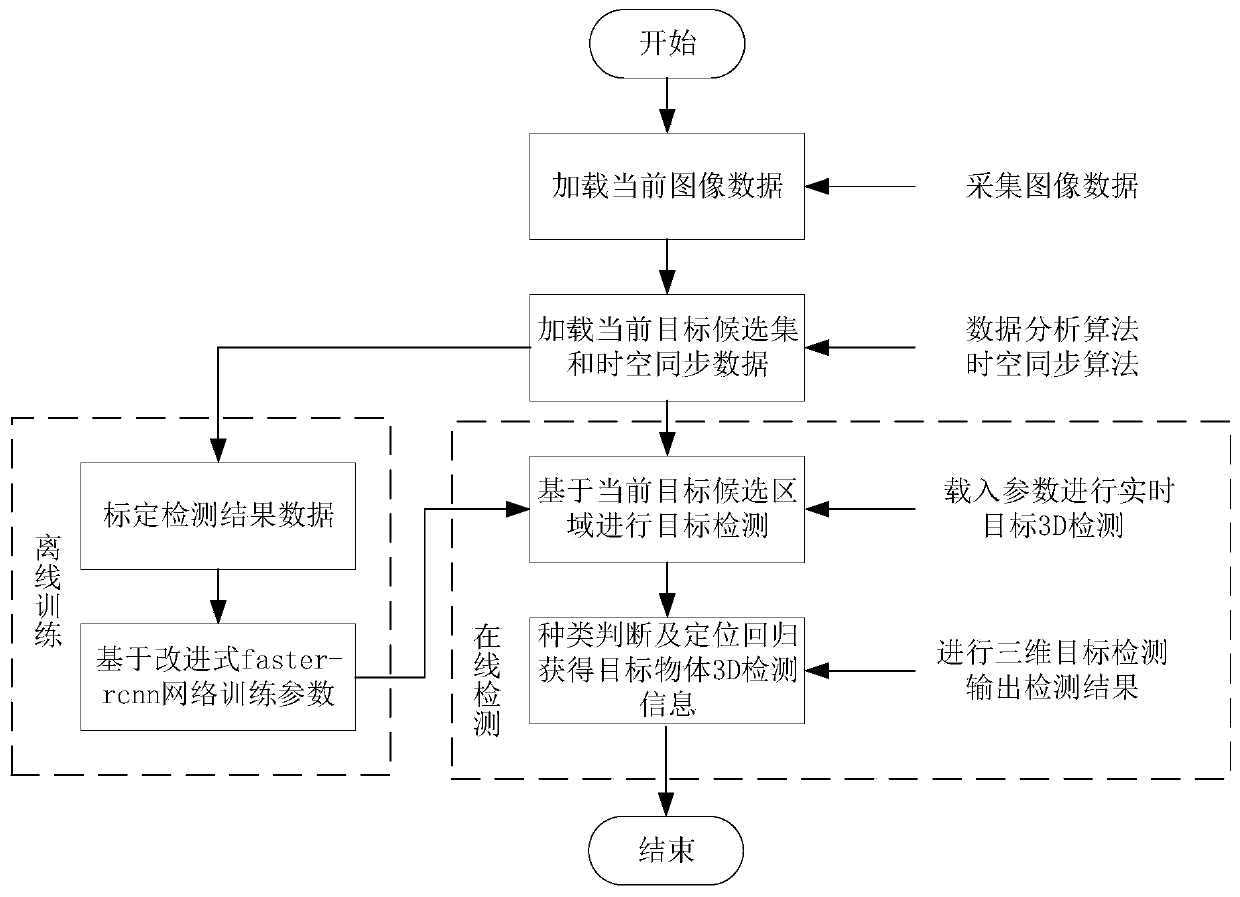

[0031] With reference to Figure 1, the present invention describes a real-time 3D target detection method for an unmanned driving platform based on a camera and a laser radar. The raw data of the camera and the laser radar are fused at the pixel level. The resulting time- and space-synchronized data are combined with a laser radar data analysis method to obtain clustering detection results. An improved Faster R-CNN network architecture is then built, trained, and used for real-time detection. The method outputs the type, length, width, and height of each target object around the unmanned driving platform, together with the distance, yaw angle, roll angle, and pitch angle of the object's center point relative to the spatial coordinates of the unmanned driving platform. According to the system and its detection method, the present invention adopts the fusion algorithm of traditional clus...
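The pixel-level fusion step mentioned in [0031] can be illustrated with a minimal sketch. The patent text available here does not give the calibration model or the fusion procedure, so everything below is an assumption made for illustration: a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a known lidar-to-camera rotation and translation, and the hypothetical helper names `project_lidar_point` and `fuse_depth_into_image`. The sketch only shows how a lidar point might be mapped onto an image pixel so that a depth value can be attached to it; it is not the method claimed in the patent.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def project_lidar_point(
    point: Point3D,
    rotation: Sequence[Sequence[float]],  # assumed 3x3 lidar-to-camera rotation
    translation: Point3D,                 # assumed lidar-to-camera translation (m)
    fx: float, fy: float, cx: float, cy: float,  # assumed pinhole intrinsics
) -> Optional[Tuple[float, float, float]]:
    """Return (u, v, depth) in image coordinates, or None if behind the camera."""
    # Transform the point from the lidar frame into the camera frame.
    xc, yc, zc = (
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0.0:
        return None  # a point behind the image plane cannot be seen
    # Perspective projection with the pinhole model.
    return (fx * xc / zc + cx, fy * yc / zc + cy, zc)


def fuse_depth_into_image(
    points: List[Point3D],
    rotation: Sequence[Sequence[float]],
    translation: Point3D,
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Dict[Tuple[int, int], float]:
    """Map each (column, row) pixel hit by a lidar point to its nearest depth."""
    depth_at_pixel: Dict[Tuple[int, int], float] = {}
    for point in points:
        projected = project_lidar_point(point, rotation, translation, fx, fy, cx, cy)
        if projected is None:
            continue
        u, v, depth = projected
        col, row = int(round(u)), int(round(v))
        if not (0 <= col < width and 0 <= row < height):
            continue  # the point falls outside the camera's field of view
        key = (col, row)
        # Keep the closest return when several points land on the same pixel.
        if key not in depth_at_pixel or depth < depth_at_pixel[key]:
            depth_at_pixel[key] = depth
    return depth_at_pixel
```

In this sketch the result is a sparse depth map keyed by pixel. Such a map could be stacked with the RGB channels as an extra input to a detection network, though whether the patented method does this cannot be confirmed from the truncated text.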
