Object pose estimation method and system based on deformation convolution network

A technology combining convolutional networks and pose estimation, applied in the field of computer vision. It addresses problems such as reduced accuracy, reduced algorithm efficiency, and time-consuming processing, and achieves improved efficiency, simplified estimation steps, and improved accuracy and robustness.


Examples

Embodiment 1

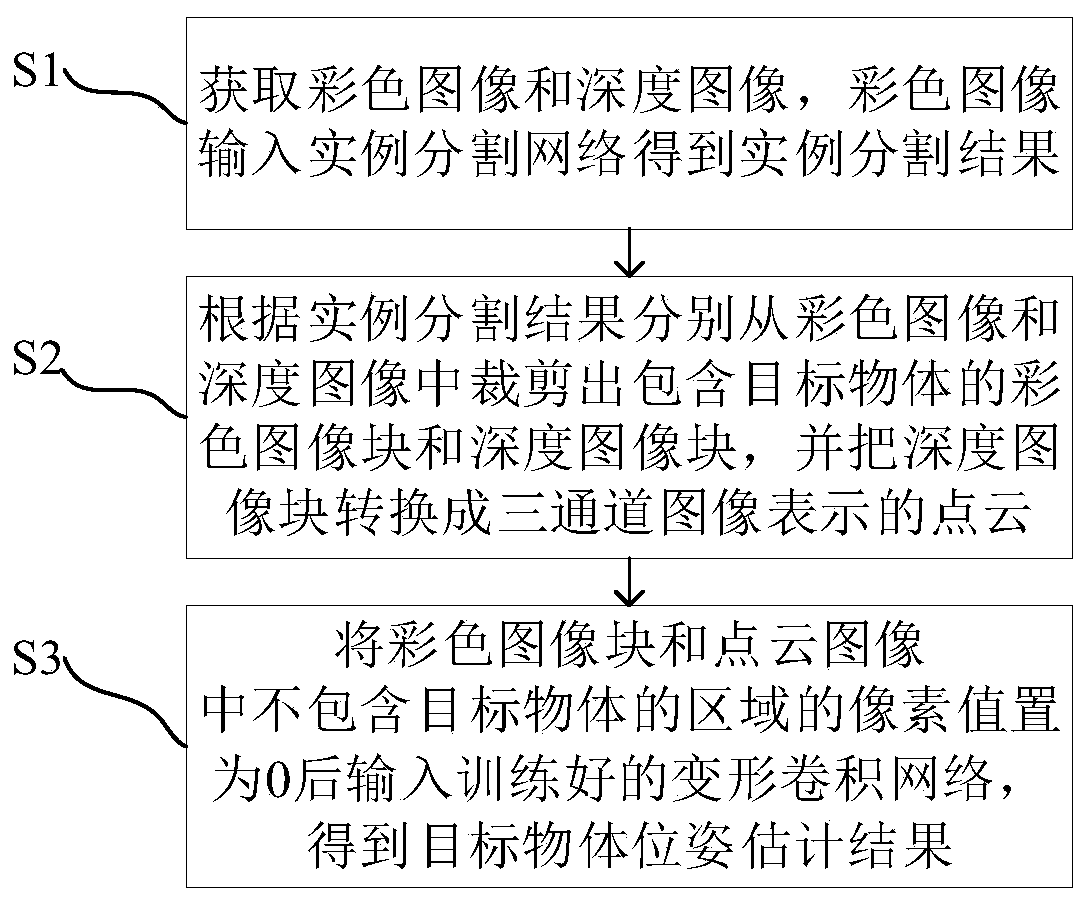

[0049] An object pose estimation method based on a deformable convolutional network, as shown in Figure 1, includes:

[0050] S1. Obtain the color image and depth image of the target object, input the color image of the target object into the trained instance segmentation network, and obtain the instance segmentation result;

[0051] S2. Cut out the color image block and the depth image block containing the target object from the color image and the depth image, respectively, according to the instance segmentation result, and convert the depth image block into a point cloud represented as a three-channel image;

[0052] S3. Set the pixel values of the regions of the color image block and the point cloud that do not contain the target object to 0, then input both into the trained deformable convolution network to obtain the target object pose estimation result. The output of the deformable convolution network includes multiple target object pose values and their corresponding confidences, the target object pose ...
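Steps S1 to S3 can be sketched in NumPy as a preprocessing pipeline. This is a minimal sketch under assumptions the source does not state:

- The segmentation network returns per-instance boolean masks with class labels and scores, in the style of Mask R-CNN.
- The depth map is in metres.
- The depth-to-point-cloud conversion uses a pinhole camera model with intrinsics `fx, fy, cx, cy`.
- The final step keeps the candidate pose with the highest confidence.

The source text is truncated before it states how the final pose is chosen, so the rule in `select_pose` is hypothetical. All function names are illustrative.

```python
import numpy as np

def pick_target_mask(masks, labels, scores, target_label):
    """S1 (assumed output format): return the highest-scoring mask of the target class."""
    candidates = [i for i, lab in enumerate(labels) if lab == target_label]
    if not candidates:
        raise ValueError("target object not found in segmentation result")
    best = max(candidates, key=lambda i: scores[i])
    return masks[best]

def crop_box_from_mask(mask):
    """Tight bounding box (row0, row1, col0, col1) around a boolean mask; end indices exclusive."""
    rows = np.flatnonzero(np.any(mask, axis=1))
    cols = np.flatnonzero(np.any(mask, axis=0))
    return int(rows[0]), int(rows[-1]) + 1, int(cols[0]), int(cols[-1]) + 1

def depth_to_xyz_image(depth, fx, fy, cx, cy, u0=0, v0=0):
    """S2: back-project a depth block into a 3-channel (X, Y, Z) image (pinhole model assumed).

    (u0, v0) is the column/row position of the block in the full image, so the
    principal point (cx, cy) stays expressed in full-image pixel coordinates.
    """
    h, w = depth.shape
    v, u = np.mgrid[v0:v0 + h, u0:u0 + w]  # pixel row/column grids of the block
    z = depth.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1)

def prepare_inputs(color, depth, mask, intrinsics):
    """S2 + first part of S3: crop both images with the same box, back-project depth,
    and set pixels outside the target mask to 0 in both blocks."""
    r0, r1, c0, c1 = crop_box_from_mask(mask)
    color_block = color[r0:r1, c0:c1].copy()
    xyz_block = depth_to_xyz_image(depth[r0:r1, c0:c1], *intrinsics, u0=c0, v0=r0)
    block_mask = mask[r0:r1, c0:c1]
    color_block[~block_mask] = 0  # zeroes all channels of non-target pixels
    xyz_block[~block_mask] = 0
    return color_block, xyz_block

def select_pose(poses, confidences):
    """Hypothetical post-processing: keep the candidate pose with the highest confidence."""
    best = int(np.argmax(confidences))
    return poses[best], float(confidences[best])
```

Cropping the color and depth images with the same box keeps the color block and the XYZ block pixel-aligned, so the network receives two matching three-channel inputs.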

Embodiment 2

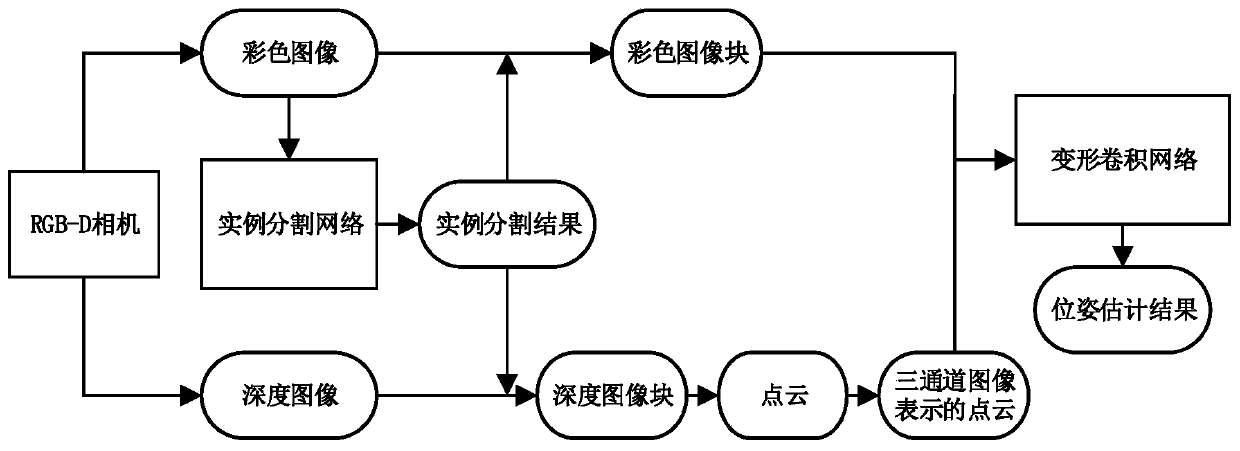

[0065] An object pose estimation system based on a deformable convolutional network, corresponding to Embodiment 1 and shown in Figure 2, includes an RGB-D camera, an instance segmentation module, a target cropping module, a conversion processing module, and a deformable convolution module;

[0066] The RGB-D camera acquires the color image and depth image of the target object;

[0067] The instance segmentation module segments the color image to obtain the instance segmentation result;

[0068] The target cropping module cuts out the color image block and the depth image block containing the target object from the color image and the depth image respectively according to the instance segmentation result;

[0069] The conversion processing module converts the depth image block into a point cloud represented by a three-channel image, and sets the pixel value of a region not containing the target object in the color image block and the point cloud image to 0;

[0070] The de...
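The visible text does not describe the internals of the deformable convolution module. The following is therefore only a sketch of the standard deformable convolution operation (DCNv1, Dai et al., 2017), not the patent's network.

It makes these assumptions:

- a single input and output channel;
- a 3x3 kernel, stride 1, and zero padding;
- per-tap offsets supplied as an array, rather than predicted by a parallel convolution branch as in the full DCN design.

Each kernel tap samples the input at its regular grid position plus a fractional offset, using bilinear interpolation. All names are illustrative.

```python
import numpy as np

def bilinear_sample(img, y, x):
    """Sample a 2-D array at fractional (y, x); neighbours outside the image count as 0."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    dy, dx = y - y0, x - x0
    val = 0.0
    for yy, wy in ((y0, 1.0 - dy), (y0 + 1, dy)):
        for xx, wx in ((x0, 1.0 - dx), (x0 + 1, dx)):
            if 0 <= yy < h and 0 <= xx < w:
                val += wy * wx * img[yy, xx]
    return val

def deform_conv2d(img, weight, offsets):
    """Single-channel deformable convolution, stride 1, output the same size as the input.

    img:     (H, W) input feature map
    weight:  (k, k) kernel, k odd
    offsets: (H, W, k*k, 2) (dy, dx) offset per output location and kernel tap,
             with taps ordered row-major over the kernel
    """
    h, w = img.shape
    k = weight.shape[0]
    r = k // 2
    taps = [(i - r, j - r) for i in range(k) for j in range(k)]  # regular grid positions
    out = np.zeros((h, w))
    for py in range(h):
        for px in range(w):
            acc = 0.0
            for n, (ty, tx) in enumerate(taps):
                dy, dx = offsets[py, px, n]
                # sample at the regular tap position shifted by its offset
                acc += weight[ty + r, tx + r] * bilinear_sample(img, py + ty + dy, px + tx + dx)
            out[py, px] = acc
    return out
```

With all offsets set to zero, this reduces to an ordinary zero-padded convolution (cross-correlation). For real models, `torchvision.ops.deform_conv2d` provides a batched, multi-channel implementation.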


© 2025 PatSnap. All rights reserved.