Voice lip shape fitting method, system and storage medium

A voice and lip shape fitting technology, applied in voice analysis, voice recognition, image data processing and related fields. It addresses the large model computation, cumbersome steps and slow calculation speed of existing approaches, and achieves quantified fitting, higher computational efficiency and reduced time cost.


Examples

Embodiment 1

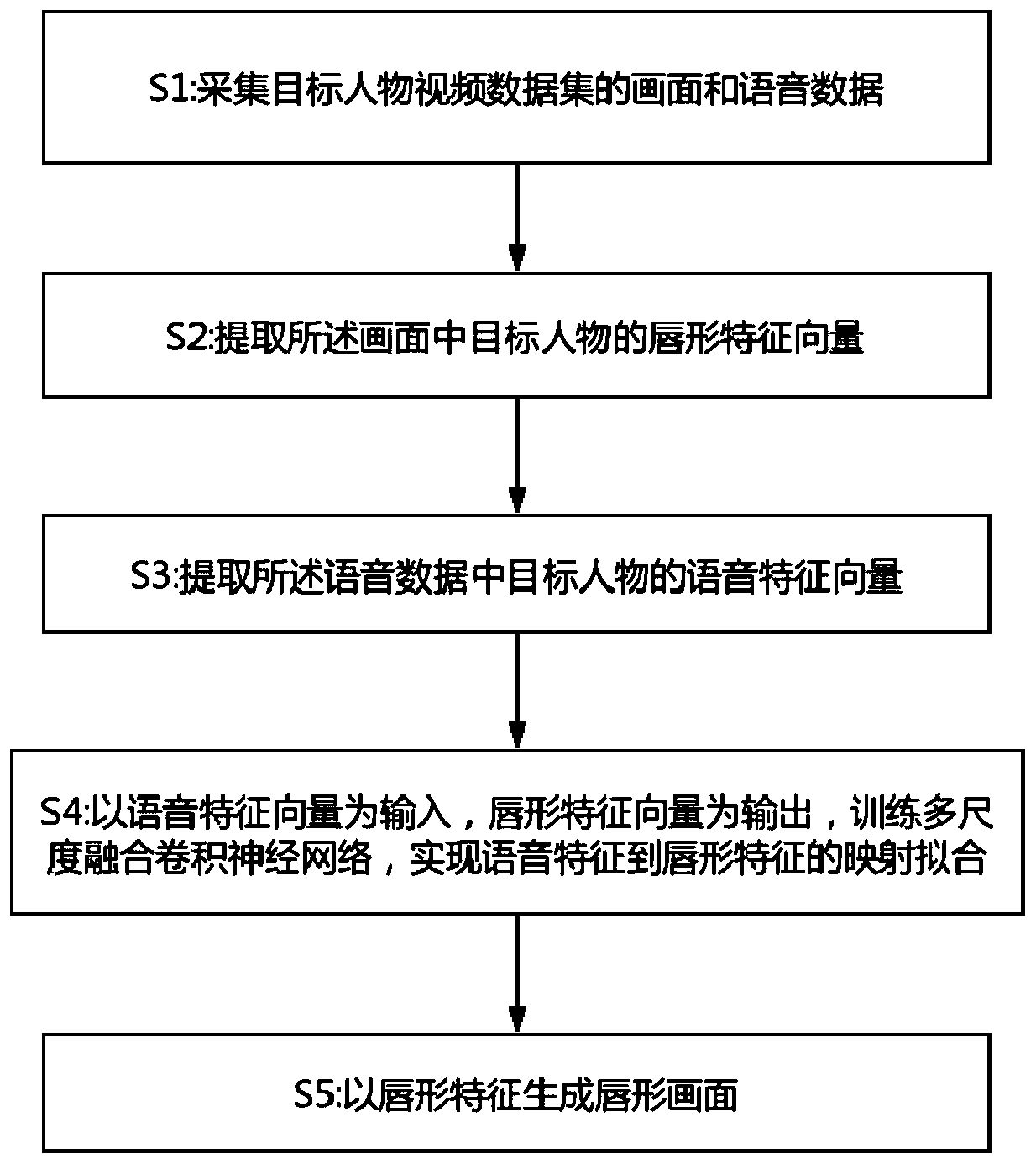

[0053] Figure 1 shows the flow chart of the speech lip shape fitting method based on a multi-scale fusion convolutional neural network in this embodiment.

[0054] The speech lip shape fitting method based on the multi-scale fusion convolutional neural network of the present embodiment comprises the following steps:

[0055] S1: Collect the image data and voice data of the target person's video data set. In this step, the image data and voice data must be collected simultaneously and at the same frame rate, and the image data must be collected with a three-dimensional structured-light depth camera. In this embodiment, a face tracking program is written with macOS and ARKit and run on an iPhone X, whose front camera collects the video image data at 60 frames per second.
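Step S1 requires the image and voice streams to be captured simultaneously at the same frame rate. One way to make that pairing concrete is to cut the audio into one window per video frame. The sketch below assumes a known audio sample rate (16 kHz in the example, which the text does not specify); `frame_aligned_windows` is a hypothetical helper, not part of the described system.

```python
# Hypothetical illustration: split an audio track into windows that line up
# one-to-one with video frames captured at the same time. The 60 fps value
# comes from the text; the audio sample rate is an assumption.
from typing import List, Tuple


def frame_aligned_windows(num_samples: int, sample_rate: int,
                          fps: int = 60) -> List[Tuple[int, int]]:
    """Return (start, end) audio sample indices for each video frame.

    Frame k covers the time interval [k / fps, (k + 1) / fps). Integer
    arithmetic avoids accumulating floating-point drift across frames,
    and consecutive windows share boundaries so no sample is skipped.
    """
    num_frames = (num_samples * fps) // sample_rate
    windows = []
    for k in range(num_frames):
        start = (k * sample_rate) // fps
        end = ((k + 1) * sample_rate) // fps
        windows.append((start, end))
    return windows


if __name__ == "__main__":
    # One second of 16 kHz audio gives 60 windows of 266 or 267 samples.
    w = frame_aligned_windows(num_samples=16000, sample_rate=16000, fps=60)
    print(len(w), w[0], w[-1])  # prints: 60 (0, 266) (15733, 16000)
```

Because window boundaries are computed from the frame index rather than by repeatedly adding a fractional step, the alignment stays exact over long recordings.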

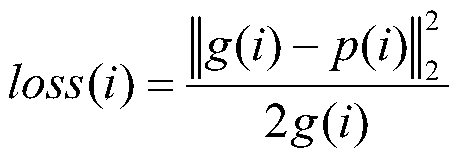

[0056] S2: Extract the lip shape feature vector of the target person in the i...
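The excerpt of step S2 is truncated, so the exact definition of the lip shape feature vector is not given here. Since S1 uses ARKit face tracking, one plausible assumption is that each frame's lip shape is described by ARKit-style blendshape coefficients (values between 0 and 1, keyed by names such as `jawOpen` or `mouthPucker`). The hypothetical helper below simply selects a fixed, illustrative subset of those coefficients in a fixed order.

```python
# Hypothetical sketch: build a fixed-order lip shape feature vector from the
# per-frame blendshape coefficients (values in [0, 1]) reported by a face
# tracker such as ARKit. Which coefficients the patent actually uses is NOT
# stated in this excerpt; the list below is an illustrative assumption.
from typing import Dict, List

LIP_KEYS: List[str] = [
    "jawOpen", "mouthClose", "mouthFunnel", "mouthPucker",
    "mouthSmileLeft", "mouthSmileRight",
    "mouthStretchLeft", "mouthStretchRight",
    "mouthRollLower", "mouthRollUpper",
]


def lip_feature_vector(blendshapes: Dict[str, float]) -> List[float]:
    """Pick the lip-related coefficients in a fixed order.

    Missing keys default to 0.0 (neutral) and values are clamped to [0, 1],
    so every frame yields a vector of the same length and range.
    """
    return [min(1.0, max(0.0, blendshapes.get(k, 0.0))) for k in LIP_KEYS]


def lip_feature_sequence(frames: List[Dict[str, float]]) -> List[List[float]]:
    """Convert a sequence of tracked frames into one lip vector per frame."""
    return [lip_feature_vector(f) for f in frames]
```

For example, a frame dictionary containing `jawOpen`, `mouthFunnel` and an unrelated `eyeBlinkLeft` produces a 10-element vector in which only the first and third entries are non-zero; the eye coefficient is ignored.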

Embodiment 2

[0070] This embodiment provides a system for applying the voice lip shape fitting method of Embodiment 1, and its specific scheme is as follows:

[0071] The system includes a data acquisition module, a lip shape feature vector extraction module, a voice feature vector extraction module, a multi-scale fusion convolutional neural network training module and a voice lip shape fitting module;

[0072] The data acquisition module is used to collect the image data and voice data of the target person video data set;

[0073] The lip shape feature vector extraction module is used to extract the lip shape feature vector of the target person in the image data;

[0074] The voice feature vector extraction module is used to extract the voice feature vector of the target person in the voice data;
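The excerpt does not say which acoustic features the voice feature vector extraction module computes; MFCCs are a common choice for speech-driven animation. To keep the sketch short, it instead assumes a simpler per-window feature: log energies in equal-width frequency bands of a Hann-windowed FFT. `log_band_energies` and `voice_feature_sequence` are hypothetical names, and `windows` is assumed to be a list of (start, end) sample indices, one per video frame.

```python
# Hypothetical voice feature sketch (assumption: log band energies, not the
# patent's stated feature). One feature vector is produced per audio window.
import numpy as np


def log_band_energies(window: np.ndarray, n_bands: int = 8) -> np.ndarray:
    """Hann-window the samples, take the power spectrum, and sum it into
    n_bands contiguous, (nearly) equal-width frequency bands.

    Returns the natural log of each band's energy; a small constant keeps
    silent windows finite instead of -inf.
    """
    x = np.asarray(window, dtype=np.float64)
    x = x * np.hanning(len(x))                 # reduce spectral leakage
    power = np.abs(np.fft.rfft(x)) ** 2        # one-sided power spectrum
    bands = np.array_split(power, n_bands)     # contiguous frequency bands
    energies = np.array([b.sum() for b in bands])
    return np.log(energies + 1e-10)


def voice_feature_sequence(audio: np.ndarray, windows, n_bands: int = 8) -> np.ndarray:
    """Stack one feature vector per (start, end) window into a (T, n_bands)
    array. `windows` must be non-empty."""
    return np.stack([log_band_energies(audio[s:e], n_bands) for s, e in windows])
```

A low-frequency tone concentrates its energy in the first band and a high-frequency tone in a later one, which is the kind of per-frame spectral summary a speech-to-lip model can learn from.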

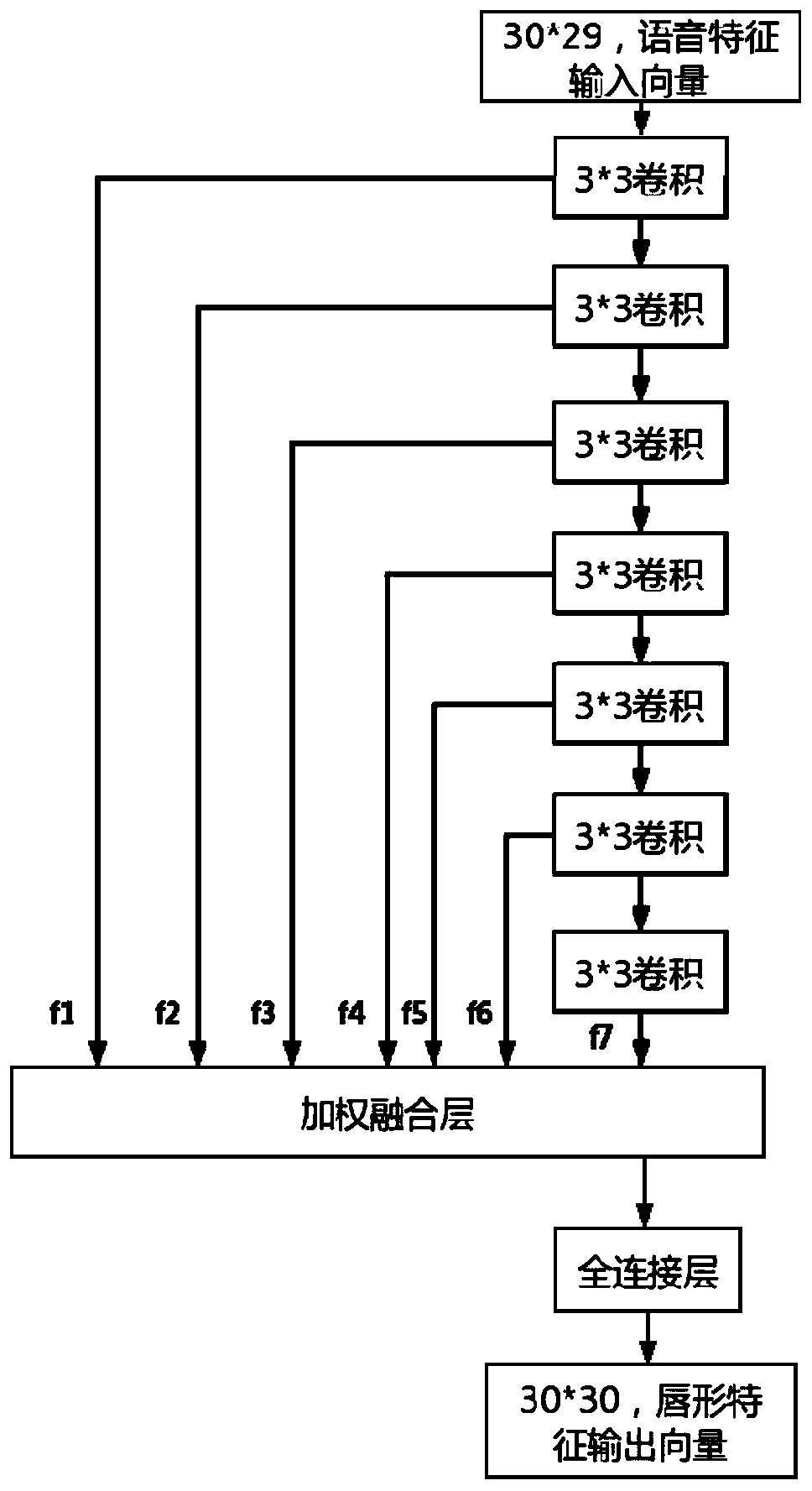

[0075] The multi-scale fusion convolutional neural network training module is used to train the multi-scale fusion convolutional neural network with the speech feature vector as input and the lip shape fea...
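The layer configuration of the multi-scale fusion convolutional neural network is truncated in this excerpt. The sketch below only illustrates the general idea behind a multi-scale fusion design under stated assumptions: parallel 1D convolutions over time with different kernel sizes (3, 5 and 7 here, chosen arbitrarily) see speech context at different scales, their ReLU outputs are concatenated (the fusion step), and a linear layer maps the fused features to one lip shape vector per frame. Only the forward pass and a mean-squared-error objective are shown; actual training would backpropagate this loss with an optimizer, typically in a deep learning framework. All names are hypothetical.

```python
# Hypothetical multi-scale fusion network (forward pass + loss only).
# Kernel sizes, channel counts and layer layout are assumptions.
import numpy as np


def conv1d_same(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """1D convolution (CNN-style cross-correlation) over time.

    x: (T, C_in); w: (K, C_in, C_out) with odd K; b: (C_out,).
    The time axis is zero-padded so the output also has T steps.
    """
    k = w.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))
    out = np.empty((x.shape[0], w.shape[2]))
    for t in range(x.shape[0]):
        # Window (K, C_in) contracted with w over K and C_in -> (C_out,)
        out[t] = np.tensordot(xp[t:t + k], w, axes=([0, 1], [0, 1])) + b
    return out


class MultiScaleFusionNet:
    """Parallel conv branches at several temporal scales, fused by
    concatenation, followed by a per-frame linear output layer."""

    def __init__(self, in_dim: int, lip_dim: int, channels: int = 16,
                 kernel_sizes=(3, 5, 7), seed: int = 0):
        rng = np.random.default_rng(seed)
        self.branches = []
        for k in kernel_sizes:
            # He-style initialisation for ReLU branches
            w = rng.normal(0.0, np.sqrt(2.0 / (k * in_dim)), size=(k, in_dim, channels))
            self.branches.append((w, np.zeros(channels)))
        fused_dim = channels * len(kernel_sizes)
        self.w_out = rng.normal(0.0, np.sqrt(1.0 / fused_dim), size=(fused_dim, lip_dim))
        self.b_out = np.zeros(lip_dim)

    def forward(self, speech: np.ndarray) -> np.ndarray:
        """speech: (T, in_dim) -> predicted lip vectors: (T, lip_dim)."""
        feats = [np.maximum(conv1d_same(speech, w, b), 0.0) for w, b in self.branches]
        fused = np.concatenate(feats, axis=1)   # multi-scale fusion
        return fused @ self.w_out + self.b_out


def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error between predicted and target lip vectors."""
    return float(np.mean((pred - target) ** 2))
```

Here a `(T, in_dim)` sequence of speech features yields a `(T, lip_dim)` sequence of predicted lip vectors, matching the input/output pairing described for the training module.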

Embodiment 3

[0078] This embodiment provides a storage medium storing a program; when the program runs, it executes the steps of the voice lip shape fitting method of Embodiment 1.
