Neural network compression method using block cyclic matrix

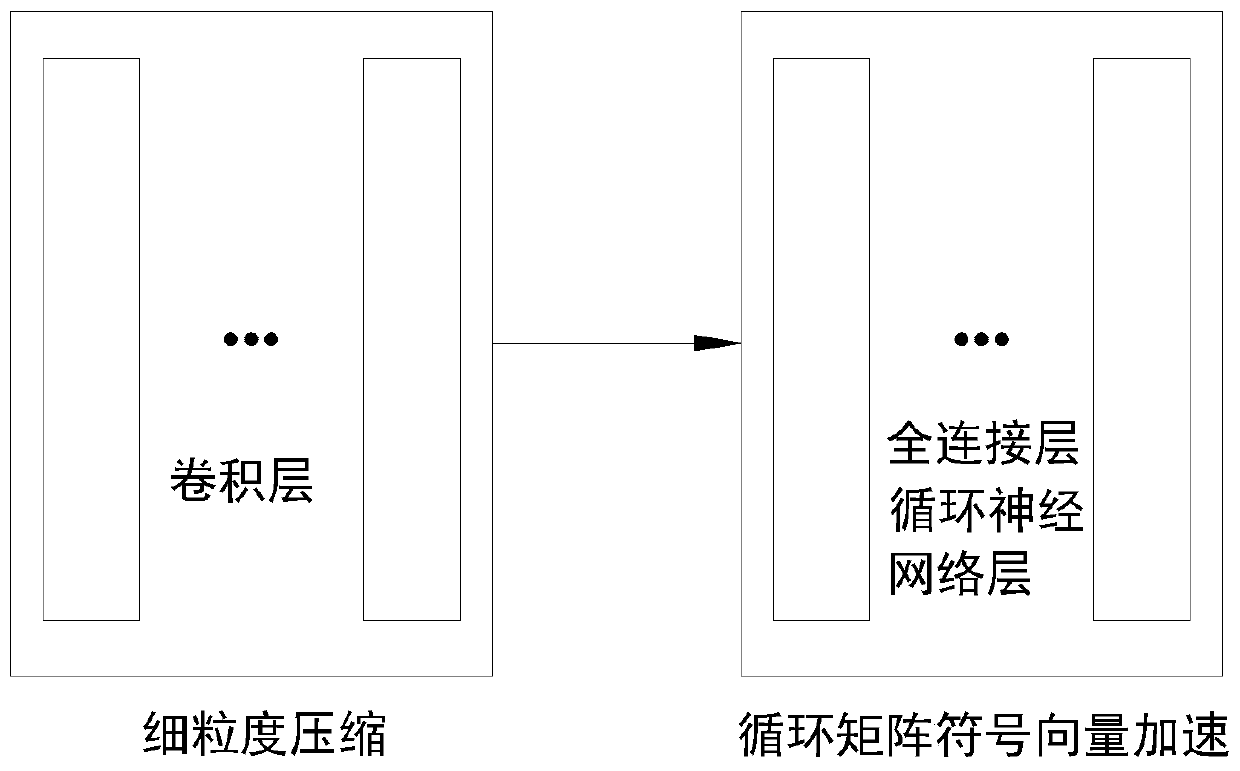

A neural network compression technology based on block circulant matrices. It addresses problems such as hardware matching and performance degradation associated with projection correlation, while ensuring accuracy, increasing convergence speed, and improving compression performance.

Examples

Embodiment 1

[0074] Embodiment 1: VGG16 experiment

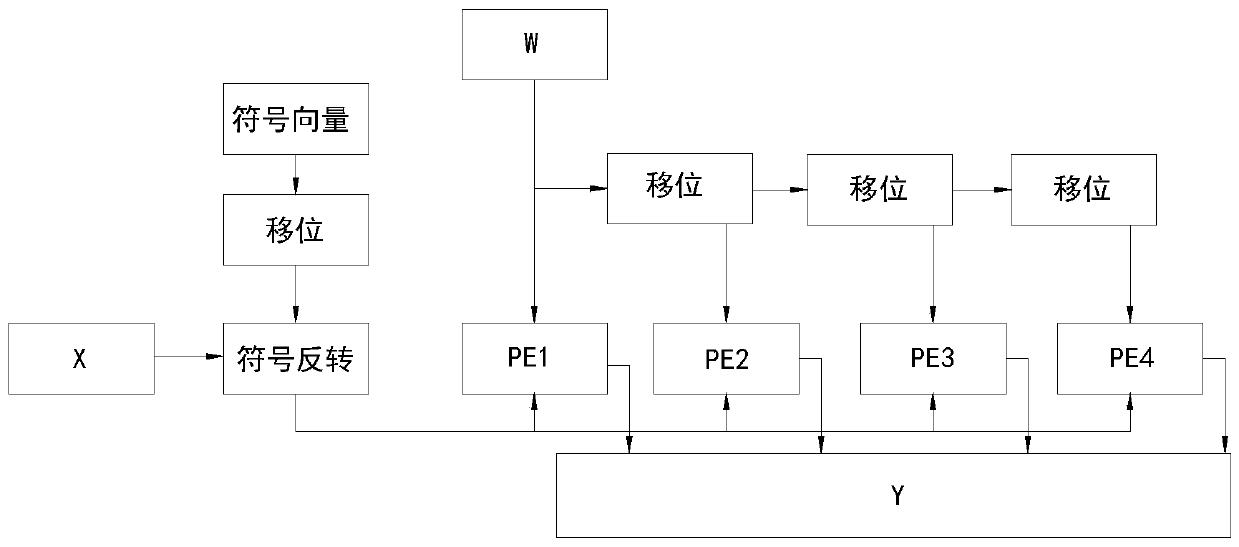

[0075] When the sign vector is generated, n_sign equals the block size, with n_sign = 32 and block_sign = 25. The expansion rule is as follows. Starting from the basic sign vector, perform block_sign - 1 successive right cyclic shifts of 1 position each. Then concatenate the basic vector and the shifted copies into an 800-dimensional (32 × 25) sign vector.
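The shift-and-concatenate rule can be sketched as below. This is a minimal illustration under stated assumptions. The basic vector is assumed to hold random ±1 entries, because the excerpt does not say how it is drawn. The function names are hypothetical.

```python
import random

def rotate_right(vec, k):
    """Cyclic right shift by k positions: the last k entries wrap to the front."""
    k %= len(vec)
    return vec[len(vec) - k:] + vec[:len(vec) - k]

def expand_sign_vector_shift(base, block_sign):
    """Concatenate `base` with its block_sign - 1 successive right shifts (1 position each)."""
    out = []
    for k in range(block_sign):  # k = 0 keeps the basic vector unshifted
        out.extend(rotate_right(base, k))
    return out

# Hypothetical: the excerpt does not specify how the basic sign vector is generated.
rng = random.Random(0)
base = [rng.choice((-1, 1)) for _ in range(32)]   # n_sign = 32
sign_vector = expand_sign_vector_shift(base, block_sign=25)
print(len(sign_vector))  # 800
```

Each 32-entry chunk is the basic vector rotated one position further than the previous chunk. That gives 25 chunks and 800 entries in total.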

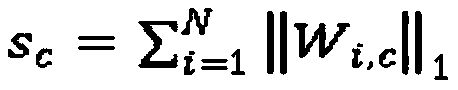

[0076] The penultimate fully connected layer of the VGG16 network is replaced with the block circulant layer proposed in this scheme. The convolutional layers of the network are pruned with a fine-grained compression method.
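The title indicates that the replacement layer is built from block circulant matrices. A common way to evaluate such a layer is sketched below as an assumption. The dense weight matrix is partitioned into k × k blocks, and each block is a circulant matrix stored as a single length-k vector. Each block-vector product is then computed as a circular convolution through the FFT.

This is the general block circulant technique, not necessarily the patent's exact layer. In particular, the excerpt does not show how the sign vector enters the layer. The function names and shapes are hypothetical.

```python
import numpy as np

def circulant(c):
    """Dense k x k circulant matrix with first column c: C[r, s] = c[(r - s) % k]."""
    k = len(c)
    idx = (np.arange(k)[:, None] - np.arange(k)[None, :]) % k
    return c[idx]

def block_circulant_matvec(W, x):
    """Compute y = W_full @ x, where W has shape (p, q, k) and block (i, j) of the
    (p*k) x (q*k) matrix W_full is circulant(W[i, j]). Uses C @ v = ifft(fft(c) * fft(v))."""
    p, q, k = W.shape
    X = np.fft.fft(x.reshape(q, k), axis=-1)    # spectra of the q input blocks
    Wf = np.fft.fft(W, axis=-1)                 # spectra of every block's defining vector
    Y = np.einsum("pqk,qk->pk", Wf, X)          # sum over input blocks in the frequency domain
    return np.fft.ifft(Y, axis=-1).real.reshape(p * k)

def dense_from_blocks(W):
    """Materialize W_full; only used to check the FFT path."""
    p, q, _ = W.shape
    return np.block([[circulant(W[i, j]) for j in range(q)] for i in range(p)])

rng = np.random.default_rng(0)
W = rng.standard_normal((4, 3, 8))   # a 32 x 24 layer with block size k = 8
x = rng.standard_normal(3 * 8)
print(np.allclose(block_circulant_matvec(W, x), dense_from_blocks(W) @ x))  # True
print(W.size, dense_from_blocks(W).size)  # 96 768
```

The layer stores p·q·k weights instead of p·q·k². The FFT path also avoids materializing the dense matrix.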

[0077] The experiments were performed on the large-scale ImageNet2012 dataset, and the compression ratio and Top-1 accuracy were measured. The results are shown in Tables 1, 2, and 3 below.
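The block circulant layer's share of the parameter compression can be estimated with simple arithmetic. A k × k circulant block is determined by one length-k vector, so a block circulant layer stores 1/k of the dense weights.

The sketch below makes several assumptions:

- The penultimate VGG16 fully connected layer is the standard 4096 → 4096 layer.
- The block size is 32, as in Embodiment 1.
- Biases and the convolutional pruning are ignored.

It illustrates the formula only and is not the result reported in Table 2. The helper name is hypothetical.

```python
def fc_params(n_in, n_out, block_size=None):
    """Weight count of an n_in -> n_out FC layer (bias ignored); block circulant if block_size is given."""
    if block_size is None:
        return n_in * n_out
    if n_in % block_size or n_out % block_size:
        raise ValueError("this sketch assumes both dimensions are multiples of block_size")
    # (n_out / k) * (n_in / k) blocks, each stored as one length-k vector
    return (n_out // block_size) * (n_in // block_size) * block_size

dense = fc_params(4096, 4096)
bc = fc_params(4096, 4096, block_size=32)
print(dense, bc, dense / bc)  # 16777216 524288 32.0
```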

[0078] The results show that, compared with the uncompressed VGG16 network, the number of parameters and the computational complexity are greatly reduced at the cost of only a small loss in accuracy.

Embodiment 2

[0079] Embodiment 2: ResNet50 experiment

[0080] When the sign vector is generated, n_sign = 40 and block_sign = 20. The expansion rule is to repeat the basic sign vector 20 times and concatenate the copies into an 800-dimensional (40 × 20) sign vector.
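This embodiment tiles the basic sign vector without shifting it. A minimal sketch follows, assuming a random ±1 basic vector, since the excerpt does not state how it is drawn. The function name is hypothetical.

```python
import random

def expand_sign_vector_repeat(base, block_sign):
    """Tile the basic sign vector block_sign times (no shifting)."""
    return list(base) * block_sign

# Hypothetical: how the basic sign vector is generated is not given in the excerpt.
rng = random.Random(0)
base = [rng.choice((-1, 1)) for _ in range(40)]   # n_sign = 40
sign_vector = expand_sign_vector_repeat(base, block_sign=20)
print(len(sign_vector))  # 800
```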

[0081] All fully connected layers of ResNet50 are replaced with the block circulant layer proposed in this scheme. The convolutional layers of the network are pruned with the fine-grained compression method.
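The excerpt does not define the "fine-grained compression method" used on the convolutional layers. As a stand-in, the sketch below uses element-wise (unstructured) magnitude pruning, a common fine-grained pruning technique. It zeroes the convolution weights with the smallest absolute values. The function name, kernel shape, and sparsity level are hypothetical and are not taken from the patent.

```python
import numpy as np

def magnitude_prune(weight, sparsity):
    """Unstructured magnitude pruning: zero the int(sparsity * size) entries with the
    smallest |w|. Returns the pruned copy and the boolean keep-mask."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = np.abs(weight).ravel()
    n_prune = int(sparsity * flat.size)
    keep = np.ones(flat.size, dtype=bool)
    keep[np.argpartition(flat, n_prune)[:n_prune]] = False  # indices of the n_prune smallest
    keep = keep.reshape(weight.shape)
    return weight * keep, keep

rng = np.random.default_rng(0)
conv_w = rng.standard_normal((64, 32, 3, 3))  # hypothetical kernel (out, in, kh, kw)
pruned, keep = magnitude_prune(conv_w, sparsity=0.9)
print(keep.size, int(keep.sum()))  # total weights, weights kept
```

In practice, the mask would typically be reapplied during fine-tuning so that pruned weights stay at zero.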

[0082] The experiments were performed on the large-scale ImageNet2012 dataset, and the compression ratio and Top-1 accuracy were measured. The results are shown in Tables 1, 2, and 3 below.

[0083] The results show that, compared with the uncompressed ResNet50 network, the number of parameters and the computational complexity are greatly reduced at the cost of only a small loss in accuracy.

[0084] Table 1: Top-1 accuracy (%) of the networks

[0085]

[0086] Table 2: Parameter compression ratio of the networks

[00...
