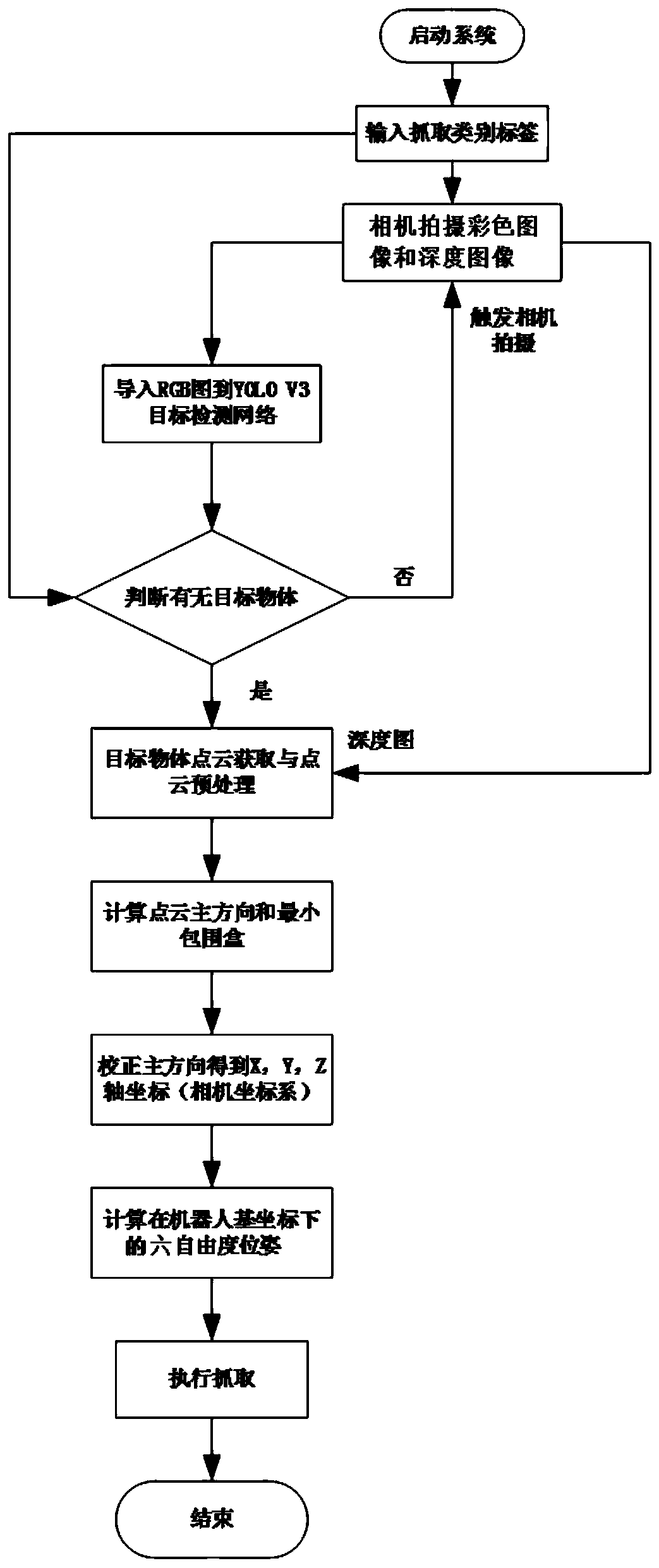

Robot autonomous classification grabbing method based on YOLOv3

This invention relates to robotics and multi-degree-of-freedom technology, with applications in instruments, manipulators, and image analysis. It addresses the poor robustness and weak generalization ability of existing approaches and achieves high precision, strong generalization ability, and fast speed.


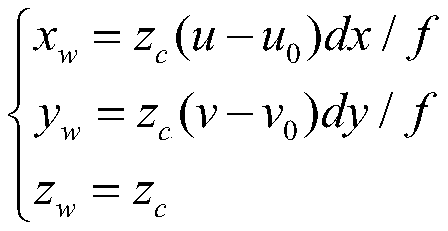


Detailed Description of the Embodiments

[0044] To further explain the content, features, and effects of the present invention, the following embodiments are described in detail with reference to the accompanying drawings:

[0045] The English terms and abbreviations used in this application are defined as follows:

[0046] YOLOv3: a single-stage object detection algorithm proposed by Joseph Redmon in 2018;

[0047] PCA: principal component analysis;
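The application lists PCA without further detail at this point. As an assumption about how it might serve grasping, the sketch below applies PCA to the 2D pixel coordinates of a segmented object to find its centroid and main axis, the direction of largest variance, from which an in-plane grasp angle could be derived. The function name and the use of pixel coordinates are hypothetical.

```python
import numpy as np


def principal_axis(points: np.ndarray):
    """Return (centroid, unit principal axis, angle in degrees in [0, 180)) of an (N, 2) point set.

    Hypothetical helper: 'points' are assumed to be (x, y) pixel coordinates of one object.
    """
    centroid = points.mean(axis=0)
    centered = points - centroid
    cov = np.cov(centered, rowvar=False)    # 2x2 covariance matrix of the coordinates
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh: symmetric input, eigenvalues ascending
    axis = eigvecs[:, np.argmax(eigvals)]   # eigenvector of the largest eigenvalue
    # The sign of an eigenvector is arbitrary, so fold the angle into [0, 180).
    angle = float(np.degrees(np.arctan2(axis[1], axis[0])) % 180.0)
    return centroid, axis, angle


pts = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
c, a, ang = principal_axis(pts)
print(c, a, ang)
```

Points lying on a diagonal line give a centroid of (1.5, 1.5) and an axis angle of about 45 degrees. Note that in image coordinates the y axis points down, so the angle is measured clockwise from the x axis on screen.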

[0048] Darknet-53: a deep convolutional neural network used to extract image features; it is the core (backbone) module of the YOLOv3 algorithm;

[0049] Kinect: a vision sensor that captures both RGB information and depth information of objects;

[0050] RGB: three-channel color image;

[0051] RGB-D: a general term for three-channel color images and depth images;
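An RGB-D sensor such as the Kinect pairs each color pixel with a depth value, which lets a detected 2D position be lifted to a 3D point for the manipulator. The sketch below uses the standard pinhole camera model for this back-projection. It assumes the depth image is already registered to the RGB image and stored in metres. The intrinsics (fx, fy, cx, cy), the median-window handling of missing readings, and the function name are illustrative assumptions, not values from this application.

```python
import numpy as np


def deproject_pixel(u, v, depth_image, fx, fy, cx, cy, window=2):
    """Back-project pixel (u, v) to a camera-frame point (X, Y, Z) in metres (pinhole model).

    depth_image: HxW array of depths in metres, assumed aligned with the RGB image.
    Depth sensors often report 0 for missing readings, so the median of the non-zero
    depths in a (2*window+1)^2 neighbourhood is used instead of a single pixel.
    """
    h, w = depth_image.shape
    patch = depth_image[max(v - window, 0):min(v + window + 1, h),
                        max(u - window, 0):min(u + window + 1, w)]
    valid = patch[patch > 0]
    if valid.size == 0:
        raise ValueError("no valid depth around pixel")
    z = float(np.median(valid))
    x = (u - cx) * z / fx  # horizontal offset from the optical center, scaled by depth
    y = (v - cy) * z / fy
    return x, y, z


# Hypothetical intrinsics and a flat scene 0.8 m away with one missing depth reading.
depth = np.full((480, 640), 0.8)
depth[240, 420] = 0.0
print(deproject_pixel(420, 240, depth, fx=525.0, fy=525.0, cx=320.0, cy=240.0))
```

In practice, (u, v) could be the center of a detected bounding box, and real intrinsics would come from calibrating the specific sensor.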

[0052] R-CNN: region-based convolutional neural network, an object detection algorithm proposed by Ross Girshick et al. in 2014;
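Detectors in both the R-CNN family and YOLOv3 typically emit many overlapping candidate boxes for the same object. These are commonly pruned with intersection over union (IoU) and greedy non-maximum suppression (NMS). The text here does not specify which post-processing this application uses, so the following is a generic sketch. The function names and the 0.5 threshold are illustrative assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept box too much.

    Returns the indices of kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # the second box overlaps the first (IoU about 0.68) and is dropped
```

Here the first two boxes share 81 of 119 pixels of combined area, so only the higher-scoring one survives. The disjoint third box is kept, giving indices [0, 2].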

[0053] Fast R-CNN: Fast reg...
