Robotic arm grasping method based on semantic laser interaction

The invention relates to robotic arm and laser technology, applied in manipulators, program-controlled manipulators, image analysis, and related fields. It addresses the problems of unfriendly human-computer interaction, inconvenient operation, and poor user experience, and achieves a novel interaction method, improved ease of use, and convenient operation.

Image

Examples

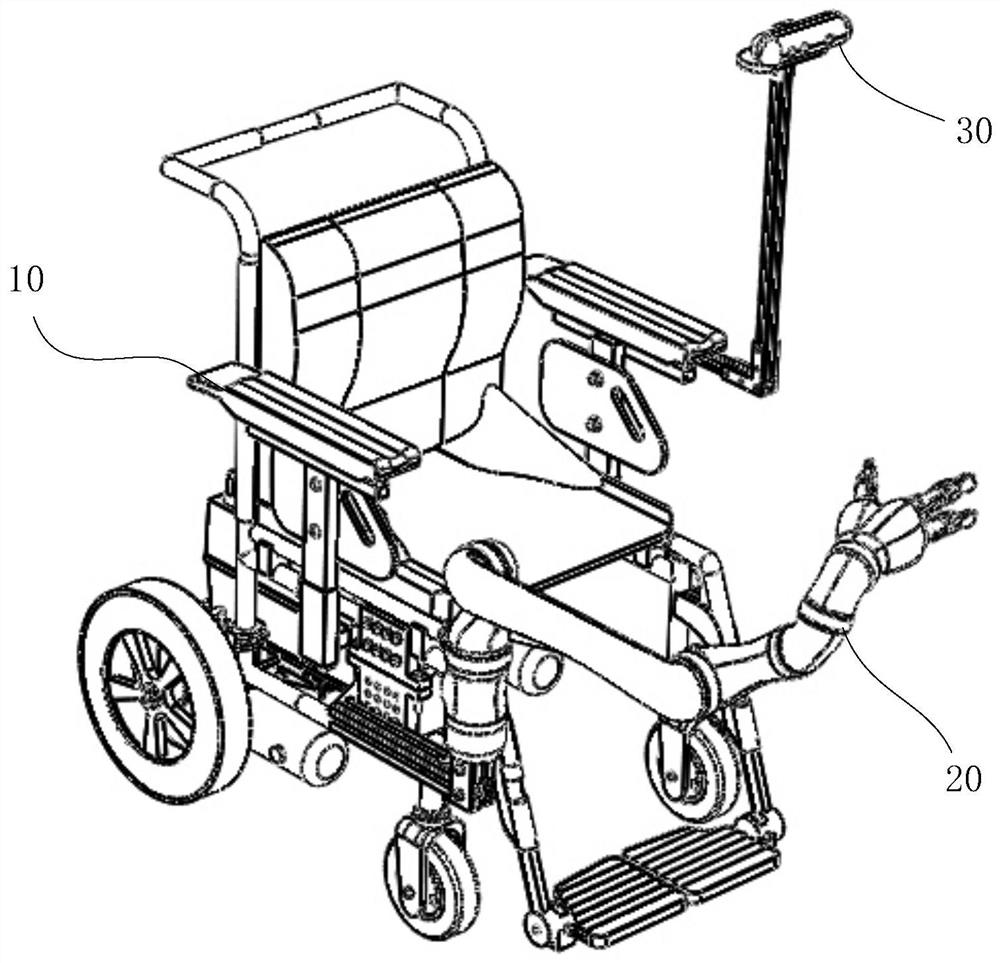

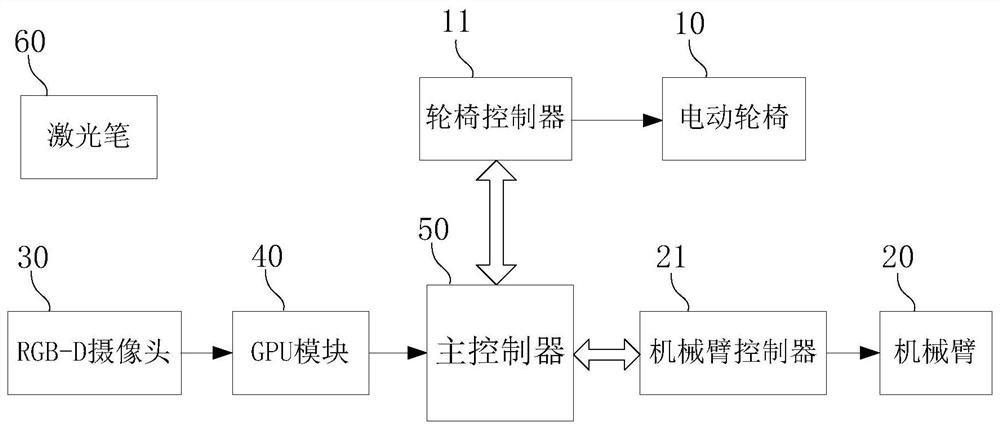

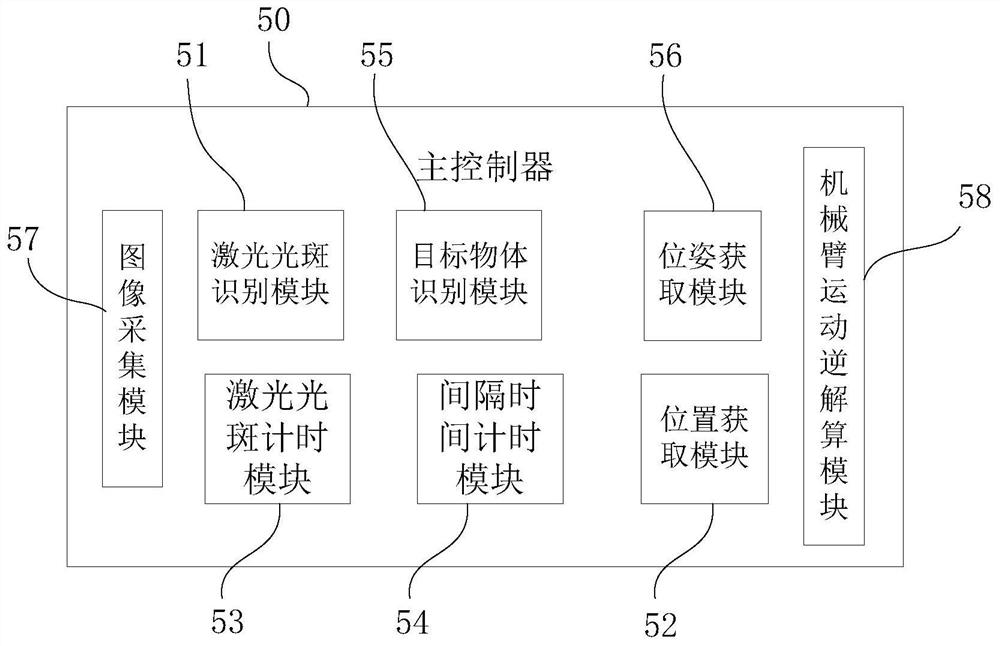

Embodiment 1

[0125] Referring to Figure 4, multiple objects (such as cups, spoons, and water bottles) are placed on the table, and the RGB-D camera 30 photographs the objects on the table in real time. An elderly user sits in the electric wheelchair 10, holds the laser pointer 60, and presses its switch to emit a laser beam onto the target object (such as a cup), forming a laser spot on the target object. In this embodiment, the laser spot serves as the main human-computer interaction medium: the system identifies the target object from the laser spot on it, and the robotic arm 20 automatically grasps the target object (that is, it grasps the object on the table and moves it to a fixed spatial position), realizing the function of picking objects by laser pointing. As shown in Figure 5, the specific control method is as follows:

[0126] Step S101: the RGB-D camera 30 photographs the area where the objects on the table are located, to acqu...
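The core idea of Embodiment 1, identifying the target object from the laser spot, can be sketched as follows. This is a minimal illustration, not the patented method: it assumes a separate detector has already produced labelled bounding boxes and that the laser spot pixel is already known. The names `Detection`, `object_under_spot`, and `deproject` are hypothetical, and the deprojection uses the standard pinhole camera model with assumed intrinsics `fx`, `fy`, `cx`, `cy`.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """Hypothetical detector output: a label and a pixel bounding box."""
    label: str
    box: Tuple[int, int, int, int]  # (u_min, v_min, u_max, v_max)


def object_under_spot(spot: Tuple[int, int],
                      detections: List[Detection]) -> Optional[Detection]:
    """Return the detection whose box contains the laser spot.

    If several boxes contain the spot (for example a cup standing on the
    table), the smallest box wins, on the assumption that it is the most
    specific object.
    """
    u, v = spot
    hits = [d for d in detections
            if d.box[0] <= u <= d.box[2] and d.box[1] <= v <= d.box[3]]
    if not hits:
        return None
    return min(hits, key=lambda d: (d.box[2] - d.box[0]) * (d.box[3] - d.box[1]))


def deproject(spot: Tuple[int, int], depth_m: float,
              fx: float, fy: float, cx: float, cy: float
              ) -> Tuple[float, float, float]:
    """Pinhole back-projection of a pixel with known depth to camera coordinates."""
    u, v = spot
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)
```

For example, with a table box covering the whole image and a cup box inside it, a spot inside the cup box selects the cup, while a spot elsewhere on the tabletop selects the table. The resulting 3D point is expressed in the camera frame and would still need to be transformed into the robotic arm's frame before grasping.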

Embodiment 2

[0153] Referring to Figure 4, multiple objects (such as cups, spoons, and water bottles) are placed on the table, and the RGB-D camera 30 photographs the objects on the table in real time. An elderly user sits in the electric wheelchair 10, holds the laser pointer 60, and presses its switch to emit a laser beam onto the first target object, a water bottle, forming a laser spot on the water bottle. After the spot has stayed there for a period of time t1, the user directs the laser from the laser pointer 60 onto a point on the second target object, the table, where it stays for a period of time t2. This embodiment recognizes two target objects from the two laser spots appearing on different target objects, so that the robotic arm 20 automatically grasps the first target object and moves it to a fixed position on the second target object (that is, the object is moved to another fixed spatial position on the tabletop). As shown in Figure 7, the spec...
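The dwell times t1 and t2 described above suggest a simple way to tell deliberate pointing from a spot that merely sweeps across an object. The sketch below is an assumption about how such a rule could work, not the control method of Figure 7: `dwell_targets` is a hypothetical helper that takes time-stamped labels of the object under the spot (with `None` when no object is hit) and confirms a target once the spot has stayed on it for the corresponding threshold.

```python
from typing import List, Optional, Sequence, Tuple


def dwell_targets(samples: Sequence[Tuple[float, Optional[str]]],
                  thresholds: Sequence[float]) -> List[str]:
    """Return the objects confirmed by dwell time, in pointing order.

    samples    -- (timestamp_s, label) pairs sorted by time; label is the
                  object under the laser spot, or None if there is none.
    thresholds -- minimum dwell per successive target, e.g. (t1, t2).
    """
    targets: List[str] = []
    current: Optional[str] = None
    start = 0.0
    for t, label in samples:
        if label != current:
            # The spot moved to a different object (or off all objects):
            # restart the dwell timer.
            current, start = label, t
        if (current is not None
                and current not in targets          # do not confirm an object twice
                and len(targets) < len(thresholds)
                and t - start >= thresholds[len(targets)]):
            targets.append(current)
    return targets
```

With samples that keep the spot on the water bottle for at least t1 and then on the table for at least t2, the function returns the bottle followed by the table. An object the spot only crosses briefly is ignored.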

Embodiment 3

[0200] Referring to Figure 4, multiple objects (such as cups, spoons, and water bottles) are placed on the table, and the RGB-D camera 30 photographs the objects on the table in real time. An elderly user sits in the electric wheelchair 10, holds the laser pointer 60, and presses its switch to emit a laser beam onto the first target object, a water bottle, forming a laser spot on the water bottle. After the spot has stayed there for a period of time t1, the user directs the laser from the laser pointer 60 onto a point on the second target object, a cup, where it stays for a period of time t2. This embodiment identifies two target objects from the laser spots on different target objects, so that the robotic arm 20 automatically grasps the first target object (the water bottle), moves it to a fixed position above the second target object (the cup), and pours water into the cup. As shown in Figure 8, the specific control method is as foll...
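Taken together, the three embodiments map the semantic classes of the pointed-at objects to different arm behaviours: one target means pick, an object followed by the table means place, and a water bottle followed by a cup means pour. The sketch below illustrates that mapping under stated assumptions. The label sets `SURFACES`, `POURABLE`, and `RECEPTACLES`, the action names, and the function `choose_action` are all hypothetical and do not come from the source.

```python
from typing import Sequence

# Hypothetical semantic classes; a real system would take these from its detector.
SURFACES = {"table"}
POURABLE = {"water bottle"}
RECEPTACLES = {"cup"}


def choose_action(targets: Sequence[str]) -> str:
    """Choose an arm behaviour from the ordered list of confirmed targets."""
    if len(targets) == 1:
        return "pick"            # Embodiment 1: grasp the single target
    if len(targets) == 2:
        first, second = targets
        if first in POURABLE and second in RECEPTACLES:
            return "pour"        # Embodiment 3: bottle over cup, then pour
        if second in SURFACES:
            return "place"       # Embodiment 2: move the object onto the table
    raise ValueError(f"no action defined for targets {list(targets)!r}")
```

Raising an error for unrecognised combinations is a deliberate choice in this sketch: a robotic arm operating near a seated user should not guess at an action when the pointing sequence is ambiguous.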
