Resource scheduling method and device, and storage medium

A resource scheduling and computer equipment technology for high-performance computing that addresses problems such as the inability to schedule resources efficiently and the inability to evaluate GPU acceleration effects effectively.

Examples

Embodiment 1

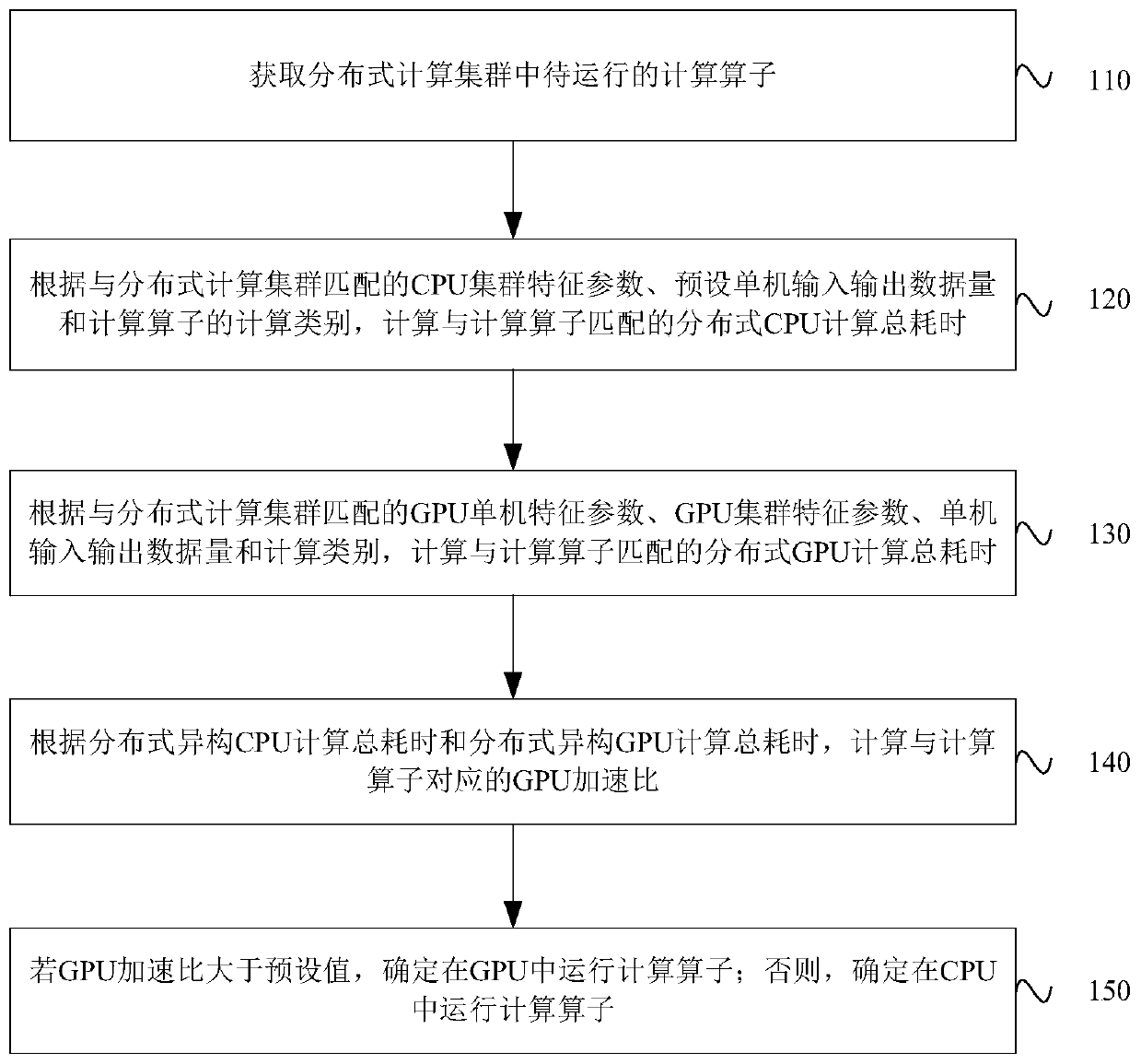

[0049] Figure 1 is a flow chart of a resource scheduling method provided by Embodiment 1 of the present invention. This embodiment is applicable to determining whether a computing operator should run on a CPU or a GPU in high-performance computing. The method can be executed by a resource scheduling device, which can be implemented in software and/or hardware and can be integrated in a processor. As shown in Figure 1, the method specifically includes:

[0050] Step 110, acquiring computing operators to be run in the distributed computing cluster.

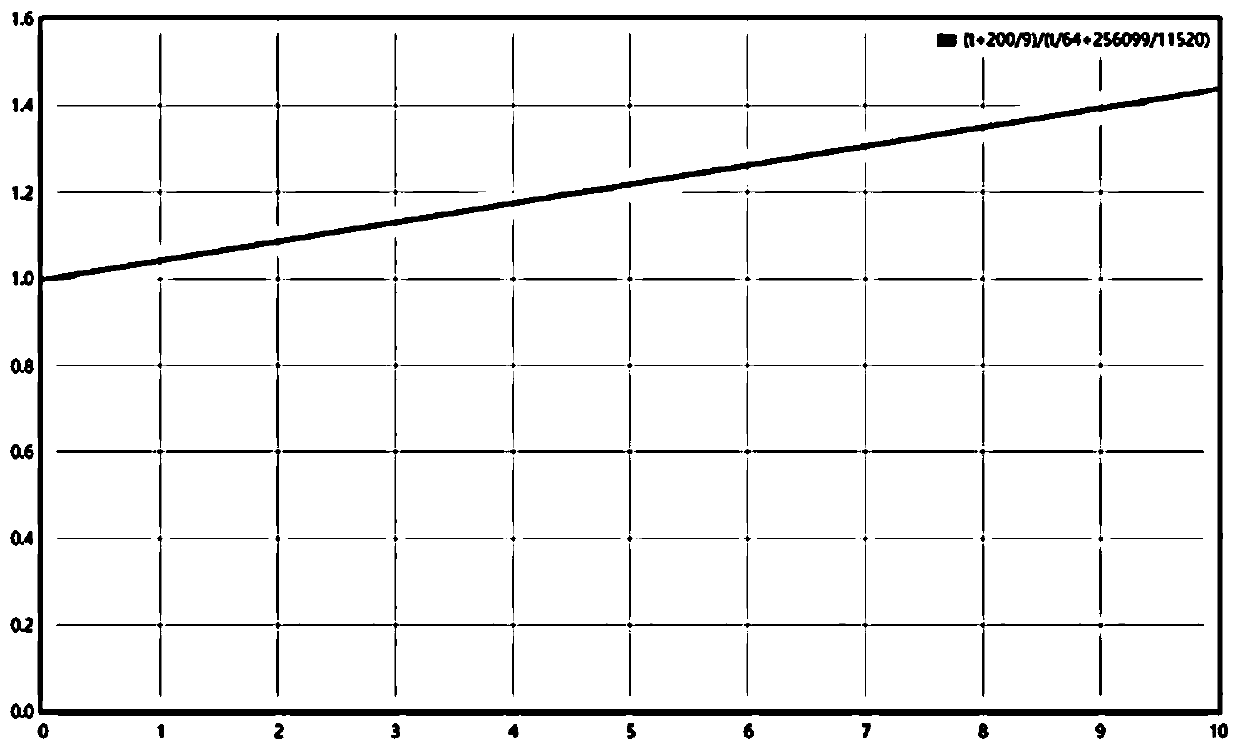

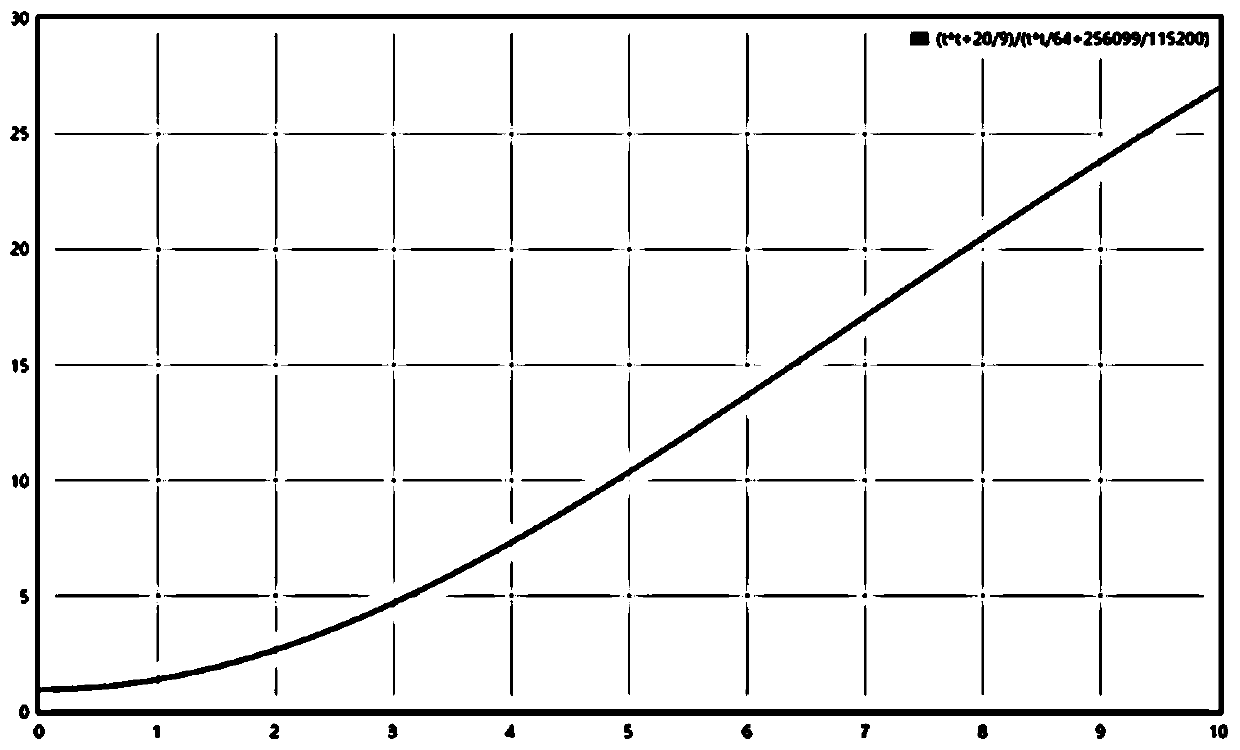

[0051] In this embodiment of the present invention, the calculation of the GPU acceleration ratio is illustrated with a distributed computing cluster in which each high-performance computing server uses an Intel E5 2600 CPU, an NVIDIA GTX 1080Ti GPU, and a motherboard of the PCI-E 3.0 specification, with the GPU installed in a PCI-E x8 slot, but the embodiment ...
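To make the role of the PCI-E 3.0 x8 link concrete, the following is a minimal sketch of how a host-to-GPU transfer time and an acceleration ratio might be estimated. The 8 GT/s per-lane rate and the 128b/130b encoding are properties of PCI-E 3.0; everything else (the function names, the idea of adding transfer time to GPU compute time, and the ratio of CPU time to GPU time) is an illustrative assumption and is not taken from the patent, whose own formula is not reproduced in the text above.

```python
# Theoretical PCI-E 3.0 link parameters.
PCIE3_TRANSFERS_PER_S = 8e9      # 8 GT/s per lane
PCIE3_ENCODING = 128 / 130       # 128b/130b line-encoding efficiency


def pcie3_bandwidth_bytes_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCI-E 3.0 link in bytes/s."""
    return PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING * lanes / 8


def transfer_seconds(data_bytes: float, lanes: int = 8) -> float:
    """Time to move data_bytes over the link, ignoring latency (assumption)."""
    return data_bytes / pcie3_bandwidth_bytes_per_s(lanes)


def gpu_acceleration_ratio(cpu_total_s: float, gpu_compute_s: float,
                           io_bytes: float, lanes: int = 8) -> float:
    """Hypothetical ratio: CPU total time / (GPU compute + transfer time)."""
    gpu_total_s = gpu_compute_s + transfer_seconds(io_bytes, lanes)
    return cpu_total_s / gpu_total_s


if __name__ == "__main__":
    bandwidth = pcie3_bandwidth_bytes_per_s(8)
    print(f"PCI-E 3.0 x8 theoretical bandwidth: {bandwidth / 1e9:.2f} GB/s")
    # Made-up timings: 10 s on CPU, 1 s of GPU compute, 4 GB moved over the bus.
    print(f"ratio: {gpu_acceleration_ratio(10.0, 1.0, 4e9):.2f}")
```

Under these assumptions, an operator that moves a lot of data relative to its compute time sees its ratio pulled down by the bus, which is why the slot width matters when deciding where to run it.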

Embodiment 2

[0090] Figure 3 is a schematic structural diagram of a resource scheduling device provided in Embodiment 2 of the present invention. With reference to Figure 3, the device includes: a calculation operator acquisition module 310, a distributed CPU calculation total time consumption calculation module 320, a distributed GPU calculation total time consumption calculation module 330, a GPU acceleration ratio calculation module 340, and a calculation operator operation hardware determination module 350.

[0091] The calculation operator acquisition module 310 is used to acquire the calculation operator to be run in the distributed computing cluster;

[0092] The distributed CPU calculation total time consumption calculation module 320 is used to calculate the distributed CPU matching the computing operator according to the characteristic parameters of the CPU cluster matching the distributed computing cluster, the preset stand-alone input and output data volume, and the computing ...
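The way the five modules hand results to one another can be sketched as follows. This is only an illustration under stated assumptions: the `Operator` fields, the function names, the definition of the ratio as CPU total time divided by GPU total time, and the threshold rule are all hypothetical, since the text above does not spell out how modules 340 and 350 compute their results.

```python
from dataclasses import dataclass


@dataclass
class Operator:
    """A computing operator to be scheduled (all fields are hypothetical)."""
    name: str
    cpu_total_s: float  # stand-in for the result of module 320
    gpu_total_s: float  # stand-in for the result of module 330


def gpu_acceleration_ratio(op: Operator) -> float:
    """Module 340 analogue: assumed here to be CPU total time / GPU total time."""
    return op.cpu_total_s / op.gpu_total_s


def choose_hardware(op: Operator, threshold: float = 1.0) -> str:
    """Module 350 analogue: choose GPU only if the ratio exceeds the threshold."""
    return "GPU" if gpu_acceleration_ratio(op) > threshold else "CPU"


def schedule(operators: list[Operator], threshold: float = 1.0) -> dict[str, str]:
    """Module 310 analogue feeds operators in; returns operator name -> hardware."""
    return {op.name: choose_hardware(op, threshold) for op in operators}


if __name__ == "__main__":
    pending = [Operator("matmul", 8.0, 2.0), Operator("filter", 1.0, 3.0)]
    print(schedule(pending))
```

Keeping the ratio and the decision as separate functions mirrors the split between modules 340 and 350, so the decision rule can be changed without touching the time estimates.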

Embodiment 3

[0103] Figure 4 is a schematic structural diagram of a computer device provided in Embodiment 3 of the present invention. As shown in Figure 4, the device includes:

[0104] one or more processors 410 (Figure 4 takes one processor 410 as an example);

[0105] memory 420;

[0106] The device may also include: an input device 430 and an output device 440 .

[0107] The processor 410, memory 420, input device 430, and output device 440 in the device can be connected by a bus or by other means; Figure 4 takes connection via a bus as an example.

[0108] The memory 420, as a non-transitory computer-readable storage medium, can be used to store software programs, computer-executable programs, and modules, such as the program instructions/modules corresponding to a resource scheduling method in an embodiment of the present invention (for example, the calculation operator acquisition module 310 shown in Figure 3, the distributed CPU calculation total time consumption cal...
