Voiceprint recognition method based on gender, nationality and emotion information

A voiceprint recognition technology that uses nationality information, applied in the field of speaker recognition. It addresses problems such as degraded speaker recognition accuracy, the limited learning ability of neural networks, and the need to increase network depth or complexity.


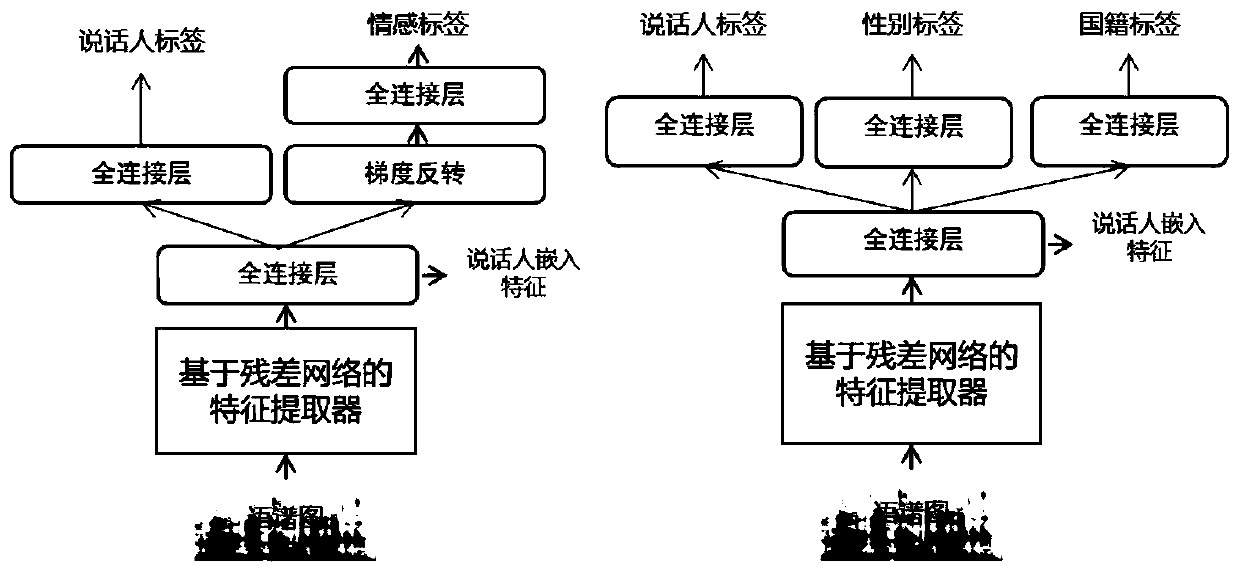

Detailed Description of the Embodiments

[0054] The present invention will be described in further detail below in conjunction with the accompanying drawings and accompanying tables.

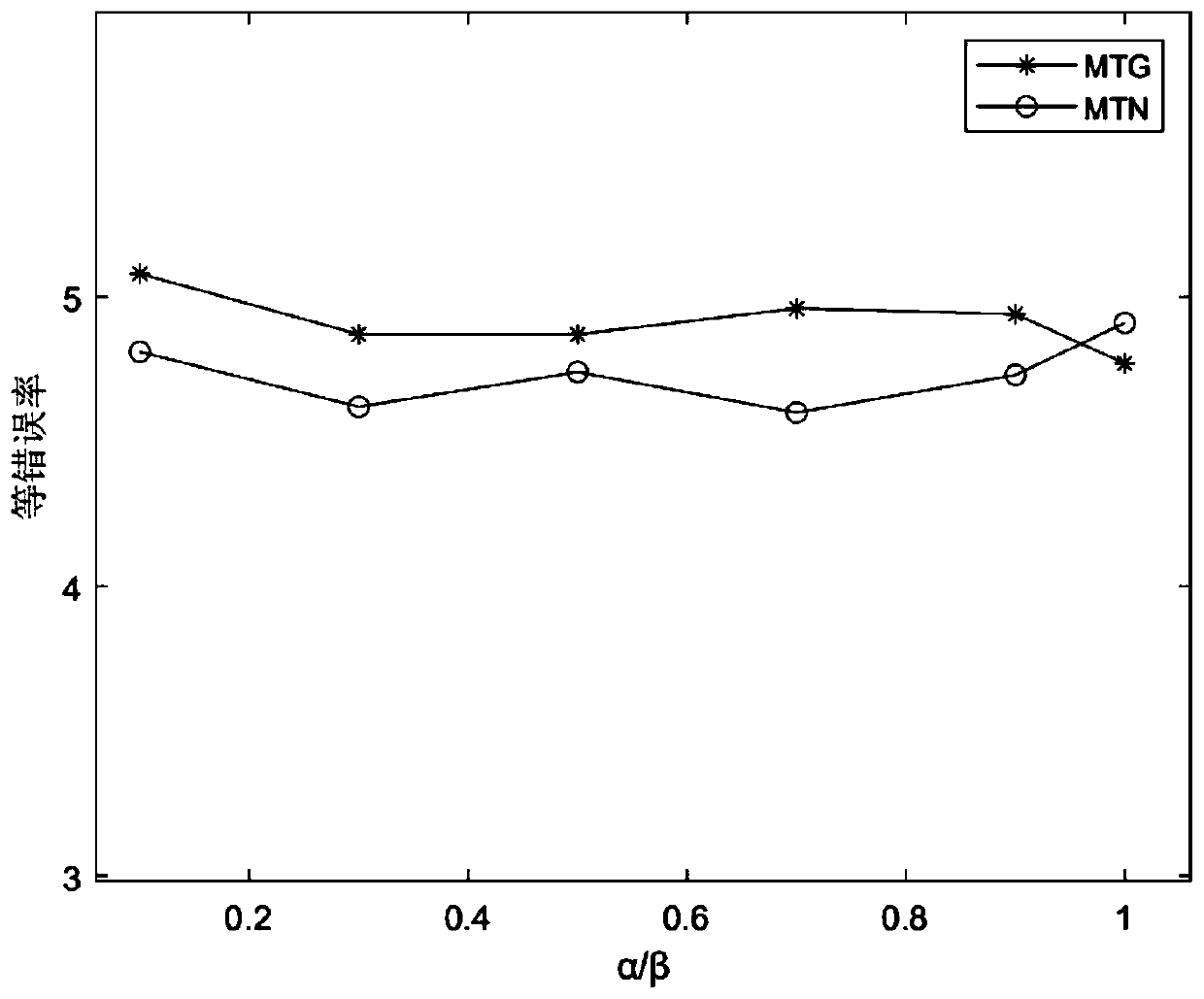

[0055] This embodiment of the invention is illustrated using the VoxCeleb1 dataset, which is widely used in speaker recognition. The overall algorithm pipeline consists of four steps: data preprocessing, feature extraction, training of the neural network model parameters, and score fusion using a fusion tool. The specific steps are as follows:
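The text does not name the score fusion tool or say how it combines scores. As a rough illustration only, the sketch below shows linear score-level fusion, a common approach in speaker verification: each subsystem produces one score per verification trial, and the fused score is a weighted sum plus a bias. The function name `fuse_scores` and its weights and bias are hypothetical; in practice such parameters would typically be learned on a development set (for example by logistic regression) rather than fixed by hand.

```python
import numpy as np

def fuse_scores(score_lists, weights, bias=0.0):
    """Linear score-level fusion of several subsystems (illustrative sketch).

    score_lists: one sequence of trial scores per subsystem, all aligned to
    the same list of verification trials.
    weights, bias: hypothetical fusion parameters (one weight per subsystem).
    """
    # Stack into shape (n_systems, n_trials)
    scores = np.vstack([np.asarray(s, dtype=np.float64) for s in score_lists])
    w = np.asarray(weights, dtype=np.float64)
    if w.shape != (scores.shape[0],):
        raise ValueError("need exactly one weight per subsystem")
    # Weighted sum over subsystems for every trial, plus a global offset
    return w @ scores + bias
```

For example, `fuse_scores([[1.0, 2.0], [3.0, 4.0]], [0.5, 0.5])` averages two subsystems trial by trial and returns `[2.0, 3.0]`.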

[0056] 1) Data preprocessing

[0057] In the data preprocessing stage, the length of each training utterance is first constrained: utterances shorter than 1 second are skipped, and utterances longer than 3 seconds are randomly cropped to a 3-second segment. All training utterances are then normalized.
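The length rules above can be sketched as follows. This is a minimal illustration under stated assumptions: audio is a 1-D NumPy waveform at 16 kHz (the VoxCeleb1 sampling rate), utterances between 1 and 3 seconds pass through uncropped, and, because the text does not specify the normalization method, per-utterance zero-mean, unit-variance normalization is assumed. The function name `preprocess_utterance` is hypothetical.

```python
import numpy as np

def preprocess_utterance(wav, sr=16000, min_sec=1.0, max_sec=3.0, rng=None):
    """Length-limit and normalize one training utterance (sketch).

    Returns None for utterances shorter than min_sec (they are skipped).
    """
    rng = np.random.default_rng() if rng is None else rng
    min_len, max_len = int(min_sec * sr), int(max_sec * sr)
    if len(wav) < min_len:
        return None  # shorter than 1 s: skip this utterance
    if len(wav) > max_len:
        # Random crop: start index drawn uniformly from [0, len - max_len]
        start = rng.integers(0, len(wav) - max_len + 1)
        wav = wav[start:start + max_len]
    # Assumed normalization: zero mean, unit variance per utterance
    wav = wav.astype(np.float64)
    std = wav.std()
    return (wav - wav.mean()) / (std if std > 0 else 1.0)
```

Random cropping means the same long utterance yields a different 3-second segment each time it is drawn, which acts as a mild form of data augmentation during training.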

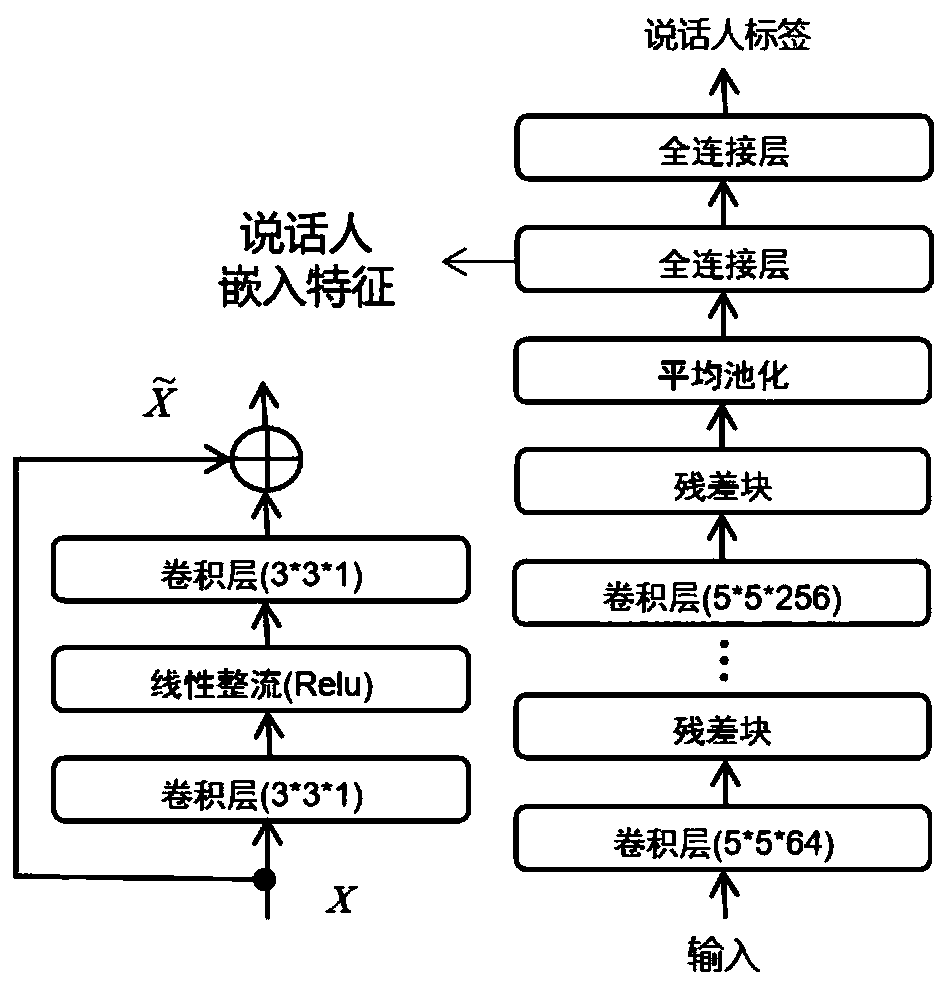

[0058] 2) Feature extraction

[0059] A 512-dimensional spectrogram is extracted using the Librosa library. Spectrograms are described in detail above.
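The text gives only the feature dimensionality (512) and the tool (Librosa); the window type, window length, hop size, FFT size, and log compression are not stated. The sketch below therefore assumes 16 kHz audio, a 25 ms Hamming window, a 10 ms hop, a 1024-point FFT from which the first 512 of the 513 frequency bins are kept, and a log-magnitude output. It computes the short-time Fourier transform directly with NumPy so that it runs without Librosa; in the described setup `librosa.stft` would play this role, although Librosa center-pads frames by default, so its frame count would differ. The function name `log_spectrogram` is hypothetical.

```python
import numpy as np

def log_spectrogram(wav, sr=16000, n_fft=1024, win_ms=25.0, hop_ms=10.0,
                    n_bins=512, eps=1e-6):
    """512-bin log-magnitude spectrogram (sketch; all parameters assumed)."""
    win_len = int(sr * win_ms / 1000)  # 400 samples at 16 kHz
    hop = int(sr * hop_ms / 1000)      # 160 samples at 16 kHz
    if len(wav) < win_len:
        raise ValueError("utterance shorter than one analysis window")
    n_frames = 1 + (len(wav) - win_len) // hop
    window = np.hamming(win_len)
    # Index matrix of shape (n_frames, win_len); row i covers one frame
    idx = np.arange(win_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = np.asarray(wav, dtype=np.float64)[idx] * window
    # Zero-padded FFT: rfft yields n_fft // 2 + 1 = 513 bins; keep the first 512
    mag = np.abs(np.fft.rfft(frames, n=n_fft, axis=1))[:, :n_bins]
    # Log compression; eps avoids log(0). Output shape: (n_bins, n_frames)
    return np.log(mag + eps).T
```

Under these assumptions, a 3-second crop from the preprocessing step (48,000 samples) yields a 512 x 298 spectrogram.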

[0...
