Robot target grasping detection method based on a scale-invariant network

A scale-invariant detection method, applied in the fields of neural learning methods, biological neural network models, instruments, etc. It addresses the problems of grasping failure, interference with the effective extraction of the grasping region, and the lack of scale invariance in existing algorithms. The method is easy to implement, ensures the accuracy and robustness of grasp detection, and improves the grasping success rate.

Embodiment

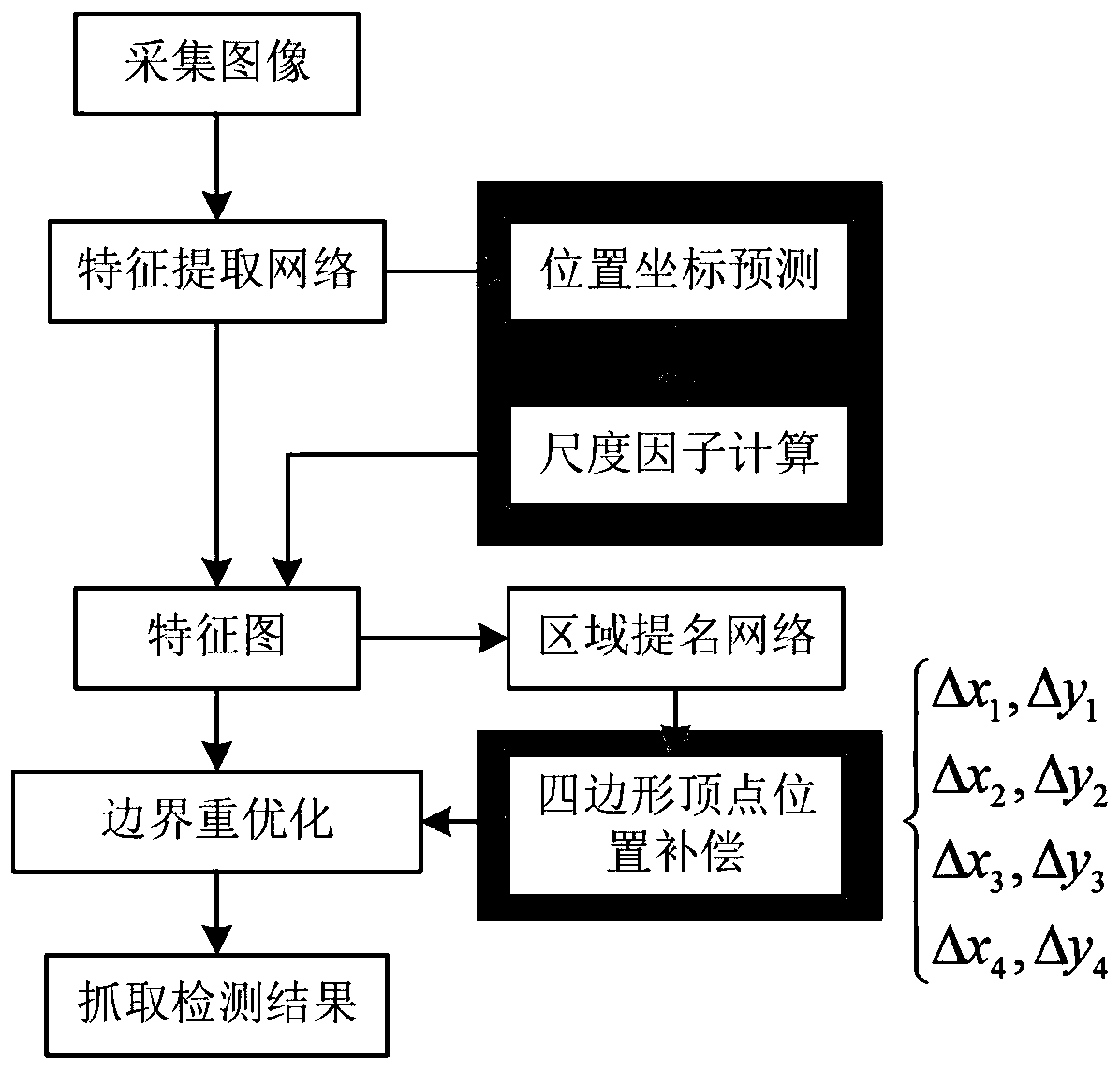

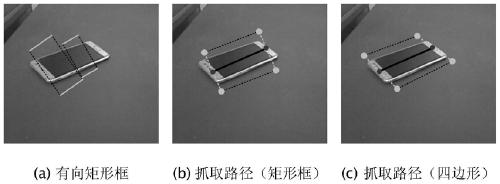

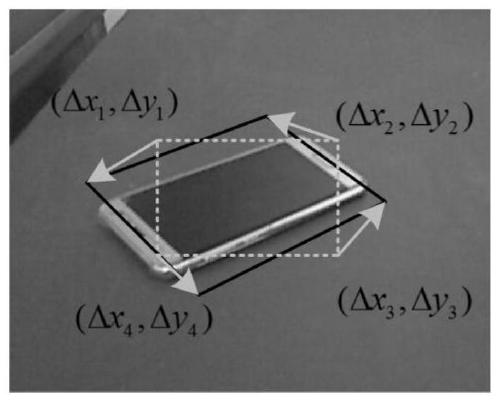

[0044] Referring to Figures 1-3, the present invention proposes a robot target grasping detection method based on a scale-invariant network, which consists mainly of five parts: image acquisition, feature extraction, target positioning and scaling, quadrilateral grasp representation detection, and boundary re-optimization.

[0045] A robot target grasping detection method based on a scale-invariant network, comprising the following steps:

[0046] Step 1, image acquisition: use an optical camera to capture an RGB image containing the target to be grasped, as the input for the subsequent steps;

[0047] Step 2, feature extraction: construct a feature extraction module consisting of 13 convolutional layers, 13 rectified linear unit (ReLU) layers, and 4 pooling layers, and use the output of the 30th layer of the feature extraction module, that is, the final ReLU layer, as the feature map extracted from the current image;
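The layer counts in Step 2 can be checked with a short sketch. The source gives only the totals (13 convolutional, 13 ReLU, 4 pooling layers) and states that the 30th layer is a ReLU layer; the block grouping, channel widths, 3x3 padded convolutions, and 2x2 stride-2 pooling below are assumptions borrowed from a VGG-16-style layout, which happens to match those totals. The names `Layer`, `BLOCKS`, `build_layers`, and `feature_map_size` are hypothetical and used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    kind: str          # "conv", "relu", or "pool"
    channels: int = 0  # output channels (conv only); assumed, not from the source


# Assumed VGG-16-style grouping: (conv+ReLU pairs per block, block width).
BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def build_layers():
    """Build the ordered layer list: conv+ReLU pairs, pooling between blocks."""
    layers = []
    for i, (n_convs, width) in enumerate(BLOCKS):
        for _ in range(n_convs):
            layers.append(Layer("conv", width))
            layers.append(Layer("relu"))
        if i < len(BLOCKS) - 1:  # no pooling after the last block
            layers.append(Layer("pool"))
    return layers


def feature_map_size(height, width, layers):
    """Spatial size of the output, assuming size-preserving convolutions
    and 2x2 pooling with stride 2 (each pooling layer halves both sides)."""
    for layer in layers:
        if layer.kind == "pool":
            height, width = height // 2, width // 2
    return height, width


layers = build_layers()
counts = {k: sum(l.kind == k for l in layers) for k in ("conv", "relu", "pool")}
print(counts)                        # totals per layer type
print(len(layers), layers[29].kind)  # 30th layer (index 29) and its type
print(feature_map_size(480, 640, layers))
```

Under these assumptions the four pooling layers reduce each spatial dimension by a factor of 16, so the feature map passed to Step 3 would be 1/16 the size of the input image on each side. The actual strides and kernel sizes are not given in the visible text.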

[0048] Step 3, target positioning and scaling:

[0049] ...
