Floating-point number conversion circuit

A floating-point number conversion circuit, applied in the computer field, that addresses the low training efficiency of neural networks. By reducing the data bit width, it reduces resource usage and improves efficiency.

Embodiment Construction

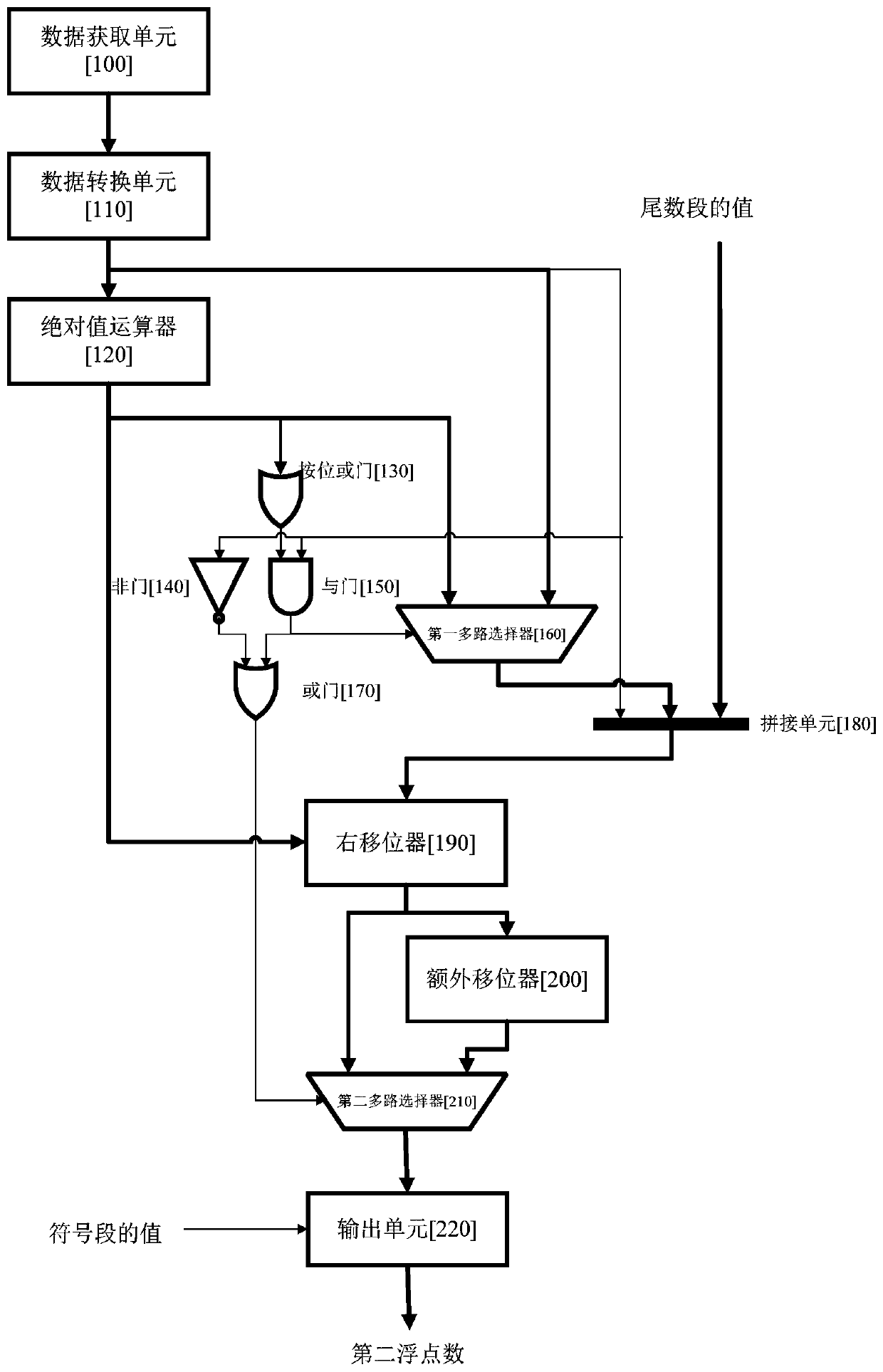

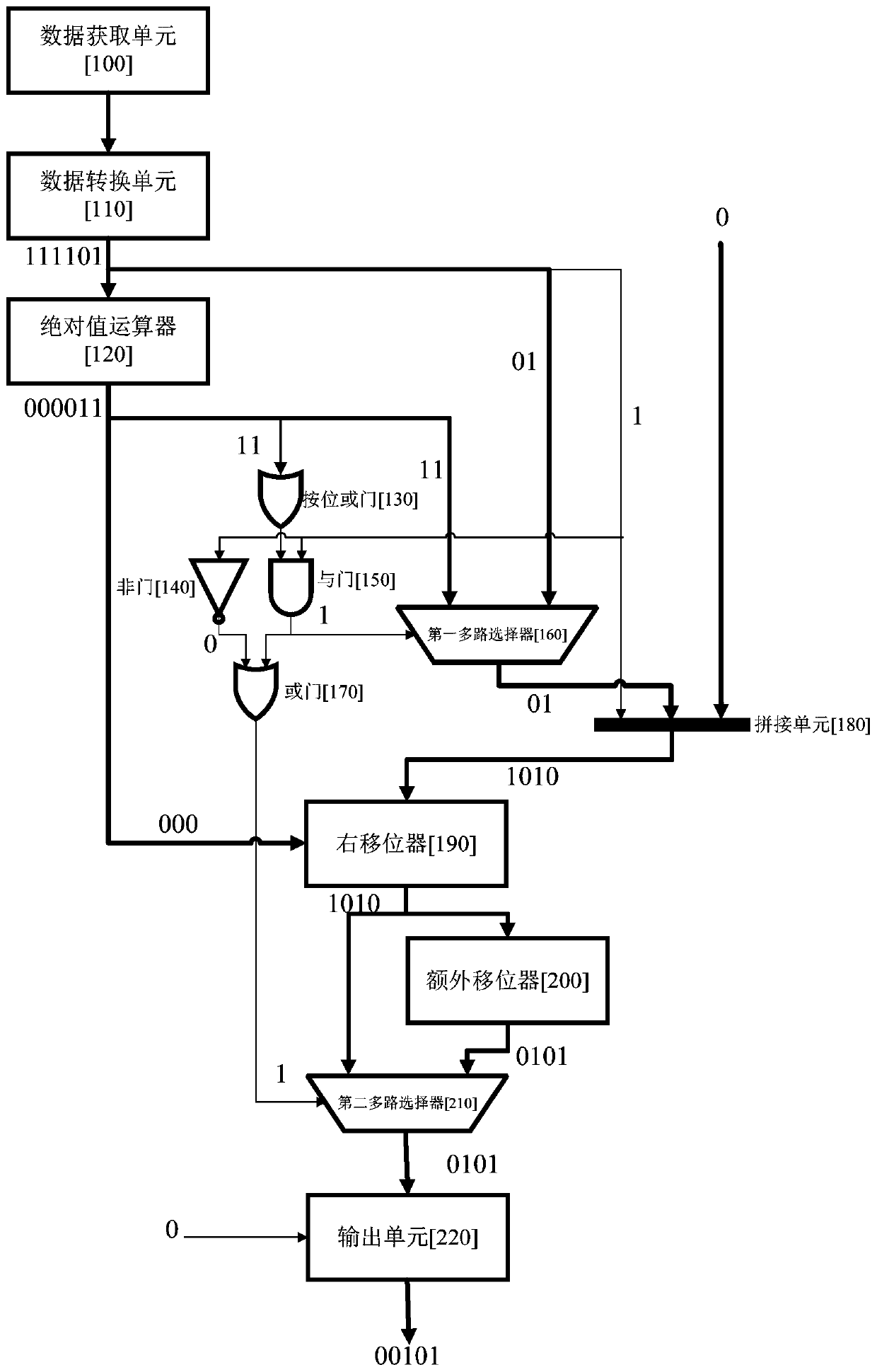

[0045] The Posit data format in the technical solution of the present invention has two parameters, N and es: N is the total bit width of the data representation, and es is the bit width of the exponent segment. Both parameters must be fixed before any data is expressed. N can be any positive integer, such as 5 or 8. In this embodiment, N is the preset total bit width and es is the preset exponent bit width; es is selected according to the actual requirements for floating-point numbers in the Posit data format, for example 2, 3, or 4. Figure 1 is a schematic diagram of the data representation of a single-precision floating-point number based on the IEEE 754 specification. Such a number includes three parts: the sign segment S, the exponent segment E1, and the mantissa segment F, ...
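The three IEEE 754 single-precision segments named above can be illustrated in software. The following is a minimal Python sketch, not the circuit described in the patent: the function name `split_float32` is hypothetical, and the field widths (1 sign bit, 8 exponent bits, 23 mantissa bits) are taken from the IEEE 754 standard rather than from the truncated text above.

```python
import struct
from typing import Tuple


def split_float32(x: float) -> Tuple[int, int, int]:
    """Return (S, E1, F) for x rounded to IEEE 754 single precision.

    Hypothetical helper for illustration only; the widths 1/8/23 come
    from the IEEE 754 standard, not from the patent text.
    """
    # Pack as a big-endian 32-bit float, then reinterpret the same
    # four bytes as an unsigned 32-bit integer.
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31                # S: 1 bit
    exponent = (bits >> 23) & 0xFF   # E1: 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF       # F: 23 bits, implicit leading 1 omitted
    return sign, exponent, mantissa


if __name__ == "__main__":
    # -6.5 = -1.625 * 2**2, so E1 = 2 + 127 = 129 and F encodes 0.625.
    print(split_float32(-6.5))  # (1, 129, 5242880)
```

A conversion to the Posit format would start from these three fields and re-encode them using the preset N and es; that step depends on details not visible in the paragraph above and is not sketched here.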
