VR immersion application system based on 3D live-action cloning technology

An application system using immersive technology, applied in 3D modeling, stereo systems, mechanical mode conversion, etc. It can solve problems such as a weak user experience, high network bandwidth requirements, and insufficient scene authenticity, with the effects of increasing scene authenticity and integrity, expanding the scope of scene applications, and being easy to use and operate.


Examples

Embodiment 1

[0033] The VR immersive application system based on 3D real-scene cloning technology addresses the problem that existing VR applications involve large amounts of data and place high demands on network bandwidth, which can lead to missing data, insufficient scene authenticity, and a weak user experience.

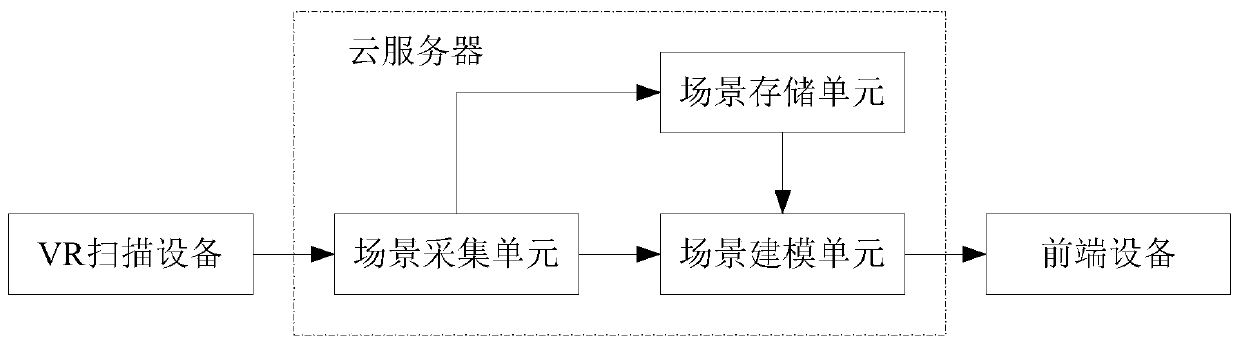

[0034] As shown in Figure 1, the VR immersive application system based on 3D real-scene cloning technology includes:

[0035] VR scanning equipment: selects multiple display points and connection points, scans and analyzes the real scene, and sends the results to the scene acquisition unit;

[0036] Scene acquisition unit: uses 3D data stitching technology to assemble the complete scene from the data collected by the VR scanning equipment, and transmits the scene data to the scene storage unit and the scene modeling unit;
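The source does not say how the stitching is performed. As a minimal sketch, assume each scan station's pose (rotation plus translation) is already known, for example from the connection points; real systems usually refine such poses with a registration method such as ICP, which is not shown. Each scan is transformed into a shared world frame and merged, and points from overlapping stations that snap to the same voxel-grid cell are kept once. The helpers `yaw_pose`, `to_world`, and `stitch` and the `voxel` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix3 = Tuple[Tuple[float, float, float], ...]
Pose = Tuple[Matrix3, Point]


def yaw_pose(yaw_deg: float, tx: float, ty: float, tz: float) -> Pose:
    """Hypothetical station pose: rotation about the vertical z axis, then a translation."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    rot = ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))
    return rot, (tx, ty, tz)


def to_world(points: Sequence[Point], pose: Pose) -> List[Point]:
    """Apply p_world = R * p_local + t to every point of one scan."""
    rot, t = pose
    return [
        tuple(rot[i][0] * x + rot[i][1] * y + rot[i][2] * z + t[i] for i in range(3))
        for x, y, z in points
    ]


def stitch(scans: Sequence[Sequence[Point]], poses: Sequence[Pose],
           voxel: float = 0.01) -> List[Point]:
    """Merge per-station scans into one world-frame cloud.

    Points that snap to an already-occupied voxel-grid cell are dropped
    (first station wins), a crude form of overlap removal.
    """
    occupied = set()
    merged: List[Point] = []
    for pts, pose in zip(scans, poses):
        for p in to_world(pts, pose):
            key = tuple(round(c / voxel) for c in p)
            if key not in occupied:
                occupied.add(key)
                merged.append(p)
    return merged
```

For example, a station at the origin and a second station 2 m away facing the opposite direction both see the point (1, 0, 0) in world coordinates, and `stitch` keeps it only once.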

[0037] Scene storage unit: fragments the scene data, slices and divides it according to an adaptive size, and then stores it i...
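The adaptive-size criterion is not specified in the source. One plausible reading, sketched here as an assumption, is an octree-style split: a tile is subdivided into eight octants until it holds at most `max_points` points or a depth limit is reached. Dense regions then end up in many small fragments and sparse regions in a few large ones. `Tile`, `build_tiles`, `leaves`, `max_points`, and `max_depth` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Tile:
    lo: Point                      # minimum corner of the tile's box
    hi: Point                      # maximum corner of the tile's box
    points: List[Point] = field(default_factory=list)   # filled only on leaves
    children: List["Tile"] = field(default_factory=list)


def build_tiles(points: List[Point], lo: Point, hi: Point,
                max_points: int = 1000, max_depth: int = 8, depth: int = 0) -> Tile:
    """Recursively split a box into octants until each leaf is small enough."""
    tile = Tile(lo, hi)
    if len(points) <= max_points or depth >= max_depth:
        tile.points = list(points)
        return tile
    mid = tuple((l + h) / 2 for l, h in zip(lo, hi))
    buckets = {}
    for p in points:
        # Octant index: bit 0 for x, bit 1 for y, bit 2 for z (set = upper half).
        idx = sum(1 << i for i in range(3) if p[i] >= mid[i])
        buckets.setdefault(idx, []).append(p)
    for idx, pts in sorted(buckets.items()):
        c_lo = tuple(mid[i] if (idx >> i) & 1 else lo[i] for i in range(3))
        c_hi = tuple(hi[i] if (idx >> i) & 1 else mid[i] for i in range(3))
        tile.children.append(
            build_tiles(pts, c_lo, c_hi, max_points, max_depth, depth + 1))
    return tile


def leaves(tile: Tile) -> List[Tile]:
    """Collect leaf tiles; each would be stored as one scene fragment."""
    if not tile.children:
        return [tile]
    out: List[Tile] = []
    for child in tile.children:
        out.extend(leaves(child))
    return out
```

Storing each leaf as a separate fragment would let a client request only the tiles near the current viewpoint, which is one way slicing could reduce the bandwidth demand described in [0033].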

Embodiment 2

[0042] In this embodiment, building on Embodiment 1, the VR scanning device is a camera based on depth vision technology that collects and analyzes 3D data of the real scene. No post-processing is needed to calculate depth after an image is captured, which avoids delay and saves the cost of a powerful post-processing system. The ranging scale is also flexible: in most cases, only the light source intensity, the optical field of view, and the emitter pulse frequency need to be changed. The camera is a binocular vision camera containing two kinds of cameras: a color camera, which collects the color data, and a depth camera, which collects the depth data. Examples of depth cameras include lidar and Kinect, but lidar is very costly and is used more for military or industrial purposes, so it is ...
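The following sketch shows how a color frame and a depth frame can be fused into colored 3D points, assuming a pinhole camera model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` and assuming the two frames are already registered pixel-to-pixel (real RGB-D rigs need an extrinsic alignment step first). The mention of light-source intensity and emitter pulse frequency suggests, but does not confirm, a time-of-flight sensor. For such sensors, the maximum unambiguous range is c / (2f) for modulation or pulse-repetition frequency f, which illustrates why changing that frequency changes the ranging scale. `backproject` and `unambiguous_range` are illustrative names.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
ColoredPoint = Tuple[float, float, float, RGB]

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def backproject(depth: Sequence[Sequence[float]], color: Sequence[Sequence[RGB]],
                fx: float, fy: float, cx: float, cy: float) -> List[ColoredPoint]:
    """Turn a depth image (metres, 0 = no return) plus an aligned color image
    into camera-frame points: x = (u - cx) z / fx, y = (v - cy) z / fy."""
    points: List[ColoredPoint] = []
    for v, (drow, crow) in enumerate(zip(depth, color)):
        for u, (z, rgb) in enumerate(zip(drow, crow)):
            if z <= 0:
                continue  # pixel has no valid depth measurement
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, rgb))
    return points


def unambiguous_range(freq_hz: float) -> float:
    """Maximum distance before a ToF measurement wraps around: c / (2 f)."""
    return SPEED_OF_LIGHT / (2.0 * freq_hz)
```

For instance, halving the frequency from 20 MHz to 10 MHz doubles the unambiguous range from about 7.5 m to about 15 m, usually at some cost in depth precision.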

Embodiment 3

[0045] In this embodiment, building on Embodiment 2, the front-end device is a mobile terminal connected to the cloud server, and the mobile terminal's operation gestures are integrated so that users can interact with the displayed scene model. The mobile terminal is a mobile phone or a tablet computer. The system also includes a VR wearable terminal connected to the front-end device to enhance the user's immersive experience.
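The source does not define which gestures are supported. A common convention, assumed here, is that a one-finger drag orbits the view around the scene model and a pinch zooms in or out. The `OrbitCamera` class, its sensitivity and clamping parameters, and the z-up convention are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class OrbitCamera:
    yaw: float = 0.0       # radians around the vertical (z) axis
    pitch: float = 0.0     # radians above the horizontal plane
    distance: float = 5.0  # metres from the orbit target

    def on_drag(self, dx_px: float, dy_px: float, rad_per_px: float = 0.005) -> None:
        """One-finger drag: horizontal motion turns, vertical motion tilts."""
        self.yaw = (self.yaw + dx_px * rad_per_px) % (2 * math.pi)
        limit = math.radians(85)  # clamp so the view never flips over the pole
        self.pitch = max(-limit, min(limit, self.pitch + dy_px * rad_per_px))

    def on_pinch(self, scale: float, min_d: float = 0.5, max_d: float = 50.0) -> None:
        """Pinch: scale > 1 means the fingers moved apart, so move closer."""
        self.distance = max(min_d, min(max_d, self.distance / scale))

    def eye(self) -> tuple:
        """Camera position relative to the orbit target (z up)."""
        cp = math.cos(self.pitch)
        return (self.distance * cp * math.cos(self.yaw),
                self.distance * cp * math.sin(self.yaw),
                self.distance * math.sin(self.pitch))
```

Keeping the gesture handling as pure state updates lets the same camera state drive both the phone or tablet view and a connected VR wearable terminal.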

[0046] This embodiment enables users to experience scene applications on mobile terminals, including barrage annotations, data measurement at key locations, and data display. For example, display the length and width of the room, expand hardware integration, monitor the temperature value of the scene, and view video.
