Method for enhancing defense capability of neural network based on federated learning

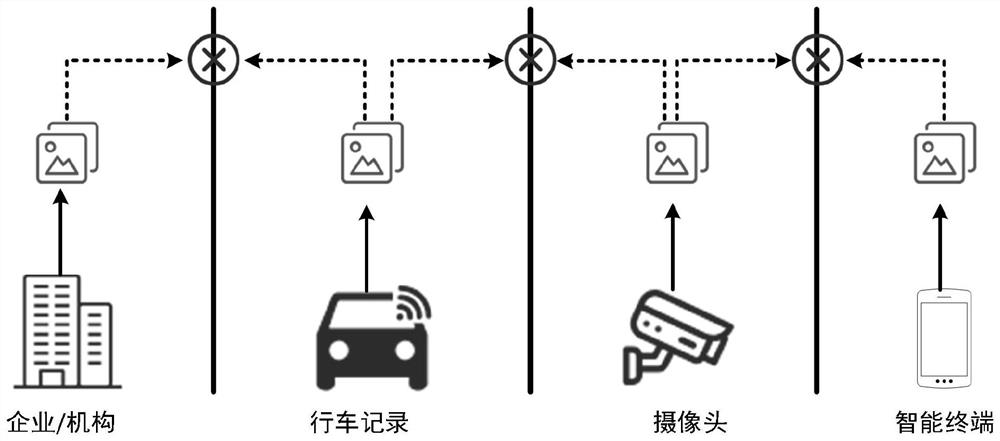

A method combining neural network and federated learning technology, applied in the field of enhancing the defense capability of neural networks, which can solve problems such as the artificial intelligence data crisis, privacy leakage, and the difficulty of sharing data for neural network models.

Embodiment 1

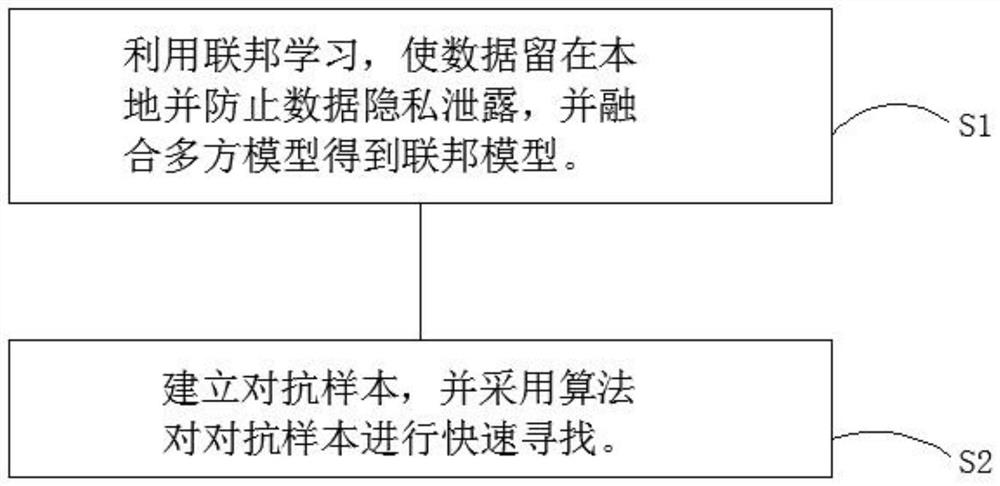

[0031] Embodiment 1: Refer to Figures 1-5. Summary of the invention: the present invention provides a federated-learning-based method for strengthening the defense capability of a neural network, comprising the following steps:

[0032] Step 1: Use federated learning, which removes the need for centralized data collection. Because the data are kept locally, private data are prevented from leaking out. All parties collaborate in distributed model training, intermediate results are encrypted to protect data security, and the models from the multiple parties are finally aggregated and fused into a better federated model. This increases the richness of the training dataset and reduces the effectiveness of adversarial examples.
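Step 1 states that intermediate results are encrypted before the multi-party models are aggregated, but does not name a scheme. The following is a minimal sketch, assuming pairwise additive masking, a common secure-aggregation technique swapped in here for illustration and not necessarily the method of this invention: each pair of participants shares a random mask that one adds and the other subtracts, so the server learns only the sum of the updates. The functions `encode`, `decode`, `mask_update` and `server_sum`, the ring size `MODULUS`, the fixed-point `SCALE`, and the string seeds standing in for shared pairwise keys are all hypothetical. The sketch is simplified and does not handle participants dropping out.

```python
import random

MODULUS = 2**32   # assumed ring size; all masked arithmetic is done modulo this value
SCALE = 10**4     # assumed fixed-point scale for turning real-valued updates into integers

def encode(x):
    # Fixed-point encode a real number into the ring of integers modulo MODULUS.
    return round(x * SCALE) % MODULUS

def decode(v):
    # Map a ring element back to a signed real number.
    if v >= MODULUS // 2:
        v -= MODULUS
    return v / SCALE

def mask_update(i, update, n_parties, seed):
    # Participant i encodes its update and adds one mask per other participant.
    # For a pair (lo, hi), the lower index adds the mask and the higher one subtracts it.
    masked = [encode(x) for x in update]
    for j in range(n_parties):
        if j == i:
            continue
        lo, hi = min(i, j), max(i, j)
        # Hypothetical stand-in for a key that only participants lo and hi share.
        pair_rng = random.Random(f"{seed}-{lo}-{hi}")
        mask = [pair_rng.randrange(MODULUS) for _ in update]
        sign = 1 if i == lo else -1
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked

def server_sum(masked_updates):
    # The server only adds masked vectors; the pairwise masks cancel in the total.
    dim = len(masked_updates[0])
    totals = [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]
    return [decode(t) for t in totals]

updates = [[0.5, -1.25], [0.25, 0.75], [-0.1, 0.2]]
masked = [mask_update(i, u, len(updates), seed=42) for i, u in enumerate(updates)]
print(server_sum(masked))  # → [0.65, -0.3]
```

Each masked vector on its own looks random to the server, yet the total equals the plain sum of the updates, which the server can divide by the number of participants to obtain an averaged model.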

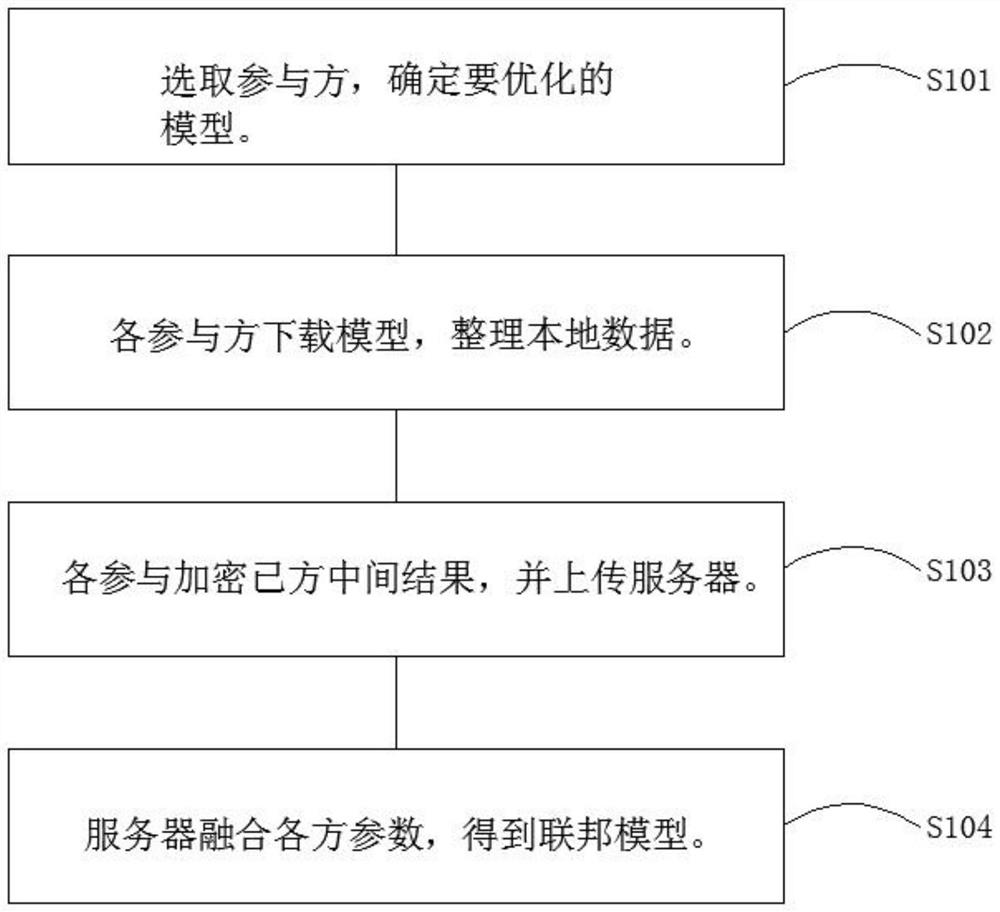

[0033] The following are the specific implementation steps:

[0034] 1) Select a trusted server as the trusted third party; the terminals participating in model training (participants such as enterprises, universities, scientific research institutes, individual users, etc.) download the shared ini...
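The text of step 1) breaks off after the participants begin downloading the shared model, so the remaining sub-steps are not shown here. As a minimal sketch, assuming a federated-averaging (FedAvg) round and a toy linear model y = w*x + b, one round could run as follows: each participant starts from a copy of the trusted server's shared model, trains it on its own data, and the server averages the returned parameters weighted by each participant's sample count. The names `local_train`, `fedavg` and `federated_round`, the learning rate, the number of rounds, and the synthetic data are hypothetical and not taken from the patent.

```python
import random

def local_train(params, data, lr=0.05, epochs=20):
    # Hypothetical participant step: plain gradient descent on mean squared error,
    # starting from the downloaded shared parameters; `data` never leaves the client.
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]

def fedavg(client_params, client_sizes):
    # Server step: average each parameter, weighted by the participant's sample count.
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [sum(p[k] * s for p, s in zip(client_params, client_sizes)) / total
            for k in range(dim)]

def federated_round(global_params, client_datasets):
    # Every participant downloads a copy of the shared model and trains it locally.
    local_params = [local_train(list(global_params), d) for d in client_datasets]
    sizes = [len(d) for d in client_datasets]
    return fedavg(local_params, sizes)

# Usage: three participants hold private samples of y = 3x + 1 with small noise.
rng = random.Random(0)
clients = []
for size in (40, 60, 80):
    xs = [rng.uniform(-1, 1) for _ in range(size)]
    clients.append([(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs])

model = [0.0, 0.0]  # shared initial model distributed by the trusted server
for _ in range(30):
    model = federated_round(model, clients)
print(model)  # close to [3.0, 1.0]
```

Weighting by sample count lets participants with more data contribute proportionally more to the federated model. In a full system, the parameters returned by each participant would be encrypted or masked, as step 1 requires, before the server aggregates them.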
