A monocular visual odometry method adopting deep learning and hybrid pose estimation

A technology combining pose estimation and monocular vision, applied in computing, photo interpretation, image data processing, and related fields. It addresses two problems: existing approaches do not exploit the advantages of geometric theory, and their generalization ability needs to be improved. The method yields pose estimation results with good robustness and accurate positioning.


Detailed Description of the Embodiments

[0043] The specific embodiments of the present invention will be further described below in conjunction with the accompanying drawings.

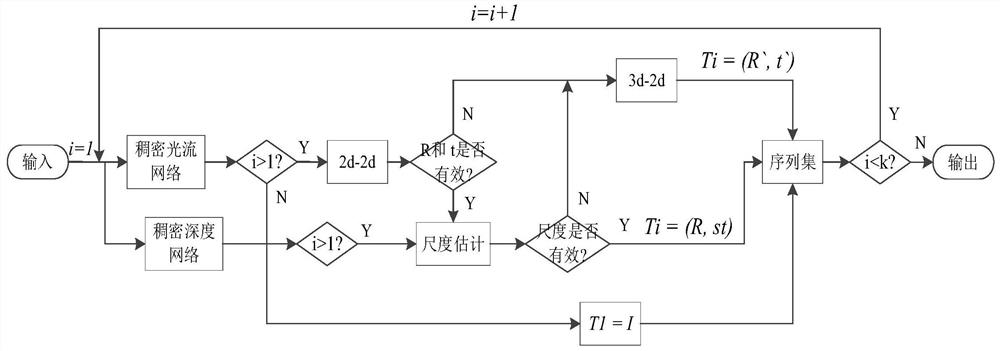

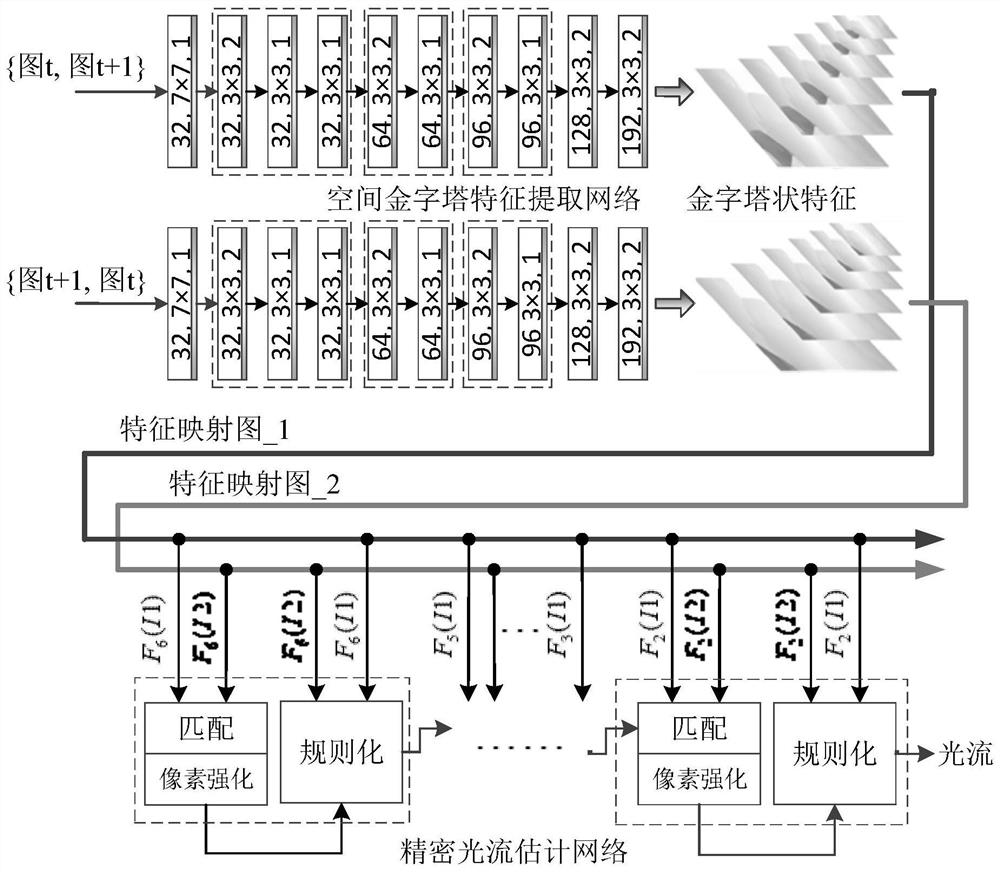

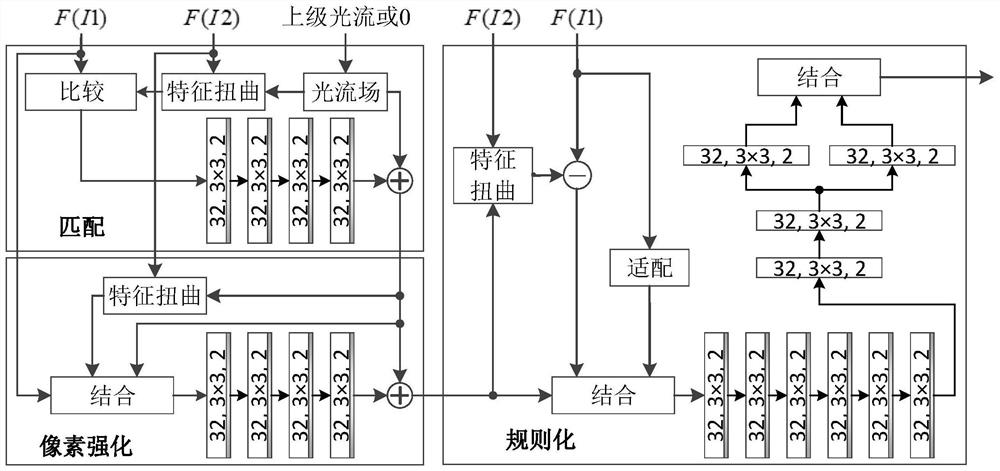

[0044] This method uses two deep learning networks: a dense optical flow network, which extracts the dense optical flow field between adjacent images, and a dense depth network, which extracts the dense depth field. Key-point matching pairs are obtained from the optical flow field and are input into a hybrid 2D-2D and 3D-2D pose estimation algorithm to obtain the relative pose information.
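The step from a dense flow field to key-point matching pairs can be sketched roughly as follows. This is a minimal Python/NumPy illustration under stated assumptions, not the patented implementation: key points are sampled on a regular grid (the source does not say how key points are selected), `flow[v, u]` is assumed to hold the displacement `(du, dv)` from the first image to the second, and the camera intrinsics `K` are assumed known. The function name `matches_from_flow` and its parameters are hypothetical. Each grid point paired with its flow-displaced location gives a 2D-2D match, and back-projecting it with the depth map gives the 3D points that a 3D-2D solver would consume.

```python
import numpy as np

def matches_from_flow(flow, depth, K, step=8, min_depth=1e-3):
    """Read key-point matches from a dense flow field and lift them to 3D with a depth map.

    flow:  (H, W, 2) array; flow[v, u] = (du, dv) displacement from image 1 to image 2 (assumed)
    depth: (H, W) depth map of image 1
    K:     (3, 3) pinhole intrinsic matrix (assumed known)
    Returns pts1, pts2 of shape (N, 2) for 2D-2D and pts3d of shape (N, 3) for 3D-2D.
    """
    H, W = depth.shape
    # Hypothetical key-point selection: a regular grid with spacing `step` pixels.
    vs, us = np.mgrid[0:H:step, 0:W:step]
    vs, us = vs.ravel(), us.ravel()
    pts1 = np.stack([us, vs], axis=1).astype(np.float64)
    pts2 = pts1 + flow[vs, us]  # matched location in image 2

    # Keep matches that stay inside image 2 and have a usable depth value.
    z = depth[vs, us]
    inside = ((pts2[:, 0] >= 0) & (pts2[:, 0] <= W - 1) &
              (pts2[:, 1] >= 0) & (pts2[:, 1] <= H - 1))
    keep = inside & (z > min_depth)
    pts1, pts2, z = pts1[keep], pts2[keep], z[keep]

    # Back-project image-1 key points: X = z * K^-1 [u, v, 1]^T
    homog = np.hstack([pts1, np.ones((len(pts1), 1))])
    pts3d = (homog @ np.linalg.inv(K).T) * z[:, None]
    return pts1, pts2, pts3d
```

The 2D-2D pairs `(pts1, pts2)` would suit an essential-matrix solver and the 3D-2D pairs `(pts3d, pts2)` a PnP-style solver; how the patented method chooses between or combines the two is not described in this excerpt.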

[0045] With reference to Figure 1, the monocular visual odometry method of the present invention is implemented as follows:

[0046] Step 1. Within an image sequence, adjacent images are grouped in pairs to form image pairs, which are input iteratively into the monocular visual odometry system, and the dense optical flow network is used to estimate the density between each group of ...
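The pairing in Step 1, together with turning per-pair relative poses into a trajectory, might look like the sketch below. It is an illustrative Python/NumPy sketch only: the 4x4 homogeneous-transform convention (each relative pose maps camera k+1 coordinates into camera k coordinates, with camera 0 as the world frame) and the names `adjacent_pairs`, `chain_poses`, `run_odometry`, and `estimate_relative_pose` are assumptions, and `estimate_relative_pose` is a hypothetical stand-in for the flow, depth, and hybrid pose estimation stages.

```python
import numpy as np

def adjacent_pairs(frames):
    """Group a sequence into overlapping adjacent pairs: (I0, I1), (I1, I2), ..."""
    return list(zip(frames[:-1], frames[1:]))

def chain_poses(relative_poses):
    """Compose relative poses into camera-to-world poses.

    Assumed convention: relative_poses[k] is a 4x4 transform T_{k,k+1} that maps
    points from camera k+1 coordinates into camera k coordinates; camera 0 is the world frame.
    """
    poses = [np.eye(4)]
    for T_rel in relative_poses:
        # T_{w,k+1} = T_{w,k} @ T_{k,k+1}
        poses.append(poses[-1] @ T_rel)
    return poses

def run_odometry(frames, estimate_relative_pose):
    """Feed adjacent pairs through a (hypothetical) pose estimator and chain the results."""
    relative = [estimate_relative_pose(a, b) for a, b in adjacent_pairs(frames)]
    return chain_poses(relative)

# Usage: a dummy estimator that always reports 1 unit of motion along x.
if __name__ == "__main__":
    step = np.eye(4)
    step[0, 3] = 1.0
    trajectory = run_odometry(["img0", "img1", "img2", "img3"], lambda a, b: step)
    print(trajectory[-1][:3, 3])
```

Overlapping pairs mean every frame except the first and last appears in two pairs, so consecutive relative poses share a frame and can be composed by plain matrix multiplication.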
