Floating-point dot-product hardware with wide multiply-adder tree for machine learning accelerators

A processor-based floating-point technology for machine learning that addresses tight power and performance constraints, namely reduced performance and increased latency, cost, and/or power consumption.

Examples

example 1

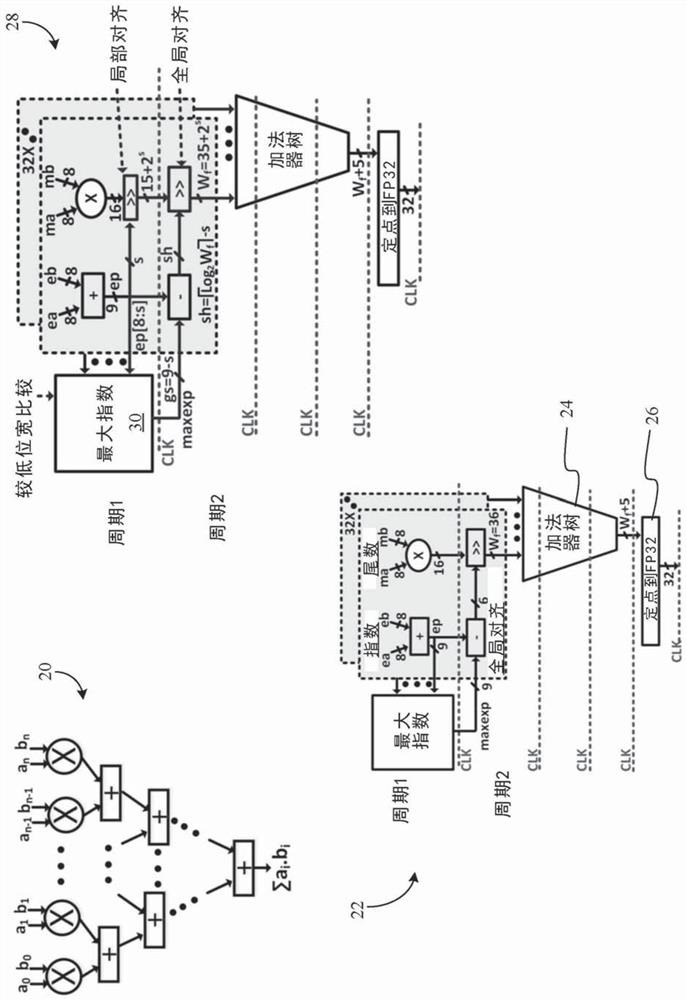

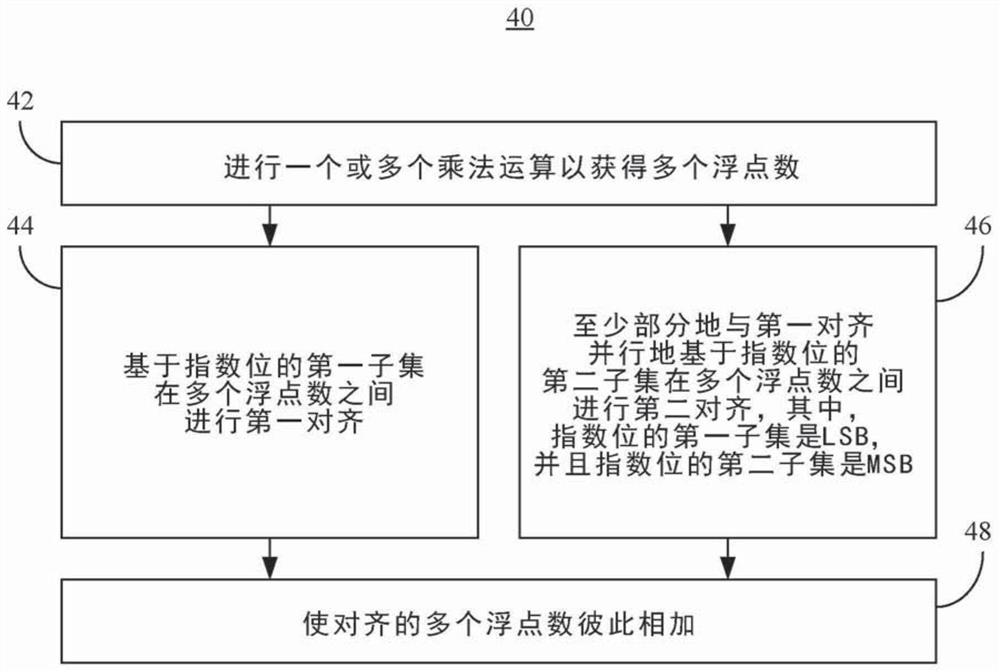

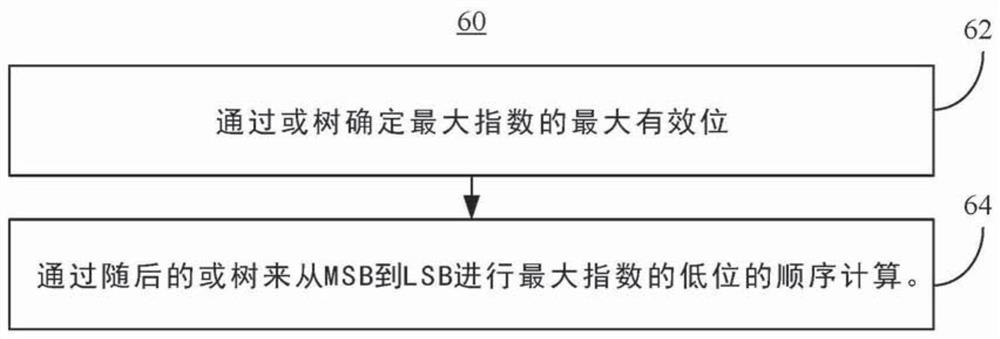

[0039] Example 1 includes a performance-enhanced computing system including a network controller and a processor coupled to the network controller, the processor including logic coupled to one or more substrates, the logic to: perform a first alignment between a plurality of floating-point numbers based on a first subset of exponent bits; perform, at least partly in parallel with the first alignment, a second alignment between the plurality of floating-point numbers based on a second subset of exponent bits, wherein the first subset of exponent bits are least significant bits (LSBs) and the second subset of exponent bits are most significant bits (MSBs); and add the aligned plurality of floating-point numbers to one another.
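
One way to read the LSB/MSB split, offered as a sketch rather than as the patent's own derivation: assume each exponent $e_i$ is divided into its $k$ least significant bits $l_i$ and its remaining most significant bits $h_i$, and assume the MSB-based alignment is taken relative to the largest $h_i$. The symbols $m_i$ (significand), $k$, $l_i$, $h_i$, and $h_{\max}$ are introduced here for illustration and do not appear in the source.

$$
e_i = 2^{k} h_i + l_i, \qquad 0 \le l_i < 2^{k}
$$

$$
m_i \, 2^{e_i} = \underbrace{\left(m_i \, 2^{l_i}\right)}_{\text{LSB-based shift}} \cdot \underbrace{2^{-2^{k}\,(h_{\max} - h_i)}}_{\text{MSB-based shift}} \cdot 2^{2^{k} h_{\max}}, \qquad h_{\max} = \max_j h_j
$$

Under this reading, the LSB-based shift depends only on $l_i$ and needs no knowledge of the other exponents, which is what would allow it to proceed while $h_{\max}$ is still being determined. After both shifts, every term shares the common scale $2^{2^{k} h_{\max}}$ and the shifted significands can be added directly.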

example 2

[0040] Example 2 includes the computing system of Example 1, wherein the first alignment is based on each exponent relative to a predetermined constant.

example 3

[0041] Example 3 includes the computing system of Example 1, wherein the second alignment is based on each exponent relative to the maximum exponent among all of the exponents.
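
The alignment scheme of Examples 1 to 3 can be modeled in software as follows. This is a minimal Python sketch under stated assumptions, not the patented circuit: the function name `split_align_sum`, the parameters `lsb_bits` and `guard_bits`, the choice of 0 as the predetermined constant, and the use of signed integer significands with non-negative integer exponents are all hypothetical. In hardware the two stages would overlap in time; here they simply run one after the other.

```python
def split_align_sum(terms, lsb_bits=2, guard_bits=32):
    """Sum terms (significand, exponent) using a two-stage alignment.

    Returns (acc, scale) such that the sum is acc * 2**scale, exactly
    when guard_bits is large enough that the stage-2 right shift
    discards no non-zero bits.
    """
    lsb_mask = (1 << lsb_bits) - 1

    # Stage 1: align on the exponent LSBs relative to a constant (0 here).
    # Each term is handled independently of every other term.
    stage1 = [(m << (e & lsb_mask), e >> lsb_bits) for m, e in terms]

    # Conceptually concurrent with stage 1: find the greatest exponent,
    # looking only at the MSBs.
    max_hi = max(e >> lsb_bits for _, e in terms)

    # Stage 2: align on the exponent MSBs relative to that maximum.
    # Guard bits are added first so small terms are not shifted away.
    aligned = [
        (v << guard_bits) >> ((max_hi - hi) << lsb_bits)
        for v, hi in stage1
    ]

    # All terms now share one scale, so they can be added directly.
    acc = sum(aligned)
    scale = (max_hi << lsb_bits) - guard_bits
    return acc, scale


acc, scale = split_align_sum([(3, 5), (-1, 2), (5, 7)])
```

For the three terms in the usage line, 3·2^5 − 1·2^2 + 5·2^7 = 732, and `acc * 2**scale` evaluates to the same value. With a smaller `guard_bits`, the stage-2 right shift would discard low-order bits, much as a fixed-width adder tree would.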
