Violent video classification method, system, and storage medium

A video classification technology applied in the field of violent video classification and recognition. It addresses three problems: the high labor and cost of manual review, the poor generalization ability of existing models, and the fact that the features and knowledge a model learns are limited by the size and distribution of its training data. The effect is improved generalization ability.

Examples

Embodiment 1

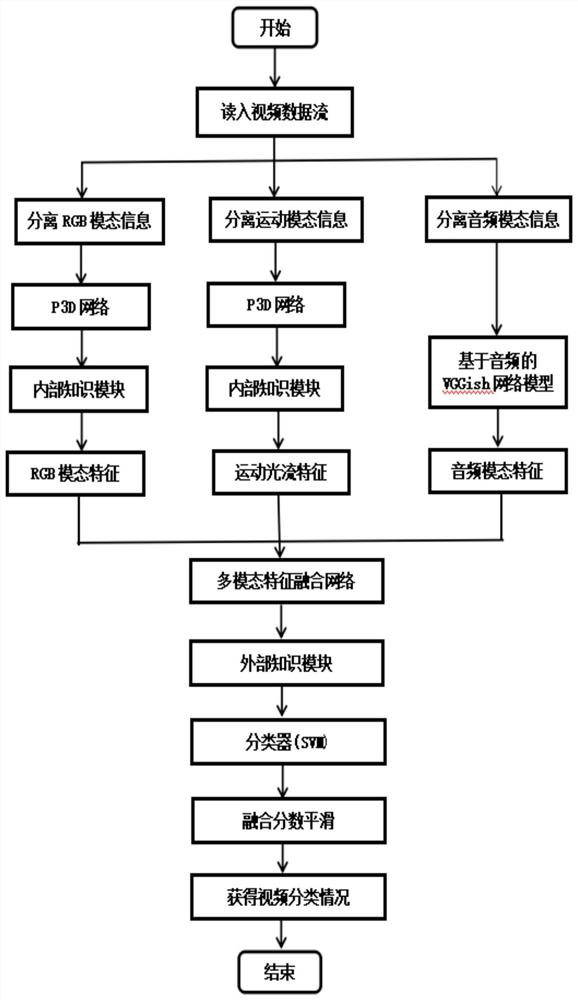

[0068] As shown in Figure 1, the violent video classification method provided by the present invention mainly includes the following steps:

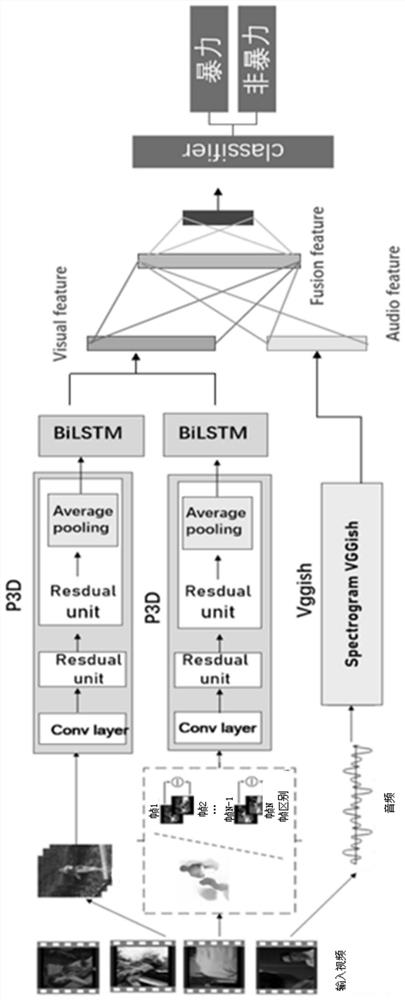

[0069] S100. Obtain a sample video data stream, and extract an RGB modal data stream, a motion modal data stream, and an audio modal data stream from it;

[0070] S200. Input the RGB modal data stream, the motion modal data stream, and the audio modal data stream into their corresponding feature extraction network models, so as to extract the RGB data features, motion optical flow features, and audio modal features that describe violent scenes;

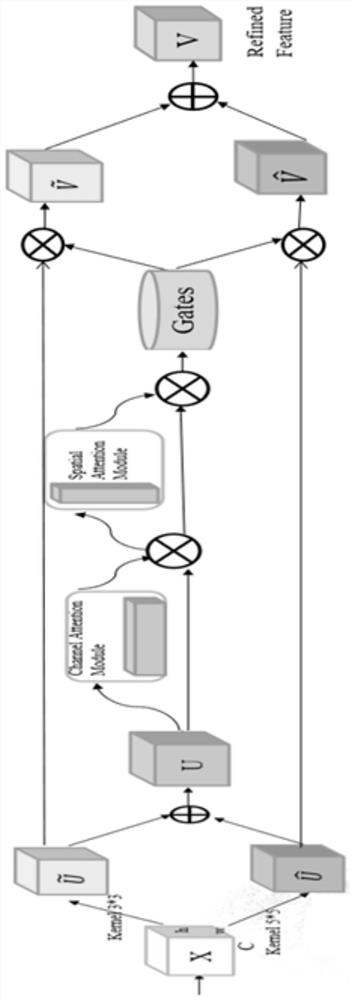

[0071] S300. Input the RGB data features and motion optical flow features into an internal knowledge model based on a multi-scale attention convolutional neural network, so as to obtain new RGB data features and new motion optical flow features that carry the key violence features;

[0072] S400. Input the new RGB data features, new motion optical flow features, and audio ...
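
The text does not say how S100 obtains the three modal streams. The sketch below is illustrative only and rests on several assumptions:

- The clip has already been decoded into an RGB frame array and a mono waveform. Decoding itself is out of scope.
- Consecutive-frame differences serve as a cheap stand-in for the motion modal stream. A real system would more likely compute dense optical flow.
- The function name `split_modalities` and its `stride` parameter are hypothetical and do not come from the patent.

```python
import numpy as np


def split_modalities(frames: np.ndarray, audio: np.ndarray, stride: int = 1):
    """Hypothetical S100 helper: split a decoded clip into three modal streams.

    frames: uint8 array of shape (T, H, W, 3), the decoded RGB frames.
    audio:  float array of shape (S,), the mono waveform of the same clip.
    stride: keep every `stride`-th frame for the RGB stream (an assumption).
    """
    if frames.ndim != 4 or frames.shape[-1] != 3:
        raise ValueError("frames must have shape (T, H, W, 3)")
    t = frames.shape[0]
    if t < 2:
        raise ValueError("need at least two frames for a motion stream")

    # RGB modal stream: sampled frames scaled to [0, 1].
    rgb = frames[::stride].astype(np.float32) / 255.0

    # Motion modal stream: absolute grayscale difference between consecutive
    # frames. This is only a stand-in for dense optical flow (assumption).
    gray = frames.astype(np.float32).mean(axis=-1) / 255.0
    motion = np.abs(np.diff(gray, axis=0))  # shape (T - 1, H, W)

    # Audio modal stream: cut the waveform into T equal chunks, one per frame;
    # trailing samples that do not fill a chunk are dropped.
    chunk = len(audio) // t
    audio_stream = audio[: chunk * t].reshape(t, chunk)

    return rgb, motion, audio_stream


# Usage on synthetic data: 8 frames of 32x32 RGB and 16000 audio samples.
rng = np.random.default_rng(0)
frames = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)
audio = rng.standard_normal(16000).astype(np.float32)
rgb, motion, aud = split_modalities(frames, audio, stride=2)
print(rgb.shape, motion.shape, aud.shape)  # (4, 32, 32, 3) (7, 32, 32) (8, 2000)
```

Each returned stream would then go to its own feature extraction network in S200. The patent does not identify those networks, so they are not sketched here.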

Embodiment 2

[0125] Figure 5 is a schematic diagram of the basic composition of the violent video classification system integrating internal and external knowledge modules, according to Embodiment 2 of the present invention. By function, the system is mainly divided into the following seven modules (not shown in the figure):

[0126] The sample data extraction module is configured to obtain a sample video data stream and extract an RGB modal data stream, a motion modal data stream, and an audio modal data stream from it;

[0127] The modality feature extraction module is configured to input the RGB modal data stream, the motion modal data stream, and the audio modal data stream into their respective feature extraction network models, so as to extract the RGB data features, motion optical flow features, and audio modal features that describe violent scenes;

[0128] The key semantic feature extraction modul...
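
Step S300 feeds the RGB data features and motion optical flow features into an internal knowledge model "based on a multi-scale attention convolutional neural network". In the text available here, no architecture is given. The sketch below shows one common way to build such a module, under these assumptions:

- Temporal 1-D convolutions at kernel sizes 1, 3, and 5 each score every time step.
- The scores are averaged across scales and normalized with a softmax over time.
- The resulting weights amplify the salient steps of the input sequence while preserving its shape.

The names `conv1d_same` and `multi_scale_attention` are illustrative, not the patent's. The random kernels are also illustrative: in practice they would be learned, and the routine would be applied separately to the RGB and motion feature sequences.

```python
import numpy as np


def conv1d_same(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Same'-padded 1-D convolution (cross-correlation) along time.

    x: (T, C) feature sequence; kernel: (K, C, C_out) with odd K.
    Returns an array of shape (T, C_out).
    """
    k = kernel.shape[0]
    pad = k // 2
    t = x.shape[0]
    xp = np.pad(x, ((pad, pad), (0, 0)))  # zero-pad the time axis only
    # Stack the K shifted windows, then contract over offset and input channel.
    windows = np.stack([xp[i : i + t] for i in range(k)], axis=0)  # (K, T, C)
    return np.einsum("ktc,kco->to", windows, kernel)


def softmax(z: np.ndarray, axis: int) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_scale_attention(features: np.ndarray, kernels: list):
    """Hypothetical S300 block: reweight a (T, C) sequence using several scales.

    kernels: list of (K_i, C, 1) arrays, one per temporal scale (assumed).
    Returns the reweighted (T, C) features and the (T,) attention weights.
    """
    # One score per time step for each scale: shape (num_scales, T).
    scores = np.stack([conv1d_same(features, k)[:, 0] for k in kernels], axis=0)
    # Fuse the scales by averaging, then normalize over time.
    weights = softmax(scores.mean(axis=0), axis=0)
    # Residual reweighting: keep the original features, boost salient steps.
    return features * (1.0 + weights[:, None]), weights


# Usage on synthetic RGB features: 16 time steps, 8 channels.
rng = np.random.default_rng(0)
T, C = 16, 8
rgb_feat = rng.standard_normal((T, C))
kernels = [rng.standard_normal((k, C, 1)) * 0.1 for k in (1, 3, 5)]
new_rgb, w = multi_scale_attention(rgb_feat, kernels)
print(new_rgb.shape, round(float(w.sum()), 6))  # (16, 8) 1.0
```

The residual form `features * (1 + w)` is a design choice made here so that the output keeps the input's shape. This matches S300's description of producing "new" RGB and motion features rather than a pooled vector.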

Embodiment 3

[0134] Furthermore, the present invention provides a computer storage medium storing a computer program executable by a processor; when executed by the processor, the computer program implements the above violent video classification method.

[0135] In addition, the present invention provides a computer device comprising a memory and a processor, the processor being configured to execute the computer program stored in the memory so as to implement the above violent video classification method.
