Federated learning privacy protection method and system based on homomorphic pseudo-random numbers

This technology combines homomorphic pseudo-random numbers with privacy protection and is applied in the field of privacy protection. It addresses problems of existing approaches such as high complexity, unsuitability for large-scale federated learning, and leakage of data information, and it aims to reduce communication costs, ensure security, and protect data privacy.

Examples

Embodiment 1

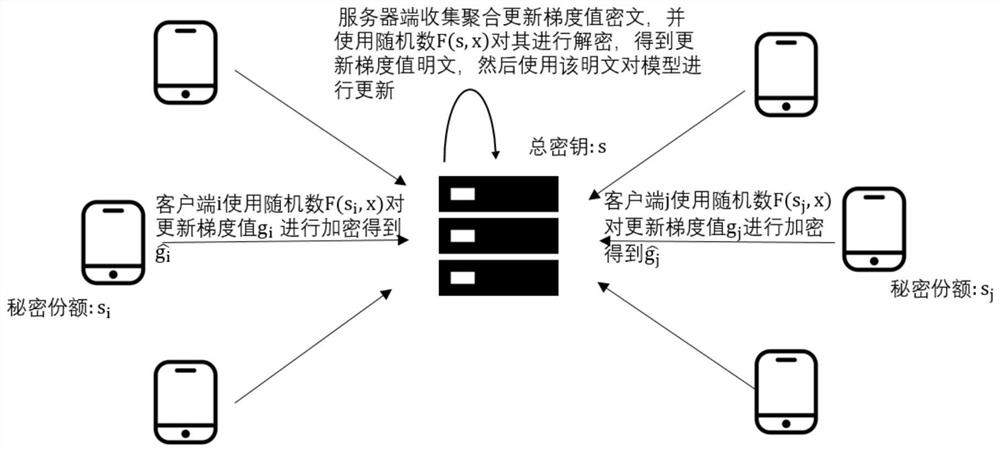

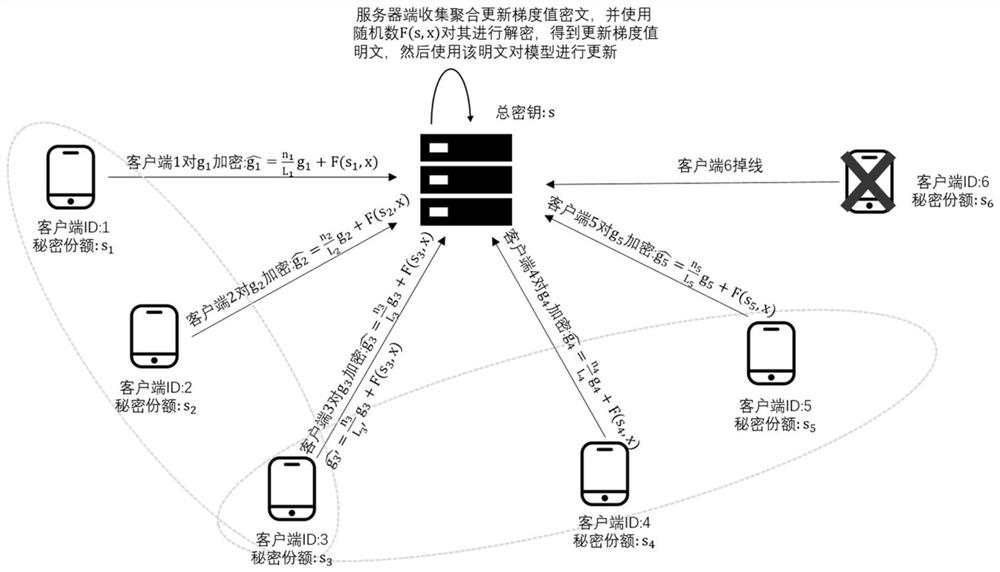

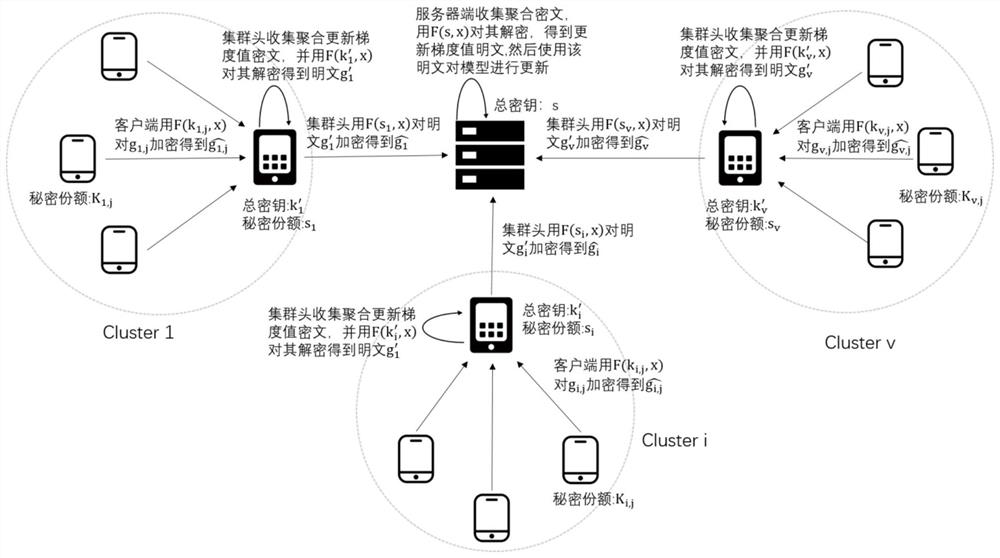

[0051] Embodiment 1. This embodiment provides a federated learning privacy protection method based on homomorphic pseudo-random numbers.

[0052] The federated learning privacy protection method based on homomorphic pseudo-random numbers includes the following steps:

[0053] S101: $n$ clients use verifiable secret sharing (VSS) to generate a key $s$ and divide it into $n$ shares, so that each client obtains its own secret share $s_i$, where $s_i$ denotes the secret share of the $i$-th client. At least $t$ clients participate in recovering the key $s$ and send it to the server. Both $n$ and $t$ are positive integers.

[0054] S102: Each client takes part in federated learning by training the machine learning model locally on its own data, which produces updated gradient values.

[0055] S103: Each client uses its secret share $s_i$ as the seed of the key-homomorphic pseudo-random function to generate a pseudo-random number $F(s_i, x)$, and uses $F(s_i, x)$ to encrypt the updated gradient value to ...
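The threshold behaviour described in S101 (n shares, any t of which recover the key) can be illustrated with plain Shamir secret sharing. This is a minimal sketch, not the patent's procedure: it assumes a single dealer, omits the commitments that make a scheme verifiable (VSS), and the names `PRIME`, `split_secret`, and `recover_secret` are hypothetical.

```python
import secrets

# Hypothetical field modulus (assumption): the Mersenne prime 2**127 - 1.
PRIME = 2**127 - 1


def split_secret(secret, n, t, prime=PRIME):
    """Split `secret` into n Shamir shares; any t of them recover it."""
    if not (1 <= t <= n):
        raise ValueError("need 1 <= t <= n")
    # Random polynomial of degree t - 1 whose constant term is the secret.
    coeffs = [secret % prime] + [secrets.randbelow(prime) for _ in range(t - 1)]
    shares = []
    for i in range(1, n + 1):
        # Evaluate the polynomial at x = i with Horner's rule.
        y = 0
        for c in reversed(coeffs):
            y = (y * i + c) % prime
        shares.append((i, y))
    return shares


def recover_secret(shares, prime=PRIME):
    """Lagrange-interpolate the polynomial at x = 0 from t or more shares."""
    secret = 0
    for j, (xj, yj) in enumerate(shares):
        num, den = 1, 1
        for m, (xm, _) in enumerate(shares):
            if m != j:
                num = (num * (-xm)) % prime
                den = (den * (xj - xm)) % prime
        # pow(den, -1, prime) computes the modular inverse (Python 3.8+).
        secret = (secret + yj * num * pow(den, -1, prime)) % prime
    return secret
```

With `shares = split_secret(s, n=5, t=3)`, any three entries of `shares` passed to `recover_secret` return `s`, while fewer than three reveal nothing about it in this scheme.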

Embodiment 2

[0111] Embodiment 2. This embodiment provides a federated learning privacy protection system based on homomorphic pseudo-random numbers.

[0112] The federated learning privacy protection system based on homomorphic pseudo-random numbers includes a server and several clients.

[0113] $n$ clients use verifiable secret sharing (VSS) to generate a key $s$ and divide it into $n$ shares, so that each client obtains its own secret share $s_i$. At least $t$ clients participate in recovering the key $s$ and send it to the server.

[0114] Each client takes part in federated learning by training the machine learning model locally on its own data, which produces updated gradient values.

[0115] Each client uses its secret share $s_i$ as the seed of the key-homomorphic pseudo-random function to generate a random number $F(s_i, x)$, uses $F(s_i, x)$ to encrypt the updated gradient value to obtain the updated gradient value ciphertext, and then sends the updated gradient value...
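The property that makes a key-homomorphic pseudo-random function useful for aggregation is $F(k_1, x) + F(k_2, x) = F(k_1 + k_2, x)$. The sketch below shows only that property, using a toy function `toy_khprf` that is linear in the key and therefore NOT a secure PRF. All names and the modulus `Q` are hypothetical. The sketch also assumes plain additive keys whose sum is known to the aggregator; how the source relates the shares $s_i$ to the recovered key $s$ during decryption is not shown in the text above.

```python
import hashlib

# Hypothetical modulus for the toy example (assumption).
Q = 2**61 - 1


def hash_to_int(x: bytes, q: int = Q) -> int:
    """Map a public input x (for example a round label) to an integer mod q."""
    return int.from_bytes(hashlib.sha256(x).digest(), "big") % q


def toy_khprf(key: int, x: bytes, q: int = Q) -> int:
    """Toy F(key, x) = key * H(x) mod q: key-homomorphic, but insecure."""
    return (key * hash_to_int(x, q)) % q


def mask(value: int, key: int, x: bytes, q: int = Q) -> int:
    """Hide an integer-encoded gradient entry by adding F(key, x) mod q."""
    return (value + toy_khprf(key, x, q)) % q


def unmask_sum(cipher_sum: int, key_sum: int, x: bytes, q: int = Q) -> int:
    """Remove the combined mask from a sum of ciphertexts."""
    return (cipher_sum - toy_khprf(key_sum, x, q)) % q


# Illustrative values only: three clients, one gradient entry each.
keys = [11, 22, 33]
grads = [5, 7, 9]
x = b"round-1"
ciphertexts = [mask(g, k, x) for g, k in zip(grads, keys)]
total = unmask_sum(sum(ciphertexts) % Q, sum(keys) % Q, x)
```

Here `total` equals the sum of the three plaintext entries, although the aggregator only ever handled masked values. A real deployment would need a construction with a security proof in place of `toy_khprf`.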

Embodiment 3

[0117] Embodiment 3. This embodiment also provides a client.

[0118] The client is configured to operate as follows:

[0119] $n$ clients use verifiable secret sharing (VSS) to generate a key $s$ and divide it into $n$ shares, so that each client obtains its own secret share $s_i$. At least $t$ clients participate in recovering the key $s$ and send it to the server.

[0120] Each client takes part in federated learning by training the machine learning model locally on its own data, which produces updated gradient values.

[0121] Each client uses its secret share $s_i$ as the seed of the key-homomorphic pseudo-random function to generate a random number $F(s_i, x)$, uses $F(s_i, x)$ to encrypt the updated gradient value to obtain the updated gradient value ciphertext, and then sends the updated gradient value ciphertext to the server.

[0122] The client receives the updated model fed back from the server.
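The text above does not say how real-valued gradients are represented before a pseudo-random number is added to them. One common approach, assumed here and not taken from the source, is fixed-point encoding into integers modulo $q$, so that additions of encoded values correspond to additions of the original gradients. The names `Q`, `SCALE`, `encode`, and `decode` are hypothetical.

```python
# Hypothetical parameters (assumptions): modulus and fixed-point scale.
Q = 2**61 - 1
SCALE = 2**16


def encode(values, q=Q, scale=SCALE):
    """Map floats to residues mod q; negatives wrap around to large residues."""
    return [round(v * scale) % q for v in values]


def decode(residues, q=Q, scale=SCALE):
    """Map residues back to floats, treating the upper half of [0, q) as negative."""
    out = []
    for r in residues:
        signed = r - q if r > q // 2 else r
        out.append(signed / scale)
    return out
```

The scale trades precision against headroom: the sum of all clients' scaled gradients must stay below `q // 2` in absolute value, otherwise `decode` returns a wrapped result.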
