Lightweight distributed federated learning system and method

This lightweight distributed federated learning system and method, in the field of learning systems and distributed technology, addresses problems such as the low efficiency of federated learning and the inability to reuse open-source libraries. It achieves a short development cycle and low development cost while ensuring data security.


Examples

Embodiment 1

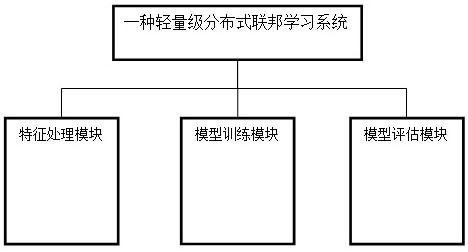

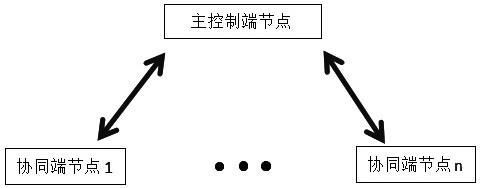

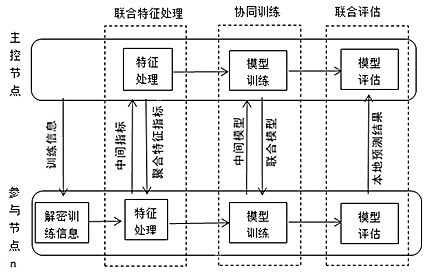

[0053] As shown in Figure 1 and Figure 2, a lightweight distributed federated learning system includes a master control end node and multiple cooperative end nodes, and further includes:

[0054] Feature processing module: used by the master control end node to schedule the cooperative end nodes to perform joint feature processing through the feature preprocessing interface;

[0055] Model training module: used by the master control end node to schedule federated model training on each cooperative end node through the model training interface;

[0056] Model evaluation module: used by the master control end node to aggregate the prediction results of the cooperative end nodes and evaluate model performance through the model evaluation interface.
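The three-module split can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the class names `CooperativeNode` and `MasterNode`, the method names standing in for the three interfaces, the toy one-feature linear model, joint standardization as the feature step, and FedAvg-style sample-weighted averaging are all hypothetical choices. What it shows is the division of labour: the master node only schedules calls and aggregates what comes back, while raw records stay on the cooperative nodes.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class CooperativeNode:
    """Hypothetical cooperative end node holding private (x, y) pairs."""
    xs: list[float]
    ys: list[float]
    mean: float = 0.0
    std: float = 1.0

    # Feature preprocessing interface (assumed): share only count, sum, sum of squares.
    def feature_stats(self) -> tuple[int, float, float]:
        return len(self.xs), sum(self.xs), sum(x * x for x in self.xs)

    def apply_scaling(self, mean: float, std: float) -> None:
        self.mean, self.std = mean, std

    def _z(self, x: float) -> float:
        return (x - self.mean) / self.std

    # Model training interface (assumed): a few local gradient steps on y ~ w*z + b.
    def train(self, w: float, b: float, lr: float = 0.1, steps: int = 5):
        n = len(self.xs)
        for _ in range(steps):
            gw = gb = 0.0
            for x, y in zip(self.xs, self.ys):
                err = w * self._z(x) + b - y
                gw += 2 * err * self._z(x) / n
                gb += 2 * err / n
            w, b = w - lr * gw, b - lr * gb
        return w, b, n

    # Model evaluation interface (assumed): return only the squared-error total and count.
    def evaluate(self, w: float, b: float) -> tuple[float, int]:
        sse = sum((w * self._z(x) + b - y) ** 2 for x, y in zip(self.xs, self.ys))
        return sse, len(self.xs)


@dataclass
class MasterNode:
    """Hypothetical master control end node: schedules and aggregates only."""
    nodes: list[CooperativeNode]
    w: float = 0.0
    b: float = 0.0

    def feature_processing(self) -> None:
        stats = [node.feature_stats() for node in self.nodes]
        n = sum(s[0] for s in stats)
        mean = sum(s[1] for s in stats) / n
        var = sum(s[2] for s in stats) / n - mean * mean
        std = sqrt(var) if var > 0 else 1.0
        for node in self.nodes:
            node.apply_scaling(mean, std)

    def model_training(self, rounds: int = 200) -> None:
        for _ in range(rounds):
            updates = [node.train(self.w, self.b) for node in self.nodes]
            total = sum(n for _, _, n in updates)
            # Sample-weighted average of the local parameters (FedAvg-style).
            self.w = sum(w * n for w, _, n in updates) / total
            self.b = sum(b * n for _, b, n in updates) / total

    def model_evaluation(self) -> float:
        results = [node.evaluate(self.w, self.b) for node in self.nodes]
        return sum(s for s, _ in results) / sum(n for _, n in results)


# Usage: two nodes whose data follow y = 2x + 1.
nodes = [CooperativeNode([1.0, 2.0, 3.0], [3.0, 5.0, 7.0]),
         CooperativeNode([4.0, 5.0], [9.0, 11.0])]
master = MasterNode(nodes)
master.feature_processing()
master.model_training()
print(master.model_evaluation())
```

Because the toy data are exactly linear, the aggregated mean squared error should approach zero after training. Note that `MasterNode` never reads `xs` or `ys`; it only sees counts, sums, model parameters, and error totals.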

[0057] In this embodiment, the interaction between nodes involves no specific private data, only irreversible intermediate data, which effectively ensures data security. In the machine learning task life cy...
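One way to read "irreversible intermediate data" is that nodes exchange aggregates from which individual records cannot be recovered. The source does not say which intermediate values are exchanged; the sketch below, with a hypothetical `summary_stats` helper, only illustrates the idea by showing two different private columns that produce identical (count, sum, sum of squares) triples. It is an intuition, not a formal privacy guarantee.

```python
def summary_stats(values: list[float]) -> tuple[int, float, float]:
    """Count, sum, and sum of squares: aggregates a node might share for joint scaling."""
    return len(values), sum(values), sum(v * v for v in values)


# Two different private columns that a hypothetical cooperative node might hold.
column_a = [1.0, 5.0, 6.0]
column_b = [2.0, 3.0, 7.0]

# Both columns yield (3, 12.0, 62.0), so the aggregates alone cannot identify the rows.
print(summary_stats(column_a), summary_stats(column_b))
```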

Embodiment 2

[0079] In this embodiment, joint feature processing includes, but is not limited to, missing-value handling, outlier handling, standardization, normalization, binarization, numericalization, one-hot encoding, and polynomial feature construction. In an optional implementation, if the training parameters specify a cross-validation method, each cooperative end node no longer trains a single local model. Instead, it splits its dataset according to the fixed cross-validation scheme and trains multiple models (one per fold) simultaneously. When communicating with the master control end node, it transmits the parameters of all these models at once, which achieves cross-validation while reducing the number of communications between nodes.
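The batched cross-validation described above can be sketched as follows. The fold rule `i mod k`, the helper names `fold_of`, `local_cv_update`, and `master_aggregate`, the one-parameter least-squares model, and sample-weighted averaging per fold are illustrative assumptions, not details from the source. Each node trains one model per fold on its remaining folds and uploads all k parameter sets in a single message, so one round costs one upload per node instead of k.

```python
def fold_of(index: int, k: int) -> int:
    # Fixed, deterministic split shared by all nodes: record i goes to fold i mod k.
    return index % k


def local_cv_update(xs: list[float], ys: list[float], k: int) -> list[tuple[float, int]]:
    """Train one model per fold (on the other k-1 folds); return all k in one message."""
    params = []
    for f in range(k):
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if fold_of(i, k) != f]
        sxy = sum(x * y for x, y in train)
        sxx = sum(x * x for x, _ in train)
        params.append((sxy / sxx, len(train)))  # least-squares slope of y ~ w*x
    return params


def master_aggregate(messages: list[list[tuple[float, int]]], k: int) -> list[float]:
    """Per-fold, sample-weighted average of the parameter sets received from each node."""
    merged = []
    for f in range(k):
        total = sum(msg[f][1] for msg in messages)
        merged.append(sum(msg[f][0] * msg[f][1] for msg in messages) / total)
    return merged


# Usage: two hypothetical nodes whose data follow y = 2x, with 3-fold cross-validation.
node_data = [
    ([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]),
    ([5.0, 6.0, 7.0], [10.0, 12.0, 14.0]),
]
K = 3
messages = [local_cv_update(xs, ys, K) for xs, ys in node_data]  # one upload per node
fold_models = master_aggregate(messages, K)
print(len(messages), fold_models)
```

Sending each fold model separately would take K uploads per node per round; bundling them keeps it at one, which is the communication saving the paragraph describes.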

[0080] In an optional implementation, the master control end node can perform model selection. The selection process includes: the master control end node iteratively updates the model hyperparameter com...
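Paragraph [0080] is cut off, so the exact update rule is unknown. Assuming a plain grid search over hyperparameter combinations, model selection at the master node could look like the sketch below. `select_model` and the `federated_score` callback, which stands in for one scheduled round of federated training plus aggregated evaluation, are hypothetical names, and `toy_score` is a made-up scoring function used only to exercise the loop.

```python
from itertools import product
from typing import Callable


def select_model(grid: dict[str, list], federated_score: Callable[[dict], float]):
    """Try every hyperparameter combination and keep the best-scoring one.

    `federated_score` is a hypothetical stand-in for scheduled federated training
    followed by aggregated evaluation; higher scores are better.
    """
    best_params, best_score = None, float("-inf")
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = federated_score(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy stand-in score that peaks at lr=0.1, rounds=20 (purely illustrative).
def toy_score(params: dict) -> float:
    return -abs(params["lr"] - 0.1) - abs(params["rounds"] - 20) / 100


best, score = select_model({"lr": [0.01, 0.1, 1.0], "rounds": [10, 20, 40]}, toy_score)
print(best, score)
```

Grid search is used here only because it is the simplest iterative scheme; random or Bayesian search would slot into the same loop by changing how `params` is generated.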

Embodiment 3

[0082] In this embodiment, each cooperative end node holds its own local sample data. The nodes' features overlap substantially, but their sample users overlap little, so federated learning is performed on the overlapping features; this training mode is called horizontal federated learning. In an optional implementation, the nodes' data features are not entirely the same, and federated learning is performed on the overlapping sample users; this training mode is called vertical federated learning.
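To make the distinction concrete, the sketch below picks a mode by comparing how much two nodes' feature names overlap with how much their sample IDs overlap. The Jaccard comparison rule and the `jaccard` and `choose_mode` helpers are assumptions for illustration; the source states no decision rule. The sets are also intersected in the clear here for simplicity, whereas a deployment concerned with data security would need a privacy-preserving way to align sample IDs.

```python
def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def choose_mode(features_a, features_b, ids_a, ids_b):
    """Return ("horizontal", shared features) or ("vertical", shared sample IDs)."""
    feat_overlap = jaccard(set(features_a), set(features_b))
    id_overlap = jaccard(set(ids_a), set(ids_b))
    if feat_overlap >= id_overlap:
        # Similar columns, mostly different users: federate over the shared features.
        return "horizontal", sorted(set(features_a) & set(features_b))
    # Different columns, largely the same users: federate over the shared sample IDs.
    return "vertical", sorted(set(ids_a) & set(ids_b))


# Two hypothetical banks: similar columns, different customers.
print(choose_mode(["age", "income", "balance"], ["age", "income", "loans"],
                  ["u1", "u2", "u3"], ["u7", "u8", "u3"]))
# A hypothetical retailer and bank: different columns, mostly the same customers.
print(choose_mode(["purchases", "visits"], ["age", "income"],
                  ["u1", "u2", "u3"], ["u1", "u2", "u4"]))
```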

[0083] To aid understanding, here is an example. As shown in Figure 5, the sample dimensions and features of cooperative end node 1 and cooperative end node 2 partially overlap. First, the intersecting feature data X1 and X2 of cooperative end nodes 1 and 2 are determined, and then horizontal federated learning is performed. As shown in Figure 4, for the common features, during joint feature processing the two cooperative end nodes perfo...
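The first step of this example, finding the shared features and restricting each node's data to them, can be sketched as below. The helpers `shared_features` and `project`, the use of column names as the matching key, and keeping node 1's column order are assumptions. Only column names are compared; each node would run `project` on its own rows to obtain its X1 or X2.

```python
def shared_features(columns_1: list[str], columns_2: list[str]) -> list[str]:
    """Feature names present on both nodes, kept in node 1's column order."""
    other = set(columns_2)
    return [c for c in columns_1 if c in other]


def project(columns: list[str], rows: list[list[float]], shared: list[str]) -> list[list[float]]:
    """Run locally on each node: keep only the shared columns, in the agreed order."""
    positions = [columns.index(c) for c in shared]
    return [[row[p] for p in positions] for row in rows]


# Hypothetical column layouts for cooperative end nodes 1 and 2.
cols_1, rows_1 = ["age", "income", "tenure"], [[30, 5.0, 2], [45, 7.5, 10]]
cols_2, rows_2 = ["income", "region", "age"], [[6.0, 1, 38]]

shared = shared_features(cols_1, cols_2)
X1 = project(cols_1, rows_1, shared)  # computed on node 1
X2 = project(cols_2, rows_2, shared)  # computed on node 2
print(shared, X1, X2)
```

Agreeing on a single column order matters because horizontal training averages parameters position by position; without it, node 2's "income" weight would be averaged with node 1's "age" weight.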
