Motion capture system and method based on laser large-space positioning and optical-inertial complementarity

A motion capture technology for large spaces, applicable to optical devices, user/computer interaction input/output, instruments, and related fields. It addresses the problems that optical positioning is easily occluded and that inertial motion capture accumulates error and loses motion. The effects achieved are the elimination of foot drift, a reduced number of cameras, and a lower likelihood of these problems.
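
The summary pairs occlusion-prone optical positioning with drift-prone inertial capture, but it does not state the fusion algorithm. As a minimal sketch, assuming a simple complementary filter (not necessarily the patented method), the idea can be shown for one tracked point such as a foot: inertial velocity is integrated every frame, and whenever an optical fix is available the estimate is pulled toward it. The class name `ComplementaryTracker`, the gain `alpha`, and the use of an IMU-derived velocity as input are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ComplementaryTracker:
    """Hypothetical optical-inertial fusion for a single tracked point (e.g. a foot).

    Inertial data are integrated every frame (dead reckoning), which drifts.
    When the laser/optical system delivers an absolute fix (marker not
    occluded), the estimate is blended toward it with gain `alpha`.
    """

    position: Vec3
    alpha: float = 0.2  # hypothetical correction gain, 0 < alpha <= 1

    def step(self, velocity: Vec3, dt: float, optical_fix: Optional[Vec3] = None) -> Vec3:
        # Inertial prediction: integrate the IMU-derived velocity over dt.
        px, py, pz = (p + v * dt for p, v in zip(self.position, velocity))
        if optical_fix is None:
            # Occluded: fall back on the inertial prediction alone.
            self.position = (px, py, pz)
        else:
            # Visible: correct accumulated drift toward the absolute optical position.
            a = self.alpha
            ox, oy, oz = optical_fix
            self.position = (
                (1 - a) * px + a * ox,
                (1 - a) * py + a * oy,
                (1 - a) * pz + a * oz,
            )
        return self.position


if __name__ == "__main__":
    bias: Vec3 = (0.01, 0.0, 0.0)  # stationary foot, IMU velocity bias of 1 cm/s
    inertial_only = ComplementaryTracker((0.0, 0.0, 0.0))
    fused = ComplementaryTracker((0.0, 0.0, 0.0))
    for _ in range(100):  # 10 s at 10 Hz
        inertial_only.step(bias, 0.1)
        fused.step(bias, 0.1, optical_fix=(0.0, 0.0, 0.0))
    print(f"inertial only drift: {inertial_only.position[0]:.4f} m")
    print(f"fused drift:         {fused.position[0]:.4f} m")
```

With these assumed numbers the inertial-only estimate drifts by about 10 cm over 10 s. The fused estimate settles at a bounded error of (1 − α)·b·Δt/α = 0.8 × 0.001 / 0.2 = 4 mm, which illustrates how periodic optical fixes can keep foot drift from accumulating.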

Embodiment Construction

[0053] In order to make the above objects, features and advantages of the present invention more comprehensible, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

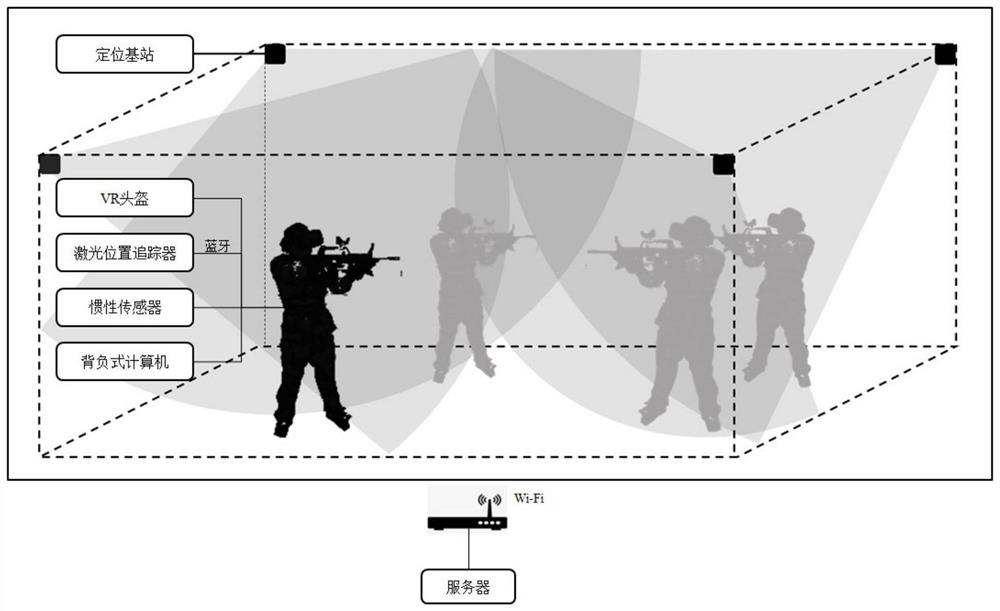

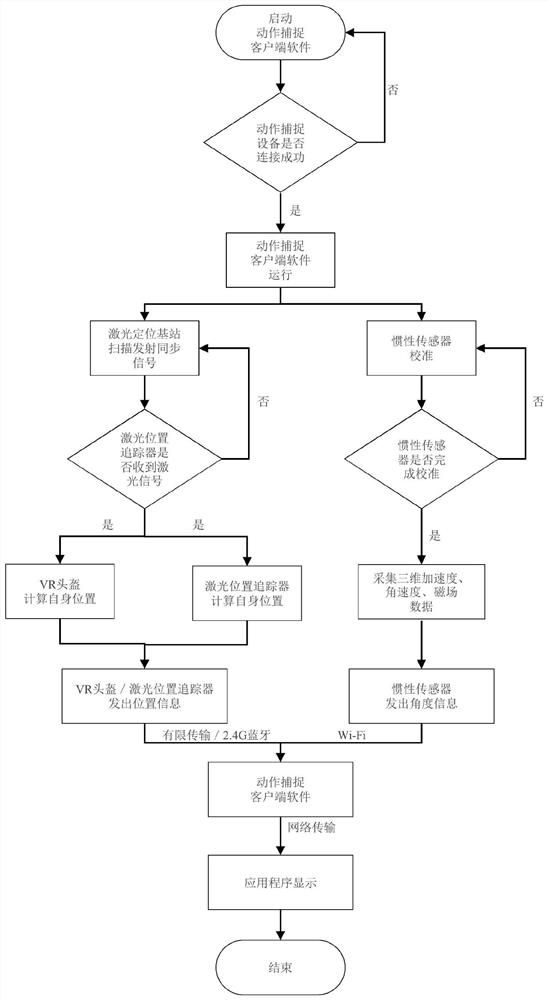

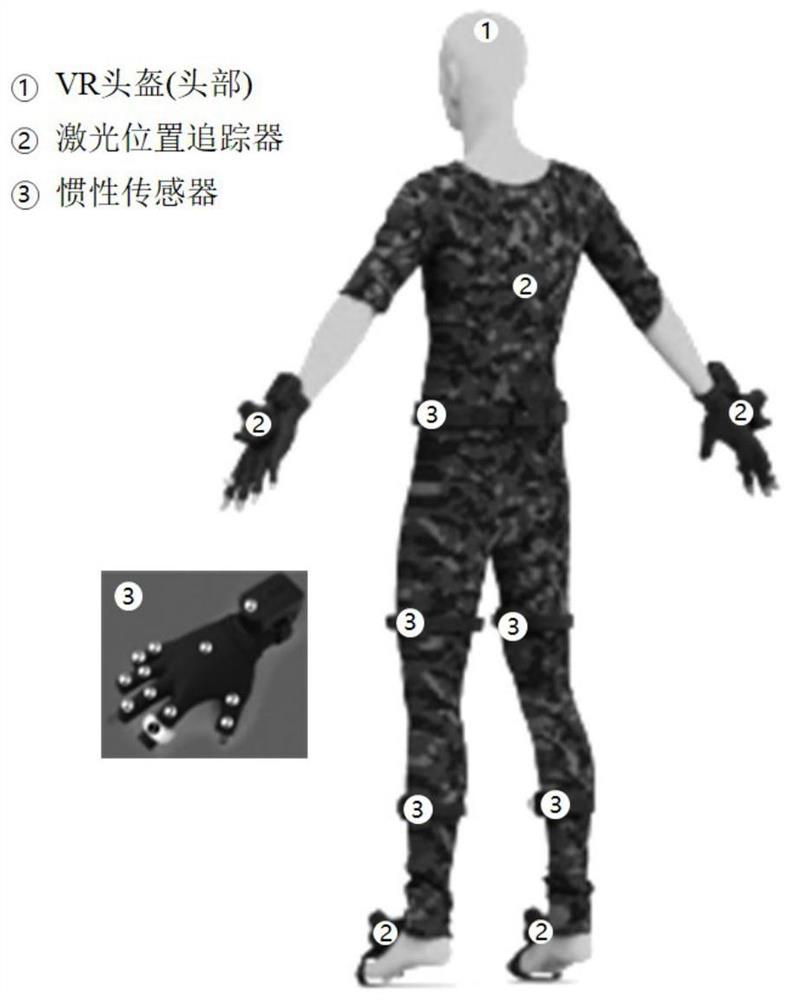

[0054] This embodiment of the present invention discloses a motion capture method based on laser large-space positioning and optical-inertial complementarity. The method is intended for military and civilian users such as armed forces, armed police, and public security agencies, and provides immersive virtual simulation for application scenarios such as routine training and emergency drills. The training solution lets trainees enter a highly realistic virtual training environment, where the motion capture system synchronously maps each real person's actions onto a virtual avatar. This enables cooperation and confrontation between real persons, and between real persons and virtual...
