Pipelined computing acceleration co-processing method and system

A pipelined co-processing technology, applied in computing, electrical digital data processing, program control design, and related fields. It addresses the difficulty of achieving high computing efficiency and the resulting waste of resources, with the effect of reducing computing time slots, improving throughput, and improving work efficiency.

Examples

Embodiment 1

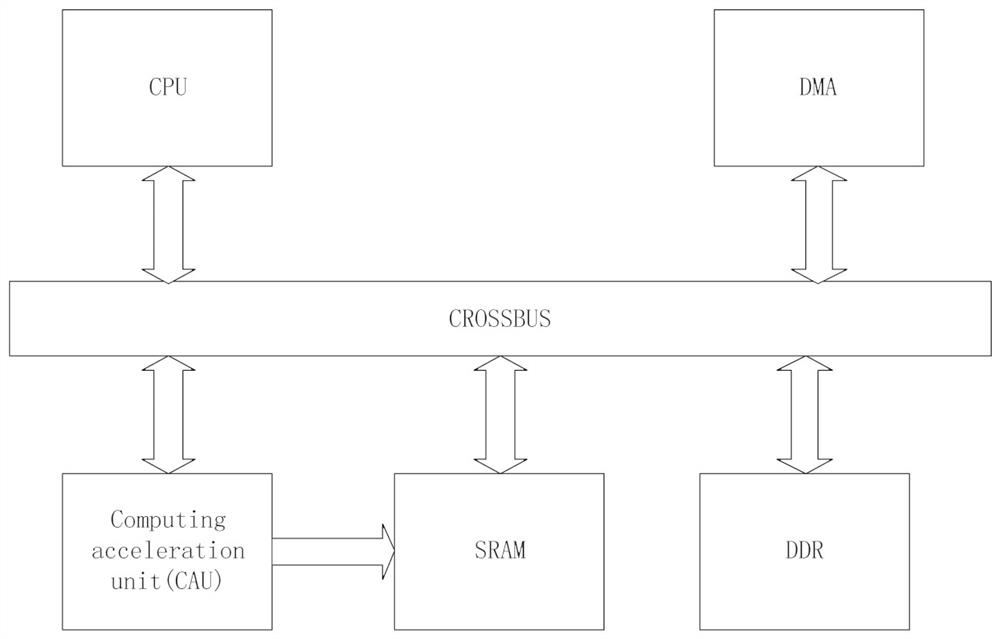

[0033] See Figure 3, a schematic flowchart of a pipelined computing acceleration co-processing method disclosed in an embodiment of the present invention. The method can be applied in a computing acceleration co-processing system that includes multiple computing units, each used to perform operations of a different level. The embodiment of the present invention does not limit the computing acceleration co-processing system. As shown in Figure 3, the pipelined computing acceleration co-processing method may include the following operations:

[0034] 101. Receive a plurality of operation groups to be calculated, and analyze the operation groups to generate the number of operations to be performed and the operands of each level of operation.

[0035] For ease of understanding, the computing units are implemented as a pipelined structure CAU0, CAU1...CAUn. com...
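The general idea of passing several operation groups through a chain of units CAU0, CAU1...CAUn can be sketched as below. This is a minimal illustration under stated assumptions, not the scheduling described in the patent, whose text is truncated here. It assumes that unit i handles level i, that one operation group enters the pipeline per time slot, and that every level takes exactly one slot. The function name `pipeline_schedule` and the level counts are hypothetical.

```python
from typing import Dict, List


def pipeline_schedule(level_counts: List[int]) -> List[Dict[int, int]]:
    """Build a slot-by-slot schedule for a simple linear pipeline.

    level_counts[g] is the number of levels in operation group g
    (assumed: one level per computing unit, one slot per level).
    Returns a list of slots; each slot maps unit index -> group index.
    """
    if not level_counts:
        return []
    n_units = max(level_counts)
    n_slots = len(level_counts) + n_units - 1
    schedule: List[Dict[int, int]] = []
    for t in range(n_slots):
        slot: Dict[int, int] = {}
        for unit in range(n_units):
            g = t - unit  # group g entered the pipeline at slot g
            if 0 <= g < len(level_counts) and unit < level_counts[g]:
                slot[unit] = g
        schedule.append(slot)
    # Drop trailing slots in which no unit is busy.
    while schedule and not schedule[-1]:
        schedule.pop()
    return schedule


# Hypothetical example: three groups with 3, 2, and 3 levels.
levels = [3, 2, 3]
slots = pipeline_schedule(levels)
pipelined_slots = len(slots)
sequential_slots = sum(levels)
```

With these hypothetical level counts the overlapped schedule occupies 5 time slots, whereas running the groups one after another would take 8. The saving comes from different units working on different groups in the same slot.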

Embodiment 2

[0051] See Figure 5, a schematic flowchart of another pipelined computing acceleration co-processing method disclosed in an embodiment of the present invention. The method can be applied in a computing acceleration co-processing system that includes multiple computing units, each used to perform operations of a different level. The embodiment of the present invention does not limit the computing acceleration co-processing system. As shown in Figure 5, the pipelined computing acceleration co-processing method may include the following operations:

[0052] In this embodiment, the operation groups to be calculated include: the first operation group K1=(A27(A26(...)0), the second operation group K2=(B20(B19(...)0), and the third operation group K3=(C22(C21(...)0).

[0053] Firstly, the number of operations of K1, K2, and K3 a...

Embodiment 3

[0062] See Figure 7, a schematic diagram of a pipelined computing acceleration co-processing system disclosed in an embodiment of the present invention. As shown in Figure 7, the pipelined computing acceleration co-processing system may include:

[0063] A plurality of computing units 1, each used to perform operations of a different level.

[0064] An operand management unit 2, configured to receive a plurality of operation groups to be calculated and to analyze them to generate the number of operations to be performed and the operands of each level of operation.

[0065] The computing units are implemented as a pipelined structure CAU0, CAU1...CAUn. An implementation of the pipelined computing acceleration co-processing method is described in detail with reference to Figure 4. This implementation includes an operand management unit (operands...
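The division of labor between the operand management unit and the level-specific computing units might be modeled as in the following sketch. It is a structural illustration only and does not reproduce the patent's design: the class names, the `run` helper, the use of addition as the per-level operation, and the sample operands are all assumptions made for the example. The groups are evaluated one after another here, so the sketch shows the data flow rather than the pipelined overlap.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ComputingUnit:
    """One unit of the chain CAU0, CAU1...CAUn; handles a single level."""
    level: int
    op: Callable[[int, int], int]  # placeholder operation (assumption)

    def compute(self, acc: int, operand: int) -> int:
        return self.op(acc, operand)


class OperandManagementUnit:
    """Receives operation groups and derives, for each group, the number
    of operations and the operand used at each level."""

    def analyze(
        self, groups: Dict[str, List[int]]
    ) -> Dict[str, Tuple[int, Dict[int, int]]]:
        return {
            name: (len(operands), dict(enumerate(operands)))
            for name, operands in groups.items()
        }


def run(groups: Dict[str, List[int]], units: List[ComputingUnit],
        initial: int = 0) -> Dict[str, int]:
    """Feed each group's per-level operands through the matching units."""
    plan = OperandManagementUnit().analyze(groups)
    results: Dict[str, int] = {}
    for name, (count, per_level) in plan.items():
        acc = initial
        for level in range(count):
            acc = units[level].compute(acc, per_level[level])
        results[name] = acc
    return results


# Hypothetical usage: three units that each add their operand.
units = [ComputingUnit(level=i, op=lambda a, b: a + b) for i in range(3)]
results = run({"K1": [1, 2, 3], "K2": [4, 5]}, units)
```

Keeping the analysis step separate from the units means the per-level operands are known before any unit starts, which is what would let a scheduler interleave several groups across the units.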
