Image recognition method based on eye movement fixation point guidance, MR glasses and medium

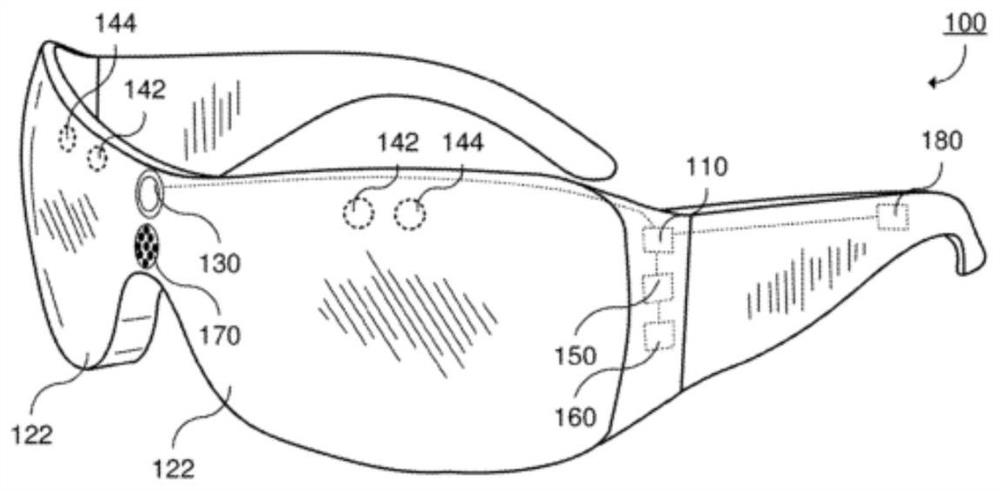

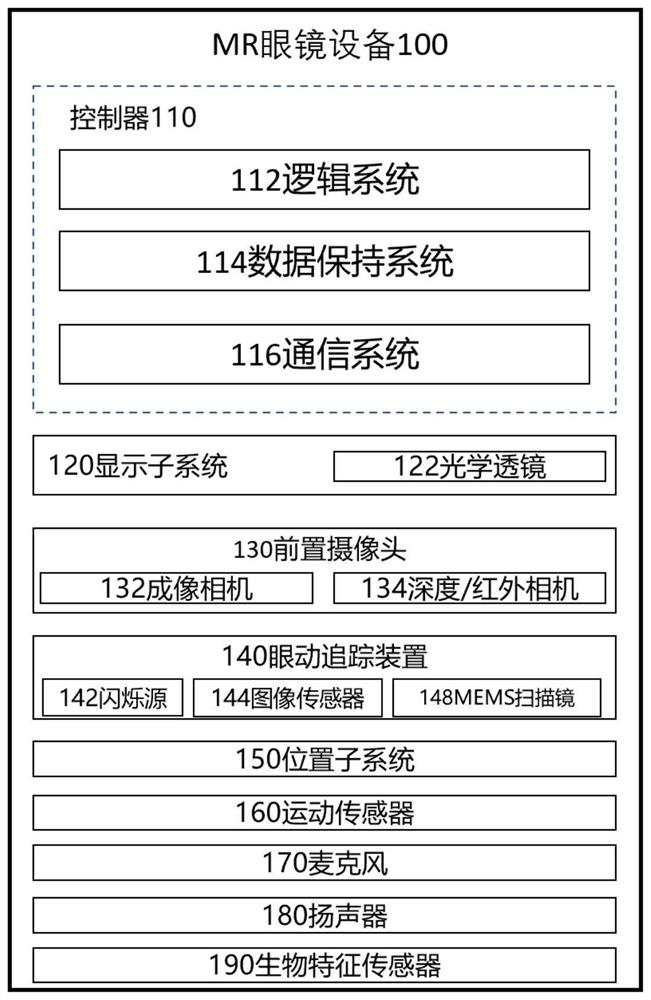

An image recognition technology guided by eye-movement gaze points, applied in the field of image recognition. It addresses problems such as poor interactive experience, failure to give users a better interactive experience, and limited recognition of virtual holographic targets.



Examples

Embodiment 1

[0276] Example 1: As shown in Figure 4, the image is obtained by combining an infrared camera with a color camera.

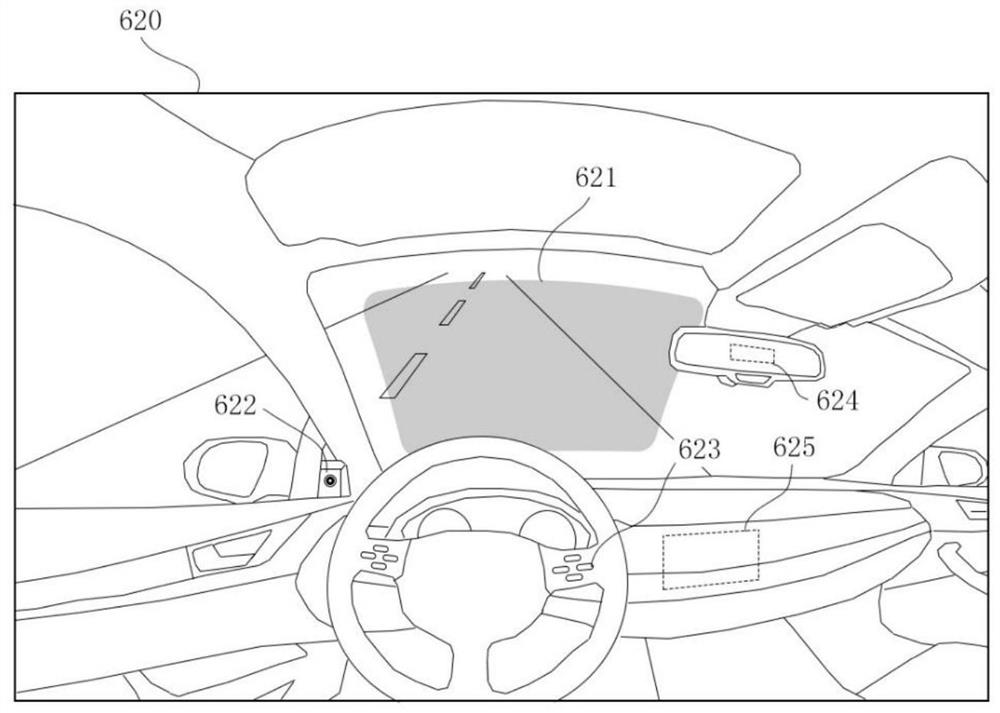

[0277] S101: The infrared camera of the MR glasses is used to construct a three-dimensional representation of the physical world, and real black-and-white images are captured by the infrared camera in real time.
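The text does not say how the infrared camera builds the three-dimensional space. As a minimal sketch, assuming the infrared sensor delivers a per-pixel metric depth map and follows a calibrated pinhole model, each valid depth pixel can be back-projected into camera coordinates to form a point cloud. `Intrinsics`, `back_project` and `depth_to_points` are hypothetical names, not part of the patent.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Pinhole intrinsics of the (assumed calibrated) infrared camera, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def back_project(u: float, v: float, depth: float, k: Intrinsics) -> Point3:
    """Lift pixel (u, v) with metric depth to a 3D point in the camera frame."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


def depth_to_points(depth_map: Sequence[Sequence[float]], k: Intrinsics) -> List[Point3]:
    """Convert a row-major depth map to a point cloud; depth <= 0 marks invalid pixels."""
    points: List[Point3] = []
    for v, row in enumerate(depth_map):
        for u, d in enumerate(row):
            if d > 0:
                points.append(back_project(u, v, d, k))
    return points
```

A complete reconstruction would also fuse points from successive frames using the pose of the glasses; that step is omitted here.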

[0278] S102: The eye-tracking device of the MR glasses obtains the direction of the user's line of sight, or the head-tracking device obtains the gaze point at the center of the user's field of view; a mapping algorithm then gives the coordinate position of the user's gaze point in one or more front-camera images and in the holographic space.
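The mapping algorithm in S102 is not specified. One plausible sketch places the 3D gaze point on the gaze ray, transforms it into the front-camera frame and projects it to a pixel. It assumes that the eye tracker reports a gaze ray (origin and unit direction) in its own frame, that a fixation depth is available (for example from vergence or from the 3D map of S101), and that the eye-tracker-to-camera extrinsics `R`, `t` and the camera's pinhole intrinsics are calibrated. The intermediate 3D point is itself a candidate gaze coordinate in the holographic space. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Pinhole intrinsics of the front camera, in pixels (assumed calibrated)."""
    fx: float
    fy: float
    cx: float
    cy: float


def mat_vec(r: Sequence[Sequence[float]], p: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return (
        sum(r[0][j] * p[j] for j in range(3)),
        sum(r[1][j] * p[j] for j in range(3)),
        sum(r[2][j] * p[j] for j in range(3)),
    )


def gaze_to_pixel(eye_origin: Vec3, gaze_dir: Vec3, depth: float,
                  r_eye_to_cam: Sequence[Sequence[float]], t_eye_to_cam: Vec3,
                  k: Intrinsics) -> Optional[Tuple[float, float]]:
    """Map a gaze ray to the front-camera pixel it points at, or None if not visible."""
    # 3D gaze point: walk `depth` metres along the unit gaze direction
    p_eye = tuple(o + depth * d for o, d in zip(eye_origin, gaze_dir))
    # Rigid transform into the camera frame using calibrated extrinsics (R, t)
    rx, ry, rz = mat_vec(r_eye_to_cam, p_eye)
    x, y, z = rx + t_eye_to_cam[0], ry + t_eye_to_cam[1], rz + t_eye_to_cam[2]
    if z <= 0:
        return None  # point lies behind the camera, no valid pixel
    # Perspective projection with the pinhole model
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)
```

For several front cameras, the same function would be called once per camera with that camera's own extrinsics and intrinsics.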

[0279] S103: The local processor and local database of the MR glasses perform AI image analysis on the black-and-white images captured by the infrared camera in S101, identify at least one object in the image using the trained object feature library, and adaptively frame the target object in the...
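S103 is truncated before it explains how the target object is framed. One hypothetical heuristic links the gaze point from S102 to the detections produced by the object feature library. It picks the smallest detection box that contains the gaze point and, failing that, the nearest box within a pixel tolerance that absorbs eye-tracking error. `Detection`, `select_gazed_object` and `max_dist` are illustrative assumptions.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in image pixels


@dataclass
class Detection:
    label: str
    box: Box
    score: float


def _contains(box: Box, x: float, y: float) -> bool:
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def _area(box: Box) -> float:
    x0, y0, x1, y1 = box
    return (x1 - x0) * (y1 - y0)


def _center_dist(box: Box, x: float, y: float) -> float:
    x0, y0, x1, y1 = box
    return hypot((x0 + x1) / 2 - x, (y0 + y1) / 2 - y)


def select_gazed_object(dets: Sequence[Detection], gaze: Tuple[float, float],
                        max_dist: float = 50.0) -> Optional[Detection]:
    """Return the detection the user is most likely looking at, or None."""
    x, y = gaze
    hits = [d for d in dets if _contains(d.box, x, y)]
    if hits:
        # Nested boxes (e.g. cup on table): prefer the most specific one
        return min(hits, key=lambda d: _area(d.box))
    if not dets:
        return None
    # Gaze fell between boxes: accept the nearest centre if it is close enough
    nearest = min(dets, key=lambda d: _center_dist(d.box, x, y))
    return nearest if _center_dist(nearest.box, x, y) <= max_dist else None
```

Preferring the smallest containing box reflects an assumption that a gaze inside nested boxes targets the inner object.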

Embodiment 2

[0343] Embodiment 2: A real-scene image is obtained by combining the IR camera and the RGB camera, and scene analysis and behavior analysis are used to predict the target object the user is interested in and to recognize it in the image.

[0344] S201: The infrared camera of the MR glasses is used to construct a three-dimensional representation of the physical world, and black-and-white images are captured by the infrared camera in real time.

[0345] S202: The eye-tracking device of the MR glasses obtains the direction of the user's line of sight, or the head-tracking device obtains the gaze point at the center of the user's field of view; a mapping algorithm then gives the coordinate position of the user's gaze point in one or more front-camera images and in the holographic space.

[0346] S203: Objects and sounds in the scene are detected; the local processor and local database of the MR glasses perform AI image analysis on the black-and-white images captured...
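How scene analysis and behavior analysis are combined is cut off in the text. As an illustrative assumption only, behavior could be summarized as the share of recent frames in which the gaze fell on each object, and scene analysis as a per-object relevance score in [0, 1]. A weighted sum of the two then ranks the candidates. The weighting scheme, the `alpha` parameter and `predict_interest` are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Optional


def predict_interest(gaze_hits: Iterable[Optional[str]],
                     scene_scores: Dict[str, float],
                     alpha: float = 0.7) -> Optional[str]:
    """Rank candidate objects by a weighted mix of gaze dwell and scene relevance.

    gaze_hits: per-frame label of the object under the gaze point (None = nothing).
    scene_scores: hypothetical per-object relevance in [0, 1] from scene analysis.
    alpha: weight on the behavioural (dwell) term; 1 - alpha goes to the scene term.
    """
    hits = list(gaze_hits)
    if not hits:
        return None
    counts = Counter(h for h in hits if h is not None)
    labels = sorted(set(counts) | set(scene_scores))  # sorted for deterministic ties
    if not labels:
        return None

    def score(label: str) -> float:
        dwell_share = counts[label] / len(hits)  # Counter returns 0 for unseen labels
        return alpha * dwell_share + (1 - alpha) * scene_scores.get(label, 0.0)

    return max(labels, key=score)
```

With `alpha` near 1 the prediction follows where the user has been looking; lowering it lets scene context dominate.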

Embodiment 3

[0380] Embodiment 3: A real-scene image is obtained by combining the IR camera and the RGB camera, and the user's eye-movement interaction intention is used to predict the target object the user is interested in and to recognize it in the image.
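The document does not describe how eye-movement interaction intention is extracted. A common, well-documented building block is dispersion-threshold fixation identification (I-DT). It is swapped in here and not taken from the patent. Under I-DT, a fixation is a run of gaze samples that lasts at least a minimum duration and whose horizontal spread plus vertical spread stays under a threshold. A sustained fixation on an object could then be treated as a signal of interest. The thresholds and function names below are assumptions.

```python
from typing import List, Sequence, Tuple

# One gaze sample: (timestamp in seconds, x, y), e.g. image pixels from the gaze mapping
Sample = Tuple[float, float, float]
# One fixation: (start time, end time, centroid x, centroid y)
Fixation = Tuple[float, float, float, float]


def _dispersion(window: Sequence[Sample]) -> float:
    """I-DT dispersion: horizontal spread plus vertical spread."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: Sequence[Sample], max_dispersion: float,
                     min_duration: float) -> List[Fixation]:
    """Dispersion-threshold fixation identification over time-ordered samples."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the initial window until it spans at least min_duration
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # not enough remaining data for a fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the spread stays under the threshold
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1  # continue after the fixation
        else:
            i += 1  # drop the first sample (treated as part of a saccade)
    return fixations
```

The window's dispersion is recomputed on every extension, which is quadratic in the worst case but keeps the sketch short. Each fixation centroid could then be matched against detected objects as in S303.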

[0381] S301: The infrared camera of the MR glasses is used to construct a three-dimensional representation of the physical world, and black-and-white images are captured by the infrared camera in real time.

[0382] S302: The eye-tracking device of the MR glasses obtains the direction of the user's line of sight, or the head-tracking device obtains the gaze point at the center of the user's field of view; a mapping algorithm then gives the coordinate position of the user's gaze point in one or more front-camera images and in the holographic space.

[0383] S303: The local processor and local database of the MR glasses perform AI image analysis on the black-and-white images captured by the infrared camera in S301, iden...
