Multi-sensor fusion perception method and system for autonomous driving under extreme working conditions

A multi-sensor fusion technology for autonomous driving, applied in the fields of instruments, character and pattern recognition, and computer components. It addresses the problem that the fault tolerance and robustness of fusion systems have not been well resolved.

Embodiment Construction

[0048] In order to make the purpose, technical solution and advantages of the present application clearer, the present application will be further described in detail below in conjunction with the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only used to explain the present application, and are not intended to limit the present application.

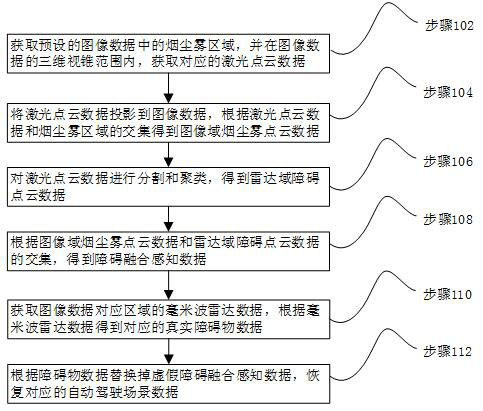

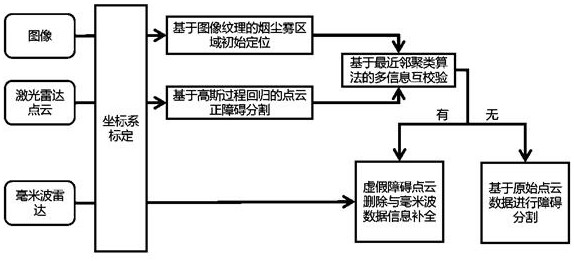

[0049] In one embodiment, as shown in Figure 1, a multi-sensor fusion perception method for autonomous driving under extreme working conditions is provided, including the following steps:

[0050] Step 102: acquire the smoke and dust area in the preset image data, and acquire the corresponding laser point cloud data that falls within the three-dimensional viewing frustum (viewing cone) of the image data.
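The frustum selection in Step 102 can be illustrated with a minimal sketch. It is not the patent's implementation. It assumes a pinhole camera model, a smoke and dust area given as an axis-aligned pixel box, and laser points that have already been transformed into the camera frame (z pointing forward). The function name `points_in_frustum`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the depth limits are all hypothetical names chosen for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]          # (x, y, z) in the camera frame, metres
Box = Tuple[float, float, float, float]     # (u_min, v_min, u_max, v_max) in pixels


def points_in_frustum(
    points_cam: Sequence[Point],
    box: Box,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    min_depth: float = 0.1,
    max_depth: float = 100.0,
) -> List[Point]:
    """Keep points whose pinhole projection lands inside the 2D box.

    Assumption: the lidar-to-camera extrinsic transform has already been
    applied, so every point is expressed in the camera frame.
    """
    u_min, v_min, u_max, v_max = box
    selected: List[Point] = []
    for x, y, z in points_cam:
        # Discard points behind the camera or outside the assumed depth range.
        if not (min_depth <= z <= max_depth):
            continue
        # Pinhole projection onto the image plane.
        u = fx * x / z + cx
        v = fy * y / z + cy
        # The box extruded along the viewing rays is the 3D frustum.
        if u_min <= u <= u_max and v_min <= v <= v_max:
            selected.append((x, y, z))
    return selected


# Hypothetical data: four points and a box around a suspected smoke region.
cloud = [(0.0, 0.0, 10.0), (5.0, 0.0, 10.0), (0.0, 0.0, -2.0), (0.5, 0.2, 20.0)]
smoke_box = (300.0, 200.0, 340.0, 280.0)
picked = points_in_frustum(cloud, smoke_box, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(picked)
```

In this toy input, the second point projects outside the box and the third lies behind the camera, so only the first and fourth points are kept. A real pipeline would likely use a segmentation mask instead of a box and a calibrated extrinsic matrix; both are outside what the text specifies.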

[0051] Because the visible-light camera provides rich texture information, the smoke and dust area can be initially located from the appearance information in the acquired image data, b...
