Assembly part relative pose estimation monitoring method based on deep learning

A technology combining relative pose estimation and deep learning, applied in computing, image analysis, image enhancement, etc. It can solve problems such as occlusion of assembly parts, lack of overall correlation, and failure to estimate the relative pose of assembly parts.

Examples

Embodiment 1

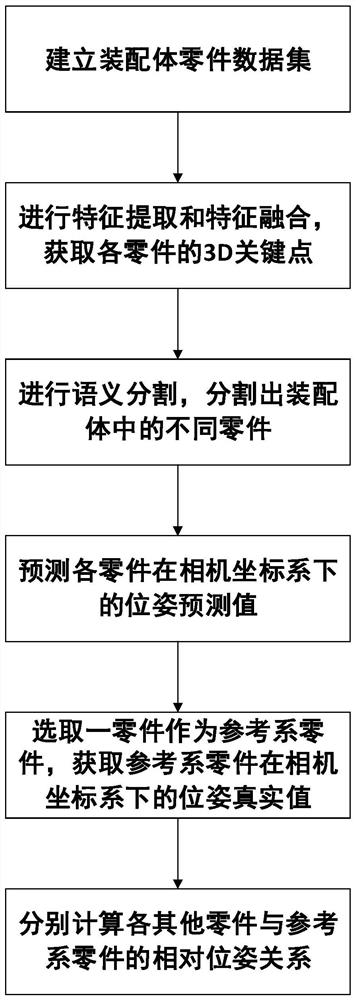

[0045] Referring to Figure 1, a method for monitoring relative pose estimation of assembly parts based on deep learning includes the following steps:

[0046] Establish the assembly part data set: move the camera around the target assembly along a surrounding sphere, photographing the target assembly at set angular intervals to obtain images of it from different angles; from the collected images, generate the point cloud data set corresponding to each part in the assembly, and establish a sample data set;
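The acquisition step in [0046] can be sketched as a set of camera poses on a sphere around the assembly. This is a minimal sketch under stated assumptions: the sphere radius, the 30° azimuth step, the elevation rings, and the function names `sphere_viewpoints` and `look_at` are all hypothetical, since the source does not give the actual angles or distances. The camera follows the OpenCV convention (x right, y down, z along the optical axis).

```python
import numpy as np


def look_at(eye, target, up=(0.0, 0.0, 1.0)):
    """Camera-to-world rotation whose +z (optical) axis points from eye to target.

    Columns are the camera x (right), y (down) and z (forward) axes in world
    coordinates. Degenerate if the viewing direction is parallel to `up`.
    """
    z = np.asarray(target, float) - np.asarray(eye, float)
    z /= np.linalg.norm(z)
    x = np.cross(z, up)
    x /= np.linalg.norm(x)
    y = np.cross(z, x)  # completes a right-handed frame (y points "down")
    return np.column_stack([x, y, z])


def sphere_viewpoints(center, radius, az_step_deg=30.0,
                      el_levels_deg=(15.0, 45.0, 75.0)):
    """Hypothetical capture plan: camera positions on a sphere around `center`.

    Elevation 90 deg is excluded because look_at is degenerate straight overhead.
    Returns a list of (position, rotation) pairs.
    """
    center = np.asarray(center, dtype=float)
    poses = []
    for el in np.radians(el_levels_deg):
        for az in np.radians(np.arange(0.0, 360.0, az_step_deg)):
            # spherical -> Cartesian offset from the assembly center
            offset = radius * np.array([np.cos(el) * np.cos(az),
                                        np.cos(el) * np.sin(az),
                                        np.sin(el)])
            position = center + offset
            poses.append((position, look_at(position, center)))
    return poses
```

With the default assumptions this yields 12 azimuths on each of 3 elevation rings, i.e. 36 shots per assembly; denser steps trade capture time for better coverage of occluded parts.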

[0047] Select 3D key points: load the sample data set into the deep learning network (in this example, a dedicated extraction network is used) for feature extraction to obtain the surface information and geometric information of each part in the target assembly, and fuse the surface information and geometric information of each part to obtain the point-wise features of each part; perform 3D key point detection on the point-wise features...
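The key point selection and feature fusion in [0047] might look like the following. The source does not name its key point selection rule or fusion operator, so this sketch assumes two common choices: farthest point sampling to spread key points over a part, and per-point concatenation of surface and geometric features plus a max-pooled global feature (in the style of DenseFusion). The helper names and feature sizes are hypothetical, and plain NumPy arrays stand in for learned network features.

```python
import numpy as np


def farthest_point_sampling(points, k, start=0):
    """Greedy FPS: return k indices spread as far apart as possible over `points` (N, 3)."""
    chosen = [start]
    # distance from every point to its nearest already-chosen key point
    dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(1, k):
        nxt = int(np.argmax(dist))
        chosen.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(points - points[nxt], axis=1))
    return np.array(chosen)


def fuse_pointwise(surface_feat, geom_feat):
    """Assumed fusion: per-point concat of surface (N, Cs) and geometric (N, Cg)
    features, then a max-pooled global feature appended to every point."""
    if surface_feat.shape[0] != geom_feat.shape[0]:
        raise ValueError("feature arrays must have one row per point")
    local = np.concatenate([surface_feat, geom_feat], axis=1)    # (N, Cs+Cg)
    global_feat = local.max(axis=0, keepdims=True)               # (1, Cs+Cg)
    global_rep = np.repeat(global_feat, local.shape[0], axis=0)  # (N, Cs+Cg)
    return np.concatenate([local, global_rep], axis=1)           # (N, 2*(Cs+Cg))
```

Appending a global feature gives each point some context about the whole part, which is one way a network can cope with parts that are partly occluded.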

Embodiment 2

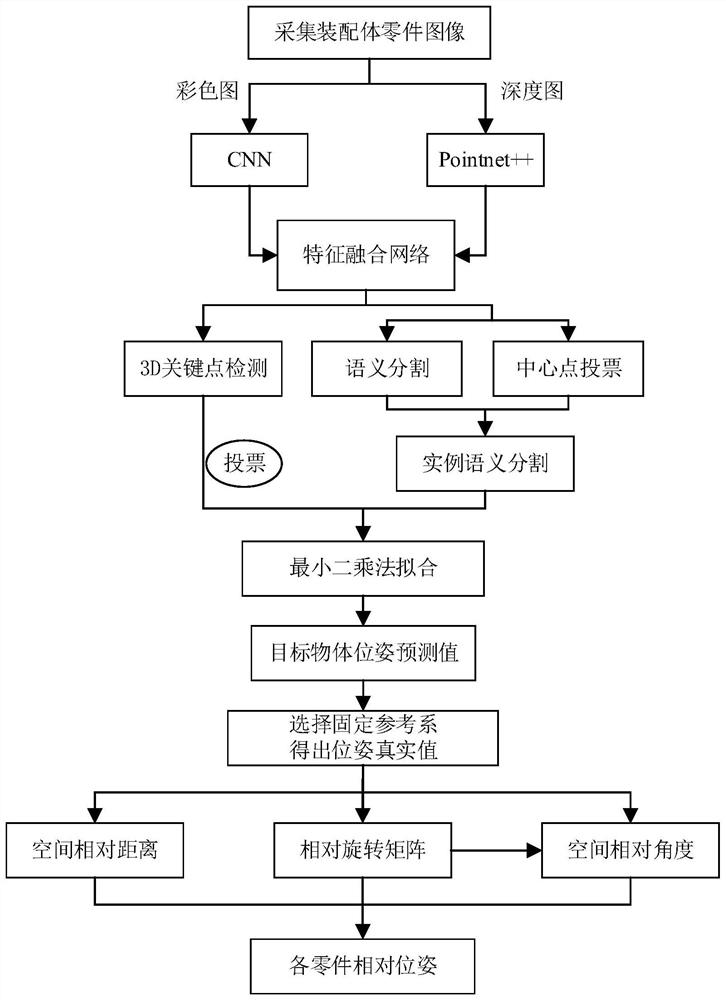

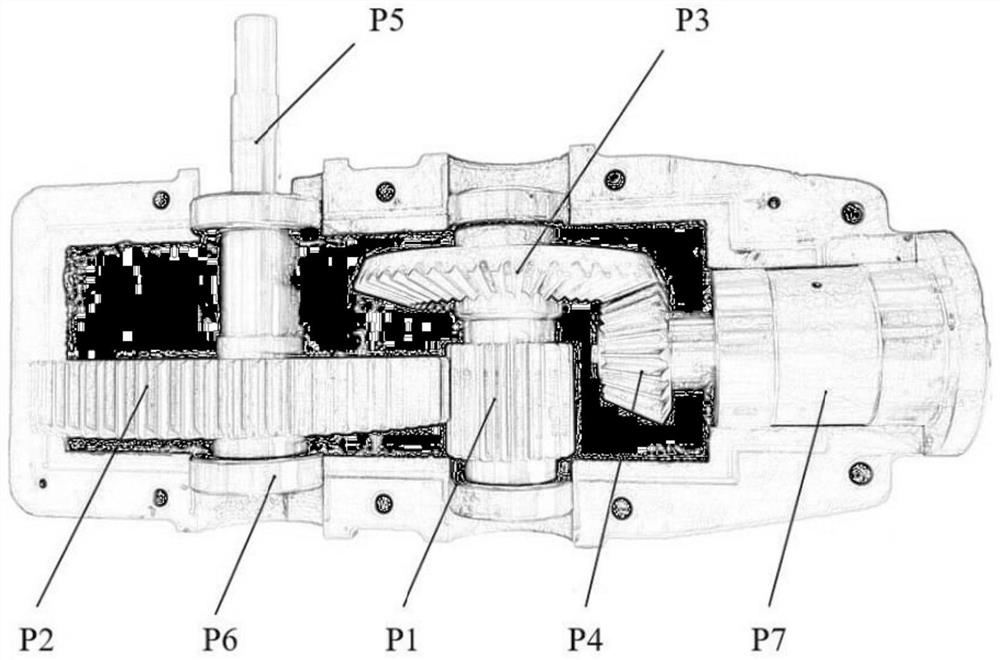

[0054] Further, referring to Figure 2, in this embodiment the images include a depth map and a color map, and scene registration is performed using the depth map and the color map. The gray value of each pixel in the depth map indicates the distance from the corresponding point in the scene to the camera, and so reflects the geometry of the visible object surfaces in the scene. From the group of depth images obtained by shooting, the scene is reconstructed through coordinate conversion with the PCL (Point Cloud Library) function library, yielding point cloud data of the scene objects. The scene contains multiple target objects; the point cloud is repeatedly cropped using MeshLab software to remove background information and clutter from the scene, and the 3D model of the assembly is then generated in the initial frame's coordinate system. The 3D model of the assembly includes the 3D model of each part; the 3D model of the part includes each The coordin...
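The coordinate conversion in [0054] is, at its core, pinhole back-projection of each depth pixel into 3D. The sketch below shows that step in NumPy rather than through PCL, and assumes hypothetical intrinsics `fx, fy, cx, cy`, depth stored in millimetres, and a known camera-to-initial-frame pose `(R, t)`. The axis-aligned `crop_box` is only a crude stand-in for the manual MeshLab cropping described in the source.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy, depth_scale=1000.0):
    """Back-project a depth image (H, W) into camera-frame points (M, 3).

    Pixels with depth 0 are treated as missing and dropped.
    depth_scale=1000 assumes millimetre depth values (converted to metres).
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column, v: row, both (H, W)
    z = depth.astype(float) / depth_scale
    valid = z > 0
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x[valid], y[valid], z[valid]], axis=1)


def to_world(points_cam, R, t):
    """Map camera-frame points into the initial-frame coordinate system: p_w = R p_c + t."""
    return points_cam @ np.asarray(R).T + np.asarray(t)


def crop_box(points, lo, hi):
    """Keep only points inside the axis-aligned box [lo, hi] (crude background removal)."""
    mask = np.all((points >= np.asarray(lo)) & (points <= np.asarray(hi)), axis=1)
    return points[mask]
```

Points from several depth images can be mapped into the initial frame with `to_world` and stacked with `np.vstack` before cropping.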

