Image description method based on intra-layer and inter-layer joint global representation

An image description technique based on global representation, applied in fields such as neural learning methods, instruments, and biological neural network models. It addresses the lack of explicit global feature modeling, which leads to missing objects and biased relationships in generated descriptions.


Embodiment Construction

[0058] The following embodiments will describe the technical solutions and beneficial effects of the present invention in detail in conjunction with the accompanying drawings.

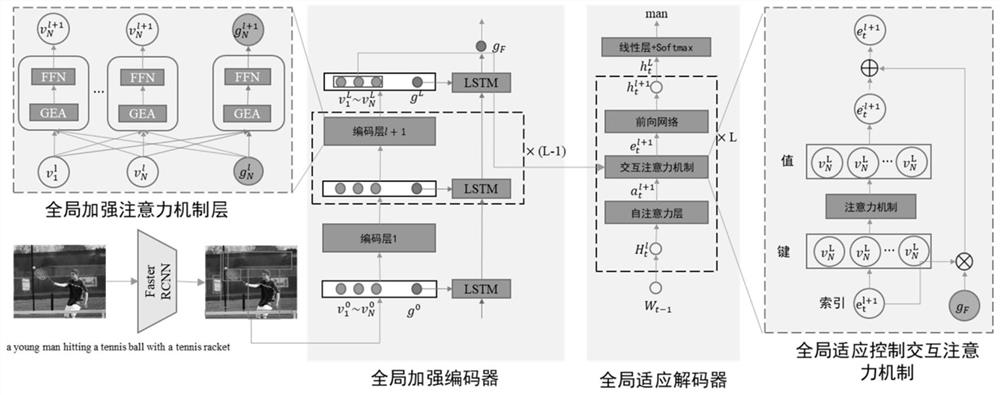

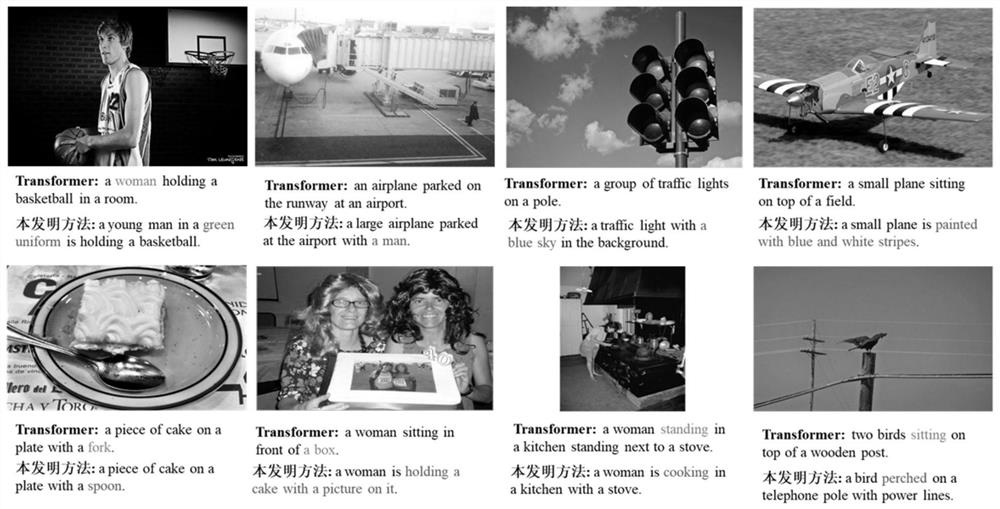

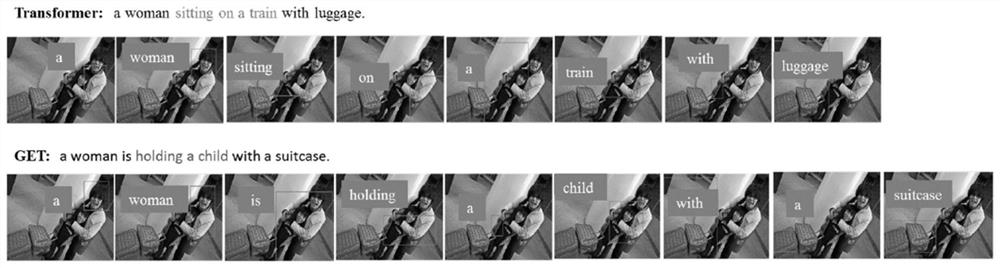

[0059] The purpose of the present invention is to solve the problem that traditional transformer-based image description methods do not explicitly model global features, which results in missing objects and biased relationships. It proposes to model a more comprehensive and instructive global feature that connects the different pieces of local information, thereby improving the accuracy of the generated descriptions, and it provides an image description method based on intra-layer and inter-layer joint global representation. The specific method flow is shown in Figure 1.

[0060] Embodiments of the present invention include the following steps:

[0061] 1) For each image in the image library, first use a convolutional neural network to extract the corresponding image features;

[0062] 2) Sen...
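
Step 1) above can be illustrated with a minimal, dependency-free sketch. The source does not name a network architecture or a feature layout, so everything here is an assumption made for illustration: a single convolution layer with random (untrained) filters stands in for the convolutional neural network, and the hypothetical helpers `conv2d_relu` and `extract_grid_features` return one local feature vector per spatial position. A practical system would more likely use a pretrained network.

```python
import random


def conv2d_relu(image, kernel):
    """Valid 2-D cross-correlation of a single-channel image, followed by ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = sum(image[i + a][j + b] * kernel[a][b]
                    for a in range(kh) for b in range(kw))
            row.append(max(0.0, s))  # ReLU keeps only positive responses
        out.append(row)
    return out


def extract_grid_features(image, kernels):
    """Return one feature vector per spatial position.

    Each vector has one entry per kernel (hypothetical layout, not from the source).
    """
    maps = [conv2d_relu(image, k) for k in kernels]
    h, w = len(maps[0]), len(maps[0][0])
    return [[m[i][j] for m in maps] for i in range(h) for j in range(w)]


# Toy input (assumed for illustration): a 6x6 grayscale image and four 3x3 filters.
rng = random.Random(0)
image = [[rng.random() for _ in range(6)] for _ in range(6)]
kernels = [[[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
           for _ in range(4)]

features = extract_grid_features(image, kernels)
print(len(features), len(features[0]))  # 16 local feature vectors of dimension 4
```

The resulting list of local feature vectors is the kind of input on which a global feature could later be modeled; how the invention does so is described in the steps that follow and is not reproduced here.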
