Content caching method based on deep learning

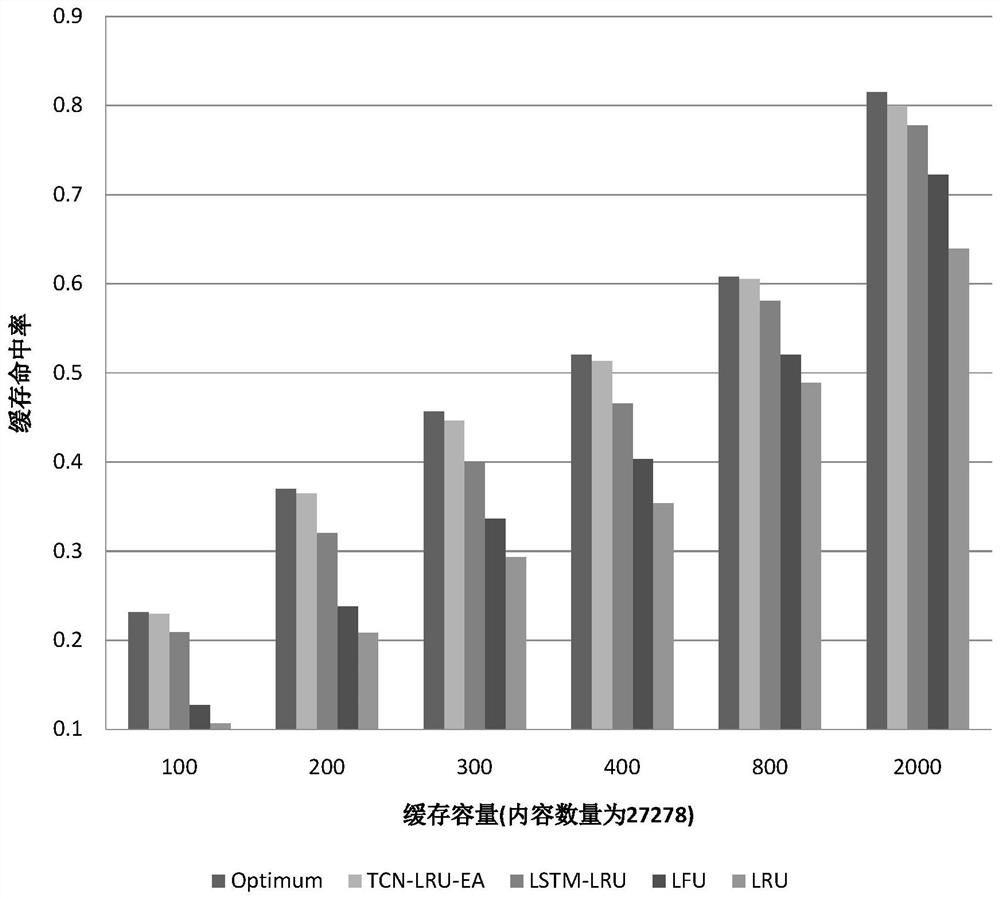

A deep-learning-based content caching technology, applied in the field of content caching. It addresses inaccurate model prediction of content popularity, low cache space utilization, and low cache hit rate. The stated effects are a good hit rate and good prediction accuracy.


Embodiment Construction

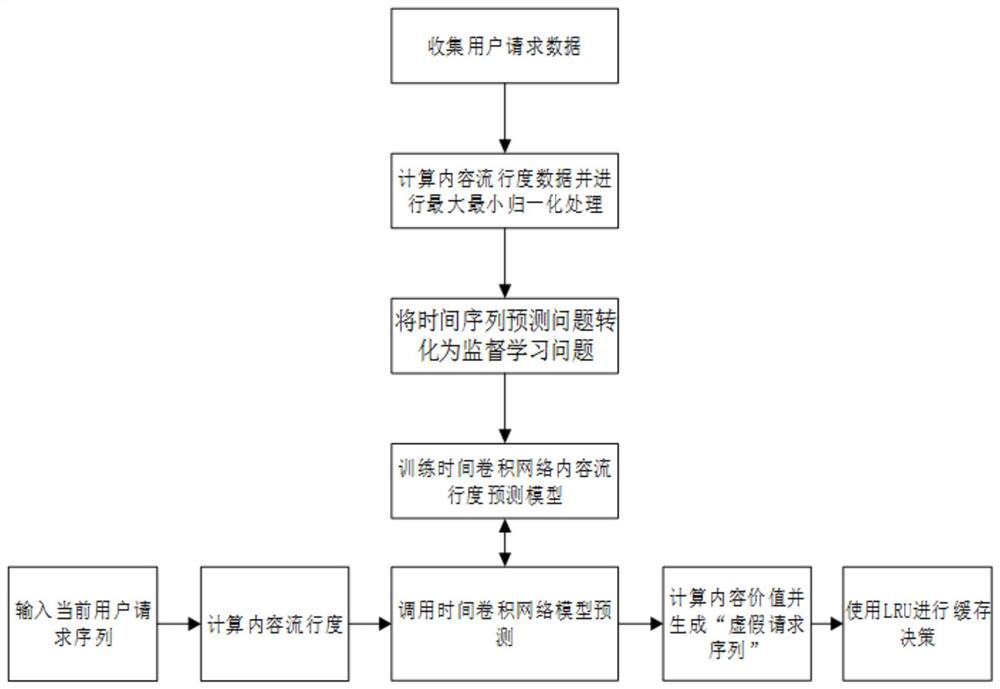

[0032] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0033] Referring to Figure 1, the deep-learning-based high-speed content caching method of this embodiment comprises the following specific steps:

[0034] Step 1. Collect the user request information of the edge nodes in an area. Each record mainly includes the ID of the requested content, the ID of the user, and the timestamp of the request. Sort the records by timestamp to construct a time series.
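As an illustration of step 1, the following is a minimal sketch, not the patent's implementation. The `Request` record and the `build_request_sequence` helper are hypothetical names introduced here; the only behavior taken from the text is that each record carries a content ID, a user ID, and a timestamp, and that records are ordered by timestamp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """One user request seen at an edge node (hypothetical record layout)."""
    content_id: str
    user_id: str
    timestamp: float


def build_request_sequence(requests):
    """Return the requests ordered by timestamp; ties keep their input order."""
    return sorted(requests, key=lambda r: r.timestamp)


# Made-up sample records, for illustration only.
raw = [
    Request("c2", "u1", 30.0),
    Request("c1", "u2", 10.0),
    Request("c1", "u1", 20.0),
]
sequence = build_request_sequence(raw)
content_ids = [r.content_id for r in sequence]
```

Here `content_ids` is the time-ordered content request sequence that a later windowing step could consume.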

[0035] Step 2. From the timestamp-sorted content request sequence collected in step 1, construct a probability sliding window with a fixed request length N. The sliding window advances with a time step of m. Let O_i(t) denote the number of occurrences of content C_i (i = 1, 2, 3, ..., n) in the probability window at the current moment t. The content popularity of C_i in the probability window at the current moment t is then defined as: ...
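The window construction in step 2 can be sketched as follows. This is an assumption-laden illustration: the `window_counts` helper is a hypothetical name, and the step m is assumed here to be measured in requests rather than in wall-clock time. Because the popularity definition is truncated in the text above, the sketch stops at the per-window occurrence counts O_i(t) and does not compute a popularity value.

```python
from collections import Counter


def window_counts(content_ids, N, m):
    """Yield (start_index, Counter) for each window of N requests, advancing by m.

    Assumption: m is a number of requests, not a duration.
    Only full windows of length N are produced.
    """
    if N <= 0 or m <= 0:
        raise ValueError("N and m must be positive")
    for start in range(0, len(content_ids) - N + 1, m):
        # Counter maps each content ID to its occurrence count in this window.
        yield start, Counter(content_ids[start:start + N])


# Made-up request sequence, for illustration only.
ids = ["c1", "c1", "c2", "c3", "c1", "c2"]
counts = list(window_counts(ids, N=4, m=2))
```

With N = 4 and m = 2, this sequence of six requests yields two full windows, starting at indices 0 and 2.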
