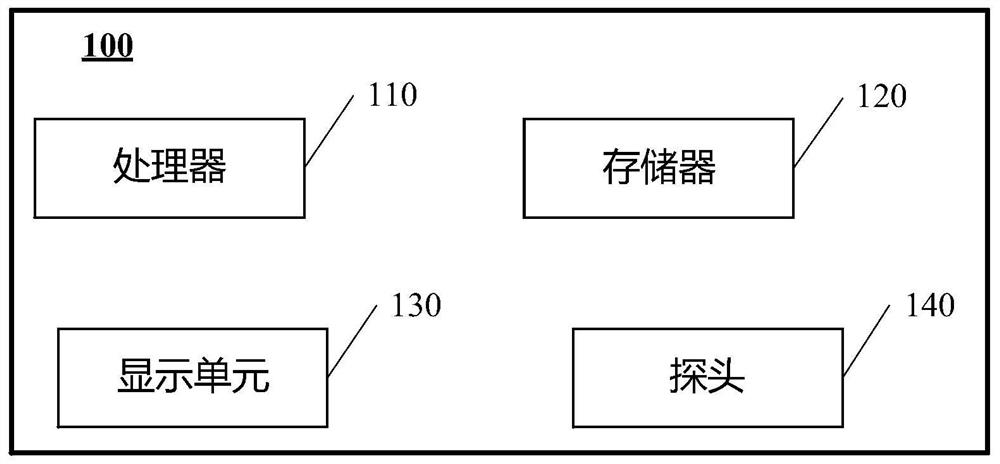

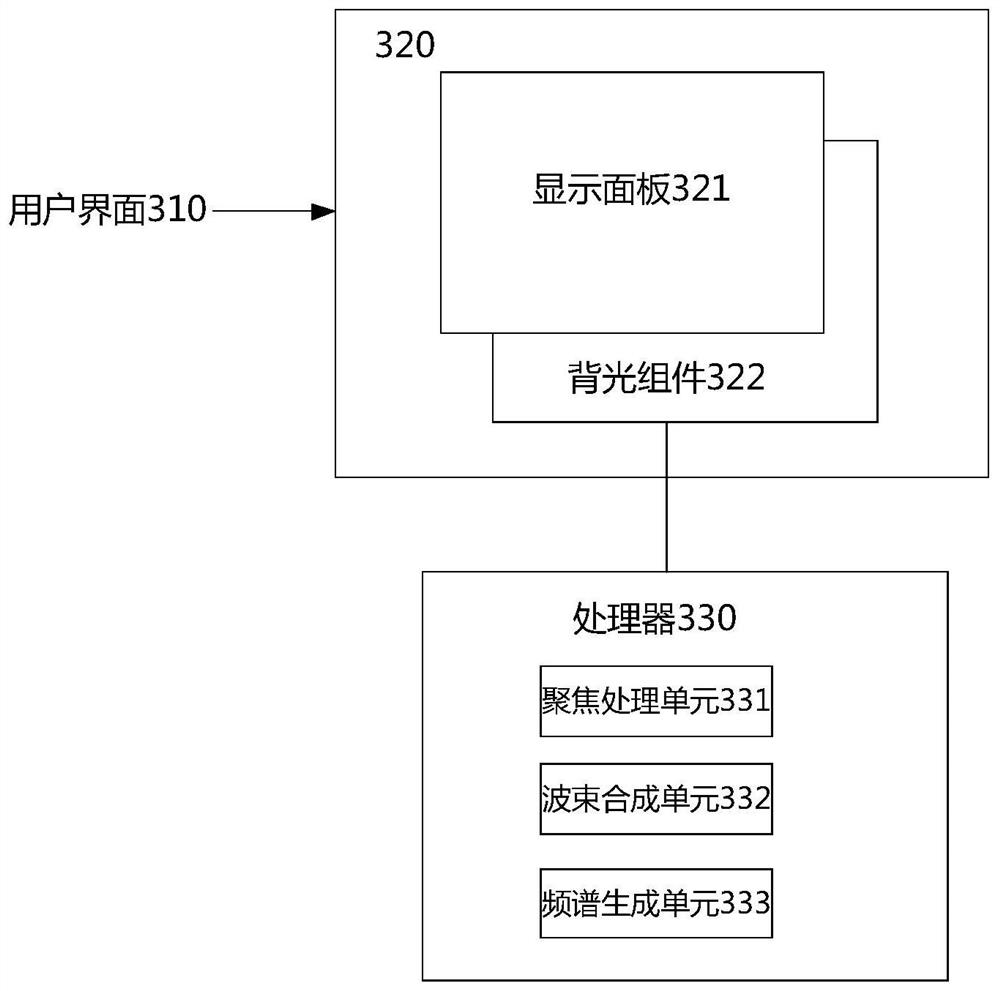

Position information processing method of ultrasonic puncture needle, ultrasonic equipment and storage medium

A position information processing method and puncture needle technology, applicable to puncture needles, trocars, and similar instruments. It addresses problems such as difficulties with ultrasonic beams, poor imaging of ultrasonic puncture needles, and unsuitability for non-linear probes, and it achieves low complexity, real-time detection, and assured detection accuracy.

Examples

Embodiment approach 1

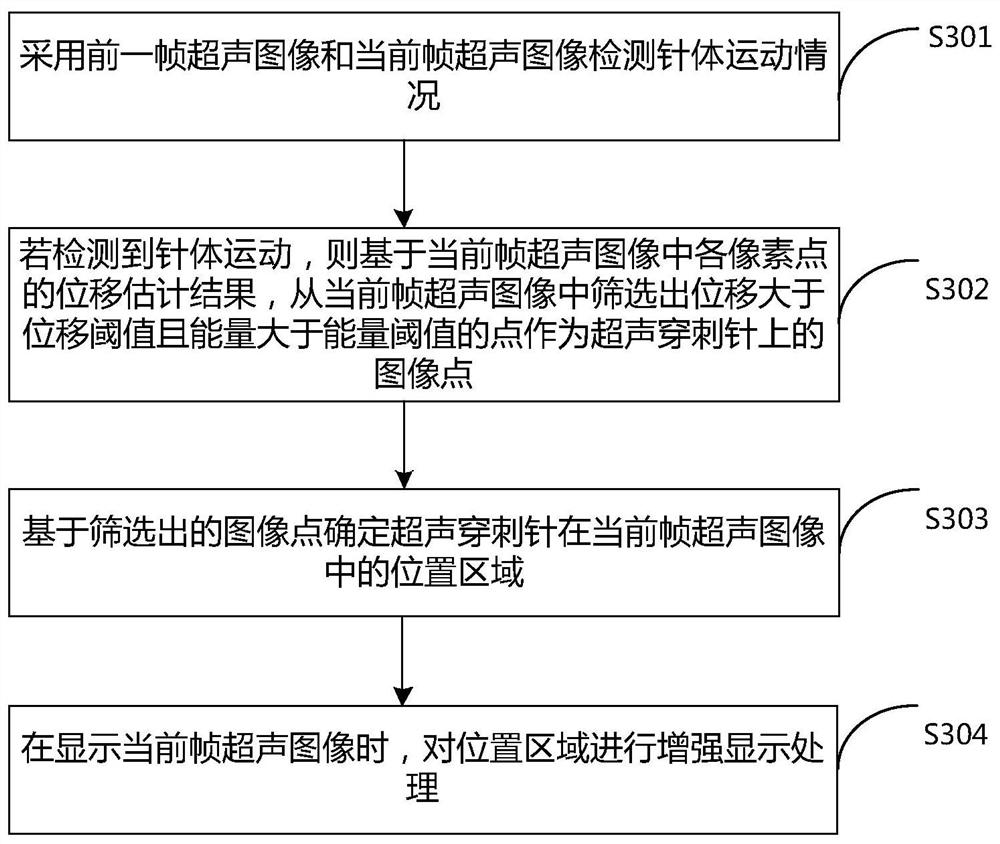

[0150] Perform displacement estimation on the previous frame and the current frame of the ultrasound image to obtain a displacement estimate for each pixel in the current frame, then determine the average of the per-pixel displacement estimates. If the displacement average is not greater than the first preset threshold, it is determined that needle movement is detected; otherwise, it is determined that no needle movement is detected.
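The decision rule in this paragraph can be illustrated with a minimal sketch. It assumes the per-pixel displacement estimates are already available as a 2-D list, and it averages their magnitudes, which is an assumption because the text does not say whether signed values or magnitudes are averaged. The function name `needle_motion_from_displacement` and the parameter `first_threshold` are hypothetical.

```python
def needle_motion_from_displacement(displacements, first_threshold):
    """Apply the paragraph's rule to a 2-D list of per-pixel displacement estimates.

    Returns (detected, mean_displacement). Averaging magnitudes is an
    assumption; the source only speaks of a "displacement average".
    """
    flat = [abs(d) for row in displacements for d in row]
    mean_displacement = sum(flat) / len(flat)
    # Rule as stated in the text: detected when the average is NOT greater
    # than the first preset threshold.
    return mean_displacement <= first_threshold, mean_displacement


detected, mean_disp = needle_motion_from_displacement(
    [[0.0, 0.5], [1.0, 0.5]], first_threshold=1.0
)
print(detected, mean_disp)
```

The threshold value used here is arbitrary; in practice it would have to be chosen for the probe and imaging settings in use.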

[0151] Exemplarily, any one of a cross-correlation displacement estimation algorithm, a phase difference-based displacement estimation algorithm, and a zero-phase displacement estimation algorithm is used to perform displacement estimation on the previous frame of ultrasound image and the current frame of ultrasound image.

[0152] For example, using the cross-correlation displacement estimation algorithm to estimate the displacement of the previous frame of ultrasound ...
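As a generic illustration of cross-correlation displacement estimation (not the patent's own formulation, which is not reproduced here), the sketch below finds the integer shift that best aligns a window of one scan line in the previous frame with the current frame, using normalized cross-correlation. The one-dimensional setting, the integer-only lag search, and the names `ncc`, `estimate_lag`, `window`, and `max_lag` are all assumptions made for the example.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    # A constant window has zero variance; treat it as uncorrelated.
    return num / den if den > 0 else 0.0


def estimate_lag(prev_line, curr_line, start, window, max_lag):
    """Integer shift (in samples) of curr_line that best matches
    prev_line[start:start + window], searched over [-max_lag, max_lag]."""
    ref = prev_line[start:start + window]
    best_lag, best_score = 0, -2.0  # NCC is always >= -1
    for lag in range(-max_lag, max_lag + 1):
        lo = start + lag
        if lo < 0 or lo + window > len(curr_line):
            continue  # candidate window would fall outside the line
        score = ncc(ref, curr_line[lo:lo + window])
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


prev_line = [0, 0, 0, 1, 3, 5, 2, 0, 0, 0, 0, 0]
curr_line = [0, 0, 0, 0, 0, 1, 3, 5, 2, 0, 0, 0]  # same echo, two samples deeper
print(estimate_lag(prev_line, curr_line, start=2, window=6, max_lag=2))
```

Repeating the search for a window around every pixel would give the per-pixel displacement estimates that the averaging step consumes; sub-sample interpolation of the correlation peak is omitted to keep the sketch short.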

Embodiment approach 2

[0181] Determine the energy difference between the previous frame and the current frame of the ultrasound image, then determine the average of the absolute values of the per-pixel energy differences. If the energy average is not greater than the second preset threshold, it is determined that needle movement is detected; otherwise, it is determined that no needle movement is detected.

[0182] Exemplarily, the energy value of each image point in the ultrasonic image of the previous frame and the ultrasonic image of the current frame can be calculated by the following energy formula (9):

[0183] Power=sqrt(I*I+Q*Q) (9)

[0184] Here, Power is the energy value of the image point, I is the in-phase (I) component of the IQ data, and Q is the quadrature (Q) component of the IQ data. The IQ data are taken from the ultrasound image that contains the image point.

[0185] After determining the energy value of each image point in the ultrasonic im...
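Formula (9) and the averaging of absolute energy differences from paragraph [0181] can be sketched in plain Python as follows. The data layout (each frame given as a pair of equally sized 2-D lists, one for I and one for Q) and the function names `energy_map` and `mean_abs_energy_difference` are assumptions made for the example.

```python
import math


def energy_map(i_data, q_data):
    """Per-point energy value from formula (9): sqrt(I*I + Q*Q)."""
    return [
        [math.hypot(i, q) for i, q in zip(row_i, row_q)]
        for row_i, row_q in zip(i_data, q_data)
    ]


def mean_abs_energy_difference(prev_iq, curr_iq):
    """Average of |energy(curr) - energy(prev)| over all image points.

    Each argument is an (I, Q) pair of 2-D lists with identical shapes.
    """
    prev_energy = energy_map(*prev_iq)
    curr_energy = energy_map(*curr_iq)
    diffs = [
        abs(c - p)
        for row_p, row_c in zip(prev_energy, curr_energy)
        for p, c in zip(row_p, row_c)
    ]
    return sum(diffs) / len(diffs)


prev_iq = ([[3.0, 0.0]], [[4.0, 0.0]])  # energies: 5.0 and 0.0
curr_iq = ([[6.0, 0.0]], [[8.0, 1.0]])  # energies: 10.0 and 1.0
print(mean_abs_energy_difference(prev_iq, curr_iq))  # (5.0 + 1.0) / 2 = 3.0
```

The resulting average is the quantity compared against the second preset threshold.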

Embodiment approach 3

[0188] Perform displacement estimation on the previous frame of ultrasound image and the current frame of ultrasound image, obtain the displacement estimation result of each pixel in the current frame of ultrasound image, and determine the displacement average value of the displacement estimation result of each pixel;

[0189] Determine the energy difference between the previous frame of ultrasound image and the current frame of ultrasound image, and determine the energy average value of the absolute value of the energy difference of each pixel;

[0190] If the displacement average value is not greater than the first preset threshold and the energy average value is not greater than the second preset threshold, it is determined that needle movement is detected; otherwise, it is determined that no needle movement is detected.

[0191] For the specific implementation of the above steps, reference may be made to Embodiment 1 of the needle motion detection and Embodiment 2 of th...
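The combined condition of paragraph [0190] reduces to a logical AND of the two threshold tests. The sketch below assumes both averages have already been computed; the function and parameter names are hypothetical.

```python
def needle_motion_detected(mean_displacement, mean_energy_diff,
                           first_threshold, second_threshold):
    """Combined rule: both averages must be NOT greater than their
    respective preset thresholds for needle movement to be reported."""
    return (mean_displacement <= first_threshold
            and mean_energy_diff <= second_threshold)


# Both averages within their thresholds: movement is reported.
print(needle_motion_detected(0.5, 3.0, first_threshold=1.0, second_threshold=4.0))
# Energy average exceeds the second threshold: no movement is reported.
print(needle_motion_detected(0.5, 5.0, first_threshold=1.0, second_threshold=4.0))
```

Requiring both conditions makes this variant stricter than either single-criterion embodiment, since one failed test is enough to report no needle movement.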
